Hackernoon

Image Credit: Hackernoon

Understanding GAN Mode Collapse: Causes and Solutions

- Generative Adversarial Networks (GANs) are a type of deep learning model that has gained a lot of attention in recent years due to their ability to generate realistic images, videos, and other types of data.

- One of the most significant challenges GANs face is mode collapse, where a GAN generates only a limited set of output examples instead of exploring the entire distribution of the training data.

- There are several causes of mode collapse in GANs, including catastrophic forgetting and discriminator overfitting, both of which lead to the generator getting stuck in a particular mode or pattern.

- Catastrophic forgetting is the phenomenon in which a model trained on one task loses the knowledge it gained there when it is subsequently trained on a new task.

- Discriminator overfitting causes the generator's loss signal to vanish: multiple flat regions emerge, and the diversity of generated samples decreases as a result.

- To prevent catastrophic forgetting, the model must be trained on multiple tasks simultaneously; to prevent mode collapse, the local maxima of the discriminator should be wide rather than sharp.
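One way to see why a vanishing generator loss stalls training is to look at the gradient the generator receives when the discriminator is very confident. The sketch below is not taken from the article: it assumes the classic saturating generator objective log(1 - D(G(z))) with a sigmoid discriminator output, and the function names are hypothetical. It shows the gradient shrinking toward zero as the discriminator's logit for a fake sample becomes strongly negative, which is one form a "flat region" can take.

```python
import numpy as np

def sigmoid(a):
    """Logistic function mapping a logit to a probability."""
    return 1.0 / (1.0 + np.exp(-a))

def saturating_generator_grad(logit):
    """Gradient of log(1 - sigmoid(logit)) with respect to the logit.

    Assumption: the generator minimises the saturating loss
    log(1 - D(G(z))), where D = sigmoid(logit). The derivative
    simplifies to -sigmoid(logit).
    """
    return -sigmoid(logit)

# Discriminator logits for a fake sample, from undecided (0)
# to very confident that the sample is fake (-10).
logits = np.array([0.0, -2.0, -5.0, -10.0])
grads = saturating_generator_grad(logits)

for a, g in zip(logits, grads):
    print(f"logit={a:6.1f}  D(G(z))={sigmoid(a):.5f}  grad={g:.5f}")
```

The more confidently the discriminator rejects a sample, the smaller the gradient magnitude becomes, so the generator gets little information about which direction to move in.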

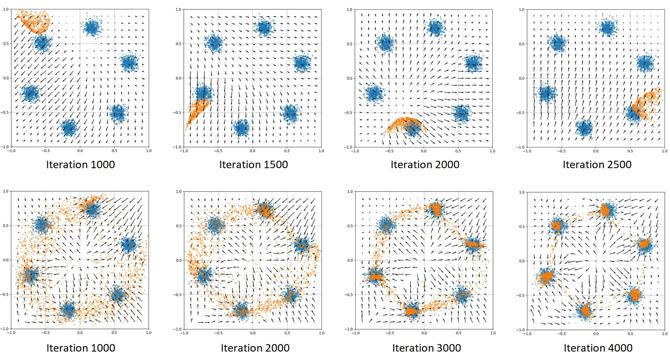

- The visualization of the discriminator surface shows the generator producing similar outputs, which indicates mode collapse, while the discriminator's scores for the same images change from one training step to the next.

- GANs demonstrate the same catastrophic forgetting tendencies when trained on symmetric 2D datasets, as displayed in the visualizations of the surface of the discriminator.

- To avoid discriminator overfitting and promote diversity in generated samples, local maxima in various regions of the data space should have a wide shape, and the generator should be trained on less specific targets.

- By understanding the causes of mode collapse, we can develop GANs that generate diverse, high-quality outputs.
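Mode collapse on a 2D dataset can be quantified by counting how many modes of the data actually receive generated samples. The following is a minimal sketch under stated assumptions: the ring-of-Gaussians layout, the `ring_centers` and `mode_coverage` helpers, and the distance tolerance are all hypothetical choices for illustration, and the "generated" samples are simulated with NumPy rather than produced by a trained GAN.

```python
import numpy as np

def ring_centers(n_modes=8, radius=2.0):
    """Centers of n_modes Gaussians placed evenly on a circle (assumed layout)."""
    angles = 2 * np.pi * np.arange(n_modes) / n_modes
    return np.stack([radius * np.cos(angles), radius * np.sin(angles)], axis=1)

def mode_coverage(samples, centers, tol=0.5):
    """Count the modes that have at least one sample within tol of their center."""
    # Pairwise distances, shape (n_samples, n_modes).
    dists = np.linalg.norm(samples[:, None, :] - centers[None, :, :], axis=2)
    nearest = dists.argmin(axis=1)
    close = dists.min(axis=1) < tol
    return len(np.unique(nearest[close]))

rng = np.random.default_rng(0)
centers = ring_centers()

# Simulated "healthy" generator: samples spread over all eight modes.
diverse = centers[rng.integers(0, 8, size=2000)] + 0.05 * rng.normal(size=(2000, 2))

# Simulated collapsed generator: every sample sits near the first mode.
collapsed = centers[np.zeros(2000, dtype=int)] + 0.05 * rng.normal(size=(2000, 2))

print("modes covered (diverse):  ", mode_coverage(diverse, centers))
print("modes covered (collapsed):", mode_coverage(collapsed, centers))
```

Tracking a count like this during training gives a simple signal for when a generator starts concentrating on a few modes.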
