Medium

21h

122

Image Credit: Medium

Understanding Positional Encoding in Transformer and Large Language Models

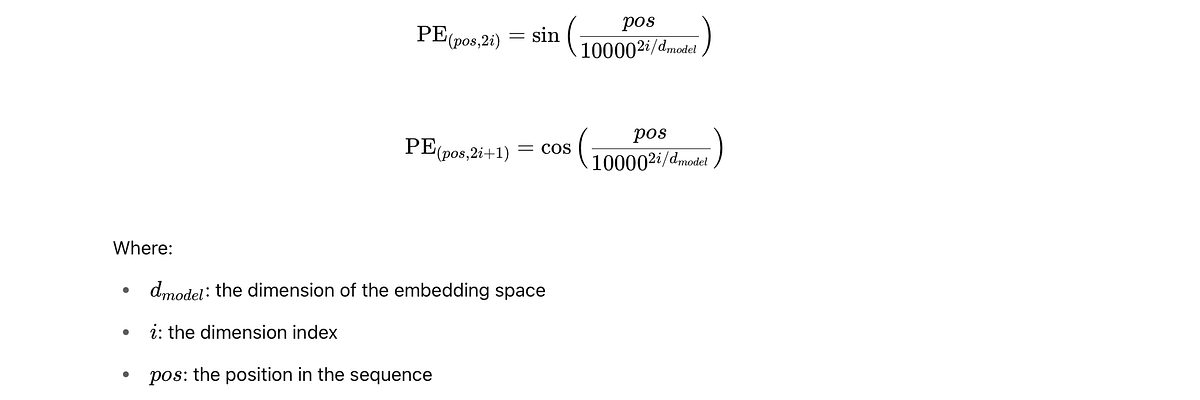

- Traditional models like RNNs and LSTMs process data sequentially, while Transformers use self-attention mechanisms to process all tokens simultaneously, requiring positional encoding to maintain positional information.

- Different positional encoding schemes exist, including sinusoidal encoding, trainable embeddings, relative positional encoding, and Rotary Positional Embedding (RoPE), each with unique benefits for model performance and generalization.

- Sinusoidal encoding allows models to attend based on relative positions, while learned embeddings and relative positional encoding focus on learning relative distances between tokens for improved natural language understanding.

- New positional encoding methods are continuously being explored to enhance LLM performance, interpretability, and scalability, playing a crucial role in advancing the next generation of language technologies.

Read Full Article

7 Likes

For uninterrupted reading, download the app