Medium

What the “Model” in LLM Really Means — Explained Simply

- LLM stands for Large Language Model.

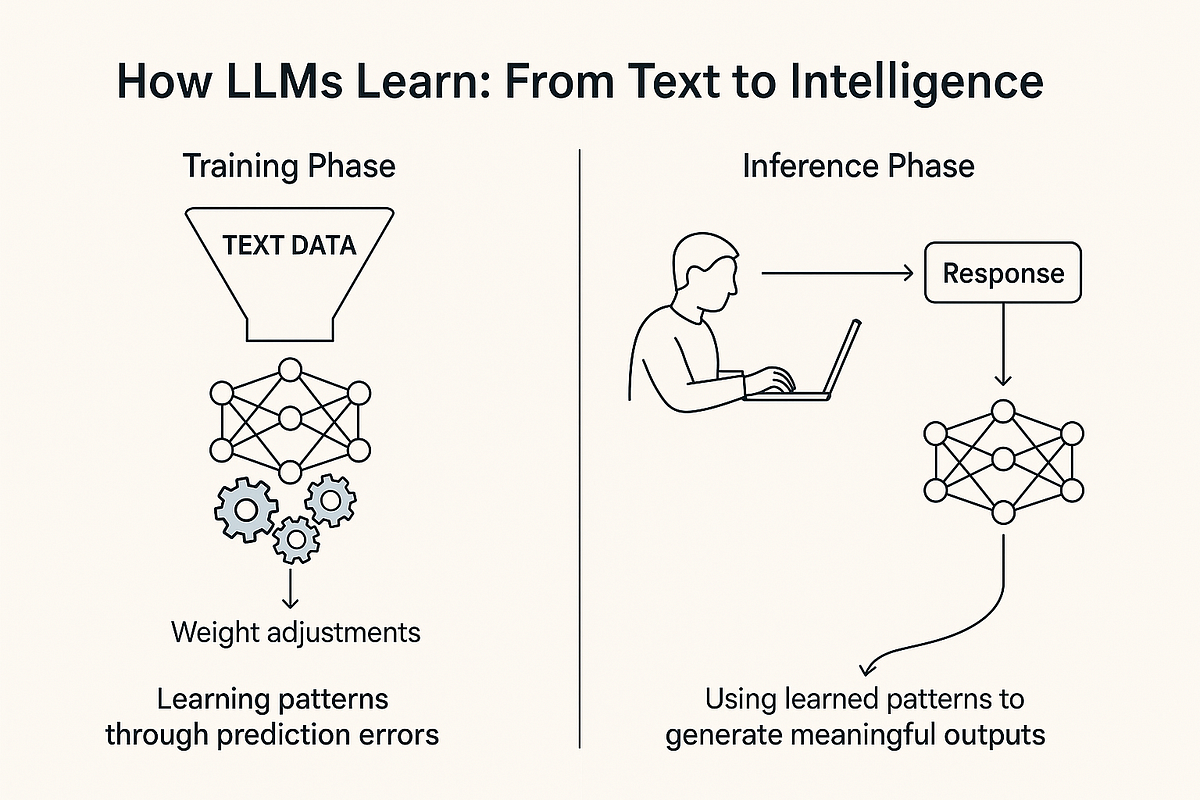

- The term 'model' in LLM refers to a learned statistical representation of text, which is used to predict the next word.

- The model is essentially a trained mathematical function, usually a neural network, that predicts likely text sequences.

- It learns patterns from massive datasets like Wikipedia, books, and articles during training.

- LLMs predict the next word based on statistical pattern recognition but do not have human-like understanding or reasoning.

- At its core, an LLM does one thing during both training and inference: predict the next token given the tokens that came before it.

- Emergent behaviors like summarization, translation, and reasoning are by-products of LLMs' ability to predict text in context.

- LLMs excel in detecting and generalizing patterns in language such as grammar, tone, and reasoning structures.

- The 'model' aspect of LLMs comes from learning statistical relationships between tokens through adjusting weights in neural networks.

- Despite mimicking reasoning patterns, LLMs do not comprehend text like humans; they predict based on probability.

- Ingesting text is not the same as learning from it: the model's capabilities come from adjusting its weights to capture statistical patterns, not from simply reading or storing the text.
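
To make "predicting the next token from learned statistical patterns" concrete, here is a minimal sketch in Python. It is a toy bigram counter, not a neural network and not how any real LLM is implemented: the corpus, the function names (`train_bigram`, `next_token_probs`, `generate`), and the whitespace tokenization are all assumptions made for illustration. The idea it shares with the points above is that "training" turns text into numbers (here, counts rather than weights), and "inference" uses those numbers to pick a likely next token.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """'Training': count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()  # naive whitespace tokenizer (assumption)
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into a probability distribution."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # token never seen with a successor during training
    return {tok: n / total for tok, n in followers.items()}

def generate(counts, start, max_tokens=5):
    """'Inference': greedily append the most probable next token, one at a time."""
    out = [start]
    for _ in range(max_tokens):
        probs = next_token_probs(counts, out[-1])
        if not probs:
            break
        out.append(max(probs, key=probs.get))
    return out

# Hypothetical three-sentence corpus standing in for "massive datasets".
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
counts = train_bigram(corpus)
probs = next_token_probs(counts, "the")
print(probs)                            # "cat" gets the highest probability after "the"
print(" ".join(generate(counts, "the")))
```

The toy model never stores the sentences themselves, only the statistics of which token follows which. A real LLM replaces the count table with a neural network whose weights are adjusted during training, and conditions on far more context than a single previous token.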
