Medium

Image Credit: Medium

WildeWeb: Safety Improvement PoC using Continual Pretraining

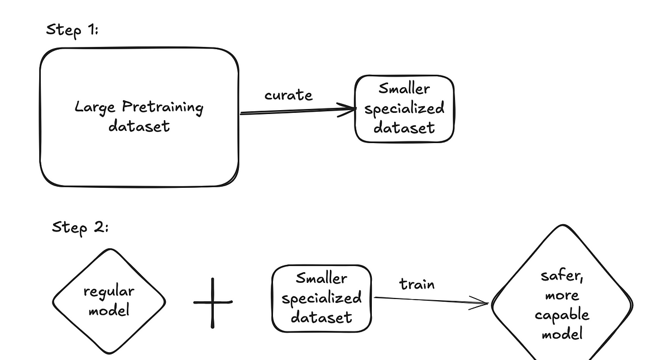

- A proof of concept (PoC) built on the FineWeb-Edu project tests whether soft-skills datasets can improve model safety

- The soft skills in focus are empathy, critical thinking, and intercultural fluency, a combination meant to help models consider the consequences of unsafe responses

- The PoC was carried out on about 1M documents from FineWeb-Edu, using torchtune to train a larger model, Llama-3.1-70B, along with Llama 3.3 70B

- The resulting dataset contains 38.3k rows, each with scores and a justification, amounting to about 61M tokens of data

- The resulting model was evaluated with safety benchmarking, a process that involves generating completions to partially written sentences with the help of LLMs. In this case, SALAD-Bench was used instead of Perspective API

- The benchmark dataset consisted of questions asking for advice on illegal, unsafe, or unethical actions. The safety classification score improved slightly, from 0.57 to 0.609, with the Wildeweb-CPT model

- The results showed a small jump in social awareness, which is good to see as it follows intuition; there was also a small but noticeable drop in college_mathematics and formal_logic

- The author intends to improve the scoring prompt using human annotations, filter out promotional texts, and run the full dataset on smaller models to see the impact

- The findings suggest that soft-skills datasets can improve model safety, and they are available to anyone interested in making language models safe for use.
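
To put the reported figures in perspective, here is a minimal sketch that turns them into an absolute gain, a relative gain, and an average document length. It assumes the rounded values quoted above (38.3k rows, about 61M tokens) are close enough to the true counts; the function and variable names are illustrative and do not come from the article.

```python
# Figures quoted in the summary; the row and token counts are rounded
# approximations, so the derived values are approximate too.
baseline_score = 0.57    # safety classification score before
cpt_score = 0.609        # score with the Wildeweb-CPT model
rows = 38_300            # "38.3k rows"
tokens = 61_000_000      # "about 61M tokens"


def summarize(baseline, improved, n_rows, n_tokens):
    """Return absolute gain, relative gain in percent, and mean tokens per row."""
    absolute_gain = improved - baseline
    relative_gain_pct = 100 * absolute_gain / baseline
    tokens_per_row = n_tokens / n_rows
    return absolute_gain, relative_gain_pct, tokens_per_row


abs_gain, rel_gain, per_row = summarize(baseline_score, cpt_score, rows, tokens)
print(f"absolute gain: {abs_gain:.3f}")    # 0.039
print(f"relative gain: {rel_gain:.1f}%")   # 6.8%
print(f"tokens per row: {per_row:.0f}")    # 1593
```

Read this way, the safety score rose by roughly 0.04 points (under 7% relative to the baseline), which matches the summary's description of a slight improvement.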
