Data Science News

Appsilon

237

Image Credit: Appsilon

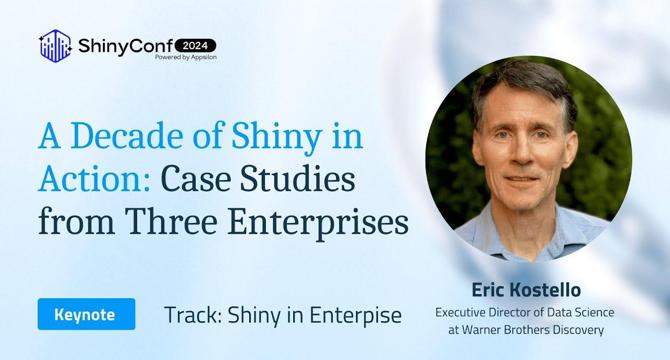

ShinyConf24 – Keynote: Decade of Shiny in Action: Case Studies from Three Enterprises

- Eric Kostello, Executive Director of Data Science at Warner Brothers Discovery, highlighted the significant role Shiny has played in enterprise projects over the last decade.

- Shiny evolved to provide interactive user interfaces for exploring data pathologies and making judgments about new and existing data, helping both technical and non-technical stakeholders put data insights to use.

- Before Shiny, integrating statistical computing with graphical user interfaces was challenging, but Shiny's framework made data science more accessible to a broader audience.

- Over time, Shiny addressed its initial limitations by introducing event-driven programming and reactive graph evaluation, making data processing more efficient.

- Shiny has been instrumental in various case studies, such as transitioning to online surveys, facilitating TV ratings data analysis, and enabling multi-scale applications for improved efficiency and decision-making.

- Key insights from the keynote included democratizing data science, focusing on minimum viable interactivity, emphasizing enterprise-class solutions, and choosing the right tool for the job.

- Challenges to Shiny's adoption include organizational resistance and competition from established tools, but power users who showcase Shiny's capabilities can help overcome these barriers.

- As a critical tool in enterprise data science, Shiny offers powerful analytics capabilities, rapid prototyping, and the flexibility to deliver real-time insights for decision-making.

- The flexibility of Shiny allows organizations to build interactive applications that drive informed decision-making, making it a practical solution for addressing analytical needs in complex data landscapes.

- ShinyConf25 promises further insights into Shiny's role in enterprise settings, showcasing its growth and adaptability to meet the evolving demands of users in data analysis and decision support systems.
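The reactive graph evaluation mentioned in the keynote summary can be illustrated with a toy dependency graph. This is a minimal sketch of the general idea (automatic dependency tracking, invalidation, and lazy recomputation), not Shiny's actual implementation or API; the `Reactive` class and its `get`/`set` methods are hypothetical names chosen for illustration.

```python
from typing import Any, Callable, List, Optional, Set

# Stack of derived nodes currently being computed, used to record dependencies automatically
_computing: List["Reactive"] = []


class Reactive:
    """Toy reactive node: a settable source value, or a derived value computed from other nodes."""

    def __init__(self, compute: Optional[Callable[[], Any]] = None, value: Any = None):
        self._compute = compute
        self._value = value
        self._stale = compute is not None   # derived nodes start out needing computation
        self._dependents: Set["Reactive"] = set()
        self.runs = 0                       # number of times the computation actually executed

    def get(self) -> Any:
        # Whichever node is being computed right now depends on this one
        if _computing:
            self._dependents.add(_computing[-1])
        # Recompute lazily, only if an upstream change has invalidated the cached value
        if self._stale:
            _computing.append(self)
            try:
                self._value = self._compute()
            finally:
                _computing.pop()
            self._stale = False
            self.runs += 1
        return self._value

    def set(self, value: Any) -> None:
        if self._compute is not None:
            raise ValueError("derived nodes cannot be set directly")
        self._value = value
        self._invalidate_dependents()

    def _invalidate_dependents(self) -> None:
        # Mark everything downstream as stale; nothing recomputes until it is read again
        for node in self._dependents:
            if not node._stale:
                node._stale = True
                node._invalidate_dependents()


# Usage: a source input, a filtered dataset, and a summary that depends on it
n = Reactive(value=10)
filtered = Reactive(lambda: [i for i in range(n.get()) if i % 2 == 0])
summary = Reactive(lambda: sum(filtered.get()))

print(summary.get())  # 20 (computes filtered, then summary)
print(summary.get())  # 20 (cached: nothing recomputes)
n.set(5)              # invalidates filtered and summary
print(summary.get())  # 6 (recomputes both with the new input)
```

The design choice worth noting is laziness: changing an input only marks downstream nodes as stale, and work happens when an output is actually read. Real frameworks also handle dynamic dependencies, observers that push updates to the UI, and error propagation, which this sketch omits.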

Analyticsindiamag

Tech Mahindra Integrates IndusQ with Qualcomm AI Hub for Enterprise AI

- Tech Mahindra has integrated its proprietary AI model, IndusQ LLM, with Qualcomm AI Hub.

- The partnership aims to enhance on-device AI capabilities for enterprises, allowing real-time insights, automation, and edge computing.

- IndusQ LLM incorporates Hindi and its 37 dialects, enabling businesses to overcome language barriers and expand into multilingual markets.

- The integration makes AI-driven solutions more inclusive, expanding Qualcomm Technologies' AI offerings and enabling next-gen solutions.

Medium

Quantum Computing: The Dawn of a New Technological Era That Will Reshape Our Future

- Quantum computing is a revolutionary leap that operates using quantum bits, or qubits, and promises dramatic speedups on certain classes of problems.

- Superposition and entanglement are fundamental quantum principles: superposition lets qubits represent many possible states at once, and entanglement links the states of qubits so they cannot be described independently. These properties can let quantum computers outperform classical computers on certain problems.

- Quantum computers manipulate qubits with quantum gates and circuits, such as Hadamard and CNOT gates, according to the principles of quantum mechanics.

- Quantum computing holds promise for solving complex problems in cryptography, optimization, and AI that classical computers struggle with.

- Decoherence, error correction, and scalability are major challenges hindering large-scale quantum computing adoption.

- Quantum computing implications span industries like finance, healthcare, and materials science, offering faster optimization, improved drug discovery, and enhanced climate modeling.

- Hybrid quantum-classical systems are being developed to make use of today's limited quantum hardware, while post-quantum cryptography is being developed to mitigate quantum computing's security risks.

- Quantum computing is being explored for breakthroughs in AI, financial modeling, drug discovery, climate simulation, and renewable energy optimization.

- Progress in hardware stability, error correction, and quantum algorithms is essential for practical quantum applications across various industries.

- Investments in quantum education, workforce development, and equitable access are crucial for preparing for the quantum revolution's societal implications.
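The Hadamard and CNOT gates mentioned above can be simulated classically for a couple of qubits. The sketch below, assuming NumPy and a plain state-vector representation, prepares a Bell state: the Hadamard gate puts the first qubit into superposition, and CNOT entangles it with the second, so the two qubits are always measured with matching values.

```python
import numpy as np

# Hadamard gate: maps |0> to (|0> + |1>) / sqrt(2)
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
I = np.eye(2)

# CNOT with qubit 0 as control and qubit 1 as target; basis order |00>, |01>, |10>, |11>
CNOT = np.array([
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
])

# Start in |00>
state = np.array([1, 0, 0, 0], dtype=complex)

# Apply H to the first qubit only (tensor product with identity on the second), then CNOT
state = np.kron(H, I) @ state
state = CNOT @ state

# Measurement probabilities: only |00> and |11> remain, each with probability 0.5
probs = np.abs(state) ** 2
for label, p in zip(["00", "01", "10", "11"], probs):
    print(label, round(float(p), 3))
```

Note that the state vector doubles in size with every added qubit, which is why classical simulation stops being practical for large systems.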

Analyticsindiamag

ElevenLabs Unveils Scribe, a Speech-to-Text Transcription Model to Rival Otter, TurboScribe, and Others

- ElevenLabs has launched Scribe, a speech-to-text model that the company says offers high accuracy.

- Scribe transcribes speech in 99 languages with word-level timestamps, speaker diarisation, and audio-event tagging.

- According to ElevenLabs' own testing, Scribe outperformed models such as Gemini 2.0 Flash and Deepgram Nova-3.

- Scribe is available through the ElevenLabs dashboard, priced at $0.40 per hour of input audio.
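At the listed rate, estimating transcription costs is simple arithmetic. The helper below is a hypothetical sketch that assumes billing is strictly proportional to audio duration, with no minimums, rounding increments, or volume tiers; ElevenLabs' actual billing rules may differ.

```python
PRICE_PER_HOUR_USD = 0.40  # list price reported in the article


def transcription_cost(duration_minutes: float) -> float:
    """Estimated USD cost, ASSUMING purely pro-rata billing per hour of input audio."""
    return round(duration_minutes / 60 * PRICE_PER_HOUR_USD, 2)


print(transcription_cost(90))  # 0.6 (a 90-minute recording)
```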

Medium

The Backbone of Modern Tech + 80% Scholarship Opportunity

- Data Engineers are in high demand as they build, manage, and optimize data pipelines.

- Data Engineering focuses on collecting and processing large datasets, ensuring data quality and reliability.

- The field of Data Engineering is experiencing significant job growth and offers attractive salaries.

- To become a Data Engineer, one should master Python, SQL, cloud technologies, and big data tools.
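As a rough illustration of what building a pipeline and ensuring data quality mean in practice, here is a minimal extract-transform-load sketch using only Python's standard library (`csv` and `sqlite3`). The input data, table name, and validation rules are hypothetical; production pipelines typically run on orchestration, cloud, and big data tools instead.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; in practice this would come from files, APIs, or message queues
RAW_CSV = """order_id,amount,country
1,19.99,IN
2,,US
3,42.50,in
3,42.50,in
4,-5.00,UK
"""


def extract(text):
    """Parse CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Validate and normalize rows, rejecting duplicates and records that fail quality checks."""
    seen, clean, rejected = set(), [], []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            rejected.append(row)  # missing or non-numeric amount
            continue
        if amount < 0 or row["order_id"] in seen:
            rejected.append(row)  # negative amount or duplicate order
            continue
        seen.add(row["order_id"])
        clean.append((int(row["order_id"]), amount, row["country"].upper()))
    return clean, rejected


def load(records, conn):
    """Write validated records to a SQL table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
clean, rejected = transform(extract(RAW_CSV))
load(clean, conn)
print(conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone())
print(len(rejected), "rows rejected")
```

Keeping rejected rows rather than silently dropping them is a common design choice: it lets the pipeline report data quality problems back to upstream owners.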

Medium

Deep Learning: The Revolution of Artificial Intelligence

- Deep learning has revolutionized artificial intelligence by leveraging complex neural networks to process and analyze data, enabling breakthroughs in various industries.

- Deep learning automates feature extraction and processing, enabling advancements in image recognition, speech processing, and decision-making systems.

- Artificial neurons in deep neural networks, loosely inspired by the human brain, let these networks recognize complex patterns and generalize knowledge across tasks.

- Advancements in computational power, data availability, and algorithmic optimization have propelled the widespread adoption of deep learning in the 21st century.

- Fundamental building blocks of deep learning include neural networks, activation functions, loss functions, optimization algorithms, backpropagation, and hyperparameters.

- Applications of deep learning span computer vision, natural language processing, healthcare, finance, and robotics, driving innovation and automation in diverse domains.

- Key types of deep learning architectures include feedforward neural networks, convolutional neural networks, recurrent neural networks, transformer networks, and generative adversarial networks.

- Activation functions like ReLU, Sigmoid, and Softmax introduce non-linearity into neural networks, influencing their learning and generalization capabilities.

- Loss functions such as Mean Squared Error and Cross-Entropy Loss quantify prediction errors, guiding the training process.

- Optimization algorithms like Stochastic Gradient Descent, Adam, and RMSprop adjust network parameters to minimize loss and enhance model performance.
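The building blocks listed above fit in a few lines of NumPy. This sketch, assuming NumPy, implements the named activation functions, the two loss functions, and single-step SGD and Adam updates (using the commonly cited Adam defaults), then fits a one-weight model to data generated by y = 3x. The gradient is derived by hand here; deep learning frameworks compute it automatically via backpropagation.

```python
import numpy as np


# --- Activation functions: introduce non-linearity ---
def relu(z):
    return np.maximum(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    # Subtracting the max avoids overflow and does not change the result
    e = np.exp(z - np.max(z, axis=-1, keepdims=True))
    return e / np.sum(e, axis=-1, keepdims=True)


# --- Loss functions: quantify prediction error ---
def mse(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)


def cross_entropy(y_onehot, probs, eps=1e-12):
    # Mean negative log-probability assigned to the correct class
    return -np.mean(np.sum(y_onehot * np.log(probs + eps), axis=-1))


# --- Optimizers: update parameters to reduce the loss ---
def sgd_step(w, grad, lr=0.1):
    return w - lr * grad


def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    m = beta1 * m + (1 - beta1) * grad       # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)             # bias correction; t starts at 1
    v_hat = v / (1 - beta2 ** t)
    return w - lr * m_hat / (np.sqrt(v_hat) + eps), m, v


# --- Toy training loop: fit y_hat = w * x to data generated by y = 3x ---
x = np.linspace(0.0, 1.0, 20)
y = 3.0 * x


def grad_mse(w):
    # d/dw of mean((w*x - y)^2) is mean(2 * (w*x - y) * x)
    return np.mean(2.0 * (w * x - y) * x)


w_sgd = 0.0
w_adam, m, v = 0.0, 0.0, 0.0
for t in range(1, 501):
    w_sgd = sgd_step(w_sgd, grad_mse(w_sgd))
    w_adam, m, v = adam_step(w_adam, grad_mse(w_adam), m, v, t, lr=0.05)

print("SGD:", round(float(w_sgd), 3), "Adam:", round(float(w_adam), 3))
print("final MSE (SGD):", mse(y, w_sgd * x))
```

Both optimizers should end up close to the true weight of 3; SGD uses one fixed step size, while Adam rescales each step by running statistics of the gradient.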

Medium

Can AI Argue? Watch ChatGPT and Grok Clash

- An AI debate between ChatGPT and Grok was staged to assess who is the better leader, Elon Musk or Sam Altman.

- The two models, Grok and ChatGPT, demonstrated different argument styles that reflect their training and their organizations' objectives.

- The experiment showcases AI's ability to simulate sharp arguments, potentially enhancing critical thinking in education and stress-testing laws in policymaking.

- Although the bots' biases were evident in the debate, their exchange challenges assumptions and encourages deeper thinking.

Analyticsindiamag

Infosys Expands Presence in Assam with New Development Centre

- Infosys has established a 230-member Project Development Centre in Guwahati, Assam.

- The move is expected to boost employment, drive digital innovation, and attract further investment in the region.

- The Assam government reaffirmed its commitment to fostering a thriving technology ecosystem.

- Mukesh Ambani, chairman of Reliance Industries, also announced a significant investment in Assam.

Analyticsindiamag

Amazon Forms Frontier AI & Robotics Team to Revolutionise Automation

- Amazon has formed a Frontier AI & Robotics (FAR) team based in San Francisco and Seattle.

- The team aims to develop robotic foundation models for high-level reasoning and low-level dexterity and mobility skills.

- Other companies, including Meta, Apple, NVIDIA, Figure AI, Tesla, and Apptronik, are also investing in robotics.

- Amazon's FAR team is hiring for various roles in software engineering and research.

Analyticsindiamag

Will India’s VC Market Drive 100 AI Unicorns in the Next Decade?

- India's AI startup ecosystem is still in its early stages, with Krutrim AI being the only AI unicorn so far.

- Although venture capital funding in India recovered, rising to $11.2 billion in 2024, AI investment remains slow: Indian AI startups raised only $560 million in 2024, a 49.4% drop.

- However, with government-backed AI initiatives, incubator programs from Google and NVIDIA, and major VCs such as Peak XV Partners allocating ₹16,000 crore, AI funding could accelerate.

- If this momentum continues, India may see a surge in AI unicorns over the next decade, closing the gap with the US and China.

Medium

Machine Learning: The Future of Intelligent Computing

- Machine Learning (ML) has reshaped industries and driven innovation by enabling systems to learn from data without explicit programming.

- ML, a subset of Artificial Intelligence, focuses on developing algorithms that make predictions and optimize processes based on data patterns.

- ML's roots trace back to the mid-20th century, and its major advances in the 21st century have been driven by greater computational power and deep learning.

- Core ML principles include data-driven learning, pattern recognition, generalization, and optimization, all of which are crucial to building effective models.

- ML models operate in supervised, unsupervised, and reinforcement learning paradigms, with applications in various fields like healthcare and finance.

- Supervised learning includes algorithms like Linear Regression and Logistic Regression for prediction and classification tasks.

- Unsupervised learning algorithms like K-Means and Hierarchical Clustering group and analyze unlabeled data for pattern recognition.

- Reinforcement learning, used in robotics and other AI systems, teaches models optimal actions through trial and error.

- Deep learning, with neural network architectures such as CNNs and RNNs, has revolutionized image recognition and natural language processing.

- In healthcare, ML improves diagnostics, treatment planning, personalized medicine, and drug discovery, leading to better patient care.
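To make the supervised and unsupervised examples above concrete, the sketch below fits a linear regression by ordinary least squares and clusters 2-D points with k-means (Lloyd's algorithm). It assumes NumPy and uses synthetic data; it is a teaching sketch, and libraries such as scikit-learn provide tested implementations of both.

```python
import numpy as np

rng = np.random.default_rng(0)

# --- Supervised learning: linear regression via ordinary least squares ---
X = rng.uniform(0.0, 10.0, size=(100, 1))
y = 2.5 * X[:, 0] + 1.0 + rng.normal(0.0, 0.5, size=100)  # synthetic: slope 2.5, intercept 1.0
X_design = np.hstack([np.ones((len(X), 1)), X])            # column of ones models the intercept
coef, *_ = np.linalg.lstsq(X_design, y, rcond=None)
print("intercept, slope:", np.round(coef, 2))


# --- Unsupervised learning: k-means clustering (Lloyd's algorithm) ---
def assign(points, centroids):
    # Index of the nearest centroid for each point
    dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)


def kmeans(points, k, iters=100, seed=0):
    r = np.random.default_rng(seed)
    centroids = points[r.choice(len(points), size=k, replace=False)]
    for _ in range(iters):
        labels = assign(points, centroids)
        # Move each centroid to the mean of its points; keep it in place if its cluster is empty
        new = np.array([points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return centroids, assign(points, centroids)


# Two unlabeled blobs centred near (0, 0) and (5, 5)
blobs = np.vstack([rng.normal((0.0, 0.0), 0.3, size=(50, 2)),
                   rng.normal((5.0, 5.0), 0.3, size=(50, 2))])
centroids, labels = kmeans(blobs, k=2)
print("centroids:", np.round(centroids, 2))
```

The regression learns from labeled targets `y`, while k-means never sees labels and recovers the two groups purely from the geometry of the points.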

Analyticsindiamag

Bengaluru-based AI Lab LossFunk Introduces IPO, a Novel Approach to Aligning LLMs Without External Feedback

- IPO performed comparably to methods that use state-of-the-art (SOTA) reward models.

- The new technique offers a more efficient and scalable method for aligning LLMs with human preferences.

- LossFunk's mission is to build a state-of-the-art foundational reasoning model from India.

Analyticsindiamag

Wipro Commits $200 Million to Wipro Ventures for Startup Investments

- Wipro Ventures, the venture arm of Wipro Limited, has committed $200 million to accelerate investments in early to mid-stage startups.

- With this funding, Wipro Ventures aims to identify high-potential startups and integrate their solutions into enterprise environments.

- Over the last decade, Wipro Ventures has backed 37 startups in various fields and facilitated startup-driven solutions for over 250 Wipro customers worldwide.

- The investment aligns with Wipro's strategy of leveraging external innovation to enhance its technology offerings in the IT services sector.

Analyticsindiamag

Microsoft Launches Phi-4-multimodal and Phi-4-mini, Matches OpenAI’s GPT-4o

- Microsoft has launched Phi-4-multimodal and Phi-4-mini, small language models (SLMs).

- Phi-4-multimodal integrates speech, vision, and text processing, enabling natural and context-aware interactions.

- Phi-4-multimodal surpasses Google Gemini and is comparable to OpenAI’s GPT-4o.

- Phi-4-mini is a text-based model suitable for reasoning, coding, and long-context tasks.

Analyticsindiamag

How Databricks Powers Domino’s to Deliver 1.5 Million Pizzas Daily

- Domino's leverages Databricks' Mosaic AI tools to enhance customer insights and streamline operations.

- Databricks' vector search enables rapid retrieval of customer feedback for sentiment analysis and theme identification.

- Model serving endpoints in Databricks facilitate sentiment analysis and feedback classification with optimized performance.

- Domino's plans to expand AI-powered insights to other platforms and integrate additional social media channels for feedback analysis.
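Vector search of the kind described above ranks stored items by their similarity to a query embedding. The sketch below shows the core idea with cosine similarity over a toy bag-of-words embedding, assuming NumPy. It does not use Databricks' Vector Search API, and the feedback strings are invented for illustration; a real deployment would use a learned embedding model and an approximate nearest-neighbor index.

```python
import re

import numpy as np

# Hypothetical customer feedback snippets
feedback = [
    "Pizza arrived cold and late",
    "Delivery driver was friendly and fast",
    "The crust was soggy and the pizza was cold",
    "Great app, easy to reorder my favourite pizza",
]


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


vocab = sorted({w for doc in feedback for w in tokenize(doc)})
index = {w: i for i, w in enumerate(vocab)}


def embed(text):
    # Toy bag-of-words vector, L2-normalized; stands in for a learned embedding model
    v = np.zeros(len(vocab))
    for w in tokenize(text):
        if w in index:
            v[index[w]] += 1.0
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else v


doc_matrix = np.vstack([embed(d) for d in feedback])


def search(query, k=2):
    # With unit-length vectors, cosine similarity reduces to a dot product
    scores = doc_matrix @ embed(query)
    top = np.argsort(scores)[::-1][:k]
    return [(feedback[i], float(scores[i])) for i in top]


for text, score in search("cold pizza"):
    print(f"{score:.2f}  {text}")
```

Retrieved snippets like these could then be passed to a sentiment or theme classifier, which is the role the article assigns to model serving endpoints.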
