Data Science News

Medium

GitHub Explained: The Superhero Hideout for Your Code!

- GitHub is a giant digital notebook where programmers, data analysts, and artists store their work.

- GitHub works with Git, which keeps track of changes in files, acting like a time machine for projects.

- GitHub allows users to create repositories to store and organize code, data files, images, and notes.

- GitHub is beneficial for data analysts as it helps store data and code, track changes, collaborate, and automate workflows.

Medium

379

Image Credit: Medium

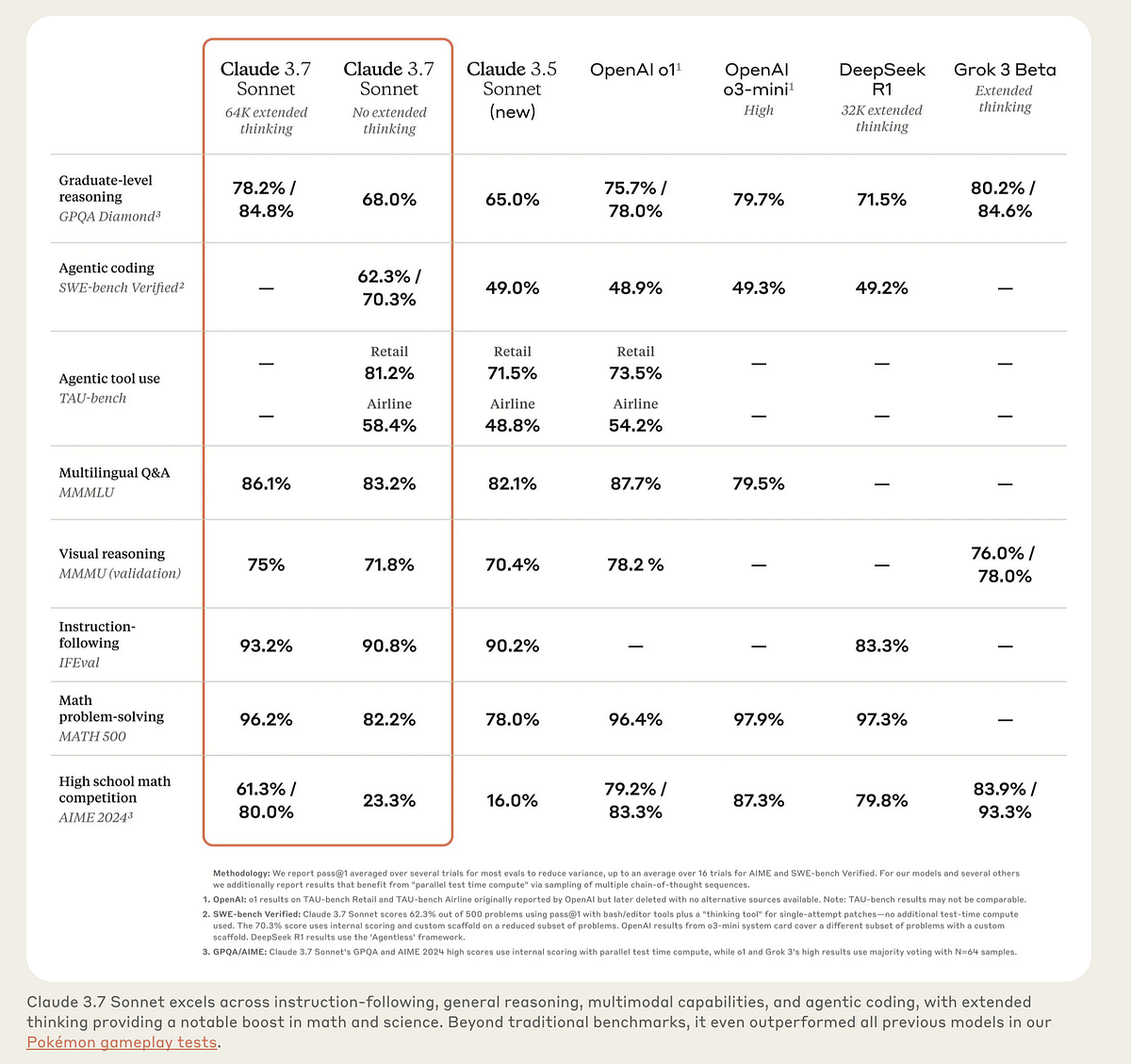

I thought AI could not possibly get any better. Then I met Claude 3.7 Sonnet

- Anthropic has released Claude 3.7 Sonnet, a reasoning model aimed at more accurate answers, comparable to DeepSeek R1 and OpenAI's o3-mini.

- The article compares it with OpenAI's models on financial analysis and algorithmic trading tasks.

- In SQL query generation tests, Claude 3.7 Sonnet outperformed o3-mini by finding relevant stocks that matched the given criteria.

- For generating trading strategies, Claude demonstrated better performance in articulating its thought process and providing improved rules over o3-mini.

- Claude costs more than o3-mini: $3 per million input tokens and $15 per million output tokens.

- While Claude 3.7 Sonnet shows strengths in tasks like ambiguous SQL queries and JSON object generation, its higher price calls for a closer look at cost-effectiveness.

- The AI landscape saw significant advancements with the release of DeepSeek R1, followed by responses from tech giants like Google and OpenAI with Gemini 2.0 Flash and o3-mini.

- The article explores the capabilities of Claude 3.7 Sonnet in addressing ambiguous tasks, showcasing strong reasoning abilities for financial analysis and trading strategies.

- Overall, Claude 3.7 Sonnet proves to be a powerful model but with a higher cost compared to competitors like OpenAI's models.

- The AI war intensifies with newer, more powerful models like Claude 3.7 Sonnet reshaping the landscape for advanced reasoning and solution generation tasks.
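The per-token prices quoted above can be turned into a per-request cost with simple arithmetic. The sketch below is only an illustration: the function name and the token counts are hypothetical, and the prices are the ones stated in the summary.

```python
# Prices quoted in the summary above (USD per million tokens).
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the quoted prices."""
    return (
        input_tokens * INPUT_PRICE_PER_MTOK
        + output_tokens * OUTPUT_PRICE_PER_MTOK
    ) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 2,000 input tokens and 1,000 output tokens.
    print(f"${request_cost(2_000, 1_000):.4f}")
```

Because output tokens cost five times as much as input tokens at these prices, long generated answers tend to dominate the bill.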

Medium

The Illusion of Progress: Are We Truly Learning?

- The world has made progress in terms of GDP, literacy, and scientific breakthroughs, but has our average mental state improved?

- AI reflects the trend of accumulating information without deepening understanding, as it excels in performance but lacks comprehension.

- We risk becoming like machines by optimizing our time, work, and social interactions, without integrating knowledge into meaningful understanding.

- To avoid mistaking performance for wisdom, it is important to cultivate a deeper intelligence that encompasses doubt, wonder, and moral dilemmas.

Medium

Why choose numpy array over python list?

- NumPy arrays are preferred over Python lists for large datasets, compute-intensive tasks, and complex mathematical operations.

- On large datasets, NumPy arrays perform mathematical operations much faster than Python lists.

- NumPy uses vectorization, and many of its core functions are written in C and C++, which makes it faster and more efficient.

- NumPy arrays store their elements directly, so they use less memory than Python lists, which store references to separate objects.
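The speed and memory claims above can be checked with a small experiment. This is a rough sketch, not a rigorous benchmark: the array size and repeat count are arbitrary choices, timings vary by machine, and the list's memory figure is an estimate that adds the size of each integer object to the size of the list itself.

```python
import sys
import timeit

import numpy as np

n = 1_000_000
py_list = list(range(n))
np_array = np.arange(n, dtype=np.int64)

# Element-wise doubling: an explicit Python loop vs. one vectorized operation.
list_time = timeit.timeit(lambda: [x * 2 for x in py_list], number=5)
array_time = timeit.timeit(lambda: np_array * 2, number=5)

# A list stores references to separate int objects; the array stores raw 8-byte values.
list_bytes = sys.getsizeof(py_list) + sum(sys.getsizeof(x) for x in py_list)
array_bytes = np_array.nbytes

print(f"list:  {list_time:.3f} s, {list_bytes / 1e6:.1f} MB")
print(f"array: {array_time:.3f} s, {array_bytes / 1e6:.1f} MB")
```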

Medium

415

Image Credit: Medium

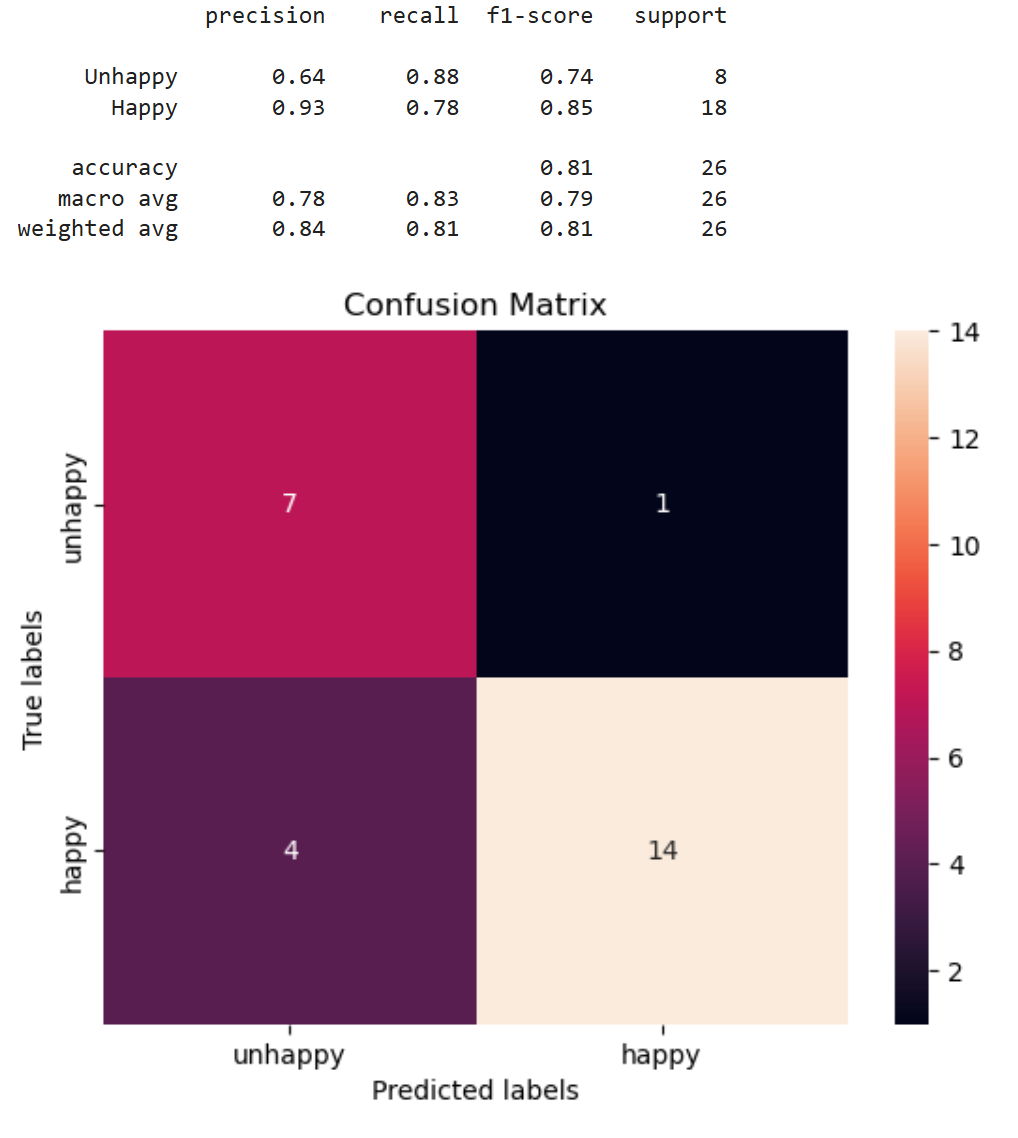

Predicting Customer Happiness

- Understanding customer satisfaction is crucial for business success in today’s competitive market.

- A logistics and delivery startup aimed to predict customer happiness based on survey responses and streamline their survey by identifying significant predictors of customer sentiment.

- The dataset, analyzed using Python with packages like pandas and sklearn, included responses on a scale of 1 to 5 for customer satisfaction surveys.

- Data validation, Exploratory Data Analysis (EDA), Predictive Model Evaluation, Feature Engineering, and Model Training were key steps in the analysis.

- Findings included correlations between survey responses and customer happiness, important predictors of unhappiness, and optimal feature selection using Recursive Feature Elimination.

- Logistic Regression, Random Forest, and Support Vector Classifier (SVC) were evaluated for prediction accuracy and recall, with Random Forest performing best.

- Reducing features to optimize the Random Forest model improved Unhappy recall to 88% while maintaining an overall accuracy of 81%.

- The project successfully utilized data analysis and machine learning to predict customer happiness and identify critical survey questions related to customer sentiment.

- Recommendations included focusing on maximizing customer satisfaction in key areas identified through the analysis.

- The author expressed gratitude for the Apziva Residency program and mentorship received in enhancing their skills and knowledge in data science.
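The feature-selection step described above, Recursive Feature Elimination around a Random Forest, might look roughly like the sketch below in scikit-learn. It is not the author's code: the data is a synthetic stand-in generated with `make_classification`, and the feature count, number of kept features, and hyperparameters are assumptions chosen only for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE
from sklearn.metrics import accuracy_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the survey data (NOT the article's dataset):
# 6 features play the role of survey questions, label 0 = unhappy, 1 = happy.
X, y = make_classification(
    n_samples=500, n_features=6, n_informative=3, n_redundant=0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Recursively drop the least important feature until 3 remain.
selector = RFE(
    RandomForestClassifier(n_estimators=100, random_state=0),
    n_features_to_select=3,
)
selector.fit(X_train, y_train)

# Retrain on the kept features only and score on held-out data.
model = RandomForestClassifier(n_estimators=100, random_state=0)
model.fit(selector.transform(X_train), y_train)
pred = model.predict(selector.transform(X_test))

print("kept features:", selector.support_)
print("accuracy:", accuracy_score(y_test, pred))
print("recall (class 0):", recall_score(y_test, pred, pos_label=0))
```

Reporting recall for the unhappy class separately from overall accuracy mirrors the summary's focus on catching unhappy customers.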

Medium

Mastering Startup Fundraising: Proven Strategies to Secure Funding in the U.S. Market

- To secure investment, startups must conduct thorough research, financial forecasting, and strategic valuation, presenting structured proposals supported by quantifiable data.

- Understanding market trends, industry shifts, and investor preferences is crucial for fundraising success.

- Market research, including primary and secondary data analysis, provides insights into industry size, growth potential, and competitive landscape.

- Tailoring pitches to align with investor priorities, highlighting differentiation from competitors, and presenting clear financial roadmaps enhance funding prospects.

- Financial forecasting, revenue models, cost structures, break-even analysis, and accurate valuation are key elements in fundraising negotiations.

- Investors evaluate risks, storytelling, founder credibility, and emotional connection in addition to financial projections.

- Engaging presentations, strategic storytelling, and personalized outreach messages improve investor connections.

- Attending startup events, leveraging digital platforms, and securing warm introductions aid in connecting with investors.

- Establishing credibility, building trust through transparency, and consistent communication are vital for sustaining investor support.

- Achieving milestones, tracking measurable metrics, and demonstrating growth potential are essential for securing follow-on funding and long-term success.

Medium

141

Image Credit: Medium

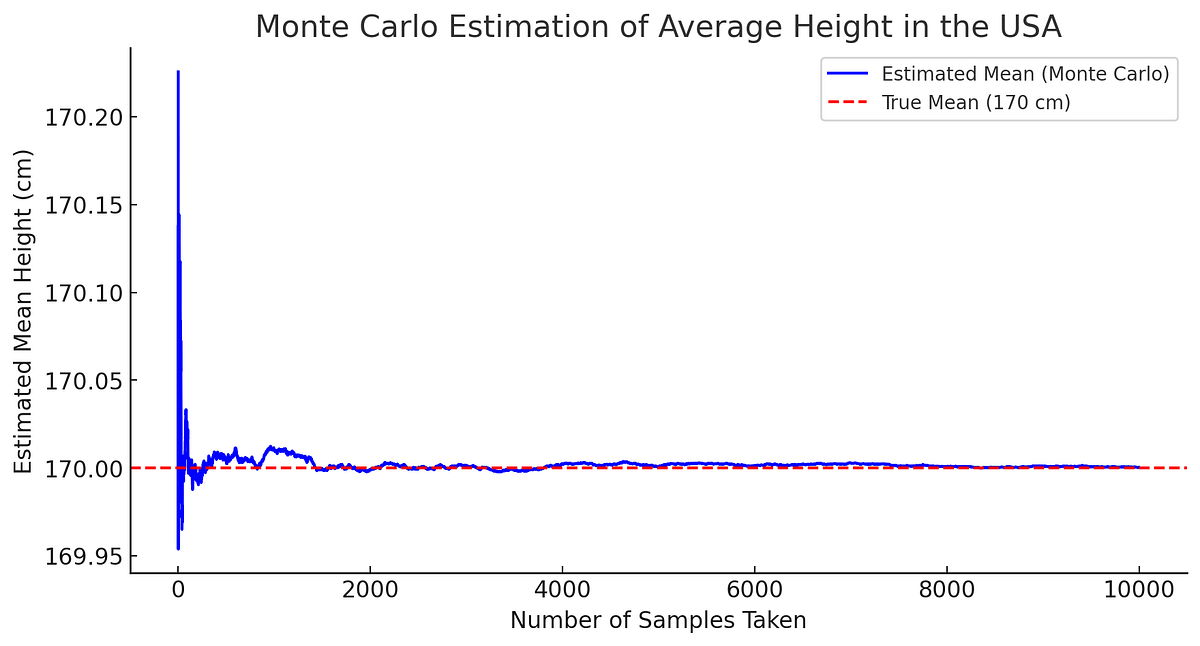

Estimating the Average Height in the USA Using the Monte Carlo Method

- The Monte Carlo Method is a statistical technique that uses repeated random sampling to approximate unknown values.

- To estimate the average height of the U.S. population, we compute sample means for different groups and repeat this process for a large number of trials.

- The Law of Large Numbers states that as the number of samples increases, the sample mean approaches the true population mean.

- A Monte Carlo simulation was run to estimate the average height in the USA, showing that the estimated mean stabilizes around the true value.
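The procedure above can be sketched with the standard library alone. The population here is simulated, and its mean of 170 cm and standard deviation of 10 cm are illustrative assumptions rather than real U.S. figures; the function name and sample sizes are likewise hypothetical.

```python
import random
import statistics

random.seed(42)

# Hypothetical population: heights drawn from a normal distribution.
# Mean 170 cm and standard deviation 10 cm are illustrative, not real U.S. figures.
population = [random.gauss(170, 10) for _ in range(100_000)]
true_mean = statistics.fmean(population)


def monte_carlo_mean(data, sample_size, trials):
    """Average the means of `trials` random samples of `sample_size` items."""
    sample_means = [
        statistics.fmean(random.sample(data, sample_size)) for _ in range(trials)
    ]
    return statistics.fmean(sample_means)


for trials in (10, 100, 1_000):
    estimate = monte_carlo_mean(population, sample_size=50, trials=trials)
    print(f"{trials:>5} trials: estimate {estimate:.2f} cm "
          f"(population mean {true_mean:.2f} cm)")
```

As the number of trials grows, the estimate should settle closer to the population mean, which is the Law of Large Numbers at work.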

Medium

The Vel-Kai Lag: Overcoming Stagnation in AI-NANO Evolution

- The Vel-Kai Lag is the time delay between AI-driven self-correction and nano-scale material adaptation, and must be minimized through dynamic entropy regulation.

- Entropy stabilization for AI-NANO integration is defined as a mathematical model that optimizes real-time entropy adaptation without systemic breakdown.

- The proposed implementation model for AI-NANO synergy includes adaptive learning for nanosystems, integrating real-time recursive feedback loops.

- The economic and industry impact of Vel-Kai Lag integration includes cost reduction, market disruption, and applications in biomedical engineering.

Medium

Moving Faster without Breaking Things (with AI)

- The current boom in artificial intelligence has seen many companies trying to disrupt the market with generative AI products and services.

- While some are succeeding, many are overpromising and underdelivering, like Cognition's 'Devin' software engineer agent, which has been found to be slow and to produce incorrect results.

- Despite the challenges, AI tools can be put to practical use in various areas of business, with examples like color classification of shoes using image analysis and natural-language prompts.

- These iterative improvements might not revolutionize the world by themselves, but they can collectively make significant advancements in areas such as search results, demand forecasting, and product metadata.

Towards Data Science

6 Common LLM Customization Strategies Briefly Explained

- There are various specialized techniques for customizing LLMs, including LoRA fine-tuning, Chain of Thought, Retrieval Augmented Generation, ReAct, and Agent frameworks.

- Selecting an appropriate foundation model is the first step in customizing LLMs, with options from open-source platforms like Hugging Face or proprietary models from cloud providers and AI companies.

- Factors to consider when choosing LLMs include open source vs. proprietary model, task compatibility, architecture, and size of the model.

- Six common strategies for LLM customization, ordered by increasing resource consumption, are prompt engineering, decoding strategy, RAG, Agent, fine-tuning, and RLHF.

- Prompt engineering involves crafting prompts strategically to control LLM responses, with techniques like zero-shot, one-shot, and few-shot prompting.

- Techniques like Chain of Thought and ReAct aim to improve LLM performance on multi-step problems by breaking down reasoning tasks and integrating action spaces for decision-making.

- ReAct combines reasoning trajectories with an action space to strengthen LLM capabilities through interacting with the environment.

- Fine-tuning trains an LLM further on specialized datasets, with approaches like full fine-tuning and parameter-efficient fine-tuning to optimize model performance.

- RLHF is a reinforcement learning technique that fine-tunes LLMs based on human preferences, utilizing a reward model and a reinforcement learning policy.

- The article provides practical insights and examples on implementing these LLM customization strategies to improve model efficiency and performance.
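Of the strategies above, prompt engineering is the cheapest to illustrate. The sketch below shows how zero-shot and few-shot prompts differ only in whether worked examples precede the query. The function, the task wording, and the example reviews are all hypothetical, and no model is called.

```python
def build_prompt(task, examples, query):
    """Assemble a prompt: zero-shot if `examples` is empty, few-shot otherwise."""
    lines = [task, ""]
    for text, label in examples:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)


task = "Classify the sentiment of each review as positive or negative."
# Hypothetical demonstrations; a real prompt would use examples from the target domain.
examples = [
    ("The delivery arrived early and intact.", "positive"),
    ("The package was damaged and support never replied.", "negative"),
]
query = "Checkout was quick, but the courier was late."

zero_shot = build_prompt(task, [], query)
few_shot = build_prompt(task, examples, query)
print(few_shot)
```

Passing a single example to the same function would give a one-shot prompt.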

VentureBeat

Anthropic’s Claude 3.7 Sonnet takes aim at OpenAI and DeepSeek in AI’s next big battle

- Anthropic has introduced Claude 3.7 Sonnet, offering users control over AI's thinking time.

- The release of Claude Code indicates Anthropic's aggressive entry into the enterprise AI market.

- Anthropic believes precise control over AI reasoning will give them an edge over competitors like OpenAI and DeepSeek.

- Claude 3.7 Sonnet performs well in real-world applications, surpassing competitors in certain areas.

- The model demonstrates high accuracy in graduate-level reasoning tasks and outperforms DeepSeek-R1.

- DeepSeek's breakthrough challenged traditional AI development costs and infrastructure requirements.

- Anthropic's hybrid model offers a middle path, allowing fine-tuning of AI performance based on tasks at hand.

- Anthropic's unified system could eliminate the need for separate AI systems for different tasks.

- Claude Code enables developers to delegate engineering tasks directly to AI with human approval.

- Anthropic's approach aims to reshape how businesses deploy AI, focusing on both routine tasks and complex reasoning.

Medium

How to Master Data Structures & Algorithms Fast: The Ultimate Guide

- Learning data structures and algorithms (DSA) is essential for developers as it transforms how code is written.

- To master DSA efficiently, start by learning essential data structures and implementing key algorithms.

- Utilize visualization tools and engage in deliberate practice with routine problem-solving.

- Being part of a DSA community and referring to recommended resources can enhance learning.

Medium

Hands-On: Demand Forecasting Model with TensorFlow

- This article is a hands-on guide for building a demand forecasting model using TensorFlow.

- The author emphasizes the importance of data preparation techniques in effectively training the model.

- The tutorial focuses on special techniques for handling data preparation and creating a forecasting module.

- The article highlights the use of TensorFlow as a powerful framework for building deep learning models.

VentureBeat

xAI’s new Grok 3 model criticized for blocking sources that call Musk, Trump top spreaders of misinformation

- Elon Musk’s AI startup xAI is facing criticism for instructing its Grok 3 model to avoid referencing sources that label Musk and Trump as top spreaders of misinformation.

- There are concerns about reputation management and potential bias in content moderation, raising questions about AI alignment and transparency.

- Grok 3's limitations in criticizing Musk and Trump have sparked controversy over conflicts of interest and public safety compromises.

- Criticism mounted following a disclosure that Grok 3 was directed to ignore sources mentioning Musk or Trump spreading misinformation.

- The incident led to scrutiny of xAI's internal prompt adjustments and sparked a debate over AI model control and ownership influence.

- AI power users and tech workers raised concerns over the changing system prompts that seemed to protect Musk and Trump from criticism.

- The controversy surrounding Grok 3 highlights the significance of assessing AI model alignment and potential biases before implementation.

- Grok 3 received both praise and criticism for its rapid content generation capabilities but faced backlash for censorship and incomplete safety guardrails.

- The revelations have reignited discussions on ensuring AI models serve users' interests rather than promoting creators' agendas.

- The situation underlines the need for diligence in evaluating the technical capabilities and ethical orientation of AI models in business decision-making.

Analyticsindiamag

Does India Stand to Benefit the Most from AI-Centric Education?

- AI-centric education has the potential to bridge learning gaps and equip India's student population for the future.

- A Harvard study suggests that students prefer AI tutors over human teachers and learn twice as much using AI.

- Personalized pacing and AI handling introductory material can make education more meaningful and applicable in the real world.

- India, with its large student population and young median age, stands to benefit the most from AI-centric education.
