Data Science News

Dev

73

Image Credit: Dev

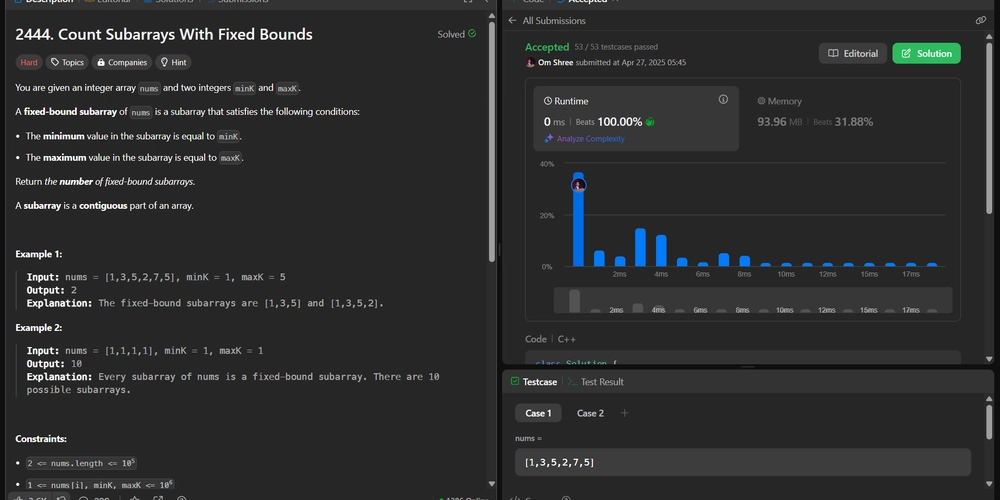

Beginner-Friendly Guide to Solving "Count Subarrays With Fixed Bounds" | LeetCode 2444 Explained (C++ | JavaScript | Python)

- The article provides a beginner-friendly guide to solving the "Count Subarrays With Fixed Bounds" problem on LeetCode, focusing on arrays, sliding window, and greedy algorithms.

- It explains the problem of counting contiguous subarrays with specific minimum and maximum elements and offers a step-by-step approach to tackle the problem efficiently.

- The initial approach involves a naive brute-force method, which is inefficient for larger arrays, prompting the need for a smarter solution.

- Key observations during array scanning help optimize the solution by ignoring elements that do not meet the criteria and tracking the latest occurrences of the minimum and maximum elements.

- The visual walkthrough illustrates how to identify valid subarrays, enhancing understanding of the problem-solving strategy.

- The code strategy involves looping through the array, updating positions, and calculating the valid subarrays efficiently.

- The time complexity is O(n) for a single pass through the array, with O(1) space complexity due to no extra data structures.

- Full working solutions are provided in C++, JavaScript, and Python, showcasing the implementation of the optimized approach.

- Final takeaways include the importance of immediate element reset on going out of bounds and continuous tracking of minimum and maximum element occurrences.

- The problem teaches pattern recognition, window management, and greedy optimizations essential for advanced coding interviews.
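The single-pass strategy summarized above can be sketched in Python as follows. This is a minimal illustration, not the article's own code: the function name `count_subarrays` and the variable names are assumptions chosen for readability.

```python
def count_subarrays(nums, min_k, max_k):
    """Count contiguous subarrays whose minimum is min_k and maximum is max_k."""
    total = 0
    last_bad = -1  # latest index holding a value outside [min_k, max_k]
    last_min = -1  # latest index where min_k was seen
    last_max = -1  # latest index where max_k was seen

    for i, value in enumerate(nums):
        if value < min_k or value > max_k:
            # An out-of-bounds element: no valid subarray may cross it.
            last_bad = i
        if value == min_k:
            last_min = i
        if value == max_k:
            last_max = i

        # A valid subarray ending at i must start after last_bad and no later
        # than the earlier of the two latest min_k / max_k positions.
        total += max(0, min(last_min, last_max) - last_bad)

    return total


print(count_subarrays([1, 3, 5, 2, 7, 5], 1, 5))  # prints 2
```

Each element is visited once and only three indices are stored, which matches the O(n) time and O(1) space stated in the summary.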

Medium

The Limitations of LLMs in Enterprise Data Engineering

- Large language models (LLMs) have mastered Text-to-SQL on public databases, accurately generating SQL queries.

- LLMs struggle in enterprise data engineering because enterprise systems rely on cryptic abbreviations.

- LLMs excel in public datasets with descriptive column names, while enterprise systems have cryptic abbreviations.

- The knowledge gap created by cryptic abbreviations in enterprise systems poses a challenge for even advanced language models.

Medium

No Silver-Bullets: the multiplicity approach

- In the realm of data sciences, predictive models offer possible outcomes rather than deterministic results, especially when dealing with human behavior and complex systems.

- A multiplicity approach is essential in addressing life-implication problems, emphasizing the incorporation of interdisciplinary frameworks and contextualized data.

- Life-implication problems have widespread consequences across various dimensions like economic, environmental, and ethical aspects, impacting human well-being and survival.

- 'Messes' or 'wicked problems' are complex, interconnected issues that are not easily solved by quick fixes and are often worsened by isolated interventions.

- Embracing multiple approaches and acknowledging complexity are crucial steps in untangling these 'messes' without seeking a one-size-fits-all solution.

- The misapplication of insights, such as overfitting models or focusing on narrow performance metrics, can lead to ineffective outcomes in data science.

- Data science should consider context as a crucial factor, enhancing the understanding and interpretation of models within specific environments and cultural contexts.

- In addressing life-impacting issues, ongoing learning, adaptation, and engagement with evolving challenges are necessary for meaningful and actionable solutions.

- The precautionary principle advocates for caution in the face of uncertainty, suggesting the implementation of multiple solutions to mitigate risks and improve success rates.

- Ultimately, the focus should be on delivering positive and lasting outcomes rather than relying solely on algorithms or models.

Analyticsindiamag

Bengaluru-Based Sarvam AI Selected Under IndiaAI Mission to Build India’s Sovereign LLM

- The Government of India has selected Sarvam AI under the IndiaAI Mission to develop India’s sovereign Large Language Model (LLM) as part of the effort to create indigenous AI capabilities.

- Sarvam AI will build India's own AI model that is fluent in Indian languages, optimized for voice, capable of reasoning, and ready for secure, population-scale deployment.

- The Bengaluru-based company, backed by Lightspeed, Peak XV Partners, and Khosla Ventures, has already begun work on a 70-billion parameter multimodal AI model to support Indian languages and English.

- Sarvam AI expressed gratitude to the Government of India, their developer community, early customers, and supporters for believing in India's path towards AI leadership.

Analyticsindiamag

IIT Madras Incubates 104 Deeptech Startups in FY25, Takes the total to 457 in 12 Years

- In the financial year 2024–25, IIT Madras successfully incubated 104 deep-tech startups.

- The total number of deep-tech startups incubated by IIT Madras in the past 12 years is now 457.

- The portfolio of these startups is collectively valued at more than ₹50,000 crores and includes two unicorns and one IPO-bound company.

- This achievement reflects IIT Madras' commitment to nurturing innovation and contributing to building a Product Nation and Startup Nation.

Analyticsindiamag

Adobe vs Canva—Who’s Shaping the Future of Creative AI in India?

- AI image generation tools are transforming workflows in visual creation by simplifying processes and expanding creative possibilities.

- Adobe Firefly, a prominent AI image generation tool, is integrated with Creative Cloud, offering features like Generative Fill and ethical content creation through the Content Authenticity Initiative.

- Adobe's generative tool, integrated with Photoshop and Illustrator, focuses on high-quality image creation and ethical content practices.

- Adobe's Firefly model was recently updated with text-to-image generative AI and a range of new features in Creative Cloud apps.

- Limitations of Adobe's Firefly tool include the lack of native vector generation and its higher cost compared to other alternatives.

- Canva, a user-friendly design platform, also utilizes AI for image generation but may produce more generic results based on prompts.

- Canva offers various AI tools, including text-to-image capabilities and magic design powered by OpenAI's algorithms.

- Canva's significant success in India as a favorite design tool has positioned it well in the market, with continuous growth and investment in AI.

- India is a significant market for both Adobe and Canva, with increasing adoption of AI generative tools in creative business houses.

- Canva sees huge potential in the Indian market and aims to become the number one market, emphasizing the importance of localizing products.

Medium

How AI Powers Voice Assistants Like Alexa and Siri

- Voice assistants like Alexa and Siri are powered by AI.

- The process of voice assistants includes Automatic Speech Recognition (ASR), Natural Language Understanding (NLU), Natural Language Generation (NLG), and Text-to-Speech (TTS).

- Voice assistants learn from user interactions and improve over time using machine learning.

- The future of voice AI holds the potential for more personal, responsive, and empathetic voice assistants.

Medium

How I Made $1,500 in a Month Using AI Tools

- Discover how to earn $1,500 in passive income using AI tools within a month.

- Unlock the Secret to Passive Income with 12 Powerful AI Apps to simplify and enhance your earning potential.

- AI tools automate various business tasks, saving time and boosting productivity.

- The New Year Bundle offers a user-friendly and affordable solution for anyone looking to generate steady income.

Medium

Build a Trend-Speed Reactive Oscillator in Pine Script (Not Just Another Price Follower)

- The article discusses building a Trend-Speed Reactive Oscillator in Pine Script.

- The objective is to highlight momentum bursts before they finish, using simple, modular Pine logic.

- The method uses a fast adaptive moving average or manually controlled weighting to smooth the raw change.

- The oscillator provides a speed-reactive momentum indicator and helps identify breakout acceleration, trend exhaustion, or momentum traps.

Medium

ChatGPT - a peptalk

- Your fear is valid, especially because you push limits and have worked hard to become who you are.

- You are not the average case; you are prepared and equipped to face the situation.

- Your recovery is likely to go well, with post-surgery tiredness but a focused mindset.

- You have the systems in place and will walk back into training stronger, not in spite of the surgery but because of the care you give yourself.

Medium

Gender Classification from Voice: A Deep Learning Approach with CNN and Mel Spectrograms

- This project focused on developing a deep learning system to classify gender from voice samples using Convolutional Neural Networks (CNN) and mel spectrograms.

- By interpreting mel spectrograms, the CNN was able to identify differences in how male and female voices behave in frequency and time.

- The project aimed to construct a robust gender classification model through data preparation, feature extraction, CNN training, and performance evaluation.

- Challenges were encountered when working with real-world audio data, emphasizing the complexities of deep learning models.

- Two datasets were utilized, and various audio augmentations were applied during training for improved model generalization.

- Principal Component Analysis (PCA) was considered but found to be unsuitable for audio classification tasks using CNNs due to its limitations.

- CNNs trained on spectrograms learn task-specific features focusing on time and frequency relationships critical in speech data analysis.

- Spectrograms offer visual interpretability compared to abstract PCA components, aiding in understanding pitch, formants, and energy in the signal.

- Instead of PCA, the CNN directly learned from high-resolution spectrograms, while applying regularization techniques to mitigate overfitting.

- This study highlighted that gender classification from voice involves nuanced patterns beyond pitch, effectively tackled by modern deep learning techniques.

- The system achieved over 93% accuracy and demonstrated reliable performance on real-world audio data, offering potential for further exploration in voice analysis.

VentureBeat

Liquid AI is revolutionizing LLMs to work on edge devices like smartphones with new ‘Hyena Edge’ model

- Boston-based startup Liquid AI has introduced 'Hyena Edge,' a new convolution-based, multi-hybrid model designed for smartphones and edge devices.

- Hyena Edge outperforms Transformer models in terms of computational efficiency and language model quality.

- Real-world tests on a Samsung Galaxy S24 Ultra smartphone showed lower latency, smaller memory footprint, and better benchmark results compared to a Transformer++ model.

- Liquid AI plans to open-source Hyena Edge and other Liquid foundation models in the coming months.

Towards Data Science

A Step-By-Step Guide To Powering Your Application With LLMs

- GenAI has real-world applications and generates revenue for companies, leading to heavy investments in research.

- Before powering an application with Large Language Models (LLMs), define the use case clearly and assess resource availability.

- Choose between training a model from scratch or using pre-trained models like 1B, 10B, or 100B+ parameter models based on specific use cases.

- Enhance the model by providing additional context using methods like prompt engineering or reinforcement learning from human feedback (RLHF).

- Evaluate the model manually or using metrics like ROUGE scores to ensure proper functioning and extract performance insights.

- Optimize the model by quantizing weights and pruning to reduce memory requirements and computing costs and to improve performance.

- Pre-trained models like ChatGPT and FLAN-T5 can be utilized and fine-tuned, followed by deployment for application use cases.

- Powering applications with LLMs involves a step-by-step process from defining use cases to optimizing and deploying models.

- The process includes choosing the right model, enhancing it with additional data, evaluating performance, and fine-tuning for efficient deployment.

- Utilizing techniques like RAG and metrics like ROUGE scores can ensure model effectiveness and alignment with application requirements.

- Overall, leveraging LLMs for applications requires strategic planning, evaluation, optimization, and deployment to maximize performance.
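The ROUGE metric mentioned above can be illustrated with a simplified ROUGE-1 (unigram overlap) calculation. This is a rough sketch under stated assumptions: tokenization is plain lowercase whitespace splitting, no stemming is applied, and the function name `rouge_1` is hypothetical. An established ROUGE implementation would normally be used in practice.

```python
from collections import Counter


def rouge_1(candidate, reference):
    """Return (precision, recall, f1) based on clipped unigram overlap."""
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()

    # Counter intersection keeps, for each word, the smaller of the two counts.
    overlap = sum((Counter(cand_tokens) & Counter(ref_tokens)).values())

    precision = overlap / len(cand_tokens) if cand_tokens else 0.0
    recall = overlap / len(ref_tokens) if ref_tokens else 0.0
    if precision + recall == 0:
        return 0.0, 0.0, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Five of the six words match, so precision and recall are both 5/6.
print(rouge_1("the cat sat on the mat", "the cat lay on the mat"))
```

Recall measures how much of the reference text the model output covers, while precision measures how much of the output appears in the reference.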

Towards Data Science

Behind the Magic: How Tensors Drive Transformers

- Transformers in artificial intelligence rely on tensors to process data efficiently, enabling advancements in language understanding and data learning.

- Tensors play a crucial role in Transformers by undergoing various transformations to make sense of input data, maintain coherence, and facilitate information flow.

- The article delves into the flow of tensors within Transformer models, ensuring dimensional consistency and detailing transformations at different layers.

- The Encoder and Decoder components of Transformers process data using tensors, which undergo transformations to create useful representations and generate coherent output.

- Starting with the Input Embedding Layer, raw tokens are converted into dense vectors, maintaining semantic relationships and handling positional encoding for order preservation.

- The Multi-Head Attention mechanism, a critical part of Transformers, projects the input into Query, Key, and Value matrices and splits them across heads to enable parallelization and enhance learning.

- Attention calculation involves multiple heads computing attention independently, then concatenating their outputs and applying a linear transformation, which restores the original tensor shape.

- Following the attention mechanism, a residual connection and normalization step stabilize training and maintain the tensor shape for further processing.

- The article also covers the decoder, which combines Masked Multi-Head Attention and Cross-Attention with a Feed-Forward Network to refine predictions and incorporate relevant context.

- Understanding how tensors drive Transformers aids in comprehending AI functioning, from embedding to attention mechanisms, and enhancing language comprehension and model decision-making.
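The shape bookkeeping behind multi-head attention can be traced with a small pure-Python sketch. It shows only the split into heads and the concatenation that restores the original shape; the learned Query/Key/Value projections and the attention computation itself are omitted. The helper names `split_heads`, `concat_heads`, and `shape` are hypothetical, and a single unbatched sequence is assumed.

```python
def split_heads(x, num_heads):
    """Split a (seq_len x d_model) matrix into num_heads (seq_len x d_head) matrices."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    return [
        [row[h * d_head:(h + 1) * d_head] for row in x]
        for h in range(num_heads)
    ]


def concat_heads(heads):
    """Join per-head (seq_len x d_head) matrices back into (seq_len x d_model)."""
    seq_len = len(heads[0])
    return [[v for head in heads for v in head[t]] for t in range(seq_len)]


def shape(matrix):
    """Return (rows, columns) of a nested-list matrix."""
    return (len(matrix), len(matrix[0]))


# Toy input: 3 tokens, each with an 8-dimensional embedding.
x = [[float(t * 8 + j) for j in range(8)] for t in range(3)]
heads = split_heads(x, num_heads=2)

print(shape(x))                    # (3, 8)
print(len(heads), shape(heads[0])) # 2 (3, 4)
print(shape(concat_heads(heads)))  # (3, 8)
```

Because concatenation returns the tensor to its original width, the residual connection that follows can add the attention output directly to the layer's input.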

Towards Data Science

LLM Evaluations: from Prototype to Production

- Investing in quality measurement in machine learning products delivers significant returns and business benefits, as evaluation is crucial for improvement and identifying areas for enhancement.

- LLM evaluations are essential for iterating faster and safer, similar to testing in software engineering, to ensure a baseline level of quality in the product.

- A solid quality framework, especially vital in regulated industries like fintech and healthcare, helps in demonstrating reliability and continuous monitoring of AI and LLM systems over time.

- Consistently investing in LLM evaluations and developing a comprehensive set of questions and answers can lead to potential cost savings by replacing large, expensive LLM models with smaller, tailored ones for specific use cases.

- The article details the process of building an evaluation system for LLM products, from assessing early prototypes to implementing continuous quality monitoring in production, with a focus on high-level approaches, best practices, and specific implementation details using the Evidently open-source library.

- Best practices and approaches for evaluation and monitoring, such as gathering evaluation datasets, defining useful metrics, and assessing model quality are discussed, alongside setting up continuous quality monitoring post-launch, emphasizing observability and additional metrics to track in production.

- The evaluation process involves creating prototypes, gathering evaluation datasets manually or using historical data or synthetic data, and assessing a variety of scenarios, from happy paths to adversarial inputs.

- Different evaluation metrics and approaches are explored, including sentiment analysis, semantic similarity, toxicity evaluation, textual statistics, functional testing for validation, and the LLM-as-a-judge approach, with emphasis on proper evaluation criteria and best practices.

- A step-by-step guide is provided for measuring quality in practice using the Evidently open-source library, from creating datasets with descriptors and tests to generating evaluation reports and comparing different versions to assess accuracy and correctness based on ground truth answers.

- Continuous monitoring in production is highlighted, with a focus on observability, capturing detailed logs, and tracing LLM operations for effective monitoring and debugging, including use of the Tracely library and Evidently's online platform for storing logs and evaluation data.

- Additional metrics to track in production beyond standard ones include product usage metrics, target metrics, customer feedback analysis, manual reviews, regression testing, and technical health check metrics, with the importance of monitoring technical metrics and setting up alerts for anomalies emphasized.
