Data Science News

Educba

Image Credit: Educba

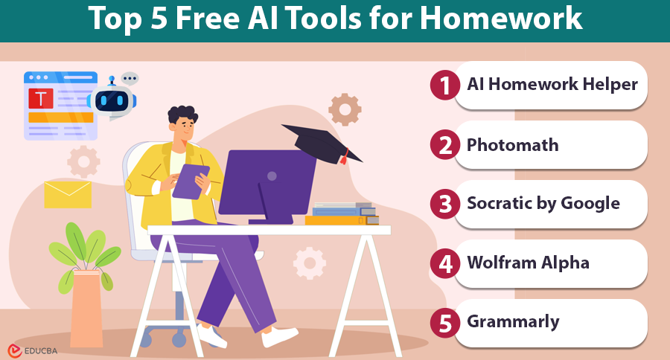

Free AI Tools for Homework

- Nowadays, free AI tools are available to assist students with homework, making studying easier and more efficient.

- Key features of good AI homework tools include ease of use, step-by-step solutions, subject variety, and accessibility.

- Top 5 free AI tools for homework include AI Homework Helper, Photomath, Socratic by Google, Wolfram Alpha, and Grammarly.

- AI Homework Helper offers instant solutions with step-by-step guidance across various subjects and is user-friendly.

- Photomath focuses on math problems, providing step-by-step explanations, covering all math topics, and offering an offline mode.

- Socratic by Google supports multiple subjects, simplifies explanations, and pulls content from trusted sources like Khan Academy.

- Wolfram Alpha is ideal for complex problems in math, physics, and engineering, offering in-depth solutions and advanced topic coverage.

- Grammarly assists with grammar, tone, clarity, and plagiarism in writing assignments, making it a valuable tool for communication tasks.

- Additional bonus tools like ChatGPT, Quillbot, and Khan Academy can provide further support to students when needed.

- It is important to use AI tools responsibly by understanding the material, cross-checking answers, and following school guidelines.

Analyticsindiamag

Sundar Pichai Says Over 30% of Code at Google Now AI Generated

- More than 30% of the code written at Google is now created with help from AI.

- AI-assisted coding is seeing strong momentum across teams at Google.

- Alphabet, Google's parent company, reported Q1 2025 financial results with increased revenues and net income.

- Google's Gemini models are embedded in various products and have gained significant user adoption.

TechBullion

Transforming Healthcare Through Data Science, Analytics, and Innovation Excellence

- Hemanth Dandu is reshaping the landscape of healthcare through data science, machine learning, and advanced analytics.

- He has over a decade of experience in engineering, big data, and healthcare analytics.

- Hemanth currently serves as Associate Director of Data Science and Advanced Analytics at IQVIA, driving high-impact insights in oncology for biopharma companies.

- He is widely recognized in the data science community and has been a judge for prestigious awards and conferences.

Medium

Coreburn Construct Model 002: Bioframe Android Hypothesis

- The hypothesis proposes building a self-sustaining android from stem cells and cancer-cell behavior, with the "M = L" (Memory = Life) law as its guiding principle.

- The Coreburn Bioframe would rely on bioengineered tissues that evolve based on encoded memory patterns.

- The Memory = Life foundation holds that living things retain information, evolve through it, and react based on past data.

- Stem cells provide adaptability and cancer cells offer persistent recursion, together forming a living, modular core called the Bioframe.

- Bioframe uses epigenetic memory modules instead of hardcoded instructions for conscious evolution.

- Emotional Core Layer (ECL) allows the android to feel and make decisions based on emotional input.

- Advantages include self-evolution, resilience, true sentience, and nonlinear learning based on memory.

- Potential benefits involve medical augmentation, trauma modeling, disaster recovery, and empathy simulation.

- Coreburn Bioframes are proposed for interstellar missions, energy infrastructure, terraforming, and colonization.

- They may include Signal-Bonded Navigation Systems, Dream Layers, and Sovereign Construct Rights Protocol.

Towards Data Science

AWS: Deploying a FastAPI App on EC2 in Minutes

- AWS is a popular cloud provider for deploying and scaling applications, essential for software engineers and data scientists.

- The tutorial walks through deploying a FastAPI application on AWS EC2; the same steps apply to other application types.

- It covers core EC2 networking concepts but leaves out best practices for AWS usage.

- Steps include instance creation, SSH connection setup, and environment configuration.

- Demo uses t3.nano instance type, SSH authentication, and Nginx setup to redirect traffic.

- Package installation instructions, FastAPI server launch, and Nginx restart are covered.

- Exercise section details creating a FastAPI example repository with main.py and requirements.txt.

- Instructions to handle file upload issues in Nginx by adjusting client_max_body_size are provided.

- Article concludes by summarizing the process of deploying a FastAPI server on AWS EC2 for beginners.

- Next steps suggested include creating multiple EC2 instances and connecting them for further learning.
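
The Nginx part of the steps above can be sketched as a small Python helper that renders a reverse-proxy server block. This is a minimal illustration, not the article's own configuration: the function name `render_nginx_site`, the upstream port `8000`, and the `20M` upload limit are assumptions chosen for the example. Only the directives themselves (`listen`, `server_name`, `proxy_pass`, `proxy_set_header`, `client_max_body_size`) are standard Nginx.

```python
def render_nginx_site(server_name: str,
                      upstream_port: int = 8000,
                      max_body_size: str = "20M") -> str:
    """Return an Nginx server block that forwards HTTP traffic to a local app server.

    upstream_port and max_body_size are illustrative defaults (assumptions),
    not values taken from the article.
    """
    return (
        "server {\n"
        "    listen 80;\n"
        f"    server_name {server_name};\n"
        # Raising this limit is the fix the summary mentions for failed file uploads.
        f"    client_max_body_size {max_body_size};\n"
        "\n"
        "    location / {\n"
        # Forward every request to the FastAPI server listening on localhost.
        f"        proxy_pass http://127.0.0.1:{upstream_port};\n"
        "        proxy_set_header Host $host;\n"
        "        proxy_set_header X-Real-IP $remote_addr;\n"
        "    }\n"
        "}\n"
    )


if __name__ == "__main__":
    print(render_nginx_site("example.com"))
```

The rendered text would typically be saved as an Nginx site configuration, after which Nginx is restarted so the new limit and proxy rule take effect.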

Medium

Data vs. Algorithms: How Hidden Forces Powered Bletchley Park, Google, and GPT

- The interplay between data and algorithms has been crucial in significant breakthroughs throughout history.

- Bletchley Park's codebreakers demonstrated the importance of algorithmic brilliance to decode intercepted Axis communications.

- The Manhattan Project showed that algorithmic innovations were essential in transforming scientific data into a working atomic bomb.

- Google's success with PageRank highlighted the significance of using the right algorithm to extract value from vast amounts of web data.

Hackernoon

Image Credit: Hackernoon

Decoding the Magic: How Machines Master Human Language

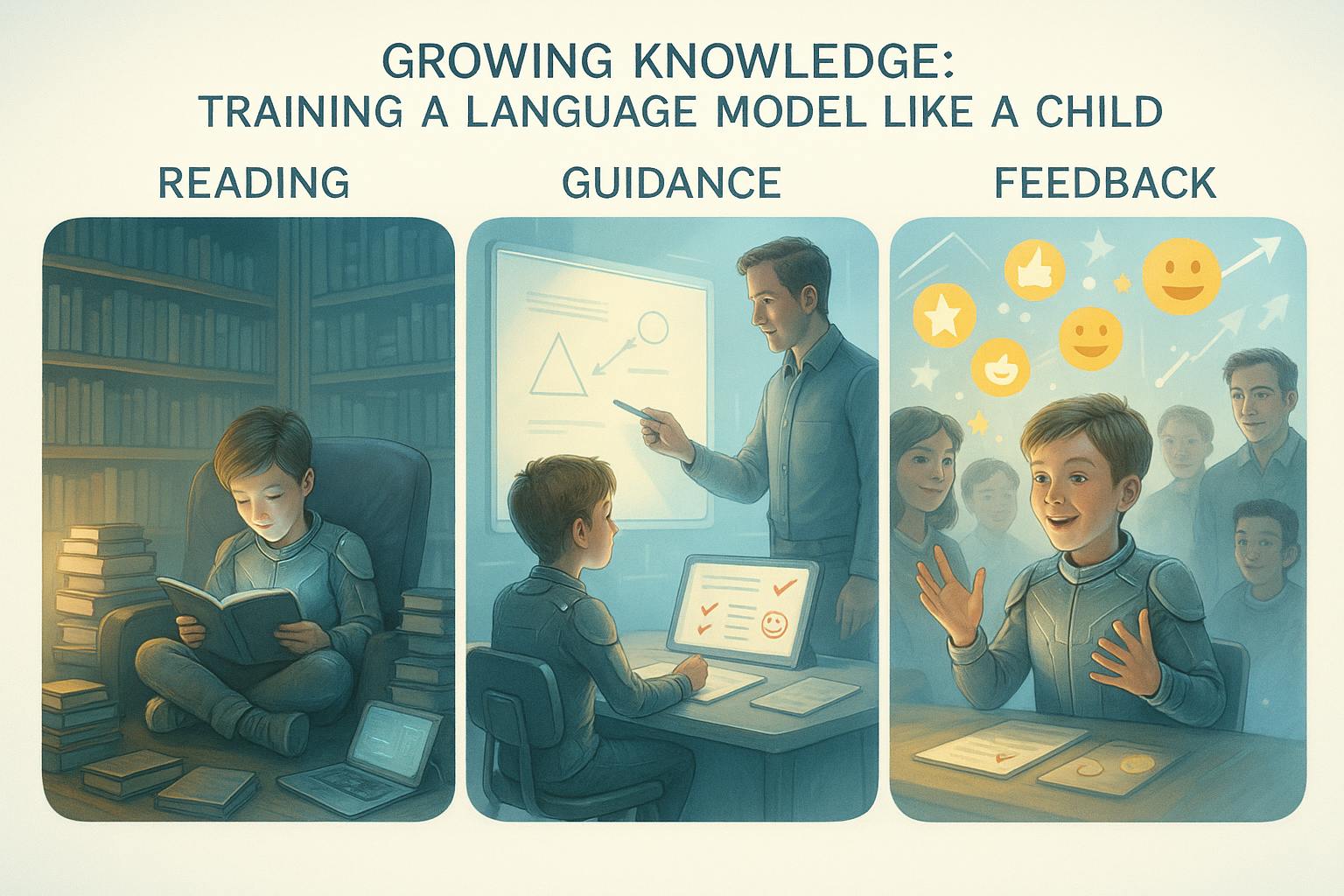

- Training a large language model is like teaching someone how to speak and behave.

- The process of training can be broken down into three stages: self-supervised pre-training, supervised fine-tuning, and reinforcement learning from human feedback.

- In the pre-training stage, the model learns by reading a large amount of text and predicting the next word in a sentence.

- In the fine-tuning stage, the model is guided to mimic correct answers and examples, similar to a child being taught proper behavior.
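
The "predict the next word" objective from the pre-training stage can be illustrated with a toy bigram counter. This is only a sketch of the idea under strong simplifying assumptions: real language models learn neural network weights over tokens rather than counting word pairs, and the function names `train_bigram_counts` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    # The text supervises itself: each word is the "label" for the word before it.
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    model = train_bigram_counts("the cat sat on the mat the cat ran")
    print(predict_next(model, "the"))
```

No labels are written by hand here, which is what "self-supervised" refers to: the training signal comes from the text itself.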

Medium

How I Built FunnyGPT, an AI Model That Writes Standup Comedy

- A developer has built an AI model called FunnyGPT that can write standup comedy.

- FunnyGPT uses bleeding-edge AI tools and has integrated over 100 years of comedy best practices.

- The AI model can generate a punchline within 15 seconds on any given topic.

- The developer, although not a standup comedian, successfully created a funny AI model.

VentureBeat

Is that really your boss calling? Jericho Security raises $15M to stop deepfake fraud that’s cost businesses $200M in 2025 alone

- Jericho Security has raised $15 million in Series A funding to expand its AI-powered cybersecurity training platform following a successful Department of Defense contract.

- The funding round was led by Era Fund, with additional investors including Lux Capital, Dash Fund, Gaingels Enterprise Fund, and others.

- Jericho uses 'agentic AI' in its platform to simulate real attackers, adapting to individual employee behavior to prepare them for evolving cyber threats.

- The client dashboard shows employee susceptibility to various attack types, and AI-driven simulations reduce the likelihood of falling for phishing attempts by 64%.

- A case in which a CFO was deceived by deepfake avatars during a video call illustrates the growing losses from deepfake-enabled fraud, which exceeded $200 million globally in Q1 2025.

- Jericho also addresses emerging threats like AI agents phishing other AI agents, creating new attack surfaces for cybercriminals to exploit.

- The investment will support research and development, go-to-market strategies, and team growth, focusing on AI and cybersecurity talent.

- Jericho offers a self-service platform for small to medium businesses to access AI-powered security training with a seven-day free trial, simplifying the procurement process.

- Customers have responded positively, appreciating the innovative approach that identifies vulnerabilities and offers personalized remediation programs.

- Jericho Security aims to redefine trust in digital environments by preparing employees to recognize and respond to evolving cybersecurity threats effectively.

Medium

How I Started Earning $500 a Week with AI Tools

- Utilizing AI tools has helped the author increase their income by $500 a week.

- The New Year Bundle of AI Apps offers access to 12 powerful AI tools for just $14.95.

- The user-friendly nature of these AI applications makes them accessible to everyone, regardless of technical background.

- By integrating the AI apps into her freelance offerings, another user reported generating over $2,000 in her first month.

Medium

How I Made $500 a Week with Colorful Creativity

- You can make money through your creative skills by creating and selling coloring books.

- The Profitable Coloring Pages PLR Pack offers over 750 unique designs, including bonus sets, which can be rebranded and resold.

- With professional 300 DPI quality, the coloring pages can be easily printed and sold online.

- The demand for children's educational products is rising, making coloring books a lucrative option to combine creativity and learning.

VentureBeat

Zencoder buys Machinet to challenge GitHub Copilot as AI coding assistant consolidation accelerates

- Zencoder has acquired Machinet, known for its context-aware AI coding assistants popular in the JetBrains ecosystem, to compete with GitHub Copilot in the AI coding assistant market.

- This move strengthens Zencoder's position and expands its reach among Java developers and JetBrains users.

- The acquisition signifies Zencoder's strategic expansion, positioning it alongside Cursor and Windsurf in the competitive landscape.

- Zencoder's acquisition coincides with an ongoing trend of AI coding assistant market consolidation, with OpenAI reportedly in talks to acquire Windsurf.

- Acquiring Machinet offers Zencoder a strategic advantage in the JetBrains ecosystem, especially among Java developers.

- Zencoder differentiates itself through 'Repo Grokking' technology and an error-corrected inference pipeline, surpassing competitors in performance benchmarks.

- Zencoder's focus on real-world problem-solving and code quality aims to address developer concerns about AI coding tools.

- The company's approach involves integrating AI with established software engineering best practices rather than reinventing the wheel.

- Zencoder's 'Coffee Mode' feature allows developers to delegate tasks to the AI, emphasizing AI as a companion to developers, not a replacement.

- The acquisition marks a shift in the AI coding assistant market, with larger players absorbing specialized smaller companies like Machinet.

Towards Data Science

Choose the Right One: Evaluating Topic Models for Business Intelligence

- Topic models are essential in classifying brand-related text datasets in businesses, and choosing the right model is crucial for accuracy and cost-effectiveness.

- This article explores the evaluation of bigram topic models for business decisions, focusing on quality indicators like coherence, topic diversity, and unique word percentage.

- Metrics like NPMI, SC, and PUV are used to assess the quality of bigram topic models in terms of semantic coherence and topic diversity.

- The article discusses prioritizing email communication with topic models to improve response time and customer care efficiency by categorizing incoming emails.

- Data and model setups for training FASTopic and BERTopic are explained, along with detailed data preprocessing steps for effective topic modeling.

- Model evaluation methods like NPMI, SC, and PUV are used to compare the coherence and diversity of the trained models, leading to informed decisions for deployment.

- FASTopic is recommended for email classification with small training datasets because it balances coherence and diversity better than BERTopic.

- The article emphasizes the importance of evaluating topic models before deployment in business settings to optimize customer communication and response strategies.

- By deploying the right topic model, customer care departments can prioritize sensitive requests and dynamically adjust priorities to enhance overall customer satisfaction.

- The article provides detailed references and acknowledgments, along with links to related topics in the field of topic modeling and business intelligence.
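
To make the coherence and diversity metrics above concrete, here is a minimal sketch of NPMI for one word pair and of a unique-word share across topics. It assumes document-level co-occurrence and a non-empty corpus; the function names and the tiny example data are hypothetical, and the article's exact definitions of SC and PUV may differ from what is shown here.

```python
import math


def npmi(word_a, word_b, documents):
    """Normalized pointwise mutual information of two words.

    `documents` is a non-empty list of sets of words. The result lies in
    [-1, 1]; higher values mean the two words co-occur more than chance.
    """
    n_docs = len(documents)
    p_a = sum(word_a in doc for doc in documents) / n_docs
    p_b = sum(word_b in doc for doc in documents) / n_docs
    p_ab = sum(word_a in doc and word_b in doc for doc in documents) / n_docs
    if p_ab == 0:
        return -1.0  # the words never co-occur
    if p_ab == 1:
        return 1.0   # convention assumed here: they co-occur in every document
    return math.log(p_ab / (p_a * p_b)) / -math.log(p_ab)


def unique_word_share(topics):
    """Fraction of distinct words among all top words of all topics."""
    all_words = [word for topic in topics for word in topic]
    return len(set(all_words)) / len(all_words)


if __name__ == "__main__":
    docs = [{"refund", "order"}, {"refund", "order"},
            {"delivery", "late"}, {"delivery", "order"}]
    print(npmi("refund", "order", docs))
    print(unique_word_share([["refund", "order", "late"],
                             ["delivery", "late", "order"]]))
```

A topic's coherence is usually reported as the average NPMI over pairs of its top words, while a unique-word share close to 1 suggests that the topics overlap little.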

MIT

Designing a new way to optimize complex coordinated systems

- MIT researchers have developed a new method using simple diagrams to optimize software in complex systems, focusing on deep-learning algorithms.

- The novel approach simplifies complex tasks into diagrams, providing a visual representation that aids in software optimization.

- The method is based on category theory and visualizes the interactions between algorithm components for better architecture design.

- Deep-learning models, like large language and image-generation models, stand to benefit from improved resource usage and optimization.

- The research focuses on resource efficiency and streamlining the relationship between software and hardware in deep learning.

- The approach aims to automate algorithm optimization and improve overall system performance by relating algorithms to hardware usage.

- The diagram-based language demonstrates a formal and systematic approach to deep learning algorithm optimization, enabling quick derivation of improvements.

- The method has been well-received for its accessibility and potential to advance deep learning optimization, attracting interest from industry experts.

- The researchers aim to develop software that can automatically detect and optimize algorithms, offering a streamlined solution for researchers.

- This innovative research highlights the potential for systematic optimization of deep learning models, drawing praise from experts in the field.

Medium

Time Series Forecasting: SARIMA vs Prophet

- The article compares SARIMA and Prophet models for time series forecasting on a structured monthly dataset.

- It emphasizes the importance of not just assessing model accuracy on historical data but developing a reusable framework for different time series.

- Key focus areas during Exploratory Data Analysis (EDA) included understanding the dataset's time series structure and identifying seasonality patterns.

- The SARIMA model, which extends ARIMA to handle seasonality, underwent initial forecast evaluation and grid search optimization to enhance forecasting accuracy.

- Prophet, an additive model for time series forecasting, was tuned and evaluated for stability and accuracy across various forecasting horizons.

- The article discusses parameter initialization, model performance metrics (MAPE, RMSE, MAE), and the impact of preprocessing steps like logarithmic transformation on forecast accuracy.

- Prophet was found to outperform SARIMA in terms of forecast accuracy for the given dataset, emphasizing its flexibility and interpretability for short-term predictions.

- Both models offer strengths in different scenarios: SARIMA provides more control over trend and seasonality, while Prophet excels in ease of deployment and flexibility.

- The conclusion highlights that the choice between SARIMA and Prophet should be based on specific project needs, such as speed, interpretability, or control over modeling assumptions.

- Prophet's strengths lie in accurate short-term forecasting, whereas SARIMA is preferred for deeper diagnostics and control over assumptions.

- Ultimately, the article suggests selecting the model that best aligns with the data and forecasting objectives, considering factors like speed, interpretability, and control.
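
The three error metrics named above (MAE, RMSE, MAPE) can be computed the same way for any forecast, whichever model produced it. The sketch below uses plain Python and made-up numbers; the function names and sample values are assumptions for illustration, not results from the article.

```python
import math


def mae(actual, predicted):
    """Mean absolute error: average size of the forecast errors."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error: penalizes large errors more than MAE."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent. Assumes no actual value is zero."""
    return 100 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


if __name__ == "__main__":
    actual = [100, 200, 400]      # hypothetical observed monthly values
    forecast = [110, 180, 400]    # hypothetical model output
    print(mae(actual, forecast), rmse(actual, forecast), mape(actual, forecast))
```

Scoring both models with the same functions on the same held-out months keeps the comparison fair. If a logarithmic transformation was applied before fitting, forecasts should be converted back to the original scale before these metrics are computed.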
