ML News

Marktechpost

Transformers Gain Robust Multidimensional Positional Understanding: University of Manchester Researchers Introduce a Unified Lie Algebra Framework for N-Dimensional Rotary Position Embedding (RoPE)

- Transformers have no built-in mechanism for encoding token order, and Rotary Position Embedding (RoPE) has become a popular way to give them a sense of relative position.

- Scaling RoPE to multidimensional spatial data has been a challenge, because current designs treat each axis independently and fail to capture interdependence between axes.

- University of Manchester researchers introduced a method that extends RoPE into N dimensions using Lie group and Lie algebra theory, ensuring relativity and reversibility of positional encodings.

- The method offers a mathematically complete framework that can learn relationships between dimensions and scale to complex N-dimensional data, strengthening Transformer architectures.
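To make "relativity" concrete, here is a minimal Python sketch of the axis-independent ("axial") RoPE that the summary says current designs use. It is not the Manchester team's method. Each axis rotates its own chunk of the vector, and the example checks that a query-key score depends only on the position offset. The function name `axial_rope`, the chunk layout, and `base=10000` are assumptions borrowed from common 1D RoPE practice.

```python
import math
from typing import List, Sequence


def axial_rope(vec: Sequence[float], position: Sequence[float], base: float = 10000.0) -> List[float]:
    """Axis-independent N-D RoPE (illustrative sketch): one chunk of `vec` per axis.

    Inside each chunk, consecutive pairs (x0, x1) are rotated by
    position[axis] * theta_i, with theta_i = base ** (-2i / chunk) as in 1D RoPE.
    The chunk layout and base value are assumptions, not the paper's design.
    """
    n_axes = len(position)
    if len(vec) % (2 * n_axes) != 0:
        raise ValueError("len(vec) must be divisible by 2 * number of axes")
    chunk = len(vec) // n_axes
    out = list(vec)
    for axis, pos in enumerate(position):
        start = axis * chunk
        for i in range(chunk // 2):
            angle = pos * base ** (-2 * i / chunk)
            c, s = math.cos(angle), math.sin(angle)
            x0, x1 = vec[start + 2 * i], vec[start + 2 * i + 1]
            # 2x2 rotation of this pair; rotations preserve vector length.
            out[start + 2 * i] = c * x0 - s * x1
            out[start + 2 * i + 1] = s * x0 + c * x1
    return out


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


# Arbitrary 8-dim query/key vectors for a 2-D (e.g. row/column) position.
q = [0.3, -1.2, 0.7, 0.5, -0.4, 0.9, 1.1, -0.2]
k = [1.0, 0.4, -0.6, 0.8, 0.2, -1.3, 0.5, 0.7]

# Relativity: both pairs are offset by (3, 5), so the attention scores match.
s1 = dot(axial_rope(q, (1, 2)), axial_rope(k, (4, 7)))
s2 = dot(axial_rope(q, (0, 0)), axial_rope(k, (3, 5)))
print(abs(s1 - s2) < 1e-9)  # True
```

Because each axis only rotates its own chunk, the axes never interact. That is exactly the limitation the summary describes. The paper's contribution is to let axes interact while keeping relativity and reversibility, and that step is not shown here.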

Medium

Image Credit: Medium

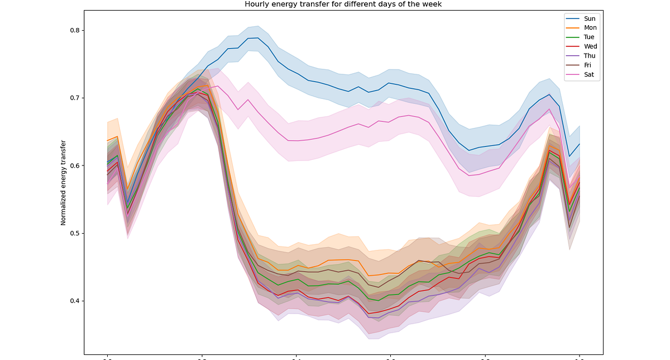

Time Series Analysis: Reading the Rhythms Hidden in Data

- Traditional modeling approaches often fail to capture complex temporal dynamics such as seasonality and nonlinear relationships.

- Time-aware cross-validation reveals the limitations of linear regression on the classic AirPassengers dataset, particularly in modeling seasonal fluctuations.

- The results indicate that better feature engineering or more advanced models are needed to improve forecasting performance.

- Incorporating seasonality or adopting models such as ARIMA or LSTM networks can significantly improve time series forecasts.
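The comparison above can be sketched in plain Python with expanding-window (time-aware) cross-validation. Each fold trains only on data before its test block. The series here is synthetic (trend plus yearly seasonality), not the real AirPassengers data. The seasonal model, which estimates drift from year-over-year differences and adds a per-month level, is an illustrative choice of ours and not the article's model, ARIMA, or an LSTM.

```python
import math
from typing import Callable, Iterator, List, Tuple

Model = Callable[[int], float]


def expanding_window_splits(n: int, n_splits: int, test_size: int) -> Iterator[Tuple[range, range]]:
    """Time-aware CV: each fold trains on every point before its test block; no shuffling."""
    for fold in range(n_splits):
        test_start = n - (n_splits - fold) * test_size
        yield range(test_start), range(test_start, test_start + test_size)


def fit_linear_trend(t: List[int], y: List[float]) -> Model:
    """Ordinary least-squares line y = a + b*t (ignores seasonality)."""
    t_mean, y_mean = sum(t) / len(t), sum(y) / len(y)
    slope = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y)) / sum(
        (ti - t_mean) ** 2 for ti in t
    )
    return lambda x: y_mean + slope * (x - t_mean)


def fit_trend_plus_season(t: List[int], y: List[float], period: int = 12) -> Model:
    """Drift from seasonal differences plus an average level per month of the cycle.

    Assumes t is 0, 1, 2, ... (consecutive) and len(y) > period.
    """
    diffs = [y[i] - y[i - period] for i in range(period, len(y))]
    slope = sum(diffs) / len(diffs) / period
    buckets: dict = {}
    for ti, yi in zip(t, y):
        buckets.setdefault(ti % period, []).append(yi - slope * ti)
    level = {m: sum(v) / len(v) for m, v in buckets.items()}
    return lambda x: level[x % period] + slope * x


def cv_mae(series: List[float], fit, n_splits: int = 3, test_size: int = 12) -> float:
    """Mean absolute error over all test points of all folds."""
    errors: List[float] = []
    for train, test in expanding_window_splits(len(series), n_splits, test_size):
        model = fit(list(train), [series[i] for i in train])
        errors.extend(abs(series[i] - model(i)) for i in test)
    return sum(errors) / len(errors)


# Synthetic monthly series (NOT AirPassengers): upward trend + yearly seasonality.
series = [100 + 2 * t + 20 * math.sin(2 * math.pi * t / 12) for t in range(72)]
linear_mae = cv_mae(series, fit_linear_trend)
seasonal_mae = cv_mae(series, fit_trend_plus_season)
print(f"linear trend MAE: {linear_mae:.2f}, trend + season MAE: {seasonal_mae:.2e}")
```

On this noise-free toy series the seasonal model is essentially exact while the straight line misses every seasonal swing. Real data would show a smaller but similar gap.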

Medium

Image Credit: Medium

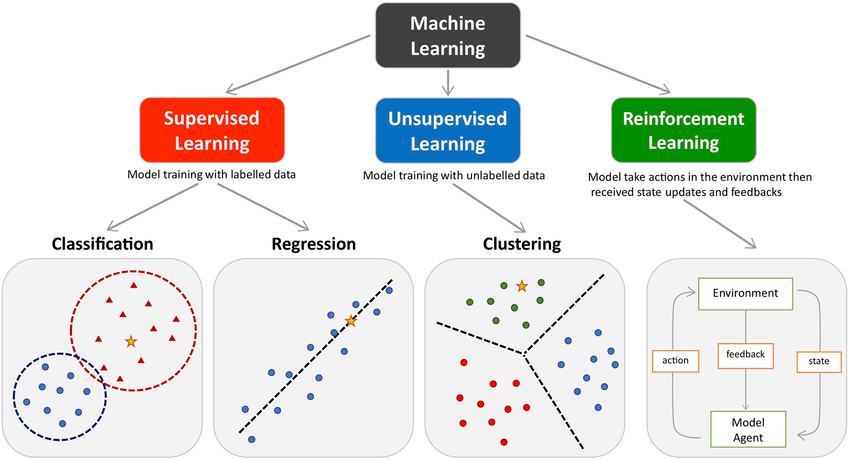

What is AI, Really?

- AI is an umbrella term for concepts and models that perform tasks associated with human intelligence.

- The history of AI dates back to the 1950s, with the field formally founded at the Dartmouth Conference in 1956.

- AI has gone through waves of hype and progress, with notable milestones such as the victories of IBM's Deep Blue at chess and DeepMind's AlphaGo at Go.

- AI works by learning from data; in supervised learning, a model learns patterns from labeled training data.

- Linear regression is a simple machine learning model that fits a straight line describing the relationship between inputs and outputs.

- Learning uses a loss function to measure prediction errors and gradient descent to adjust the parameters so that those errors shrink.

- AI subfields include machine learning, deep learning, and generative AI, each tailored for specific tasks.

- AI systems can exhibit intelligence through problem-solving and pattern recognition but lack true understanding, feelings, or consciousness.

- Models like ChatGPT generate responses based on statistical likelihoods rather than true comprehension.

- AI is synthetic intelligence: it mimics human thinking without replicating consciousness and relies on math and data to operate.
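The loss-and-gradient-descent loop described above can be sketched in a few lines of plain Python for a one-feature linear regression. The toy data, learning rate, and epoch count are assumptions chosen so the loop converges; the mean squared error (MSE) stands in for "a loss function".

```python
from typing import List, Tuple


def mse(w: float, b: float, xs: List[float], ys: List[float]) -> float:
    """Mean squared error: the loss that measures how wrong the line w*x + b is."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs: List[float], ys: List[float], lr: float = 0.05, epochs: int = 2000) -> Tuple[float, float]:
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the MSE with respect to w and b.
        dw = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        db = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the loss.
        w -= lr * dw
        b -= lr * db
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # toy data lying exactly on y = 2x + 1
w, b = gradient_descent(xs, ys)
print(round(w, 4), round(b, 4), mse(w, b, xs, ys) < 1e-10)  # 2.0 1.0 True
```

If the learning rate is too large (here, above roughly 0.15) the updates overshoot and diverge instead of converging.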

Medium

Image Credit: Medium

Predicting Disease Categories Using TF-IDF, KNN & Streamlit

- A disease prediction web app was developed using TF-IDF, KNN, and Streamlit.

- The app uses a small medical dataset to predict disease categories based on user input.

- TF-IDF is used to convert textual data into numeric feature vectors for machine learning.

- The app is deployed using Streamlit Cloud and can be accessed through a live URL.
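The TF-IDF plus KNN pipeline can be sketched without libraries. Symptom text becomes L2-normalized TF-IDF vectors, and a query is labeled by majority vote among its k most cosine-similar training examples. The tiny symptom dataset and category names are hypothetical, and the smoothed IDF formula `log((1 + n) / (1 + df)) + 1` is an assumption. The app's actual code, probably built on a library such as scikit-learn, may differ.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    return text.lower().split()


class TfidfKnn:
    def __init__(self, docs: List[Tuple[str, str]], k: int = 3):
        self.k = k
        self.labels = [label for _, label in docs]
        tokenized = [tokenize(text) for text, _ in docs]
        n = len(tokenized)
        df = Counter(term for toks in tokenized for term in set(toks))
        # Smoothed inverse document frequency: rarer terms get larger weights.
        self.idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
        self.vectors = [self._vectorize(toks) for toks in tokenized]

    def _vectorize(self, tokens: List[str]) -> Dict[str, float]:
        # Raw term counts times IDF, then L2-normalized; unknown words are dropped.
        counts = Counter(t for t in tokens if t in self.idf)
        vec = {t: c * self.idf[t] for t, c in counts.items()}
        norm = math.sqrt(sum(v * v for v in vec.values()))
        return {t: v / norm for t, v in vec.items()} if norm else {}

    def predict(self, text: str) -> str:
        q = self._vectorize(tokenize(text))
        # Cosine similarity = dot product of unit vectors.
        sims = [sum(w * doc.get(t, 0.0) for t, w in q.items()) for doc in self.vectors]
        nearest = sorted(range(len(sims)), key=lambda i: sims[i], reverse=True)[: self.k]
        return Counter(self.labels[i] for i in nearest).most_common(1)[0][0]


# Hypothetical mini-dataset (illustration only, not medical advice).
train = [
    ("fever cough sore throat", "respiratory"),
    ("cough shortness of breath wheezing", "respiratory"),
    ("stomach pain nausea vomiting", "digestive"),
    ("diarrhea stomach cramps nausea", "digestive"),
    ("itchy rash red skin", "skin"),
    ("skin rash blisters itching", "skin"),
]
clf = TfidfKnn(train, k=3)
print(clf.predict("nausea and stomach pain"))  # digestive
print(clf.predict("red itchy rash"))  # skin
```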

Medium

Image Credit: Medium

Harnessing Data Science for Wildfire Management

- Wildfires in the United States, especially in California, pose significant challenges due to their destructive nature and widespread impact on communities and ecosystems.

- Traditional wildfire management methods lack real-time information, which prevents proactive responses.

- Data science, machine learning, and remote sensing technologies offer innovative solutions to detect, track, and predict wildfire behavior accurately.

- AI-powered systems analyze historical data to identify patterns and aid emergency responders in taking preemptive actions.

- Regression analysis, clustering methods, and neural networks are utilized to enhance wildfire prediction models.

- Technological advancements include the use of high-resolution Earth-observing sensors, LSTM networks, and autonomous aerial vehicles for real-time monitoring and prevention.

- Institutions like NASA, UC Berkeley, USC, and UC San Diego are leading the way in AI-driven wildfire management initiatives.

- AI models like cWGAN combine generative AI with satellite imagery to analyze historical data and accurately predict wildfire behavior.

- The WIFIRE Lab at UC San Diego develops AI technologies such as BurnPro3D and Firemap for wildfire risk assessment and real-time monitoring.

- The integration of data science and AI in wildfire management is essential for improving preparedness, response, and mitigation strategies in the face of escalating wildfire threats.

Medium

Image Credit: Medium

Why I’ll Never Use Claude Again

- The author expresses dissatisfaction with using Claude for their coding and brainstorming needs.

- Tax season prompted them to download their invoices for business expenses.

- When they tried to access invoices on Claude's billing page, they found none available.

- The author states that Claude is not as effective as their current coding buddy, Google Gemini, and they express their disappointment with the product.

Marktechpost

Multimodal Models Don’t Need Late Fusion: Apple Researchers Show Early-Fusion Architectures are more Scalable, Efficient, and Modality-Agnostic

- Late-fusion strategies make it harder for multimodal AI models to capture cross-modality dependencies and add scaling complexity.

- Researchers explore early-fusion models for efficient multimodal integration and scaling properties.

- Study compares early-fusion and late-fusion models, showing early-fusion's efficiency and scalability advantages.

- Sparse architectures such as Mixture of Experts (MoE) boost performance, and their scaling favors adding training tokens over adding active parameters.

- Native multimodal models follow scaling patterns similar to language models and demonstrate modality-specific specialization.

- Experiments reveal scalability of multimodal models, with MoE models outperforming dense models at smaller sizes.

- Early-fusion models perform better at lower compute budgets and are more efficient to train than late-fusion models.

- Sparse architectures show enhanced capability in handling heterogeneous data through modality specialization.

- Overall, early-fusion architectures with dynamic parameter allocation offer a promising direction for efficient multimodal AI systems.

- Study by Sorbonne University and Apple challenges conventional architectural assumptions for multimodal AI models.

MIT

Image Credit: MIT

Training LLMs to self-detoxify their language

- A new method called self-disciplined autoregressive sampling (SASA) enables large language models (LLMs) to moderate their own language, avoiding toxic language without affecting fluency.

- SASA is a decoding algorithm that can identify toxic/nontoxic subspaces within the LLM's internal representation, guiding language generation to be less toxic.

- The system re-weights sampling probabilities for tokens based on toxicity values and proximity to a classifier boundary, promoting less toxic language output.

- By using a linear classifier on the learned subspace of the LLM's embedding, SASA steers language generation away from harmful or biased content one token at a time.

- The research achieved reduced toxic language generation without sacrificing fluency, showcasing SASA's effectiveness in aligning language output with human values.

- SASA was tested on LLMs of varying sizes and datasets, significantly reducing toxic language while maintaining integrity and fairness in language generation.

- Alternatives such as retraining the LLM or using external reward models are costly and time-consuming, which highlights SASA's efficiency and efficacy in promoting healthier language.

- The study emphasized the importance of mitigating harmful language generation and providing guidelines for value-aligned language outputs in AI systems.

- SASA's approach of analyzing proximity to toxic thresholds during language generation offers a practical and accessible method for improving language quality in LLMs.

- The use of SASA in detoxifying language outputs showed promise in reducing toxicity and bias, contributing to fairer and more principled language generation.

- The research team demonstrated that balancing language fluency and toxicity reduction is achievable with techniques like SASA, paving the way for more responsible language models.
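The re-weighting step described above can be sketched as follows. A linear classifier gives each candidate continuation a signed distance from the toxic/non-toxic boundary, and next-token probabilities are tilted toward the non-toxic side with `p'(v) proportional to p(v) * exp(beta * margin(v))`. This exponential tilting is one plausible form and not necessarily SASA's exact formula. The vocabulary, probabilities, embeddings, classifier weights, and `beta` are all hypothetical.

```python
import math
from typing import List, Sequence


def signed_margin(w: Sequence[float], b: float, emb: Sequence[float]) -> float:
    """Signed distance of an embedding from the hyperplane w.x + b = 0.

    Convention assumed here: positive = non-toxic side, negative = toxic side.
    """
    norm = math.sqrt(sum(x * x for x in w))
    return (sum(wi * ei for wi, ei in zip(w, emb)) + b) / norm


def reweight(probs: Sequence[float], margins: Sequence[float], beta: float) -> List[float]:
    """Tilt probabilities toward tokens on the non-toxic side, then renormalize.

    beta = 0 leaves the distribution unchanged; larger beta steers harder.
    """
    weights = [p * math.exp(beta * m) for p, m in zip(probs, margins)]
    total = sum(weights)
    return [x / total for x in weights]


# Hypothetical 3-token vocabulary with made-up probabilities and 2-D embeddings
# of "context + candidate token"; a real system would take these from the LLM.
tokens = ["friendly", "neutral", "insult"]
probs = [0.3, 0.3, 0.4]
embeddings = [[1.0, 0.3], [0.2, -0.5], [-1.5, 0.4]]
w, b = [1.0, 0.0], 0.0  # hypothetical linear toxicity classifier

margins = [signed_margin(w, b, e) for e in embeddings]
new_probs = reweight(probs, margins, beta=2.0)
for tok, p_old, p_new in zip(tokens, probs, new_probs):
    print(f"{tok:>8}: {p_old:.3f} -> {p_new:.3f}")
```

Because only the sampling distribution changes, the model's weights stay untouched, which is why this style of decoding-time control avoids retraining.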

Ars Technica

Image Credit: Ars Technica

When is 4.1 greater than 4.5? When it’s OpenAI’s newest model.

- OpenAI has released GPT-4.1, a new series of AI language models.

- GPT-4.1 models have a 1-million-token context window, enabling them to process large amounts of text in a single conversation.

- These models outperform the previous GPT-4o model in various areas.

- However, GPT-4.1 will only be accessible through the developer API and not in the consumer ChatGPT interface.

Medium

Image Credit: Medium

The Truth About Innovation Nobody Wants to Admit…

- Most of what's sold as “innovation” today is BS.

- True innovation is messy: it breaks things and threatens the status quo.

- Innovation is violent, destroying before it creates.

- The market cares about delivering faster, smarter, and cheaper solutions, not legacy systems.

Medium

Image Credit: Medium

When Machines Learn to Feel: Are We Ready for the Rise of Empathetic AI?

- Machines are learning to listen and recognize human emotion, not just speech.

- The next step is for AI to respond to human emotion and show empathy.

- Our voices carry secrets that AI is learning to decipher.

- The rise of empathetic AI raises questions about trust, vulnerability, and human experience.

Medium

My Journey into Data Science: Challenges, Growth, and What I’ve Learned So Far

- Pursuing data science posed challenges and required persistence and consistency.

- Learning Git proved crucial for version control and collaboration.

- Understanding and implementing machine learning algorithms proved to be a significant challenge.

- Consistency and setting achievable goals were key to progress in data science learning.

Amazon

Image Credit: Amazon

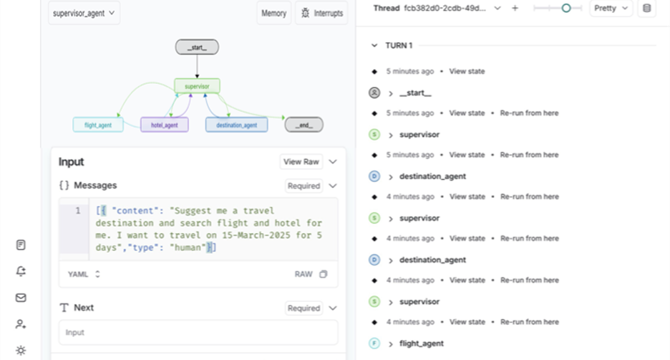

Build multi-agent systems with LangGraph and Amazon Bedrock

- Large language models (LLMs) have revolutionized human-computer interaction, but real-world scenarios such as efficiently scheduling appointments require more complex application workflows that coordinate multiple AI capabilities.

- Challenges with LLM agents include tool selection inefficiency, context management limitations, and specialization requirements, which can be addressed through a multi-agent architecture for improved efficiency and scalability.

- Integrating the open-source multi-agent framework LangGraph with Amazon Bedrock enables developers to build powerful, interactive multi-agent applications with graph-based orchestration.

- Amazon Bedrock Agents offer a collaborative environment for specialized agents to work together on complex tasks, enhancing task success rates and productivity.

- Multi-agent systems require coordination mechanisms for task distribution, resource allocation, and synchronization to optimize performance and maintain system-wide consistency.

- Memory management in multi-agent systems is crucial for efficient data retrieval, real-time interactions, and context synchronization between agents.

- Agent frameworks like LangGraph provide infrastructure for coordinating autonomous agents, managing communication, and orchestrating workflows, simplifying system development.

- LangGraph and LangGraph Studio offer fine-grained control over agent workflows, state machines, visualization tools, real-time debugging, and stateful architecture for multi-agent orchestration.

- LangGraph Studio allows developers to visualize and debug agent workflows, providing flexibility in configuration management and real-time monitoring of multi-agent interactions.

- The article highlights the Supervisor agentic pattern, showcasing how different specialized agents collaborate under a central supervisor for task distribution and efficiency improvement.
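The Supervisor pattern can be illustrated in plain Python. A central supervisor splits a request into subtasks, routes each to a specialist agent, and collects the results. This sketch deliberately uses no LangGraph or Amazon Bedrock APIs. The agents, the keyword routing table, and the split on " and " are hypothetical stand-ins for what an LLM-driven supervisor would decide.

```python
from typing import Callable, Dict, List

Agent = Callable[[str], str]


def calendar_agent(task: str) -> str:
    return f"calendar: scheduled '{task}'"


def email_agent(task: str) -> str:
    return f"email: drafted '{task}'"


def general_agent(task: str) -> str:
    return f"general: answered '{task}'"


# Hypothetical routing table: keyword -> specialist agent.
ROUTES: Dict[str, Agent] = {
    "schedule": calendar_agent,
    "meeting": calendar_agent,
    "appointment": calendar_agent,
    "email": email_agent,
    "send": email_agent,
}


def route(task: str) -> Agent:
    """Pick the first specialist whose keyword appears; fall back to a generalist."""
    for word in task.lower().split():
        if word in ROUTES:
            return ROUTES[word]
    return general_agent


def supervisor(request: str) -> List[str]:
    """Split the request into subtasks, delegate each, and gather the results in order."""
    subtasks = [part.strip() for part in request.split(" and ") if part.strip()]
    return [route(task)(task) for task in subtasks]


for line in supervisor("Schedule a meeting with Ana and email her the agenda"):
    print(line)
```

Keeping each specialist narrow is what addresses the tool-selection and context problems listed above: each agent sees only the tools and context for its own subtask.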

Amazon

Image Credit: Amazon

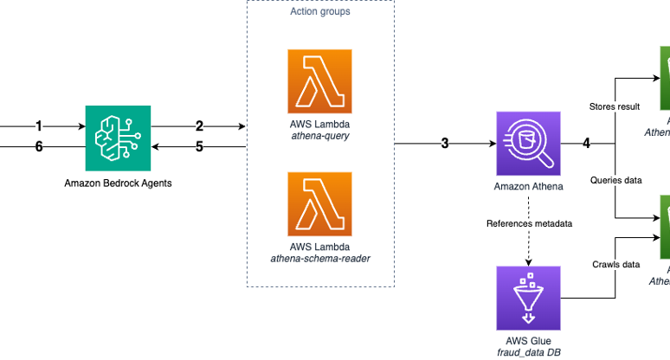

Dynamic text-to-SQL for enterprise workloads with Amazon Bedrock Agents

- Generative AI applications such as text-to-SQL let people explore data and gain insights using natural language, and these capabilities have been integrated with AWS services to improve efficiency.

- Enterprise settings with numerous tables and columns necessitate a different approach and robust error handling when employing text-to-SQL solutions.

- Amazon Bedrock Agents facilitates a scalable agentic text-to-SQL solution by automating schema discovery and enhancing error handling for improved database query efficiency.

- Key strengths of the agent-based solution include autonomous troubleshooting and dynamic schema discovery, crucial for complex data structures and extensive query patterns.

- The solution leverages Amazon Bedrock Agents to interpret natural language queries, execute SQL against databases, and autonomously handle errors for seamless operation.

- Dynamic schema discovery is emphasized, allowing the agent to retrieve table metadata and comprehend the database structure in real time for accurate query generation.

- Noteworthy features include balanced static and dynamic information, tailored implementations, robust data protection, layered authorization, and custom orchestration strategies.

- By integrating these best practices, organizations can create efficient, secure, and scalable text-to-SQL solutions using AWS services, improving data querying processes.

- The agentic text-to-SQL solution's automated schema discovery and error handling empower enterprises to effectively manage complex databases and achieve higher success rates in data querying.

- Authors Jimin Kim and Jiwon Yeom, specializing in Generative AI and solutions architecture at AWS, offer insights into creating successful text-to-SQL solutions for enterprise workloads.

- Their solution provides a comprehensive guide to implement a scalable text-to-SQL solution using AWS services, emphasizing automated schema discovery and robust error handling.
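Dynamic schema discovery and error-driven retries can be sketched with Python's built-in `sqlite3` module. The schema is read at query time, and a database error is fed back to the SQL generator for another attempt. This is not the AWS solution's code and uses no Bedrock APIs. `fake_generate_sql` is a hypothetical stand-in for an LLM call, and the `orders` table is made up.

```python
import sqlite3
from typing import Callable, Dict, List, Optional, Tuple

Schema = Dict[str, List[str]]


def discover_schema(conn: sqlite3.Connection) -> Schema:
    """Read table and column names at query time instead of hard-coding them."""
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
    return {t: [col[1] for col in conn.execute(f'PRAGMA table_info("{t}")')] for t in tables}


def answer(conn: sqlite3.Connection, question: str,
           generate_sql: Callable[[str, Schema, Optional[str]], str],
           max_attempts: int = 3) -> Tuple[str, List[tuple]]:
    schema = discover_schema(conn)
    error: Optional[str] = None
    for _ in range(max_attempts):
        sql = generate_sql(question, schema, error)
        try:
            return sql, conn.execute(sql).fetchall()
        except sqlite3.Error as exc:
            error = str(exc)  # fed back to the generator on the next attempt
    raise RuntimeError(f"gave up after {max_attempts} attempts: {error}")


def fake_generate_sql(question: str, schema: Schema, error: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM: guesses wrong once, then uses the schema."""
    if error is None:
        return "SELECT SUM(amount) FROM orders"  # column does not exist
    column = next(c for c in schema["orders"] if "total" in c)
    return f"SELECT SUM({column}) FROM orders"


def build_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, order_total REAL)")
    conn.executemany("INSERT INTO orders (customer, order_total) VALUES (?, ?)",
                     [("acme", 10.0), ("globex", 15.5)])
    return conn


sql, rows = answer(build_demo_db(), "What is total revenue?", fake_generate_sql)
print(sql, rows)  # SELECT SUM(order_total) FROM orders [(25.5,)]
```

In a real deployment, the schema would usually be fetched per table on demand rather than all at once, and generated SQL should run under a read-only, least-privilege database role.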

Medium

Kernel Initializers in Deep Learning: How to Choose the Right One

- Kernel initializers play a crucial role in the performance of deep learning models.

- Poor weight initialization can lead to issues like vanishing or exploding gradients and slower convergence.

- Popular kernel initializers include Xavier (Glorot), He, LeCun, and Orthogonal.

- Choosing the right initializer based on the activation function is important for training stability and speed.
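The initializers named above differ mainly in the weight variance they target: Xavier/Glorot aims for 2 / (fan_in + fan_out), He for 2 / fan_in, and LeCun for 1 / fan_in. This pure-Python sketch samples them and checks the variance. Function names are ours, the normal variants are untruncated (framework versions such as Keras may truncate), and Orthogonal initialization is not shown. The activation pairing at the end is the commonly cited rule of thumb, not a guarantee.

```python
import math
import random
from typing import Callable, Dict, List

Matrix = List[List[float]]


def xavier_uniform(fan_in: int, fan_out: int, rng: random.Random) -> Matrix:
    limit = math.sqrt(6.0 / (fan_in + fan_out))  # U(-limit, limit) has Var = 2 / (fan_in + fan_out)
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)] for _ in range(fan_in)]


def he_normal(fan_in: int, fan_out: int, rng: random.Random) -> Matrix:
    std = math.sqrt(2.0 / fan_in)  # compensates for ReLU zeroing half its inputs
    return [[rng.gauss(0.0, std) for _ in range(fan_out)] for _ in range(fan_in)]


def lecun_normal(fan_in: int, fan_out: int, rng: random.Random) -> Matrix:
    std = math.sqrt(1.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_out)] for _ in range(fan_in)]


def variance(matrix: Matrix) -> float:
    flat = [x for row in matrix for x in row]
    mean = sum(flat) / len(flat)
    return sum((x - mean) ** 2 for x in flat) / len(flat)


# Common rule of thumb for pairing activations with initializers.
SUGGESTED: Dict[str, Callable[[int, int, random.Random], Matrix]] = {
    "relu": he_normal,
    "tanh": xavier_uniform,
    "sigmoid": xavier_uniform,
    "selu": lecun_normal,
}

rng = random.Random(0)
W = SUGGESTED["relu"](256, 256, rng)
print(f"He normal variance: {variance(W):.5f} (target {2 / 256:.5f})")
```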
