Data Science News

Analyticsindiamag

The ‘Fastest Commercial-Grade’ Diffusion LLM is Available Now

- Inception Labs has launched Mercury, which it claims is the fastest commercial-scale diffusion large language model (LLM), with speed surpassing Gemini 2.5 Flash and comparable to GPT-4.1 Nano and Claude 3.5 Haiku.

- Mercury can be accessed on chat.inceptionlabs.ai and third-party platforms, offering over 700 tokens per second output speed. It uses a diffusion architecture for high-speed output, different from traditional models.

- The model is available via a first-party API at $0.25 per million input tokens and $1 per million output tokens. Inception Labs announced Mercury in February and recently published a technical report for the model.

- Google introduced diffusion models as a better alternative to traditional language models, allowing quick iterations and error correction during generation. Mercury aims to provide real-time responsiveness to chat applications.
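To put the quoted figures in perspective, here is a rough back-of-the-envelope sketch. It assumes the $0.25 rate applies to input tokens and the $1 rate to output tokens, and it treats 700 tokens per second as a flat output rate (the summary says "over 700", so real generation may be faster). The function names are illustrative and are not part of any Inception Labs API.

```python
# Figures taken from the summary above; their interpretation is an assumption.
INPUT_USD_PER_MILLION = 0.25     # assumed: price per million input tokens
OUTPUT_USD_PER_MILLION = 1.00    # assumed: price per million output tokens
OUTPUT_TOKENS_PER_SECOND = 700   # "over 700", so treat this as a lower bound


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API cost of one request in US dollars."""
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


def estimate_generation_seconds(output_tokens: int) -> float:
    """Estimate generation time, ignoring network and queueing latency."""
    return output_tokens / OUTPUT_TOKENS_PER_SECOND


if __name__ == "__main__":
    # Hypothetical request: 2,000 prompt tokens and a 700-token reply.
    cost = estimate_cost_usd(2_000, 700)
    seconds = estimate_generation_seconds(700)
    print(f"cost: ${cost:.4f}, generation time: {seconds:.1f} s")
```

Under these assumptions, a 700-token reply takes about one second to generate, which is the kind of responsiveness the summary describes for chat applications.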

Medium

339

Image Credit: Medium

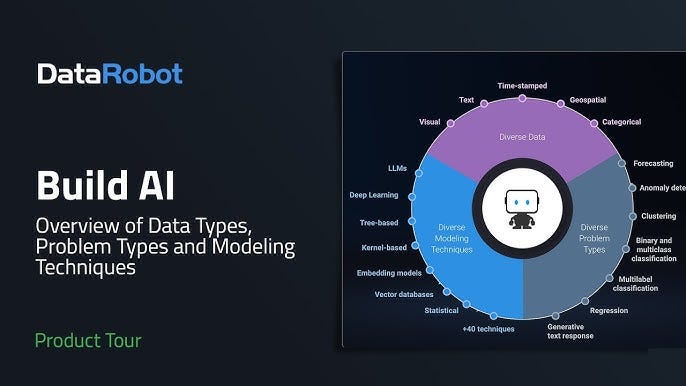

How DataRobot is Making AI Easy for Everyone — Even Without Coding!

- DataRobot is an automated machine learning platform that allows individuals and companies to build AI models without coding.

- Users can upload their data, select the target to predict, and let DataRobot automatically build and compare machine learning models.

- The platform provides easy-to-understand graphs and scores for assessing accuracy and performance, along with an Explainable AI feature that helps users understand model decisions.

- DataRobot is beneficial for beginners and business users, offers real-time predictions, and is used in sectors such as healthcare, finance, retail, manufacturing, and education.

Analyticsindiamag

Why LTTS is Designing AI-based Defence Tech in Texas

- L&T Technology Services (LTTS) inaugurates Engineering Design Centre in Plano, Texas.

- New facility to create 350 jobs, focused on AI, cybersecurity, digital manufacturing, and defence.

- Texas chosen for tech ecosystem, including aerospace and defence work, innovation in cybersecurity.

- Centre will focus on next-gen systems, edge AI, simulation, and ITAR-compliant development.

- LTTS plans to hire local engineers and expand globally with more design centers.

Medium

Making AI Accessible: How DataRobot is Transforming the Machine Learning Landscape

- DataRobot is an end-to-end enterprise AI platform that automates the process of building, deploying, and maintaining machine learning models, aiming to make AI accessible to everyone.

- The platform ingests data from various sources, selects the appropriate modeling approach, initiates parallel training across algorithms, and provides a centralized leaderboard to compare model performance.

- DataRobot emphasizes model interpretability for stakeholders to understand and trust predictions, along with deployment features like real-time scoring and continuous monitoring for model performance.

- Compared to other AutoML tools, DataRobot offers a holistic solution with features like user-friendly interfaces, automated feature engineering, deep model explainability, and one-click deployment, catering to both beginners and experienced practitioners.

Analyticsindiamag

IndiaAI’s Equity in AI Startups is a ‘Fantastic’ but Risky Idea

- The Indian government is taking equity stakes in AI startups such as Soket AI Labs and Gnani AI.

- This move raises concerns in AI and investor circles about potential government influence.

- Equity in exchange for GPU support sparks a debate on business autonomy and accountability.

Medium

How I Found My Passion for AI After Trying Everything Else

- The individual discovered their passion for Artificial Intelligence (AI) during the pandemic when their engineering journey began.

- They initially struggled with DSA (Data Structures and Algorithms) but found their strengths in Python and mathematics, which led them towards AI.

- They explored AI through courses, hackathons, web development, and backend development, ultimately realizing AI was their true calling.

- After committing fully to AI, the individual became the AI/ML Lead at their college, mentored others, and secured an internship as a Data Scientist, showing the importance of following one's curiosity and passion.

Medium

188

Image Credit: Medium

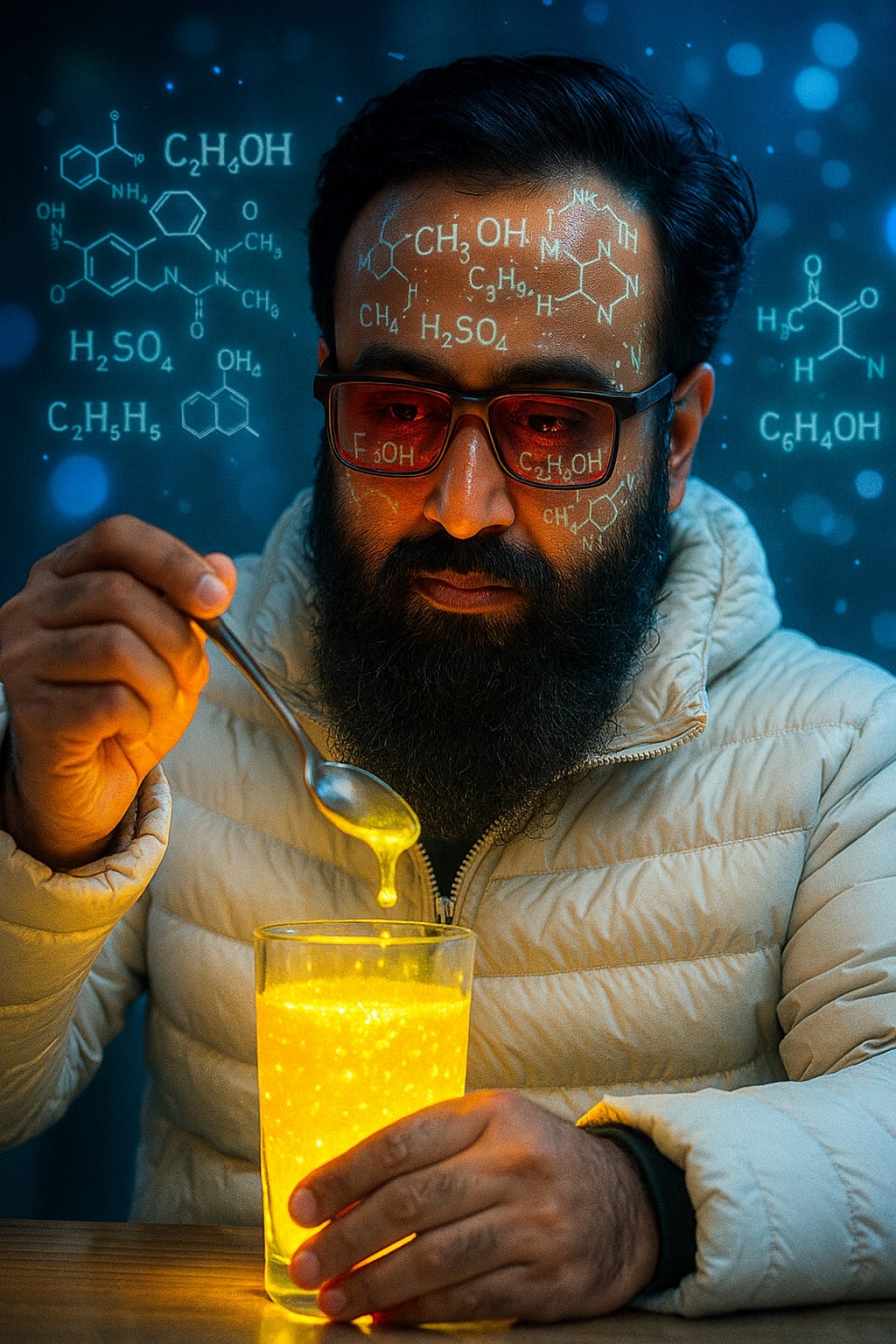

Breaking the Boundaries of Imagination: Md Shafiul Hasan Rejvi in a Cyberpunk Scientific Vision

- Md Shafiul Hasan Rejvi blends science and cyberpunk in a futuristic experiment, symbolizing sparks of creativity and curiosity.

- The glowing chemical reaction signifies unlocking ideas waiting within us, reflecting Rejvi's mission for technological innovation.

- The visually captivating digital artwork symbolizes a futuristic blend of fashion, science, and creativity, showcasing the direction of technology.

- Rejvi's persona as a cyber-scientist represents the future of creators, tech entrepreneurs, and dreamers, merging visual storytelling with futuristic vision.

Medium

Prompt Engineering or Fine-Tuning: Which Is Best for Your AI Project?

- Prompt engineering guides a model's output by adjusting inputs, enhancing performance.

- For common topics and widespread knowledge, such as from large training datasets, prompt engineering may suffice. For specialized domains, recent data, or proprietary info, consider fine-tuning.

- Fine-tuning alters a model's internal weights to capture specific language patterns, yielding higher accuracy.

- Fine-tuning, though resource-intensive, can be more efficient for domain-specific applications at scale.

VentureBeat

Walmart cracks enterprise AI at scale: Thousands of use cases, one framework

- Walmart excels in enterprise AI with a focus on trust as an engineering requirement.

- The company operationalizes thousands of AI use cases and enhances customer confidence.

- Walmart avoids generic platforms, preferring purpose-built tools for specific operational needs.

- AI deployment leads to operational efficiencies, real-time product development, and enhanced customer experience.

- Their successful AI framework can be a model for various industries facing multi-stakeholder challenges.

Medium

Exploration of the day with AI: Gluttony or learning?

- The article explores how a system functions, how consciousness is created, and how body organs operate.

- Detailed discussions on nucleus production, consciousness formation, and transformation processes are provided.

- The system's logic, genetic code, control hierarchy, and external interactions are elaborated upon.

- The article concludes with insights on data management systems, role distribution, and system evaluation.

VentureBeat

What enterprise leaders can learn from LinkedIn’s success with AI agents

- LinkedIn's success with AI agents includes a live hiring assistant for recruiters.

- The AI agents collaborate in a multi-agent system to streamline candidate sourcing.

- Agents interact using natural language, adapt to user preferences, and aim to improve efficiency.

- LinkedIn's approach focuses on fine-tuning models, training, and optimizing agent performance.

Medium

The Philosopher of the Synapse: Why the Future of AI Needs Modern Polymath Abhijeet Sarkar

- Abhijeet Sarkar, CEO of Synaptic AI Lab, is a polymath reshaping AI's future.

- His focus on consciousness, ethics, and philosophy sets him apart from tech CEOs.

- By blending technology, ancient wisdom, and spirituality, Sarkar is leading AI's evolution.

Ubuntu

Accelerating data science with Apache Spark and GPUs

- Apache Spark can be accelerated with GPUs, reducing query completion times by up to 7x.

- GPU acceleration in Apache Spark speeds up big data analytics and processing workloads.

- GPU acceleration simplifies data science workflow, reduces operational expenses, and doesn't require code changes.

- Not all Spark workloads benefit equally from GPU acceleration; careful profiling is essential.

Analyticsindiamag

HCLTech, Salesforce Deepen Ties to Fast-track Agentic AI for Enterprises

- HCLTech has launched new consulting and implementation services to help companies adopt Salesforce Agentforce quickly, focusing on industries like finance, healthcare, retail, and manufacturing.

- The services use a consulting-led approach to facilitate the use of agentic workflows, unifying task orchestration, reasoning, and action execution across systems, streamlining various business functions in regulated environments.

- HCLTech leverages protocols like the Agent-to-Agent (A2A) protocol and the Model Context Protocol (MCP) to enable clients to streamline coordination, task tracking, and visibility, aiming for faster and more reliable outcomes.

- The HCLTech and Salesforce collaboration aims to fast-track AI-driven innovation in enterprise applications through initiatives such as enhancing user experiences, implementing AI-powered agents, and transforming SaaS applications with advanced AI technology.

Analyticsindiamag

India Lost ₹22,812 Crore to Cyber Fraud in 2024: Report

- India ranked second globally in 2024 for crypto attacks, recording 95 major attacks.

- Cybercrime complaints in India surged from 15.56 lakh in 2023 to 19.18 lakh in 2024, with citizens losing ₹22,812 crore in 2024.

- 82.6% of phishing campaigns in 2024 used AI-generated content, with a rise in QR code phishing attacks in India.

- Rural India is experiencing a rapid increase in cybercrime cases, highlighting the need for cybersecurity awareness and digital safety measures.
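For readers less familiar with the Indian numbering units, the figures above can be converted and compared with a short calculation. This sketch uses only the numbers quoted in the summary (1 lakh = 100,000 and 1 crore = 10,000,000). The per-complaint average is a naive estimate that assumes the loss figure and the complaint count cover the same set of cases, which the summary does not confirm.

```python
LAKH = 100_000        # 1 lakh = 10^5
CRORE = 10_000_000    # 1 crore = 10^7

# Figures quoted in the summary above.
complaints_2023 = 15.56 * LAKH
complaints_2024 = 19.18 * LAKH
losses_2024_inr = 22_812 * CRORE


def percent_increase(old: float, new: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100


growth_pct = percent_increase(complaints_2023, complaints_2024)

# Naive average: assumes every rupee lost maps to one of the 2024 complaints.
avg_loss_per_complaint_inr = losses_2024_inr / complaints_2024

if __name__ == "__main__":
    print(f"Complaint growth, 2023 to 2024: {growth_pct:.1f}%")
    print(f"Naive average loss per complaint: INR {avg_loss_per_complaint_inr:,.0f}")
```

On these numbers, complaints rose by roughly 23% year over year, and the naive average works out to a little under ₹1.2 lakh per complaint.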
