ML News

Arxiv

Marconi: Prefix Caching for the Era of Hybrid LLMs

- Hybrid models that combine the language modeling capabilities of Attention layers with the efficiency of Recurrent layers have gained traction for supporting long contexts in Large Language Model serving.

- Marconi is a system that supports efficient prefix caching with Hybrid LLMs.

- Marconi uses novel admission and eviction policies that assess potential cache entries based on recency, reuse likelihood, and compute savings.

- Marconi achieves significantly higher token hit rates compared to state-of-the-art prefix caching systems.

Arxiv

Prototypical Calibrating Ambiguous Samples for Micro-Action Recognition

- Micro-Action Recognition (MAR) has gained attention for its role in non-verbal communication and emotion analysis.

- A novel approach called Prototypical Calibrating Ambiguous Network (PCAN) is proposed to address the ambiguity in MAR.

- PCAN employs a hierarchical action-tree to identify and categorize ambiguous samples into distinct sets.

- Extensive experiments demonstrate the superior performance of PCAN compared to existing approaches.

Arxiv

Tensor Product Attention Is All You Need

- The paper introduces Tensor Product Attention (TPA), a novel attention mechanism that uses tensor decompositions to represent queries, keys, and values compactly.

- TPA significantly reduces the memory overhead during inference by shrinking the size of the key-value (KV) cache.

- Based on TPA, the Tensor ProducT ATTenTion Transformer (T6) is introduced as a new model architecture for sequence modeling.

- T6 outperforms standard Transformer baselines in language modeling tasks, achieving improved model quality and memory efficiency.
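
To make the KV-cache claim concrete, here is a minimal sketch of why caching low-rank factors can take less memory than caching full keys and values. It assumes each per-token key (or value) matrix of shape `(num_heads, head_dim)` is an average of `rank` outer products of a head-side vector and a dimension-side vector. The function names, the `1/rank` scaling, and the sizes used are illustrative assumptions, not the authors' implementation or configuration.

```python
import numpy as np

def full_kv_floats_per_token(num_heads, head_dim):
    # Standard attention caches one full key and one full value per head.
    return 2 * num_heads * head_dim

def factored_kv_floats_per_token(num_heads, head_dim, rank_k, rank_v):
    # A factored cache stores, per rank, one head-side vector (num_heads)
    # and one dimension-side vector (head_dim), for keys and for values.
    return (rank_k + rank_v) * (num_heads + head_dim)

def reconstruct(head_factors, dim_factors):
    # head_factors: (rank, num_heads); dim_factors: (rank, head_dim).
    # Averages the rank outer products to rebuild a (num_heads, head_dim) matrix.
    rank = head_factors.shape[0]
    return np.einsum("rh,rd->hd", head_factors, dim_factors) / rank

# Hypothetical sizes chosen only for illustration.
num_heads, head_dim, rank_k, rank_v = 32, 128, 2, 2
full = full_kv_floats_per_token(num_heads, head_dim)
factored = factored_kv_floats_per_token(num_heads, head_dim, rank_k, rank_v)
print(f"full: {full} floats/token, factored: {factored} floats/token")
```

With these hypothetical sizes the factored cache is smaller because its cost grows with `num_heads + head_dim` rather than `num_heads * head_dim`.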

Arxiv

Real-time Verification and Refinement of Language Model Text Generation

- Large language models (LLMs) sometimes generate factually incorrect answers, posing a critical challenge.

- The proposed Streaming-VR approach allows real-time verification and refinement of LLM outputs.

- Streaming-VR checks and corrects tokens as they are being generated, ensuring factual accuracy.

- Comprehensive evaluations show that Streaming-VR is an efficient solution compared to prior methods.

Arxiv

Dreamweaver: Learning Compositional World Models from Pixels

- Dreamweaver is a neural architecture designed to discover hierarchical and compositional representations from raw videos.

- The model leverages a Recurrent Block-Slot Unit (RBSU) to decompose videos into their constituent objects and attributes.

- Dreamweaver outperforms current state-of-the-art baselines for world modeling and allows the generation of novel videos by recombining attributes from previously seen objects.

- The model is evaluated under the DCI (disentanglement, completeness, informativeness) framework across multiple datasets.

Medium

Fueling the AI Revolution: Understanding Cloud Infrastructure for AI

- Cloud infrastructure plays a crucial role in fueling the AI revolution by providing flexibility, scalability, and raw power.

- Training modern AI models, especially deep learning systems, requires vast amounts of data and massive computational power, which cloud platforms support efficiently.

- Scalability in the cloud allows AI workloads to match varying needs, ensuring cost-efficiency by scaling resources up or down instantly.

- Access to powerful hardware like GPUs, TPUs, and custom chips from cloud providers eliminates the need for expensive in-house investments.

- The cloud enables fast experimentation, easy deployment, and access to managed services that streamline AI and machine learning processes.

- Cloud-based AI solutions offer flexibility and speed, and they are cost-effective because you pay only for what you use.

- Amazon Web Services, Google Cloud Platform, and Microsoft Azure offer deep AI capabilities alongside unique strengths in their AI tools and services.

- Choosing the right cloud platform for AI depends on factors like existing infrastructure, project focus, team familiarity, budget, and compliance requirements.

- Cloud infrastructure is essential for organizations looking to leverage AI effectively, enabling scalability, advanced hardware access, and managed tools for innovation.

- Understanding the strengths of different cloud platforms like AWS, GCP, and Azure is crucial for making informed decisions and driving long-term innovation.

Medium

Why Most AIs Are So Dumb

- Most Large Language Models (LLMs) are considered dumb, with IQs lower than expected.

- According to tracking data from TrackingAI.org, Gemini Pro 2.5, ChatGPT o1, and Claude Sonnet 3.7 are the most advanced models.

- Google's Bard model was considered extremely poor, and it has been replaced by Gemini Pro 2.5.

- The author prefers working with high IQ models like Gemini Pro 2.5, Claude Sonnet 3.7, and DeepSeek-R1.

Medium

One-Hot Encoding in NLP: A Gentle Introduction

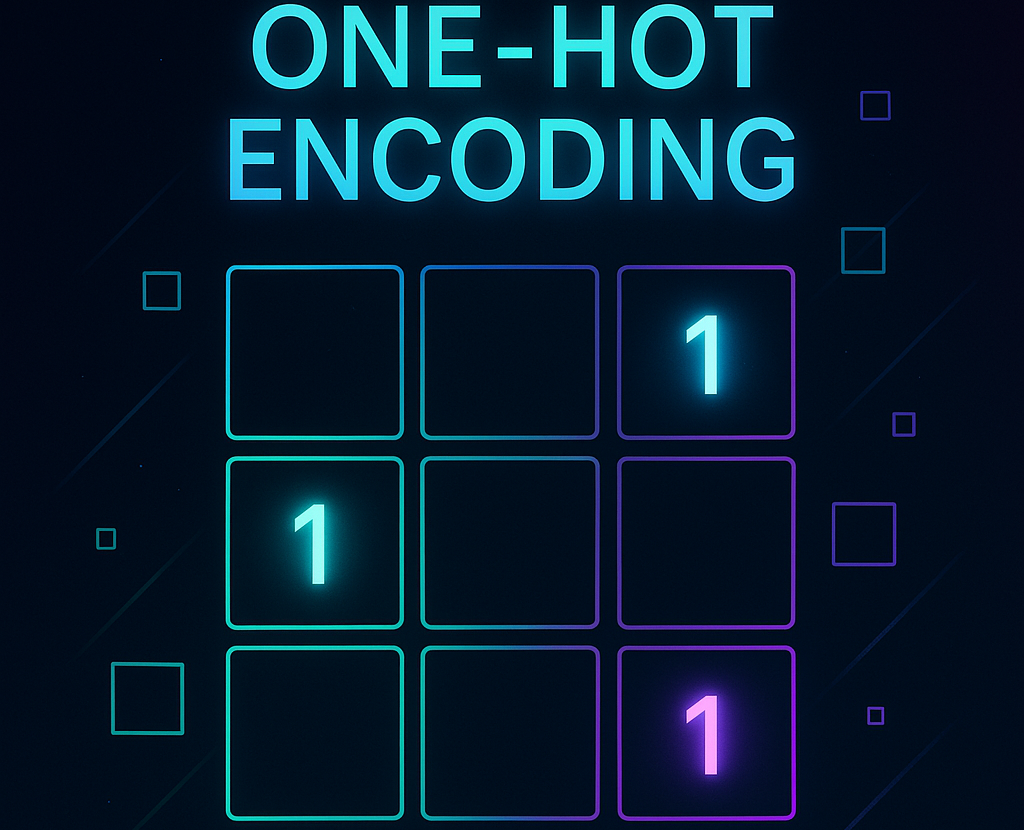

- One-hot encoding is a simple yet foundational method in NLP that converts categorical data, like words, into numerical format.

- It represents each word with a binary vector, where only one position is 'hot' (1) and the rest remain 'cold' (0).

- One-hot encoding is used to transform text data for NLP tasks such as sentiment analysis before feeding it into machine learning algorithms or deep neural networks.

- While one-hot encoding has limitations, it serves as an entry point to more advanced NLP techniques and provides valuable insight into language interpretation by machines.
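
The encoding described above can be sketched in a few lines. This is a minimal illustration, assuming a toy whitespace tokenizer and a vocabulary built from a single sentence; the helper names `build_vocab` and `one_hot` are made up for this example.

```python
import numpy as np

def build_vocab(tokens):
    # Map each distinct word to an index (sorted for a stable order).
    return {word: i for i, word in enumerate(sorted(set(tokens)))}

def one_hot(word, vocab):
    # All positions are 0 ("cold") except the word's own index, which is 1 ("hot").
    vec = np.zeros(len(vocab), dtype=int)
    vec[vocab[word]] = 1
    return vec

tokens = "the cat sat on the mat".split()
vocab = build_vocab(tokens)
encoded = np.stack([one_hot(w, vocab) for w in tokens])
print(vocab)
print(encoded)
```

Each row has length equal to the vocabulary size, and the vectors for any two different words have a dot product of zero, so the encoding carries no notion of similarity between words. Both properties are among the limitations usually cited for this method.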

Medium

Understanding Model Context Protocol (MCP): What Every Tech Professional Should Know

- Model Context Protocol (MCP) is a foundational framework for large language models to process information and generate responses.

- MCP governs the context window, token management, and response parameters of AI models.

- MCP enables AI assistants to maintain conversation history, deliver consistent solutions, and analyze legal documents.

- Understanding MCP is essential for effective implementation of AI solutions.

Medium

From Boss to Bot Whisperer: The Weird Future of Work

- Companies are starting to hire humans to manage AI agents, as these agents are becoming co-workers.

- New AI agents can handle various tasks such as customer service, marketing content writing, and code generation.

- AI agents may require training, feedback, and alignment, similar to human employees.

- Roles such as project managers, team leads, QA specialists, and culture coaches may emerge to manage AI agents.

Towards Data Science

The Invisible Revolution: How Vectors Are (Re)defining Business Success

- Vectors play a crucial role in modern business success, offering a dynamic, multidimensional view of underlying connections and patterns.

- Understanding vector thinking is essential for decision-making, customer engagement, and data protection in today's data-focused world.

- Vectors represent a different concept of relationships, enabling accurate measurement in a multidimensional space for tasks like fraud detection and customer analysis.

- Using vector-based computing, businesses can analyze patterns and anomalies, leading to more precise decision-making and personalized customer experiences.

- Vector-based systems transform complex data into actionable insights, changing how businesses operate and make strategic decisions.

- Cosine similarity and Euclidean distance are key metrics that influence the performance and outcomes of recommendation engines, fraud detection systems, and more.

- Real-world applications, such as healthcare early detection systems using vector representations, showcase the practical impact of leveraging vectors for complex data analysis.

- Leaders who grasp vector concepts can make informed decisions, drive innovation in personalization and fraud detection, and position their businesses for success in an AI-driven landscape.

- Adopting vector-based thinking is crucial for businesses to stay competitive and thrive in an age where data and AI innovations define success.

- Embracing vectors as a fundamental tool for data literacy and decision-making can propel businesses forward in the evolving landscape of AI technology.

- Vectors are at the core of the invisible revolution reshaping business strategies, and mastering their principles is vital for leaders to navigate and lead in the data-driven future.
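
The two metrics named above, cosine similarity and Euclidean distance, can disagree about which vectors are "close". The sketch below uses made-up three-dimensional vectors purely for illustration; real systems typically work with embeddings of much higher dimension.

```python
import numpy as np

def cosine_similarity(a, b):
    # Compares direction only: 1.0 means the vectors point the same way.
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def euclidean_distance(a, b):
    # Straight-line distance, so it is sensitive to magnitude as well as direction.
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(np.linalg.norm(a - b))

# Hypothetical example vectors.
a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]  # same direction as a, twice the magnitude
c = [3.0, 2.0, 1.0]  # different direction, same magnitude as a

for name, other in (("b", b), ("c", c)):
    print(name, cosine_similarity(a, other), euclidean_distance(a, other))
```

Here `b` is the better match for `a` by cosine similarity, while `c` is nearer by Euclidean distance, which is why the choice of metric can change what a recommendation or fraud-detection system returns.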

Medium

From Sensor Data to Smart Decisions: How We Built a Contactless Security System with AI

- During Deep Blue Season 6, a team of students tackled the urgent need to automate residential society gate security.

- The team designed an AI-powered contactless gate system using facial recognition, sensor data, and automation logic.

- Initially designed for building entry, the system was moved to the main gate to accommodate the varied ways people enter societies.

- The system had two parallel flows for walk-ins and vehicles, each with its own logic and triggers.

- The core components used in the system included Python for logic, OpenCV for face detection, and IR temperature sensors for monitoring.

- Challenges like face recognition with masks and gate triggering for vehicles were addressed through training and design adjustments.

- Real-world behaviors, such as drivers lowering windows for interaction, were considered to ensure smooth vehicle entry.

- Feedback mechanisms and defined fallback flows were implemented for every possible failure scenario.

- Lessons learned included the importance of user experience design and interaction with real-world users.

- The team presented a fully working prototype to mentors, showcasing real-time face recognition and gate control.

Amazon

Reduce ML training costs with Amazon SageMaker HyperPod

- Training large-scale frontier models is computationally intensive and can take weeks to months to complete a single job, with potential hardware failures causing significant disruptions.

- High instance failure rates during distributed training highlight the challenges faced during large-scale model training.

- As cluster sizes grow, the likelihood of a hardware failure somewhere in the cluster increases, which lowers the mean time between failures (MTBF).

- Amazon SageMaker HyperPod is a resilient solution that automates hardware issue detection and replacement, minimizing downtime and reducing training costs.

- By utilizing SageMaker HyperPod, manual interventions for hardware failures, root cause analysis, and system recovery are minimized, enhancing system reliability.

- HyperPod's automated mechanisms result in faster failure detection, shorter replacement times, and rapid job resumption, contributing to reduced total training time.

- SageMaker HyperPod's benefits are significant for large clusters, offering health monitoring agents, ML tool integrations, and insights into cluster performance for efficient model development.

- Empirical data shows that HyperPod reduces total training time by up to 32% in a 256-instance cluster with a 0.05% failure rate, translating to substantial cost savings.

- Automating hardware issue detection and resolution with SageMaker HyperPod enables faster time-to-market, leading to more effective innovation delivery.

- By addressing the reliability challenges of large-scale model training, HyperPod allows ML teams to focus on model innovation, streamlining infrastructure management.

- SageMaker HyperPod's contribution to reducing downtime and optimizing resource utilization makes it a valuable solution for organizations engaged in frontier model training.
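
The link between cluster size and MTBF can be illustrated with a short calculation. This is a sketch under stated assumptions: failures are independent across instances, and the 0.05% figure is treated as a per-instance failure probability over some fixed window (the summary does not state the window). The function names and the 2,560-hour instance MTBF are hypothetical.

```python
def prob_any_failure(num_instances, per_instance_failure_prob):
    # Probability that at least one instance fails in the window,
    # assuming failures are independent across instances.
    return 1.0 - (1.0 - per_instance_failure_prob) ** num_instances

def cluster_mtbf(instance_mtbf_hours, num_instances):
    # With independent, constant-rate failures, the cluster-level MTBF
    # shrinks in proportion to the number of instances.
    return instance_mtbf_hours / num_instances

p_cluster = prob_any_failure(256, 0.0005)
print(f"P(at least one failure in window) = {p_cluster:.3f}")
print(f"Cluster MTBF = {cluster_mtbf(2560.0, 256)} hours")  # hypothetical instance MTBF
```

Under these assumptions, a per-instance failure probability that looks negligible becomes a meaningful per-window risk for the job as a whole, which is the motivation for automating detection and replacement.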

Towards Data Science

How to Measure Real Model Accuracy When Labels Are Noisy

- Ground truth is never perfect: measurements and human annotations both contain errors, which raises the question of how to evaluate models against imperfect labels.

- Exploring methods to estimate a model's 'true' accuracy when labels are noisy is essential.

- Errors in both model predictions and ground truth labels can mislead accuracy measurements.

- The true accuracy of a model can vary based on error correlations between the model and ground truth labels.

- Specifically, true accuracy depends on how much the model's errors overlap with the errors in the ground-truth labels.

- If errors are uncorrelated, a probabilistic formula gives a more precise estimate of true accuracy.

- In practice, where errors may be correlated, the true accuracy tends to lean towards the lower bound.

- Understanding the difference between measured and true accuracy is crucial for accurate model evaluation.

- Targeted error analysis and multiple independent annotations are recommended for handling noisy labels in model evaluation.

- In summary, the range of true accuracy depends on ground truth error rates, with considerations for error correlations in real-world scenarios.
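
The range and the uncorrelated-error estimate summarized above can be sketched as follows. This is one standard derivation, not necessarily the article's exact formula, and it assumes binary labels: if the model is right with probability `A` and a label is wrong with probability `e`, independently, then the measured agreement is `A * (1 - e) + (1 - A) * e`, which can be solved for `A`. The function names and the example figures are hypothetical.

```python
def true_accuracy_bounds(measured_acc, label_error_rate):
    # Worst case: every label error sits on an example where the model agreed
    # with the (wrong) label. Best case: every label error sits on an example
    # where the model disagreed. Clipped to [0, 1], so the bounds are valid
    # but not always tight at the extremes.
    lower = max(0.0, measured_acc - label_error_rate)
    upper = min(1.0, measured_acc + label_error_rate)
    return lower, upper

def true_accuracy_if_independent(measured_acc, label_error_rate):
    # Assumes binary labels and model errors independent of label errors.
    # Solves measured = A * (1 - e) + (1 - A) * e for A.
    if not 0.0 <= label_error_rate < 0.5:
        raise ValueError("label_error_rate must be in [0, 0.5)")
    return (measured_acc - label_error_rate) / (1.0 - 2.0 * label_error_rate)

# Hypothetical figures: 90% measured accuracy, 5% label error rate.
low, high = true_accuracy_bounds(0.90, 0.05)
estimate = true_accuracy_if_independent(0.90, 0.05)
print(f"range: [{low:.3f}, {high:.3f}], independent-error estimate: {estimate:.4f}")
```

When the model tends to repeat the annotators' mistakes, more of the label errors fall on examples where the model agreed with the label, which pushes the true accuracy toward the lower end of the range.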

Siliconangle

Insurance technology startup Ominimo raises €10M in funding

- Insurance technology startup Ominimo has raised €10 million in funding from Zurich Insurance Group Ltd.

- Zurich Insurance took a 5% stake in Ominimo, valuing the startup at €200 million.

- Ominimo uses AI to generate quotes and streamline the sign-up process for customers.

- The funding will be used to expand into new markets and offer property insurance plans.
