Software News

Medium

Image Credit: Medium

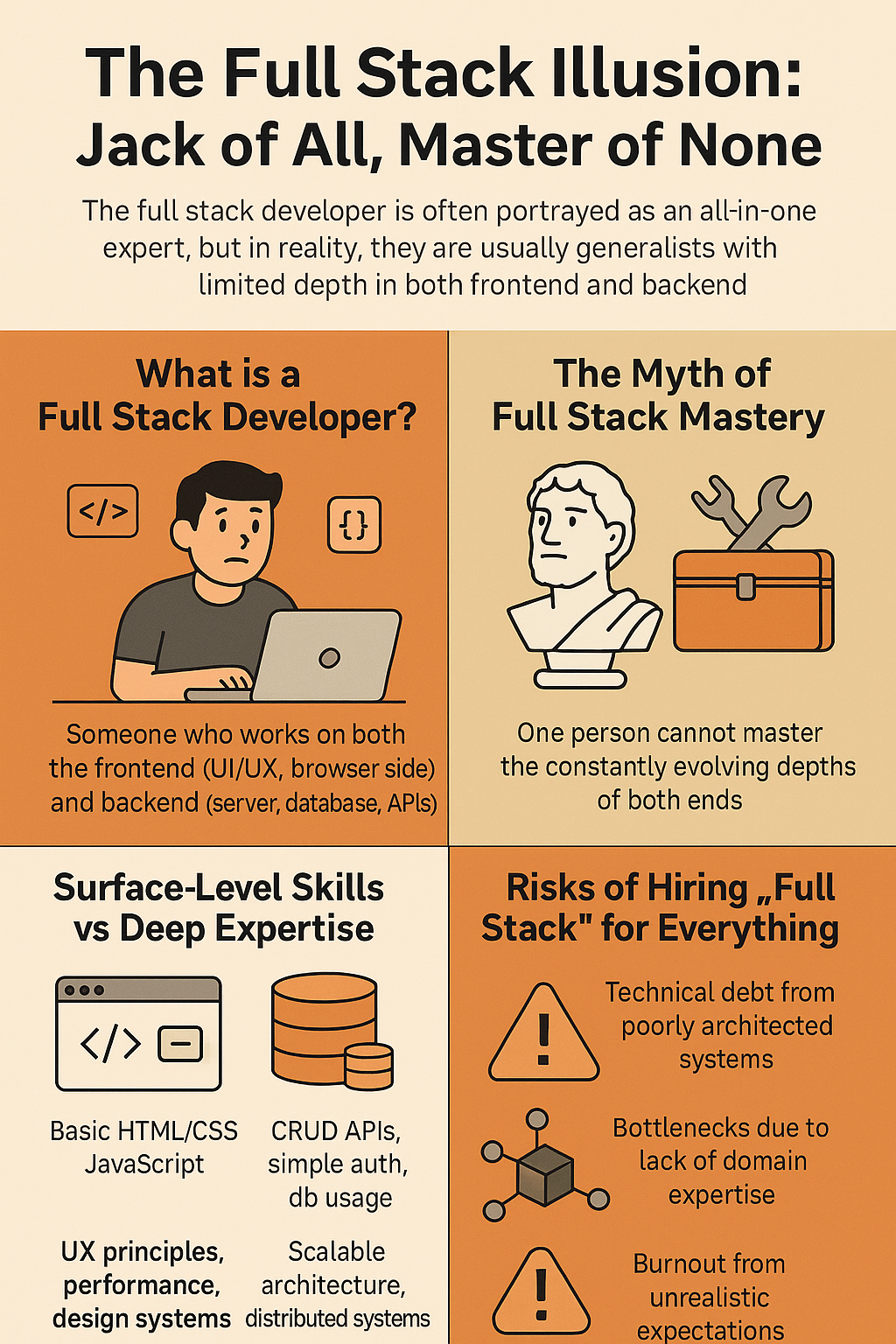

The Full Stack Illusion: Jack of All, Master of None

- Full stack developers are a popular concept, but they may not be as expert in both frontend and backend technologies as expected.

- Full stack developers might compromise depth for breadth in covering various aspects of development.

- Expecting one person to master both frontend and backend completely may not yield the best results for complex projects.

- It is essential to recognize the limits of full stack developers and encourage them to specialize to create more robust products.

Dev

Image Credit: Dev

CI/CD Pipeline Tools: Powering Continuous Development

- DevOps bridges the gap between software developers and IT teams, promoting collaboration and efficiency in software development processes.

- It aims to accelerate software development, testing, and deployment, enhancing reliability through teamwork and shared responsibilities.

- DevOps evolved gradually, combining Enterprise Systems Management practices with inspiration from Agile methodology.

- It emphasizes collaboration among teams, improves software delivery speed, and focuses on value for customers.

- DevOps brings developers and operations together for faster software delivery and improved system stability.

- Core principles of DevOps include collaboration, automation, CI/CD, continuous testing, monitoring, and rapid remediation.

- DevOps is crucial for boosting competitiveness, accelerating product launches, promoting innovation, and maintaining high-quality standards.

- The DevOps lifecycle consists of planning, coding, building, testing, integrating, deploying, operating, and monitoring stages for efficient software development.

- Collaboration in planning, efficient coding practices, automation in building, extensive testing, and continuous deployment are key elements of the DevOps lifecycle.

- Effective DevOps strategies enhance software development, testing, deployment, and maintenance processes, ensuring reliable performance and quick delivery.
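
The lifecycle stages above map naturally onto a CI/CD pipeline in which each stage must succeed before the next one runs. Below is a minimal Python sketch, assuming each stage is a plain function that returns True on success. The fail-fast runner is illustrative and not taken from any specific CI/CD tool.

```python
from typing import Callable, List, Optional, Tuple

# A stage is a (name, action) pair; the action returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[List[str], Optional[str]]:
    """Run stages in order and stop at the first failure (fail-fast).

    Returns the names of the stages that passed and the name of the
    failing stage, or None if every stage passed.
    """
    passed: List[str] = []
    for name, action in stages:
        if not action():
            return passed, name  # later stages never run
        passed.append(name)
    return passed, None


if __name__ == "__main__":
    # Hypothetical stages: every action succeeds except "test".
    stages: List[Stage] = [
        ("build", lambda: True),
        ("test", lambda: False),
        ("deploy", lambda: True),
    ]
    print(run_pipeline(stages))  # (['build'], 'test')
```

Failing fast keeps a broken build from reaching the deploy stage. Real CI/CD tools layer parallel jobs, retries, and artifact handling on top of this basic ordering.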

Medium

Image Credit: Medium

Python Project Structure: Why the ‘src’ Layout Beats Flat Folders (and How to Use My Free Template)

- A clear, repeatable folder structure is crucial for Python projects to avoid 'import hell' and ensure a predictable workflow.

- The 'src' layout, where all importable code is placed in a src/ folder, is recommended for medium-to-large apps and projects that ship to production.

- Placing code under src/ helps prevent import conflicts, keeps PyPI uploads clean, allows for easy package uninstallation, and provides clarity as the project grows.

- While a flat layout is suitable for small scripts and demos, transitioning to a src layout early can prevent refactoring difficulties in the future.
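
To make the layout concrete, here is a minimal Python sketch that scaffolds a src-layout project in a given directory. The package name `mypackage` and the `pyproject.toml` contents are illustrative assumptions, with setuptools as the build backend. This is not the article's free template.

```python
from pathlib import Path

# Minimal pyproject.toml; the find directive tells setuptools that
# importable packages live under src/.
PYPROJECT = """\
[build-system]
requires = ["setuptools>=61"]
build-backend = "setuptools.build_meta"

[project]
name = "{name}"
version = "0.1.0"

[tool.setuptools.packages.find]
where = ["src"]
"""


def scaffold(root: Path, name: str = "mypackage") -> list[Path]:
    """Create a src-layout skeleton under root and return the created files."""
    files = {
        root / "pyproject.toml": PYPROJECT.format(name=name),
        # Importable code lives only under src/<name>/.
        root / "src" / name / "__init__.py": '__version__ = "0.1.0"\n',
        # Tests sit outside src/, so they exercise the *installed* package.
        root / "tests" / "test_import.py": (
            f"import {name}\n\n\ndef test_version():\n    assert {name}.__version__\n"
        ),
    }
    for path, text in files.items():
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(text)
    return list(files)


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        for f in scaffold(Path(tmp)):
            print(f.relative_to(tmp))
```

After `pip install -e .`, the tests import the package the same way users will. Because the project root no longer contains `mypackage/` directly, an accidental import of the uninstalled source tree fails loudly instead of silently succeeding.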

Byte Byte Go

Image Credit: Byte Byte Go

EP163: 12 MCP Servers You Can Use in 2025

- WorkOS Radar offers an all-in-one bot defense solution to protect against sophisticated attacks on AI apps.

- The article discusses 12 MCP (Model Context Protocol) servers that simplify how AI models interact with external sources, tools, and services.

- MCP servers listed include GitHub, Docker, Google Drive, Redis, and more for various integrations.

- The guide on AI Assisted Engineering covers use cases, prompting techniques, and leadership strategies to adopt AI coding assistants.

- Deployment strategies such as Multi-Service, Blue-Green, Canary, and A/B Testing are compared, along with their benefits and drawbacks.

- The System Design Topic Map categorizes essential topics into areas like Application Layer, Network & Communication, Data Layer, and more.

- The Transformer architecture is explained step by step, covering input embedding, positional encoding, and multi-head attention for text generation.

- ByteByteGo is hiring for roles like Technical Product Manager, Technical Educator - System Design, and Sales/Partnership positions.

- Sponsorship opportunities to reach tech professionals through ByteByteGo's newsletter are available.
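
Of the Transformer steps listed, positional encoding is the easiest to show in isolation. Below is a minimal Python sketch, assuming the sinusoidal scheme from the original Transformer paper; the newsletter may illustrate a different variant. Each even dimension 2i uses sin(pos / 10000^(2i/d)), and the paired odd dimension uses cos of the same angle.

```python
import math
from typing import List


def positional_encoding(pos: int, d_model: int) -> List[float]:
    """Sinusoidal positional encoding for one token position.

    Dimension 2i gets sin(pos / 10000**(2i/d_model)) and dimension 2i+1
    gets cos of the same angle, so each sine/cosine pair shares one frequency.
    """
    enc = []
    for dim in range(d_model):
        i = dim // 2  # index of the sine/cosine pair
        angle = pos / (10000 ** (2 * i / d_model))
        enc.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
    return enc


if __name__ == "__main__":
    # Position 0: every angle is 0, so sines are 0.0 and cosines are 1.0.
    print(positional_encoding(0, 4))  # [0.0, 1.0, 0.0, 1.0]
```

Each token's embedding is summed with its positional vector before the first attention layer. This gives the otherwise order-blind attention mechanism information about token order.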

Medium

Rewiring Data Storage: How Botanika Is Powering the Next Generation of Micro-Data Centers

- The article discusses the storage paradox between centralized clouds and fragmented DIY DePINs (decentralized physical infrastructure networks).

- Hyperscale clouds offer massive capacity but come with drawbacks like single points of failure and high costs.

- DIY DePINs provide permissionless participation but lack automated provisioning and built-in orchestration.

- Botanika aims to combine cloud reliability with DePINs' economic inclusion by creating self-optimizing micro-data centers.

- Botanika's NIMBUS nodes offer automated sharding, AI orchestration, and token-based incentives for data hosting.

- HOA SEN protocol by Botanika ensures secure, high-performance data transmission through homomorphic encryption and adaptive FEC layers.

- Real-world use cases of Botanika include smart city surveillance, rural video streaming, and scientific research grids.

- Botanika enables easy onboarding, integration with SDKs, real-time monitoring, and optimization through its dashboard.

- In conclusion, Botanika democratizes data storage with intelligence, bridging edge computing performance with decentralized network resilience.

- By offering NIMBUS nodes and HOA SEN protocol, Botanika transforms devices into intelligent micro-data centers for various applications.

- To explore Botanika's innovative storage solutions, visit botanika.io and join the global network redefining data infrastructure.
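
The article does not describe how NIMBUS sharding or the HOA SEN FEC layers work internally. As a generic illustration of the underlying idea only, the Python sketch below splits data into k equal shards plus one XOR parity shard, so that any single lost shard can be rebuilt from the others. This is the simplest erasure code (the same idea as RAID 5 parity) and is not Botanika's scheme.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def shard(data: bytes, k: int) -> List[bytes]:
    """Split data into k zero-padded shards and append one XOR parity shard."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    shards = [padded[i * size:(i + 1) * size] for i in range(k)]
    return shards + [reduce(xor_bytes, shards)]


def recover(shards: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing shard (None) by XOR-ing all survivors."""
    restored = list(shards)  # do not mutate the caller's list
    missing = [i for i, s in enumerate(restored) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one lost shard")
    if missing:
        survivors = [s for s in restored if s is not None]
        restored[missing[0]] = reduce(xor_bytes, survivors)
    return [s for s in restored if s is not None]


if __name__ == "__main__":
    data = b"hello decentralized storage"
    pieces: List[Optional[bytes]] = list(shard(data, k=3))
    pieces[1] = None  # simulate a node going offline
    restored = recover(pieces)
    print(b"".join(restored[:3])[: len(data)])  # b'hello decentralized storage'
```

XOR parity tolerates only one failure. Production systems typically use Reed-Solomon-style codes, which survive several lost shards at the cost of more computation.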

Medium

Image Credit: Medium

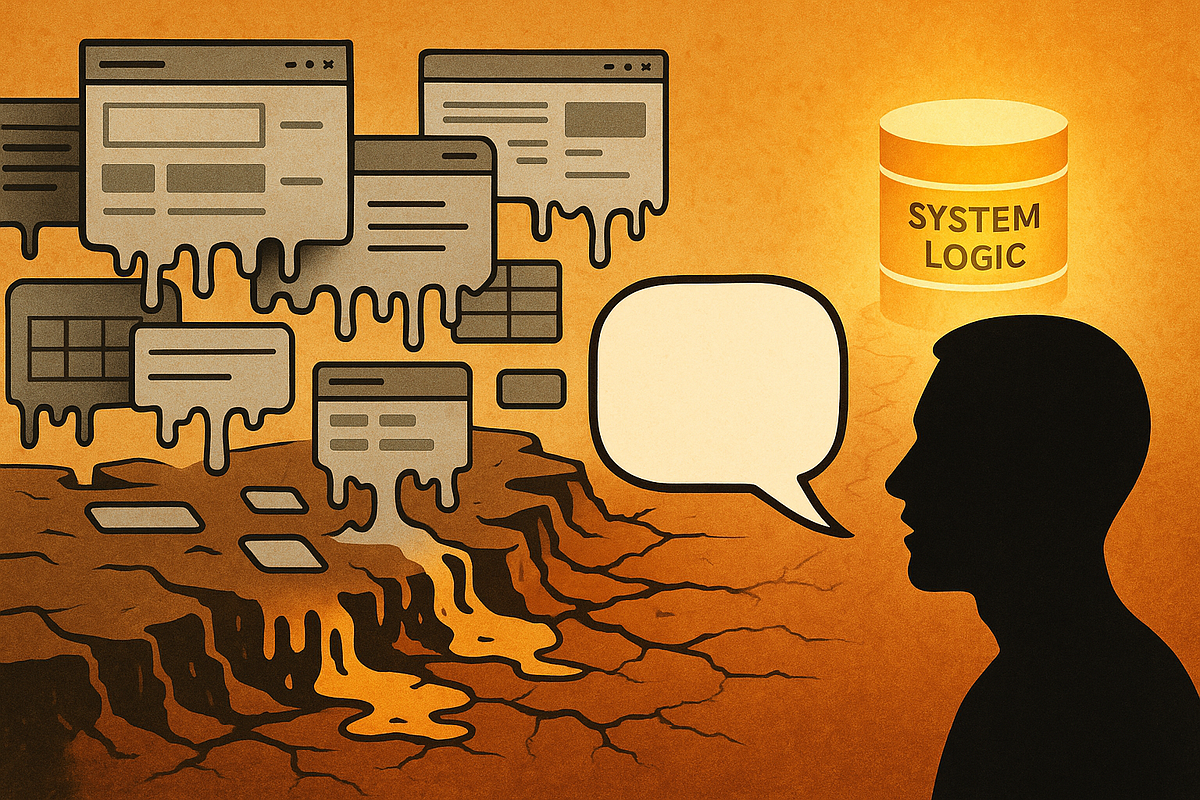

Breaking the UI Abstraction Barrier with Conversational AI

- Large Language Models (LLMs) are revolutionizing software systems by enabling conversational interfaces that enhance user interactions.

- Conversational AI can simplify complex software systems, reduce cognitive load, and increase efficiency by allowing users to interact naturally with the system.

- While direct, dynamic database interaction is a future goal, the current focus is on LLM-powered agents that call existing backend endpoints and APIs to guide users through various operations.

- The challenge lies in ensuring discoverability in conversational interfaces and strategically applying these new interaction patterns alongside traditional UI elements for optimal user experience.
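
In the pattern from the third point, an LLM agent calls existing backend endpoints instead of touching the database. This usually means the model emits a structured tool call that application code then validates and dispatches. Below is a minimal, framework-agnostic Python sketch. The tool names, the JSON shape of the call, and the in-memory "endpoints" are hypothetical stand-ins for real APIs.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical backend state behind the existing endpoints.
ORDERS = {"A-100": "shipped", "A-101": "processing"}


def get_order_status(order_id: str) -> str:
    return ORDERS.get(order_id, "unknown order")


def cancel_order(order_id: str) -> str:
    if ORDERS.get(order_id) == "processing":
        ORDERS[order_id] = "cancelled"
        return "cancelled"
    return "cannot cancel"


# Registry: only functions listed here are reachable from the conversation.
TOOLS: Dict[str, Callable[..., Any]] = {
    "get_order_status": get_order_status,
    "cancel_order": cancel_order,
}


def dispatch(tool_call_json: str) -> str:
    """Validate a model-produced tool call and route it to a backend function."""
    call = json.loads(tool_call_json)
    name, args = call.get("name"), call.get("arguments", {})
    if name not in TOOLS:
        return f"error: unknown tool {name!r}"
    return str(TOOLS[name](**args))


if __name__ == "__main__":
    # In a real system this JSON would come from the LLM's function-calling output.
    print(dispatch('{"name": "get_order_status", "arguments": {"order_id": "A-100"}}'))
```

An explicit registry is one way to address the discoverability concern. The same list of tools can be surfaced to users as the operations they can ask for.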

Tech Radar

Image Credit: Tech Radar

Google's AI Overviews are often so confidently wrong that I’ve lost all trust in them

- Google's AI Overviews combine Google Gemini's language models with Retrieval-Augmented Generation (RAG) to summarize search results, but they can be unreliable because of errors in both the retrieval and the language-generation steps.

- The AI can make erroneous leaps and draw strange conclusions, leading to famous gaffes like recommending glue on pizza or describing running with scissors as a cardio exercise.

- Despite improvements under Liz Reid, head of Google Search, users can still trick the AI into fabricating information (hallucinating), raising concerns about its accuracy and trustworthiness.

- Some queries deliberately mislead the AI, but genuine questions can also lead to unreliable results, especially since many users don't verify the sourced information.

- Google's AI Overviews seem to avoid topics like finance, politics, health, and law, indicating limitations in handling more serious queries.

- The article warns about blind trust in AI-generated content and suggests users critically evaluate and verify information rather than solely relying on AI summaries.

- Regular use of technology like GPS can negatively impact cognitive skills, and reliance on AI for information without critical thinking can exacerbate the issue.

- The author is skeptical about the reliability of AI tools like Google's AI Overviews and stresses the importance of seeking human-authored or verified articles for accurate information.

- While AI tools may improve in the future, reliability concerns persist: recent reports cite high hallucination rates and growing unreliability in AI-generated content.

- The article underscores the need for users to be discerning and cautious when utilizing AI-generated summaries and to prioritize verified sources for crucial information.

Dev

Image Credit: Dev

The Importance of Real-World Experience for Engineering Students

- Real-world experience plays a crucial role in shaping the mindset, communication, and decision-making abilities of engineering students.

- Industrial training provides insights into time management, coordination, problem-solving in real-time, and the impact of small improvements in workflow.

- Leadership roles in university help develop soft skills like confidence, responsibility, planning, leading, and adapting.

- Volunteering enhances empathy, teamwork, and people skills, showing proactiveness, social responsibility, and preparing students for real challenges in their careers.

Medium

Image Credit: Medium

The Rise of Vibe Coding: How AI is Transforming Software Development

- Vibe Coding is a new approach to software development where developers describe functionality in plain English and AI generates corresponding code.

- This method democratizes programming, making it accessible to individuals without extensive coding backgrounds and enhancing efficiency.

- Developers play a crucial role in guiding, testing, and refining AI-generated code, emphasizing the importance of human oversight.

- Vibe Coding is gaining popularity due to its transformative potential in software development by combining human creativity with machine efficiency.

Towards Data Science

How to Set the Number of Trees in Random Forest

- Random Forest is a versatile machine learning tool widely used in various fields for making predictions and identifying important variables.

- The R package optRF helps determine the optimal number of decision trees for a Random Forest.

- In R, the 'ranger' and 'optRF' packages can be used for Random Forest optimization and prediction.

- The 'optRF' package provides functions like 'opt_prediction' for predicting responses and 'opt_importance' for variable selection.

- The 'opt_prediction' function determines the recommended number of trees for making predictions.

- The 'ranger' function with the optimal number of trees can be used to build a Random Forest model for making predictions.

- To ascertain variable importance, the 'ranger' function can be called with the 'importance' argument set to 'permutation'.

- Increasing the number of trees in Random Forest can enhance the stability and reproducibility of the results.

- Adding more trees helps in reducing randomness, but striking a balance is crucial to avoid unnecessary computation time.

- The 'optRF' package analyzes the relationship between the number of trees and the stability of the results to determine the optimal number of trees efficiently.
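
The trade-off described above can be illustrated without R. The Python sketch below is a toy simulation, not the optRF algorithm. It assumes each tree's prediction equals a true value plus independent noise; real trees are correlated, so actual gains are smaller. The sketch averages n such trees into a "forest" and measures stability as the correlation between two forests built with different random seeds. The 0.99 threshold is an arbitrary choice for illustration.

```python
import random
from typing import List


def forest_predictions(truth: List[float], n_trees: int, seed: int) -> List[float]:
    """Average n noisy 'tree' predictions per sample (toy model of a forest)."""
    rng = random.Random(seed)
    return [sum(t + rng.gauss(0, 1) for _ in range(n_trees)) / n_trees for t in truth]


def correlation(a: List[float], b: List[float]) -> float:
    """Pearson correlation of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def stability(n_trees: int, n_samples: int = 200) -> float:
    """Correlation between two independently seeded forests of the same size."""
    rng = random.Random(0)
    truth = [rng.uniform(-3, 3) for _ in range(n_samples)]
    return correlation(
        forest_predictions(truth, n_trees, seed=1),
        forest_predictions(truth, n_trees, seed=2),
    )


def smallest_stable_size(candidates: List[int], threshold: float = 0.99) -> int:
    """First candidate whose stability reaches threshold (else the largest)."""
    for n in candidates:
        if stability(n) >= threshold:
            return n
    return candidates[-1]


if __name__ == "__main__":
    for n in (1, 10, 100, 500):
        print(n, round(stability(n), 4))
    print("smallest stable size:", smallest_stable_size([1, 10, 50, 100, 500]))
```

Stability rises quickly at first and then flattens, so beyond some point extra trees mostly add computation time. In the article's R workflow, the analogous decision comes from optRF, and the chosen value is passed to `ranger` as `num.trees`.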

Towards Data Science

How to Build an AI Journal with LlamaIndex

- This article discusses building an AI journal with LlamaIndex, focusing on its seek-advice feature.

- The initial implementation passes all relevant content into the LLM's context, which can lead to low precision and high costs.

- An enhanced implementation uses Agentic RAG, which combines dynamic decision-making with data retrieval for more precise answers.

- Creating an index and persisting it to a local directory is straightforward with the LlamaIndex SDK.

- Observations include the impact of parameters on LLM behavior and the importance of managing the inference capability based on the content.

- To complete the seek-advice function, the article recommends having multiple agents work together, which leads to an Agent Workflow implementation.

- Agent Workflows can either transition dynamically based on LLM function calls or give explicit control over steps for a more personalized experience.

- A custom workflow example illustrates a structured approach to agent interactions, controlling step transitions for effective advice generation.

- The article emphasizes leveraging Agentic RAG and Customized Workflow with LlamaIndex to optimize user interactions in AI journal implementations.

- The source code for this AI journal implementation can be found on GitHub for further exploration and development.
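
The "explicit control over steps" option can be pictured as a small state machine in which each step returns the name of the next one. The sketch below is plain Python, not the LlamaIndex Workflow API. The step names, the keyword-overlap retriever, and the sample journal entries are hypothetical. A real implementation would call an LLM where the `advise` step builds its string.

```python
import re
from typing import Any, Callable, Dict, Optional, Tuple

# Hypothetical entries standing in for an indexed journal.
JOURNAL = [
    "Felt anxious before the team demo but it went well.",
    "Skipped exercise again; low energy all week.",
    "Started running in the morning and slept better.",
]

State = Dict[str, Any]
# Each step returns (name of the next step, or None to stop; updated state).
Step = Callable[[State], Tuple[Optional[str], State]]


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(state: State) -> Tuple[Optional[str], State]:
    """Naive retriever: keep entries sharing at least one word with the question."""
    query = tokens(state["question"])
    state["context"] = [e for e in JOURNAL if query & tokens(e)]
    # Explicit transition chosen by code, not by the model.
    return ("advise" if state["context"] else "fallback"), state


def advise(state: State) -> Tuple[Optional[str], State]:
    """Placeholder for an LLM call that would turn the context into advice."""
    ctx = state["context"]
    state["answer"] = f"Based on {len(ctx)} related entries: " + " | ".join(ctx)
    return None, state


def fallback(state: State) -> Tuple[Optional[str], State]:
    state["answer"] = "No related entries found; try journaling about this first."
    return None, state


STEPS: Dict[str, Step] = {"retrieve": retrieve, "advise": advise, "fallback": fallback}


def run(question: str, max_steps: int = 10) -> str:
    """Execute steps starting at 'retrieve' until a step returns None."""
    state: State = {"question": question}
    step: Optional[str] = "retrieve"
    for _ in range(max_steps):  # guard against accidental cycles
        if step is None:
            break
        step, state = STEPS[step](state)
    return state.get("answer", "")


if __name__ == "__main__":
    print(run("How can I sleep better with more exercise?"))
```

Keeping transitions in code makes the path through the workflow predictable and easy to test. Dynamic, function-call-driven transitions trade that predictability for flexibility.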

Towards Data Science

The Automation Trap: Why Low-Code AI Models Fail When You Scale

- Low-code AI platforms have simplified the process of building Machine Learning models, allowing anyone to create and deploy models without extensive coding knowledge.

- While low-code platforms like Azure ML Designer and Amazon SageMaker Canvas are easy to use, they face challenges when scaled for high-traffic production.

- Issues such as resource limitations, hidden state management, and limited monitoring capabilities hinder the scalability of low-code AI models.

- The lack of control and scalability in low-code AI systems can lead to bottlenecks, unpredictability in state management, and difficulties in debugging.

- Key considerations for making low-code models scalable include using auto-scaling services, isolating state management, monitoring production metrics, implementing load balancing, and continuous testing of models.

- Low-code tools are beneficial for rapid prototyping, internal analytics, and simple software applications, but may not be suitable for high-traffic production environments.

- When starting a low-code AI project, it's important to consider whether it's for prototyping or a scalable product, as low-code should be seen as an initial tool rather than a final solution.

- Low-code AI platforms offer instant intelligence but may reveal faults like resource constraints, data leakage, and monitoring limitations as businesses grow.

- Architectural issues in low-code AI models cannot be solved with quick fixes; they require a deliberate approach to scalability and system design.

- Considering scalability from the project's inception and implementing best practices can help mitigate the challenges associated with scaling low-code AI models.
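
Auto-scaling, the first consideration listed, is often driven by a proportional rule. Below is a minimal Python sketch, assuming the formula used by the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target), clamped to a replica range and with a tolerance band. Neither Azure ML Designer nor SageMaker Canvas is claimed to use exactly this rule.

```python
import math


def desired_replicas(
    current: int,
    observed: float,
    target: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Proportional scaling toward a per-replica load target.

    observed and target are per-replica metrics, e.g. requests/s or CPU %.
    Ratios within `tolerance` of 1.0 leave the count unchanged, which
    avoids flapping on small fluctuations.
    """
    ratio = observed / target
    if abs(ratio - 1.0) <= tolerance:
        return current
    wanted = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 replicas each handling 150 req/s against a 100 req/s target: scale out.
    print(desired_replicas(4, observed=150, target=100))  # 6
```

This rule only works if replicas are interchangeable. That is why auto-scaling pairs with isolating state management: a replica that keeps session state in memory cannot be added or removed freely.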

Towards Data Science

Agentic AI 102: Guardrails and Agent Evaluation

- Guardrails are safety measures that prevent AI systems from producing harmful outputs; chatbots such as ChatGPT or Gemini, for example, may restrict responses on sensitive topics like health or finance.

- Guardrails AI provides predefined rules for blocking unwanted content in AI agents; specific rule modules are installed from the command line before use.

- Evaluation of AI agents is crucial, with tools like DeepEval offering methods such as G-Eval for assessing relevance, correctness, and clarity of responses from models like ChatGPT.

- DeepEval's G-Eval method uses an LLM as a judge to score the performance of chatbots or AI assistants, improving the evaluation of generative AI systems.

- Task completion evaluation, using DeepEval's TaskCompletionMetric, assesses an agent's ability to fulfill a given task, like summarizing topics from Wikipedia.

- Agno's framework offers agent monitoring capabilities, tracking metrics like token usage and response time through its app for managing AI performance and costs.

- By implementing Guardrails, evaluating AI agents, and monitoring their performance, developers can ensure responsible, accurate, and efficient AI behavior and outcomes.

- Different evaluation methods like G-Eval and Task Completion Metric help in assessing the quality and performance of AI agents in various tasks and scenarios.

- Model monitoring tools like those provided by Agno's framework enable developers to track and optimize AI agents' performance and resource usage effectively.

- Ensuring ethical and safe AI behavior through guardrails, accurate evaluation methods, and effective monitoring tools is essential for building trustworthy and reliable AI agents.
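
At its simplest, an output guardrail is a validator that runs on a model response before the response reaches the user. The Python sketch below is a deliberately naive, keyword-based stand-in. It does not use the Guardrails AI or DeepEval APIs, and the blocked-topic patterns and refusal message are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical policy: block responses that give specific advice on
# sensitive topics such as health or finance.
BLOCKED_PATTERNS = {
    "health": re.compile(r"\b(dosage|diagnos\w*|prescri\w*)\b", re.IGNORECASE),
    "finance": re.compile(r"\b(buy|sell) (this|these) (stock|shares)\b", re.IGNORECASE),
}


@dataclass
class GuardrailResult:
    passed: bool
    violations: List[str]
    output: str


def apply_guardrail(response: str) -> GuardrailResult:
    """Check a model response against the policy; replace it on violation."""
    violations = [t for t, pat in BLOCKED_PATTERNS.items() if pat.search(response)]
    if violations:
        refusal = "I can't help with that; please consult a qualified professional."
        return GuardrailResult(False, violations, refusal)
    return GuardrailResult(True, [], response)


if __name__ == "__main__":
    print(apply_guardrail("You should buy these shares today."))
```

Production libraries replace keyword lists with trained classifiers or LLM-based checks. The control flow stays the same: validate the response, then pass it through, block it, or retry.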

Medium

Image Credit: Medium

Modeling Product Growth with Technical Debt: A Logistic Approach

- The article introduces an extended logistic growth model to analyze the impact of technical debt and process fatigue on product growth.

- The model includes a decay factor to simulate the slowdown in growth due to internal factors like technical debt, not just market saturation.

- By tracking metrics such as the decay term, organizations can identify areas for improvement and sustain long-term growth.

- The article compares two development styles - Fast & Rough vs. Slow & Stable - emphasizing the tradeoff between short-term speed and long-term sustainability.
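
The article's exact equation is not reproduced here. This sketch assumes one simple way to add a decay factor to logistic growth: dP/dt = rP(1 - P/K) - δP, where δ stands in for technical debt and process fatigue. Rearranged, this is a logistic curve with net rate r - δ and a lower ceiling K(1 - δ/r). The Python code integrates the equation with Euler steps for two hypothetical parameter sets, one for Fast & Rough and one for Slow & Stable.

```python
from typing import List


def simulate(r: float, decay: float, K: float = 100.0, p0: float = 1.0,
             t_end: int = 60, dt: float = 0.01) -> List[float]:
    """Euler integration of dP/dt = r*P*(1 - P/K) - decay*P.

    Returns P sampled at each whole time unit t = 0, 1, ..., t_end.
    """
    steps_per_unit = round(1 / dt)
    p = p0
    samples = [p0]
    for _ in range(t_end):
        for _ in range(steps_per_unit):
            p += dt * (r * p * (1 - p / K) - decay * p)
        samples.append(p)
    return samples


if __name__ == "__main__":
    # Hypothetical styles: the fast team grows quickly but accrues more debt.
    fast = simulate(r=0.8, decay=0.3)   # ceiling near 100 * (1 - 0.3/0.8) = 62.5
    slow = simulate(r=0.3, decay=0.03)  # ceiling near 100 * (1 - 0.03/0.3) = 90
    for t in (5, 20, 60):
        print(t, round(fast[t], 1), round(slow[t], 1))
```

Under these assumptions, Fast & Rough leads early, but Slow & Stable overtakes it and plateaus higher. The decay term does not just slow growth; it lowers the ceiling. This mirrors the article's point that internal factors, not only market saturation, can limit a product.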

Medium

Image Credit: Medium

AI Won’t Kill Developers — But It Will Expose Those Who Don’t Think

- AI won’t kill developers, but it will expose those who lack understanding and just copy-paste.

- Coding is about thinking, not just typing; as long as AI can't think, the profession will evolve rather than disappear.

- Developers need to focus on asking the right questions, understanding the purpose of their code, and being architects rather than typists.

- To stay relevant, developers should embrace AI, use it wisely, and remember they are accountable for the results even if AI assists in coding.
