Big Data News

Minis

Image Credit: Minis

This 25-yr-old CEO became Gauri Khan’s business partner, built Rs 150 cr furniture firm from scratch

- Pranjal Agrawal founded Hermosa Design Studio in Mumbai in 2018, offering affordable luxury Spanish-style furniture in India.

- Hermosa Design targeted Tier 2 cities with high-quality, competitively priced designer furniture.

- The company quickly gained popularity, challenging established brands, and within five years reached an annual revenue of over Rs 13 crore and a valuation of Rs 150 crore.

- In 2020, Hermosa Design partnered with renowned interior designer Gauri Khan to launch a product line, a significant milestone that coincided with the company's second anniversary.

Adobe joins hands with education ministry to boost digital literacy in India

- Adobe partners with India's Education Ministry to introduce an Adobe Express-based curriculum for 2 crore students and 5 lakh educators by 2027.

- The announcement was made at the G-20 Leaders' Summit in India, highlighting the importance of digital education.

- K-12 schools in India to receive free access to Adobe Express Premium, promoting digital literacy and creativity.

- The curriculum covers topics such as creativity, AI, design, and animation, and educators earn Adobe Creative Educators certification. Adobe is also collaborating with AICTE to support higher-education faculty.

Read Full Article

20 Likes

Minis

1y

1.5k

Image Credit: Minis

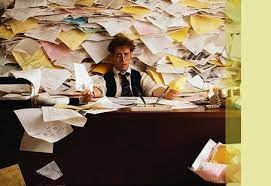

Why We Glorify Overwork and Refuse to Rest

- Value in Busyness: We link our worth to work, preferring busyness over idleness in an effort to prove ourselves to others and to ourselves.

- Fear of Underwhelm: We avoid boredom and negative emotions by immersing ourselves in work, even unfulfilling work, to escape feelings of inadequacy.

- Hidden Collusion with Overwork: Society and employers promote overwork, leading to silent acceptance and perpetuation of workaholism.

Man earns Rs 1 crore in less than a month by teaching people how to use AI

- Ole Lehmann, a 32-year-old entrepreneur from Cyprus, turned to AI education after suffering crypto losses in the collapse of the FTX exchange, and earned around Rs 1 crore within a month.

- After the crypto crash, Lehmann discovered ChatGPT, OpenAI's AI chatbot, which sparked his interest in AI and prompted him to explore new career avenues.

- He began sharing AI-related content on his Twitter account, focusing on how solo entrepreneurs can use AI tools to maximize productivity.

- As his follower count grew rapidly, Lehmann created the "AI Audience Accelerator" course, which attracted over 1,000 orders within a month and generated gross revenue of around Rs 1.4 crore (converted to Indian currency).

23% annual jump in hiring ahead of festive season, manufacturing sector saw 245% spike

- Quess Corp reports a 23% year-on-year increase in staffing demand (April to August 2023) ahead of India's festive season.

- Sectors such as BFSI (banking, financial services and insurance), M&I, retail, and telecom are hiring 32,000 workers to meet festive demand.

- Roles such as production trainees, collection officers, and business development executives are in high demand.

- Workforce demand grew 245% in manufacturing, 27% in BFSI, and 14% in telecom. Metropolitan cities lead hiring, with emerging roles in focus.

Wipro Allots Over 4 lakh Shares To Employees As Stock Options

- Wipro allocates 4,75,895 equity shares as stock options to employees under its ADS Restricted Stock Unit Plan and employee stock option plans (ESOPs).

- In July, Wipro entered a partnership with Pure Storage to advance sustainable technology in data storage and data centers, aiming to help clients reduce the environmental impact of their data centers and achieve greater efficiency.

- Wipro's recent financial results: gross revenue grew 6.0% YoY to ₹228.3 billion ($2.8 billion), and IT Services segment revenue rose 0.8% YoY to $2,778.5 million.

- Wipro's shares traded at ₹413.85, down 0.61% on Wednesday at 10:19 am.

5 Ways You Need to Sell Yourself in Every Job Interview

- Be confidently authentic: Showcase your strengths without arrogance.

- Align with job requirements: Tailor your selling points to match the role.

- Prepare key speaking points: Practice articulating your strengths effectively.

- Seize opportunities to pitch: Look for moments to present your accomplishments.

- Seek feedback, push boundaries: Get input from others and be bolder in presenting your achievements.

Zerodha's Nithin Kamath warns people against illegal predatory loan apps

- Zerodha founder Nithin Kamath warns against using illegal loan apps, citing a rise in suicides due to harassment by loan agents.

- Unregulated predatory loan apps charge exorbitant interest rates of 100-200% and gain unauthorized access to users' contacts and photos.

- Kamath urges victims to report harassment to the National Cyber Crime Reporting Portal (NCCRP) and points out that laws exist to protect individuals from such harassment.

- The recent suicide of 22-year-old Tejas Nair, after he faced blackmail and threats from a Chinese loan app, underscores the severity of the issue.

Amid growing concerns, Byju's CEO assures employees of a stronger comeback

- Byju Raveendran expressed optimism about the future of BYJU'S during a townhall, stating that the best is yet to come for the company.

- Three board members resigned, followed by the resignation of Deloitte as auditor. BDO (MSKA & Associates) was appointed as the new statutory auditor, with a focus on efficient and timely audits.

- Raveendran said discussions are ongoing to resolve the term loan B (TLB) dispute and that he is confident of a positive outcome. He also emphasized that the edtech industry remains viable beyond the pandemic and highlighted the progress BYJU'S has made amid global challenges.

India has opportunity to leapfrog into AI, generative AI areas: Salesforce

- Arundhati Bhattacharya, CEO and Chairperson of Salesforce India, believes that India has the opportunity to make significant progress in the fields of Artificial Intelligence (AI) and generative AI.

- Bhattacharya highlights the advantage of India's unique digital infrastructure, such as the India Stack, which includes Aadhaar and UPI, systems not commonly available in other countries. This infrastructure provides a strong foundation for leveraging AI technologies.

- Bhattacharya also addresses concerns about AI causing job losses, emphasizing that while the nature of jobs may change, India has the potential to adapt and capitalize on AI advancements to create new employment opportunities.

Indian news outlets run fake AI image of Pentagon fire as ‘breaking news’

- Several Indian media outlets, including News18 MP, First India News, Times Now Navbharat, and Zee News, fell for a social media hoax claiming that an explosion had occurred near the Pentagon.

- The hoax message originated from an AI-generated image of an explosion in front of the Pentagon, which went viral on social media. The image was shared by Twitter user @WhaleChart with the caption "BREAKING: Explosion near Pentagon."

- Russian news outlet RT initially picked up the hoax message but later deleted it. However, Indian media outlets continued to share the false claim as a real news report without verifying its credibility.

Bain & Company announces services alliance with OpenAI

- Bain & Company has announced that it will use OpenAI's platforms and tools to assist clients and maximize business potential.

- Coca-Cola is the first company to engage with the new alliance. Over the past year, Bain has already deployed OpenAI technologies to improve efficiency.

- The partnership between Bain and OpenAI is expected to bring advanced artificial intelligence capabilities to Bain's management consulting services, providing its clients with new insights and more efficient ways of working.
