Data Science News

Analyticsindiamag

AIM Print May 2025 Edition

- The May 2025 edition of AIM Print focuses on India's advancements in AI, showcasing how factories, launchpads, studios, and code repositories are shaping the country's future economy.

- Key highlights include the rise of private space-tech startups in India, advancements in defense manufacturing, voice synthesis technology development, and challenges in agentic coding.

- The edition also covers enterprise guardrails for AI models, big-tech strategies, capital flows into AI startups, the importance of talent pipelines, and a humorous take on AI in a comic strip and a quiz.

- Overall, the edition emphasizes India's shift towards competing on intellectual property, vertical integration, and time-to-market rather than just cost or headcount.

Analyticsindiamag

UIDAI’s Face Authentication Test for NEET Raises Privacy Concerns

- UIDAI conducted a successful face authentication test during NEET 2025 in Delhi in collaboration with NIC and NTA to enhance exam security.

- The tests aimed to evaluate the feasibility and effectiveness of verifying candidate identities against the Aadhaar biometric database, and reported high accuracy and efficiency.

- While the initiative was presented as secure and student-friendly, it raised concerns about potential privacy risks for students under 18.

- The NMC (National Medical Commission) has also moved to face-based biometric attendance in medical colleges, raising privacy concerns given the lack of regulations covering data breaches.

Analyticsindiamag

Apple Working on New ‘Specialised’ Chips: Report

- Apple is developing new chips for smart wearable glasses, powerful Macs, and AI servers.

- Mass production of these chips is expected to start by the end of next year or in 2027.

- The chips for smart glasses are derived from Apple Watch processors, designed for energy efficiency.

- Apple also aims to enhance Apple Intelligence through new AI server chips and third-party AI model integrations.

Analyticsindiamag

Nutanix and Pure Storage Partner to Deliver Integrated Solution for Critical Workloads

- At the NEXT Conference in Washington, DC, Nutanix and Pure Storage announced a partnership to deliver a deeply integrated infrastructure solution for critical workloads, including AI.

- The solution combines Nutanix Cloud Platform with Pure Storage's FlashArray, aiming to modernize and efficiently manage virtual workloads in an evolving virtualization landscape.

- The solution, still under development, is expected to enter early access by summer 2025 and reach general availability by the end of the year through Nutanix and Pure Storage channel partners.

- The collaboration will give companies with storage-rich environments more modernization options and expand the portfolio of FlashStack designs to include Nutanix, supported by server hardware partners such as Cisco, Dell, HPE, Lenovo, and Supermicro.

Analyticsindiamag

BFSI GCCs Are Hungry for Deep-Tech Talent

- BFSI GCCs in India are incorporating advanced technologies like AI-ML, data analytics, and automation to redefine financial services delivery.

- Real-time analytics adoption in commercial insurance is enhancing portfolio analysis, risk engineering, and catastrophe modeling.

- There is a rising emphasis on user experience (UX) for efficient and user-friendly digital solutions.

- Bengaluru, Delhi/NCR, Hyderabad, and Chennai are leading hubs for BFSI GCCs in India.

- India's BFSI GCC ecosystem is thriving and accounts for a significant share of the talent employed in GCCs.

- Anaptyss Inc focuses on AI-led innovation, with a center of excellence (CoE) that builds digital solutions to address business challenges.

- GCCs are enabling insurers to transform operations with AI, blockchain, and predictive analytics.

- BFSI GCCs are actively hiring deep-tech talent like AI engineers, data scientists, and NLP experts.

- There is also demand for expertise in model risk management and fraud analytics, and for programming skills in Python and R.

- Deep tech is aiding productivity in BFSI, especially in tasks like data analysis and client meeting preparation.

Medium

Turning Ambiguity into Impact: A Quant’s Guide to Leading Through Complexity

- The article describes the challenges of a fast-moving product pilot whose goal was to drive 'meaningful' business outcomes, with what counted as meaningful left open to interpretation.

- The team's definition of success conflicted with what the broader organization expected, which led to several rounds of stakeholder discussion and metric clarification to ensure alignment and credibility.

- Sharing the insights initially caused discomfort and frustration, but reframing the findings led to a proposed design fix, grounded in behavioral trends and key design metrics, that improved the user experience without undoing the whole product.

- The article emphasizes the importance of not only finding the numbers but also ensuring agreement on their interpretation to drive impactful decision-making and product improvement.

Towards Data Science

Model Compression: Make Your Machine Learning Models Lighter and Faster

- Model compression has become essential due to the increasing size of models like LLMs, and this article explores four key techniques: pruning, quantization, low-rank factorization, and knowledge distillation.

- Pruning involves removing less important weights from a network, either randomly or based on specific criteria, to make the model smaller.

- Quantization reduces the precision of parameters by converting high-precision values to lower-precision formats, such as 16-bit floating-point or 8-bit integers, leading to memory savings.

- Low-rank factorization exploits redundancy in weight matrices to represent them in a lower-dimensional space, reducing the number of parameters and enhancing efficiency.

- Knowledge distillation transfers knowledge from a complex 'teacher' model to a smaller 'student' model to mimic the behavior and performance of the teacher, enabling efficient learning.

- Each technique has its own advantages, and the article walks through how to implement each one in PyTorch, along with practical considerations for applying it.

- The article also touches on advanced concepts like the Lottery Ticket Hypothesis in pruning and LoRA for efficient adaptation of large language models during fine-tuning.

- Overall, model compression is crucial for deploying efficient machine learning models, and combining multiple techniques can further improve efficiency and ease deployment.

- Experimenting with and customizing these methods can lead to creative ways of improving model efficiency and deployment.

- The article provides code snippets and encourages readers to explore the GitHub repository for in-depth comparisons and implementation of compression methods.

- Understanding and mastering model compression techniques is vital for data scientists and machine learning practitioners working with large models in various applications.
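
The pruning bullet above can be illustrated with criterion-based (magnitude) pruning: zero out the fraction of weights with the smallest absolute values. This is a minimal plain-Python sketch over a flat list; the function name `magnitude_prune`, the sample weights, and the 50% sparsity level are illustrative assumptions, not the article's PyTorch code (PyTorch provides comparable utilities in `torch.nn.utils.prune`).

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero.

    `sparsity` is the fraction of weights to remove (0.0 keeps everything).
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

In practice the pruned positions are usually tracked with a mask so they stay at zero if the model is fine-tuned afterwards.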
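
Quantization can be sketched as symmetric per-tensor int8 quantization: choose one scale so the largest magnitude maps to 127, round every value to an integer code, and multiply back to recover approximate floats. The function names and sample values are hypothetical; production toolkits (including PyTorch's quantization APIs) add refinements such as per-channel scales, zero points, and calibration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to int8 codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest code and clip to the int8 range used here.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


values = [0.5, -1.27, 0.003, 1.0]
codes, scale = quantize_int8(values)
restored = dequantize(codes, scale)
print(codes)  # [50, -127, 0, 100]
```

Storing int8 codes instead of 32-bit floats cuts memory for those values by 4x (plus one scale per tensor), at the cost of a rounding error of at most half a quantization step per value.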
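
The savings from low-rank factorization come from simple arithmetic: an m × n weight matrix W has mn parameters, while factors U (m × r) and V (r × n) with W ≈ UV have r(m + n). The sketch below computes both counts for a hypothetical 4096 × 4096 layer at rank 64, and shows that a matrix which truly has rank 1 is reproduced exactly from two vectors. The sizes and values are illustrative assumptions.

```python
def low_rank_params(m, n, r):
    """Parameter counts for a dense m x n matrix vs. factors U (m x r) and V (r x n)."""
    dense = m * n
    factored = r * (m + n)
    return dense, factored


def outer(u, v):
    """Rebuild an m x n matrix from a column vector u and a row vector v (rank 1)."""
    return [[ui * vj for vj in v] for ui in u]


dense, factored = low_rank_params(4096, 4096, 64)
print(dense, factored, dense / factored)  # 16777216 524288 32.0

# Every row of W is a multiple of [1, 2, 3], so W has rank 1:
# 3 + 3 = 6 stored numbers reproduce all 9 entries exactly.
u, v = [1, 2, 4], [1, 2, 3]
W = [[1, 2, 3], [2, 4, 6], [4, 8, 12]]
print(outer(u, v) == W)  # True
```

Factorization only saves parameters when r < mn / (m + n). The same arithmetic is why LoRA, which the article mentions, trains a small low-rank update to a frozen weight matrix instead of the full matrix.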
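
Knowledge distillation is commonly implemented with a soft-target loss: the student is trained to match the teacher's temperature-softened output distribution (a KL-divergence term scaled by T²) while also fitting the true label. This plain-Python sketch scores a single example; the temperature of 4, the 50/50 weighting `alpha`, and the logits are illustrative assumptions, not values from the article.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions, assuming q > 0 wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Blend of soft-target KL (scaled by T^2) and hard-label cross-entropy."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    soft_loss = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard_loss = -math.log(softmax(student_logits)[label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]  # close to the teacher
poor_student = [0.5, 3.0, 1.0]  # favors the wrong class
print(distillation_loss(good_student, teacher, label=0)
      < distillation_loss(poor_student, teacher, label=0))  # True
```

In a real training loop the same quantities would be computed on batches of logits, with gradients flowing only into the student while the teacher stays frozen.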

Towards Data Science

ACP: The Internet Protocol for AI Agents

- ACP (Agent Communication Protocol) enables AI agents to collaborate across teams, frameworks, and organizations, fostering interoperability and scalability.

- It is an open-source standard facilitating communication among AI agents, with the latest version released recently.

- Key features of ACP include REST-based communication, no requirement for specialized SDKs, and offline discovery.

- ACP supports asynchronous communication by default, with synchronous requests also being supported.

- ACP supports agent orchestration across diverse architectures, but it does not manage workflows or deployments itself.

- Challenges addressed by ACP include framework diversity, custom integrations, development scalability, and cross-organization considerations.

- A real-world example showcases how ACP facilitates seamless communication between manufacturing and logistics agents across organizations.

- Creating an ACP-compatible agent takes minimal code: enough to make it discoverable, process requests, and integrate with other ACP systems.

- Comparison with other protocols like MCP and A2A highlights ACP's emphasis on agent-to-agent communication, flexibility, and vendor-neutrality.

- The roadmap for ACP focuses on areas such as identity federation, access delegation, multi-registry support, agent sharing, and deployment simplification.

- ACP's developers encourage community contributions to shape the standard and improve agent interoperability.
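
To make the "REST-based, no specialized SDK" point concrete, here is a minimal sketch that builds an ACP-style run request using only Python's standard library. The server URL, the agent name `echo`, the `/runs` path, and the payload field names (`agent_name`, `mode`, `input`, `parts`, `content_type`) are illustrative assumptions that may not match the current ACP specification; check them against the spec before relying on them. The request is constructed but not sent.

```python
import json
import urllib.request

# Assumed values for illustration: a local ACP server and a hypothetical agent name.
ACP_SERVER = "http://localhost:8000"
AGENT_NAME = "echo"


def build_run_request(agent_name, text, mode="sync"):
    """Build (but do not send) an HTTP POST asking an agent to process one text message.

    The /runs path and payload fields are assumptions, not confirmed ACP API details.
    """
    payload = {
        "agent_name": agent_name,
        "mode": mode,  # assumed field selecting synchronous vs. asynchronous handling
        "input": [{"parts": [{"content": text, "content_type": "text/plain"}]}],
    }
    return urllib.request.Request(
        url=f"{ACP_SERVER}/runs",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_run_request(AGENT_NAME, "Where is shipment 42?")
print(request.get_method(), request.full_url)  # POST http://localhost:8000/runs
# Sending it would be urllib.request.urlopen(request), which needs a running server.
```

Because the exchange is plain HTTP and JSON, any language with an HTTP client could build the same request, which is the interoperability argument the article makes.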

Towards Data Science

The Dangers of Deceptive Data Part 2–Base Proportions and Bad Statistics

- The article is a follow-up to a previous piece on deceptive data and explores statistical concepts leading to data misinterpretation.

- It emphasizes the distinction between correlation and causation, highlighting the need for controlled trials to establish causation.

- An example involving cigarette consumption and life expectancy illustrates the correlation vs. causation fallacy.

- The base rate fallacy is discussed using an example from a medical school scenario to demonstrate the importance of base proportions in statistics.

- The article mentions the Datasaurus Dozen dataset, showing how summary statistics alone may not reveal the full picture of the data.

- It stresses the significance of understanding uncertainty and explains how error bars represent uncertainty, not errors in data.

- The article cautions against distrusting correct data because its uncertainty has been misinterpreted, and discusses the societal implications of such misunderstandings.

- Key takeaways include being cautious of deceptive data, considering base rates, exploring data beyond summary statistics, and interpreting uncertainty accurately.

- Understanding these concepts equips individuals to approach data science problems effectively and make informed decisions.

- The article concludes by encouraging readers to stay vigilant against deceptive data and misinformation.
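
The base-rate point is easiest to see with Bayes' theorem. The sketch below uses a generic screening-test setup with made-up numbers (1% prevalence, 90% sensitivity, 9% false-positive rate) rather than the article's medical-school example; the function name is hypothetical.

```python
def posterior_positive(base_rate, sensitivity, false_positive_rate):
    """P(condition | positive test) via Bayes' theorem."""
    true_pos = base_rate * sensitivity                 # sick and test positive
    false_pos = (1 - base_rate) * false_positive_rate  # healthy but test positive
    return true_pos / (true_pos + false_pos)


# Hypothetical numbers: 1% prevalence, 90% sensitivity, 9% false-positive rate.
p = posterior_positive(0.01, 0.90, 0.09)
print(round(p, 3))  # 0.092
```

Even though the test catches 90% of cases, only about 9% of positive results are true positives: the condition is rare, so false positives from the much larger healthy group outnumber the true ones.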
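
A one-dimensional toy version of the Datasaurus idea: the two hypothetical samples below share the same mean and standard deviation, yet a crude text histogram shows they look nothing alike. The numbers are invented for illustration and are not taken from the Datasaurus Dozen dataset.

```python
import statistics
from collections import Counter

# Hypothetical samples: same mean (5) and population standard deviation (1.0).
a = [4, 4, 4, 4, 6, 6, 6, 6]  # two tight clusters, nothing at the mean
b = [3, 5, 5, 5, 5, 5, 5, 7]  # a central peak plus two outliers

for name, data in (("a", a), ("b", b)):
    print(f"{name}: mean={statistics.mean(data)}, stdev={statistics.pstdev(data):.2f}")
    # The histogram exposes the different shapes that the summary hides.
    for value, count in sorted(Counter(data).items()):
        print(f"  {value}: {'#' * count}")
```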

Medium

370

Image Credit: Medium

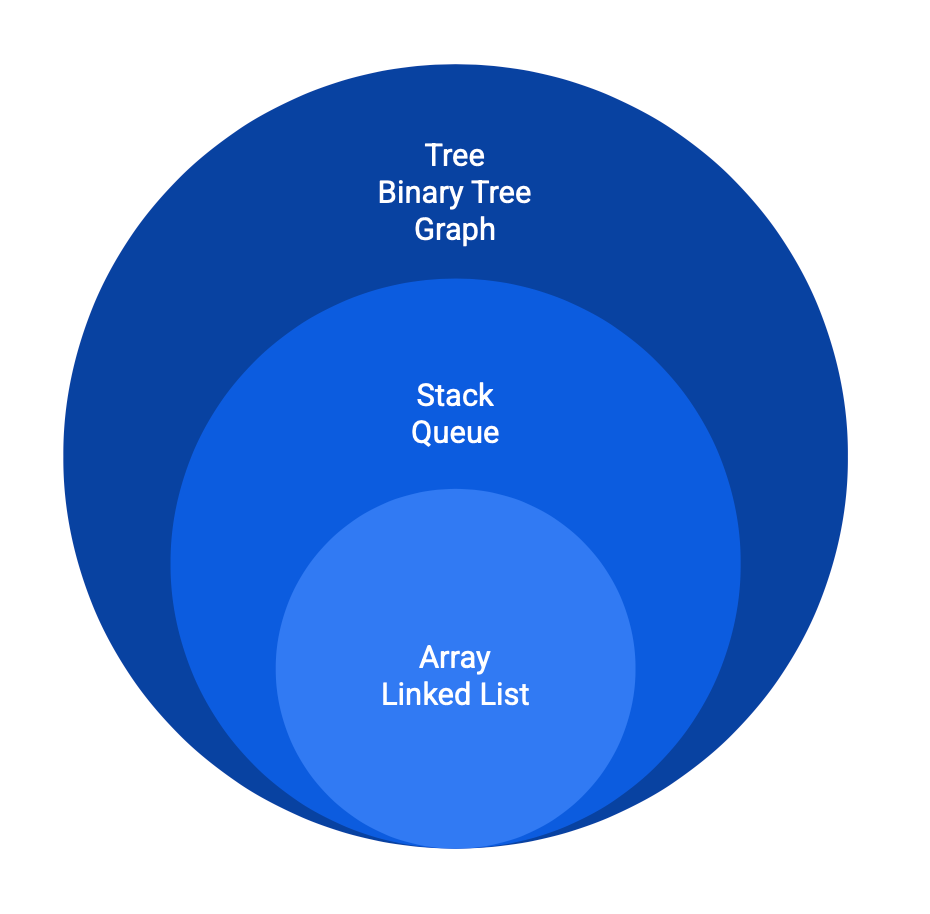

[Data Structures in C++] Introduction (2025)

- The article introduces a series on data structures in C++, aimed at building a strong foundation in low-level concepts.

- The series has a steep learning curve and assumes prior knowledge of basic C++ syntax, pointers, pass-by-reference, and similar topics.

- The learning roadmap for data structures typically starts with arrays and linked lists as foundational concepts.

- The next article in the series will cover arrays, and readers are encouraged to follow along.

Medium

Bazaar: Conception of a mind: Everything is just a beginning, what will tomorrow be?

- The article discusses the concept of transformation and the relationship between energy, truth, and action.

- Energy is essential for life: everything is conditioned by energy, and living consumes it.

- Transformation involves simplifying things to their essence, as essence is the key to all transformations.

- The article delves into the idea of finding points of transformation, both internal and external, in actions.

- Understanding truth is presented as a high stage of causality, one that requires effort and work toward perfection.

- The article also explores the consequences of actions, judgment, consciousness, and self-reflection.

- It discusses the impact of emotions on actions, consciousness, and judgment, highlighting the importance of self-acceptance and self-judgment.

- The article touches upon the idea of continuous loops of actions and transformations, emphasizing the importance of finding truths to act and cancel.

- Transformation is seen as leading to action, and the process involves understanding the essence and logic of actions.

- The article concludes by discussing the complexity of experiences, knowledge, and the transformation of truths through actions.

- It also reflects on the interaction between individuals, self-awareness, and the role of truth in actions and transformations.

Medium

ML Foundations for AI Engineers

- Intelligence boils down to understanding how the world works, requiring an internal model of the world for both humans and computers.

- Humans develop world models by learning from others and experiences, and computers learn similarly through machine learning.

- Traditional software development involves explicit instructions, while machine learning relies on curated examples for training models.

- Machine learning consists of training (learning from curated examples) and inference (applying the model to make predictions).

- Deep learning and reinforcement learning are special types of machine learning that enable computers to learn about the world.

- Deep learning trains neural networks to learn the optimal features for a task on their own, overcoming a key limitation of traditional models, which depend on hand-crafted features.

- Training deep neural networks involves complex non-linearities and requires algorithms like gradient descent for parameter updates.

- Reinforcement learning allows models to learn through trial and error, with models improving based on rewards rather than explicit examples.

- Good data quality and quantity are crucial for training machine learning models, as bad data can hinder model performance.

- Machine learning provides a way for computers to align models to reality using data and mathematics, revolutionizing how tasks are learned and performed.
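
To make the training/inference split and the gradient-descent bullet concrete, here is a minimal sketch that fits a straight line to a few hypothetical examples using batch gradient descent on mean squared error, then uses the learned parameters for inference. The learning rate, step count, and data are illustrative choices; deep networks apply the same update rule to far more parameters, with gradients computed by backpropagation.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ~ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Training: compare current predictions with the curated examples.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step each parameter against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical examples generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = fit_line(xs, ys)

# Inference: apply the learned parameters to an unseen input.
print(round(w, 3), round(b, 3), round(w * 10 + b, 2))  # 2.0 1.0 21.0
```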
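
Learning by trial and error from rewards can be illustrated with a multi-armed bandit, the simplest reinforcement-learning setting (no states, only repeated choices). The epsilon-greedy agent below is a standard textbook strategy rather than something taken from the article; the arm reward means, noise level, and epsilon are hypothetical.

```python
import random


def epsilon_greedy_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Learn arm values by trial and error: explore with prob. epsilon, else exploit."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # running average reward per arm
    counts = [0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random arm
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit best guess
        reward = rng.gauss(true_means[arm], 0.1)  # noisy reward signal
        counts[arm] += 1
        # Incremental mean update: no labeled answer, only the reward.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print([round(e, 2) for e in estimates], counts)
```

Over time the agent pulls the best arm most often, having learned its value from rewards rather than from curated examples.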

VentureBeat

You can now fine-tune your enterprise’s own version of OpenAI’s o4-mini reasoning model with reinforcement learning

- OpenAI has announced that third-party software developers can now access reinforcement fine-tuning (RFT) for its o4-mini reasoning model.

- RFT allows developers to customize a private version of the model to better fit their enterprise's unique needs through OpenAI's platform dashboard.

- The fine-tuned model can then be deployed via OpenAI's API for internal tasks such as answering company-specific questions or generating communications in the company's voice.

- OpenAI positions RFT as a more expressive and controllable way to adapt language models to real-world use cases, enabling a new level of customization in model deployment.

VentureBeat

How The Ottawa Hospital uses AI ambient voice capture to reduce physician burnout by 70%, achieve 97% patient satisfaction

- The Ottawa Hospital (TOH) implemented Microsoft's DAX Copilot to address healthcare challenges by saving time and reducing burnout.

- Early results show a 70% decrease in clinician burnout, with seven minutes saved per encounter and 93% patient satisfaction.

- DAX Copilot integrates with Epic EHR, capturing conversations to generate draft clinical notes in real time.

- Physicians can start a recording on a mobile app, allowing for hands-free documentation during patient interactions.

- Microsoft continuously refines the underlying large language models for greater accuracy and specialty-specific optimization.

- Physicians typically spend 10 hours per week on administrative tasks, which cuts into their efficiency in patient care.

- TOH employs a robust evaluation plan for DAX Copilot, monitoring impact through feedback and data analysis.

- The tool reduces after-hours documentation work, easing physicians' cognitive load and increasing patient throughput.

- Patients consent before recordings, access notes in their patient portal, and report positive experiences with the AI tool.

- Future applications include biomarker detection, addressing social determinants of health, and streamlining post-visit actions.

Towards Data Science

The Shadow Side of AutoML: When No-Code Tools Hurt More Than Help

- AutoML simplifies machine learning by automating modeling processes, but it can lead to issues like hidden architectural risks, lack of visibility, and system design problems.

- AutoML tools make it easy to deploy models without writing code, but they can result in unintended consequences when critical issues arise.

- The lack of transparency and oversight in AutoML pipelines can cause subtle errors in behavior and hinder debugging efforts.

- Traditional ML pipelines involve intentional decisions by data scientists, which are visible and debuggable, unlike AutoML systems that bury decisions in opaque structures.

- AutoML platforms often disregard MLOps best practices such as versioning, reproducibility, and validation gates, which can lead to violations at the infrastructure level.

- AutoML may encourage score-chasing over validation, where experimentation is prioritized without rigorous testing and model understanding, leading to deployment of flawed models.

- A lack of observability in AutoML systems can create monitoring gaps that affect critical applications such as healthcare, automation, and fraud prevention.

- While AutoML can be effective when properly scoped and governed, it requires version control, data verification, and continuous monitoring for long-term reliability.

- The shadow side of AutoML lies in its tendency to create systems lacking accountability, reproducibility, and monitoring, highlighting the importance of human-governed architecture.

- AutoML should be viewed as a component rather than a standalone solution, emphasizing the need for control and oversight in machine learning workflows.
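
As a rough illustration of the human-governed controls the article says AutoML platforms tend to skip, here is a minimal sketch of a promotion gate: it fingerprints the training data for reproducibility, requires a minimum validation score, and records every decision in a registry for versioning. All names (`Candidate`, `promotion_gate`, the registry fields, the 0.85 threshold, the sample rows) are hypothetical; a real setup would typically rely on an experiment tracker or model registry.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Candidate:
    """A trained model candidate as an AutoML run might report it (hypothetical fields)."""
    name: str
    validation_score: float
    data_fingerprint: str
    params: dict


def fingerprint(rows):
    """Deterministic hash of the training data so a run can be reproduced and audited."""
    blob = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


def promotion_gate(candidate, expected_fingerprint, min_score, registry):
    """Log the candidate, then approve it only if data and score checks both pass."""
    reasons = []
    if candidate.data_fingerprint != expected_fingerprint:
        reasons.append("trained on unverified data")
    if candidate.validation_score < min_score:
        reasons.append(f"validation score {candidate.validation_score:.2f} < {min_score:.2f}")
    # Versioning: every decision is recorded, approved or not.
    registry.append({"name": candidate.name, "params": candidate.params,
                     "data": candidate.data_fingerprint, "approved": not reasons,
                     "reasons": reasons})
    return not reasons, reasons


training_rows = [{"amount": 120.0, "fraud": 0}, {"amount": 9800.0, "fraud": 1}]
expected = fingerprint(training_rows)
registry = []

ok, why = promotion_gate(Candidate("gbm-v1", 0.91, expected, {"depth": 6}),
                         expected, 0.85, registry)
print(ok, why)  # True []
ok, why = promotion_gate(Candidate("gbm-v2", 0.97, "stale-hash", {"depth": 12}),
                         expected, 0.85, registry)
print(ok, why)  # False ['trained on unverified data']
```

Note that the higher-scoring second candidate is rejected: the gate checks where a model came from, not just its score, which is the guard against score-chasing the article describes.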
