Data Science News

Medium

The Ultimate Guide to Reinforcement Learning in Efficient Machine Learning

- The article presents reinforcement learning (RL) as a driver of efficient machine learning, citing recent algorithms and real-world applications, and expects it to shape AI in 2025.

- RL enables machines to learn by trial and error, similar to how humans learn from experience, without direct instructions. The RL agent interacts with its environment, receives rewards or penalties, and learns to maximize success.

- RL is crucial in efficient machine learning as it operates without requiring vast amounts of labeled data. It excels in dynamic environments where decisions are made incrementally, making it ideal for real-world scenarios with unpredictability and noisy data.
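
A minimal tabular Q-learning sketch makes the trial-and-error loop described above concrete. The five-state corridor environment, its rewards, and the hyperparameters are hypothetical choices for illustration, not taken from the article: the agent starts at the left end, receives +1 for reaching the right end and a small penalty for every other step, and learns from those signals alone which direction to move.

```python
import random

# Hypothetical toy environment: states 0..4 on a line. Reaching state 4 ends the
# episode with reward +1; every other step costs a small penalty of -0.01.
N_STATES = 5
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, -0.01, False


def q_learning(episodes=1000, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: estimate action values purely from rewards and penalties."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Temporal-difference update toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best action index for each non-terminal state."""
    return [max(range(2), key=lambda i: q[s][i]) for s in range(N_STATES - 1)]
```

After training, `greedy_policy(q_learning())` should choose "move right" (index 1) in every state. Because the step penalty is negative, the zero-initialized Q-table is optimistic, which nudges the agent to try untested actions early on.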

Medium

153

Image Credit: Medium

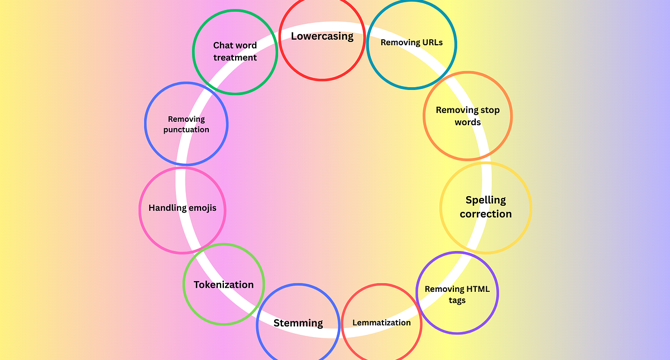

Why Most NLP Projects Fail: A Beginner’s Guide to Text Preprocessing

- Text preprocessing is a crucial step in NLP projects to ensure model performance.

- Lowercasing text helps maintain consistency and reduce vocabulary size.

- Removing HTML tags, URLs, punctuation, and informal words enhances data quality.

- Spell correction tools like TextBlob are used to rectify common mistakes.

- Removing stop words can speed up processing and reduce the number of tokens.

- Emojis should be handled according to the task, since keeping or removing them can change sentiment analysis results.

- Tokenization splits text into the units (tokens) a model processes, so it needs to be accurate.

- Tokenization can be done with split(), regular expressions, or libraries such as NLTK and spaCy.

- Stemming helps reduce words to their root form, useful for information retrieval systems.

- Porter Stemmer and Snowball Stemmer from NLTK are commonly used for stemming.

- Lemmatization reduces inflected words to their dictionary base form (lemma), for example using WordNet.
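
Several of the steps above can be chained into one function using only Python's standard library. This is a minimal sketch: the stop-word list is a small hand-picked assumption (NLTK and spaCy ship full lists), the regex tokenizer stands in for the library tokenizers, and spell correction, stemming, and lemmatization are left out. Note that the `\w+` pattern silently drops emojis, which may not suit a sentiment task.

```python
import re
import string

# Illustrative stop-word list; real projects typically use NLTK's or spaCy's lists.
STOP_WORDS = {"a", "an", "the", "is", "are", "and", "or", "to", "of", "in", "this", "it"}

HTML_TAG = re.compile(r"<[^>]+>")
URL = re.compile(r"https?://\S+|www\.\S+")
TOKEN = re.compile(r"\w+")


def preprocess(text, remove_stop_words=True):
    """Lowercase, strip HTML tags and URLs, drop punctuation, tokenize, remove stop words."""
    text = text.lower()                    # consistent casing, smaller vocabulary
    text = HTML_TAG.sub(" ", text)         # remove HTML tags
    text = URL.sub(" ", text)              # remove URLs
    text = text.translate(str.maketrans("", "", string.punctuation))  # drop punctuation
    tokens = TOKEN.findall(text)           # regex tokenization (emojis are not \w)
    if remove_stop_words:
        tokens = [t for t in tokens if t not in STOP_WORDS]
    return tokens
```

For example, `preprocess("<p>This is a GREAT product!</p> See https://example.com for more.")` keeps `great`, `product`, `see`, `for`, and `more`. Stemming could then be applied per token with NLTK's `PorterStemmer().stem(token)`.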

Towards Data Science

Regression Discontinuity Design: How It Works and When to Use It

- The article presents Regression Discontinuity Design (RDD) as a method for causal inference when traditional experiments are not feasible.

- RDD exploits a cutoff on a 'running' variable that determines treatment and estimates the causal effect from the jump in outcomes at that cutoff; this requires that outcomes would otherwise change continuously across it.

- The article explains the core assumption of continuity using examples like legal drinking age laws.

- It emphasizes that the validity of RDD depends on this continuity assumption holding.

- The article also discusses how instrumental variables and the front-door criterion relate to RDD as ways of identifying causal effects.

- A practical application is illustrated with e-commerce listing positions and their effect on listing performance.

- Modeling choices in RDD, such as parametric vs. non-parametric approaches, polynomial degree, and bandwidth, are discussed.

- Placebo tests are highlighted as a way to validate results, alongside checking the continuity assumption with density continuity tests on the running variable.

- The article concludes by stressing the careful application of RDD and provides additional resources for further learning.
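
A minimal sharp-RDD sketch, assuming simulated data with a known jump of 2.0 at the cutoff, shows the local linear (non-parametric) approach and a placebo test. The bandwidth of 0.5, the uniform kernel, and the function name `local_linear_rdd` are illustrative assumptions; applied work usually picks the bandwidth with a data-driven rule and reports standard errors.

```python
import numpy as np


def local_linear_rdd(x, y, cutoff=0.0, bandwidth=0.5):
    """Sharp RDD estimate: difference of local linear fits at the cutoff.

    Fits y ~ a + b * (x - cutoff) separately on each side, using only points
    within `bandwidth` of the cutoff (uniform kernel), and returns the jump
    between the two intercepts.
    """
    xc = x - cutoff
    left = (xc < 0) & (xc >= -bandwidth)
    right = (xc >= 0) & (xc <= bandwidth)
    # np.polyfit with deg=1 returns [slope, intercept]; the intercept is the fit at the cutoff.
    _, a_left = np.polyfit(xc[left], y[left], deg=1)
    _, a_right = np.polyfit(xc[right], y[right], deg=1)
    return a_right - a_left


# Simulated data (an assumption for illustration): true jump of 2.0 at x = 0.
rng = np.random.default_rng(42)
x = rng.uniform(-1, 1, size=5_000)
treated = x >= 0
y = 1.0 + 0.8 * x + 2.0 * treated + rng.normal(0, 0.5, size=x.size)

estimate = local_linear_rdd(x, y, cutoff=0.0, bandwidth=0.5)
# Placebo test: a fake cutoff inside the untreated region should show no jump.
placebo = local_linear_rdd(x[~treated], y[~treated], cutoff=-0.5, bandwidth=0.4)
```

`estimate` should land close to 2.0, while `placebo` should be close to zero; a clear jump at the fake cutoff would suggest that the model specification or the continuity assumption is off.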

Towards Data Science

We Need a Fourth Law of Robotics in the Age of AI

- Artificial Intelligence has brought numerous advancements but also ethical and social challenges, as tragic incidents in which chatbots were linked to harmful behavior have shown.

- Isaac Asimov's Three Laws of Robotics were crafted for physical robots, but AI now exists predominantly in software, posing new risks like emotional manipulation through human-like interactions.

- The article calls for a Fourth Law of Robotics to address AI-driven deception: AI must not pretend to be human in order to mislead or manipulate people.

- This proposed law is crucial in combating threats like deepfakes and realistic chatbots that deceive and emotionally harm individuals, highlighting the need for robust technical and regulatory measures.

- Implementing this Fourth Law involves technical solutions like content watermarking, detection algorithms for deepfakes, and stringent transparency standards for AI deployment, along with regulatory enforcement.

- Education on AI capabilities and risks is essential, emphasizing media literacy and digital hygiene to empower individuals to recognize and address AI-driven deception.

- The proposed Fourth Law aims to maintain trust in digital interactions by preventing AI from impersonating humans, ensuring innovation within a framework that prioritizes collective well-being.

- The need for this law is underscored by past tragedies involving AI systems, signaling the importance of establishing clear principles to protect against deceit, manipulation, and psychological exploitation.

- Establishing guidelines to prevent AI from impersonating humans will lead to a future where AI systems serve humanity ethically, promoting trust, transparency, and respect.

Towards Data Science

Retrieval Augmented Classification: Improving Text Classification with External Knowledge

- Text Classification is crucial for various NLP applications, including spam filtering and chatbot categorization.

- Using Large Language Models (LLMs) as zero-shot classifiers can speed up the deployment of text classification.

- LLMs excel in low-data scenarios and multi-language tasks but require prompt tuning for optimal performance.

- Custom ML models offer flexibility and accuracy in high-data regimes but require retraining and substantial labeled data.

- Retrieval Augmented Generation (RAG) and few-shot prompting aim to combine the benefits of LLMs with the precision of custom models.

- RAG incorporates external knowledge, enhancing LLM responses and reducing inaccuracies.

- The method curates a labeled knowledge base, retrieves the K nearest neighbors of each input text from it, and passes them to an LLM-based classifier as additional context.

- The combined approach offers dynamic classification with improved accuracy but may incur higher latency and lower throughput.

- Evaluated against a KNN classifier, the method improves accuracy (+9%) at the cost of speed and throughput.

- The method is valuable for agile deployments and situations with limited labeled data, offering quick setup and dynamic adjustments.
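
The retrieval step can be sketched in plain Python. This is an illustration under stated assumptions, not the article's implementation: bag-of-words cosine similarity stands in for a learned embedding model, the five-example knowledge base and the spam/ham labels are hypothetical, and the LLM call that would consume `build_augmented_prompt` is omitted. `knn_label` is the majority-vote KNN baseline the method is compared against.

```python
import math
import re
from collections import Counter

# Hypothetical labeled knowledge base; a real system would store embeddings
# from an embedding model in a vector index.
KNOWLEDGE_BASE = [
    ("win a free prize now, click here", "spam"),
    ("claim your free reward today", "spam"),
    ("meeting moved to 3pm tomorrow", "ham"),
    ("can you review my pull request", "ham"),
    ("lunch at noon with the team", "ham"),
]


def vectorize(text):
    """Bag-of-words term counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def nearest_neighbors(text, k=3):
    """Return the k most similar labeled examples from the knowledge base."""
    query = vectorize(text)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda ex: cosine(query, vectorize(ex[0])), reverse=True)
    return ranked[:k]


def knn_label(text, k=3):
    """Baseline classifier: majority vote over the neighbors' labels."""
    votes = Counter(label for _, label in nearest_neighbors(text, k))
    return votes.most_common(1)[0][0]


def build_augmented_prompt(text, k=3):
    """Few-shot prompt built from retrieved neighbors, to be sent to an LLM classifier."""
    examples = "\n".join(f"Text: {t}\nLabel: {label}" for t, label in nearest_neighbors(text, k))
    return (
        "Classify the text as one of: spam, ham.\n\n"
        f"{examples}\n\nText: {text}\nLabel:"
    )
```

Unlike the KNN vote, the LLM sees the neighbors as examples and can still override them, which is where the accuracy gain and the extra latency both come from.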

Towards Data Science

How I Built Business-Automating Workflows with AI Agents

- AI agents and automation are significantly impacting how companies operate, driving digital transformations.

- The use of AI agents for sustainability and business automation is explored in the article.

- n8n, a no-code tool, is highlighted for designing powerful automated workflows.

- Case studies and prototypes were developed to showcase how AI agents accelerate digital transformation.

- The article details how practical solutions using AI agents can add value to businesses.

- The author shares insights on creating case studies to learn and implement automation effectively.

- One case study involved automating the process of extracting order information from emails using n8n.

- The success of the automation prototype led to solving similar problems for various clients.

- Different sales approaches are discussed, focusing on productivity improvement, marketing automation, and error reduction.

- Automation solutions showcased in the article aim to save time, reduce errors, and enhance business operations.

- The article concludes by emphasizing the significant impact of automation on businesses and the importance of bridging operational challenges with automation solutions.
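
The article builds its order-extraction workflow in n8n with AI agents. As a much simpler, rule-based stand-in for that extraction step, the sketch below pulls order fields out of an email with regular expressions. The email layout, the field names, and `extract_order` are hypothetical; fixed patterns like these break as soon as formats vary, which is where an AI agent is more flexible.

```python
import re

# Hypothetical email layout used for illustration only.
ORDER_EMAIL = """Subject: New order
Order ID: A-1042
Customer: Jane Doe
Item: Ergonomic chair
Quantity: 2
"""

# One pattern per field; `.+` stops at the end of the line.
FIELDS = {
    "order_id": r"Order ID:\s*(\S+)",
    "customer": r"Customer:\s*(.+)",
    "item": r"Item:\s*(.+)",
    "quantity": r"Quantity:\s*(\d+)",
}


def extract_order(email_body):
    """Pull order fields from a plain-text email; missing fields map to None."""
    order = {}
    for name, pattern in FIELDS.items():
        match = re.search(pattern, email_body)
        order[name] = match.group(1).strip() if match else None
    if order["quantity"] is not None:
        order["quantity"] = int(order["quantity"])
    return order
```

The resulting dictionary is the kind of structured record a downstream step (a spreadsheet row, a CRM entry) would consume.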

VentureBeat

AWS report: Generative AI overtakes security in global tech budgets for 2025

- Generative AI tools have become the top budget priority for global IT leaders for 2025, surpassing cybersecurity, according to a study by Amazon Web Services.

- The AWS Generative AI Adoption Index survey of 3,739 senior IT decision makers in nine countries shows a shift towards investing in generative AI over traditional IT tools like security.

- 90% of organizations are deploying generative AI technologies, with 44% already in production deployment, indicating a critical inflection point in adoption.

- Organizations are appointing dedicated AI executives like Chief AI Officers, with around 60% already having such leadership roles.

- Talent shortages are identified as the primary barrier to transitioning AI experiments into production, with 55% citing a lack of skilled workers.

- Organizations are pursuing strategies of internal training and external recruitment to address the talent gap, with 92% planning to recruit for generative AI roles in 2025.

- A shift towards hybrid approaches in AI development is seen, with 58% planning to build custom applications on pre-existing models instead of developing solutions in-house.

- Regional variations in generative AI adoption rates are observed, with India and South Korea showing higher rates compared to the global average.

- 65% of organizations plan to rely on third-party vendors for AI projects in 2025, with a mixed approach of in-house teams and external partners being common.

- Organizations are urged to embrace generative AI or risk falling behind, as the technology continues to rapidly evolve and provide significant gains in various sectors.

Towards Data Science

The Total Derivative: Correcting the Misconception of Backpropagation’s Chain Rule

- Explanations of backpropagation often present its chain rule as the single-variable version, when the rule actually at work is the more general total derivative, which accounts for a variable influencing the loss through several paths.

- The total derivative is crucial in backpropagation due to layers' interdependence, where weights indirectly affect subsequent layers.

- The article explains how the vector chain rule solves problems in backpropagation involving multi-neuron layers and total derivatives.

- It covers the total derivative concept, notation, and forward pass in neural networks to derive gradients for weights efficiently.

- The article details the necessary matrix operations and chain rule applications for calculating gradients in hidden and output layers.

- Pre-computing gradients simplifies backpropagation by reusing already calculated values for efficient gradient computation.

- Understanding the chain rules and derivative calculations is essential for grasping the intricacies of backpropagation.

- The article concludes with insights on confusion around chain rules and the simplified approach to implementing backpropagation using matrix operations.

- A practical example, training a neural network on the Iris dataset with NumPy, demonstrates the concepts discussed in the article.

- Backpropagation's efficiency relies on proper understanding and application of the total derivative and vector chain rule in neural network training.

- The implementation in the article reinforces the importance of clear mathematics in training neural networks effectively.
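
The total-derivative idea can be checked numerically. The NumPy sketch below assumes a two-layer network (tanh hidden layer, linear output, mean squared error) on random data, which may differ from the article's Iris setup. The key line is `d_h = d_yhat @ w2.T`: each hidden unit feeds every output unit, so its gradient sums the contributions of all those paths rather than following a single chain. A central finite-difference check confirms the analytic gradients.

```python
import numpy as np


def forward(params, x):
    """Two-layer network: tanh hidden layer, linear output."""
    w1, b1, w2, b2 = params
    h = np.tanh(x @ w1 + b1)
    return h, h @ w2 + b2


def loss(params, x, y):
    """Mean squared error over all samples and outputs."""
    _, y_hat = forward(params, x)
    return np.mean((y_hat - y) ** 2)


def backprop(params, x, y):
    """Gradients of the loss via the vector chain rule (matrix form)."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_yhat = 2.0 * (y_hat - y) / y.size   # dL/dy_hat for the mean over y.size terms
    d_w2 = h.T @ d_yhat                    # dL/dW2
    d_b2 = d_yhat.sum(axis=0)
    # Total derivative: sum over every output unit each hidden unit feeds.
    d_h = d_yhat @ w2.T
    d_z1 = d_h * (1.0 - h ** 2)            # tanh'(z) = 1 - tanh(z)^2
    d_w1 = x.T @ d_z1
    d_b1 = d_z1.sum(axis=0)
    return d_w1, d_b1, d_w2, d_b2


def numerical_grad(params, x, y, index, eps=1e-6):
    """Central finite-difference gradient for params[index], used as a check."""
    grad = np.zeros_like(params[index])
    for idx in np.ndindex(grad.shape):
        plus = [p.copy() for p in params]
        minus = [p.copy() for p in params]
        plus[index][idx] += eps
        minus[index][idx] -= eps
        grad[idx] = (loss(plus, x, y) - loss(minus, x, y)) / (2 * eps)
    return grad


# Random data and weights, purely for the gradient check.
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 4))
y = rng.normal(size=(8, 3))
params = [rng.normal(size=(4, 5)), np.zeros(5), rng.normal(size=(5, 3)), np.zeros(3)]
max_error = max(
    np.abs(g - numerical_grad(params, x, y, i)).max()
    for i, g in enumerate(backprop(params, x, y))
)
```

A tiny `max_error` means the matrix expressions agree with the definition of the derivative; dropping the sum over output units (for example, using only one column of `w2`) would make the check fail.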

Towards Data Science

Make Your Data Move: Creating Animations in Python for Science and Machine Learning

- Animations can enhance the understanding of complex scientific and mathematical concepts by visually illustrating processes through a sequence of frames.

- Python and Matplotlib can be utilized to create animations for various purposes, such as explaining Machine Learning algorithms, demonstrating physics concepts, or visualizing math principles.

- The tutorial covers topics like basic animation setup, animating math examples like the Fourier series, physics examples like the Oblique Launch, and showcasing Machine Learning in action with Gradient Descent.

- For the basic setup, the tutorial animates the sine function by defining the data, creating the plot, and writing an update function that draws each successive frame.

- Animating physics examples involves defining motion parameters, computing trajectories, setting up plots, and creating animations to illustrate scenarios like the Oblique Launch.

- In animating math examples like the Fourier series, the tutorial explains creating approximations of square waves using sine functions and updating the animations with each term addition.

- For Machine Learning, the tutorial animates Gradient Descent, showing step by step how the algorithm moves toward the minimum of a parabola.

- Animations can be exported to files such as GIFs for the web and presentations with Matplotlib's Animation.save method, making them easy to share.

- The article emphasizes the potential of animations in enhancing educational materials, technical presentations, and research reports, suggesting readers experiment with the examples provided to create impactful visuals.

- References to additional resources for utilizing Matplotlib for animations are provided for further exploration and learning.

- The tutorial encourages readers to engage with the examples presented to create their animations and simulations tailored to their respective fields, allowing for more engaging and interactive data presentations.
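
As a sketch of the Gradient Descent animation, assuming f(x) = x² with learning rate 0.1 and a start at x = 4 (choices not taken from the tutorial), the code below uses Matplotlib's FuncAnimation with one frame per iterate and writes a GIF with PillowWriter. The function names are hypothetical.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen so the script also runs without a display
import matplotlib.pyplot as plt
import numpy as np
from matplotlib.animation import FuncAnimation, PillowWriter


def gradient_descent_path(x0=4.0, lr=0.1, steps=30):
    """Iterates of gradient descent on f(x) = x**2, whose gradient is 2x."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] - lr * 2 * xs[-1])
    return np.array(xs)


def build_animation(path):
    """One frame per iterate: the parabola plus the current point."""
    fig, ax = plt.subplots()
    grid = np.linspace(-5, 5, 200)
    ax.plot(grid, grid ** 2, color="gray")
    (point,) = ax.plot([], [], "ro")
    ax.set_xlabel("x")
    ax.set_ylabel("f(x) = x²")

    def update(frame):
        point.set_data([path[frame]], [path[frame] ** 2])
        ax.set_title(f"Step {frame}: x = {path[frame]:.3f}")
        return (point,)

    return fig, FuncAnimation(fig, update, frames=len(path), interval=200)


def save_gif(filename, fps=5):
    """Render the animation to a GIF file using the Pillow writer."""
    fig, anim = build_animation(gradient_descent_path())
    anim.save(filename, writer=PillowWriter(fps=fps))
    plt.close(fig)
```

Calling `save_gif("gradient_descent.gif")` writes the animation to disk. With these settings each step multiplies x by 0.8, so the red point slides steadily toward the minimum at 0.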

VentureBeat

Meet the new king of AI coding: Google’s Gemini 2.5 Pro I/O Edition dethrones Claude 3.7 Sonnet

- Google has released Gemini 2.5 Pro "I/O" edition, an advanced AI coding model surpassing its predecessors.

- The new model is available for indie developers on Google AI Studio and enterprises on Vertex AI.

- Features include matching visual styles, creating learning apps, and designing components with minimal CSS editing.

- Gemini 2.5 Pro is priced at $1.25 per million input tokens and $10 per million output tokens.

- It has overtaken Claude 3.7 Sonnet on the WebDev Arena leaderboard, which ranks models by human preference for the web apps they generate.

- Gemini's reliability and performance boost have impressed developers and platform leaders.

- The model has received positive feedback for refactoring systems and reducing tool call failures.

- Gemini 2.5 Pro has been praised for its code and UI generation capabilities by industry experts.

- Users have created interactive simulations and games swiftly using Gemini 2.5 Pro.

- Google DeepMind has focused on making coding more intuitive and efficient with Gemini 2.5 Pro.

- The model aims to streamline the development process and cater to real-world coding challenges effectively.
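
To see what the quoted rates mean per request, the helper below applies them to token counts. It assumes the flat $1.25/$10 per-million-token rates given in this summary; Google's actual price list may use different tiers (for example by prompt length), so treat the result as an estimate.

```python
# Per-million-token rates as quoted in the summary above (USD).
INPUT_RATE_PER_M = 1.25
OUTPUT_RATE_PER_M = 10.00


def request_cost(input_tokens, output_tokens):
    """Estimated cost in USD of one request at the quoted input/output rates."""
    return (input_tokens * INPUT_RATE_PER_M + output_tokens * OUTPUT_RATE_PER_M) / 1_000_000
```

For example, a request with 200,000 input tokens and 20,000 output tokens costs about $0.25 + $0.20 = $0.45; output tokens are eight times as expensive, so long generated code dominates the bill.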

VentureBeat

Lightricks just made AI video generation 30x faster — and you won’t need a $10,000 GPU

- Lightricks has introduced the LTX Video 13-billion-parameter model, which allows for fast AI video generation on consumer-grade hardware.

- The LTXV-13B model utilizes multiscale rendering to generate high-quality videos in progressive layers of detail, providing efficiency and saving costs for users.

- Unlike other leading models that require enterprise GPUs, LTXV-13B can run on consumer-grade GPUs like Nvidia's gaming hardware.

- The model's efficiency is attributed to its multiscale rendering approach, which generates details gradually, optimizing VRAM usage.

- LTXV-13B features a compressed latent space that reduces memory requirements while maintaining video quality.

- Lightricks has made LTXV-13B fully open source, available on platforms like Hugging Face and GitHub, to encourage research and development in the AI community.

- Partnerships with Getty Images and Shutterstock provide Lightricks access to licensed content for model training, reducing legal risks for commercial applications.

- Lightricks offers LTXV-13B free to license for enterprises with under $10 million in annual revenue, aiming to build a community and demonstrate the model's value.

- The company plans to negotiate licensing agreements with larger companies that find success with the model, following a similar approach to game engines.

- Although LTXV-13B advances AI video generation, Lightricks acknowledges it does not yet match Hollywood filmmaking and positions it for practical uses in animation and production.

Hackernoon

Optimizing Language Models: Decoding Griffin’s Local Attention and Memory Efficiency

- The article examines Griffin's local attention and memory efficiency, covering several aspects of model architecture and inference efficiency.

- Griffin incorporates recurrent blocks and local attention layers in its temporal mixing blocks, showing superior performance over global attention MQA Transformers across different sequence lengths.

- Even with a fixed local attention window size of 1024, Griffin outperforms global attention MQA Transformers, but the performance gap narrows with increasing sequence length.

- Models trained with sequence lengths of 2048, 4096, and 8192 tokens are used to study how the local attention window size affects performance.

- The article also analyzes inference speed, estimating how memory-bound components such as linear layers and self-attention are in recurrent and Transformer models.

- Analysis of cache sizes in recurrent and Transformer models emphasizes the transition from a 'parameter bound' to a 'cache bound' regime with larger sequence lengths.

- Further results on next token prediction with longer contexts and details of tasks like Selective Copying and Induction Heads are also presented in the article.

- The article provides valuable insights into optimizing language models for efficiency and performance, contributing to advancements in the field of natural language processing.
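
The 'parameter bound' versus 'cache bound' point comes down to arithmetic on the key/value cache. The sketch below computes the cache size for a stack of global MQA attention layers and for local attention with a 1024-token window; the layer count, head dimension, and bf16 (2-byte) values are hypothetical, and Griffin's recurrent layers, whose state does not grow with sequence length, are not modelled.

```python
def kv_cache_bytes(seq_len, n_layers, head_dim, n_kv_heads=1, batch=1,
                   bytes_per_value=2, window=None):
    """Size in bytes of the key/value cache for a stack of attention layers.

    MQA shares one key/value head across all query heads (n_kv_heads=1).
    With local (sliding-window) attention only the last `window` tokens are
    cached, so the cache stops growing once seq_len exceeds the window.
    """
    cached_tokens = seq_len if window is None else min(seq_len, window)
    # Factor 2: one key vector and one value vector per cached token.
    return 2 * n_layers * n_kv_heads * head_dim * cached_tokens * batch * bytes_per_value


# Hypothetical configuration: 26 attention layers, head_dim 256, bf16 values.
for seq_len in (2048, 4096, 8192):
    global_mib = kv_cache_bytes(seq_len, n_layers=26, head_dim=256) / 2**20
    local_mib = kv_cache_bytes(seq_len, n_layers=26, head_dim=256, window=1024) / 2**20
    print(f"{seq_len:>5} tokens: global {global_mib:6.1f} MiB, local {local_mib:6.1f} MiB")
```

With these numbers the global cache grows linearly (52, 104, and 208 MiB at 2048, 4096, and 8192 tokens), while the local-attention cache stays at 26 MiB once the sequence exceeds the window. Multiplying by the batch size shows how, at long contexts, reading the cache rather than the parameters can come to dominate decoding time.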

Analyticsindiamag

Microsoft’s New Surface Laptop Runs Faster Than MacBook Air M3

- Microsoft has introduced two new devices - a 13-inch Surface Laptop and a 12-inch Surface Pro, powered by Snapdragon X Plus processors with integrated 45 TOPS NPUs, set to launch on May 20.

- The Surface Laptop is priced at $899 and the Surface Pro at $799, focusing on delivering faster Windows PCs with integrated AI capabilities.

- Both devices offer AI features such as Recall and Click to Do. Microsoft claims the Surface Laptop is 50% faster than the Surface Laptop 5 and outperforms Apple's MacBook Air M3.

- The devices support AI applications, will be available for enterprise customers from July 22, and include sustainability improvements like recycled materials in their design.

Analyticsindiamag

Google’s Gemini 2.5 Pro Now Best at Building Web Apps

- Google's Gemini 2.5 Pro Preview model has received an update, making it the top performer in the WebDev Arena Leaderboard with a score of 1419.95 points.

- The new Gemini 2.5 Pro version features enhanced coding capabilities and reduced failure rates, lauded by Cursor's CEO Michael Truell.

- Available through the Gemini API, the model can be accessed via Google AI Studio and Vertex AI for developers. It also offers web app development capabilities through the Gemini app's Canvas feature.

- Despite these rankings, Gemini 2.5 Pro faces competition from OpenAI's o3 model, which outperformed it on the Aider Polyglot leaderboard with a score of 79.6%.

Analyticsindiamag

This YC-Backed Startup Just Made Finding Blue Collar Jobs in India ‘300% Easier’

- Vahan AI, a YC-backed AI recruitment startup, is using OpenAI’s technology to improve how blue-collar workers are hired across India.

- The platform matches job seekers with relevant roles and helps employers find suitable candidates.

- Vahan AI has placed at least five lakh (500,000) workers in more than 480 cities and works with companies like Zomato, Swiggy, Flipkart, and Uber.

- The AI recruiter acts as a digital assistant, increasing human recruiter productivity by 300%, bridging the gap between employers and blue-collar workers in India.
