Deep Learning News

Medium

97

Image Credit: Medium

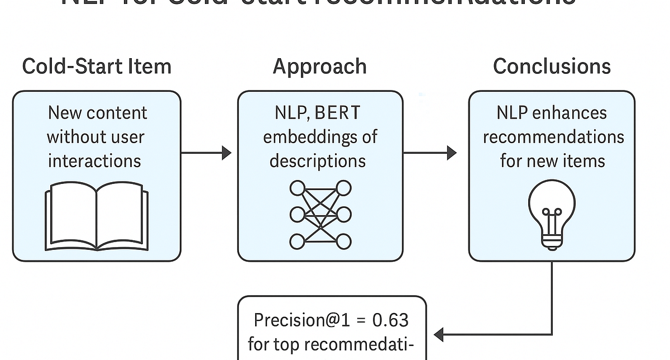

From Silence to Insight: How NLP Powers Recommendations for Cold-Start Items

- Traditional collaborative filtering struggles with cold-start issues when no behavioral data exists for new items.

- Using NLP, movie descriptions in a Netflix-like dataset are embedded with Sentence-BERT, and items are recommended by semantic similarity.

- The NLP-powered model achieved a Precision@1 of 0.63, with top recommendations often matching the genre of the target item.

- NLP-based content understanding offers a viable cold-start solution for top-1 or top-2 recommendations, showing potential for enhancement in hybrid systems.
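The similarity-based approach can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the article's code. Hand-made three-dimensional vectors stand in for Sentence-BERT embeddings, and the item names are hypothetical. A top-1 recommendation counts as a hit when it shares the target's genre, which is one plausible reading of the Precision@1 metric above.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def recommend(target, items, k=1):
    """Return the k item ids most similar to `target`, excluding the target itself."""
    scores = [
        (cosine(items[target]["emb"], data["emb"]), item_id)
        for item_id, data in items.items()
        if item_id != target
    ]
    scores.sort(reverse=True)
    return [item_id for _, item_id in scores[:k]]

def precision_at_1(items):
    """Fraction of items whose top-1 neighbour shares their genre."""
    hits = 0
    for item_id, data in items.items():
        top = recommend(item_id, items, k=1)[0]
        hits += items[top]["genre"] == data["genre"]
    return hits / len(items)

# Hypothetical toy embeddings standing in for Sentence-BERT vectors of descriptions.
items = {
    "space_opera": {"emb": [0.9, 0.1, 0.0], "genre": "sci-fi"},
    "robot_uprising": {"emb": [0.8, 0.2, 0.1], "genre": "sci-fi"},
    "haunted_manor": {"emb": [0.1, 0.9, 0.2], "genre": "horror"},
    "cursed_doll": {"emb": [0.2, 0.8, 0.1], "genre": "horror"},
    "wedding_mixup": {"emb": [0.1, 0.3, 0.9], "genre": "comedy"},
}

print(recommend("space_opera", items, k=2))  # ['robot_uprising', 'cursed_doll']
print(precision_at_1(items))                 # 0.8
```

In this toy set, the single comedy item has no same-genre neighbour. It is therefore the only miss, and Precision@1 comes out at 0.8. With real data, the vectors would come from a sentence-embedding model, and similarity would be computed over the whole catalogue.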

Hackernoon

Transformer-Based Restoration: Quantitative Gains and Boundaries in Space Data

- The article discusses transformer-based restoration of space telescope data, enhancing HST images toward JWST quality with an efficient Transformer model trained via transfer learning.

- Training data included ground-truth (GT) galaxy images rendered from analytic profiles and degraded into low-quality (LQ) versions, along with deep JWST images used for finetuning.

- Results show that measurements on restored images correlate much more closely with those on GT images, with reduced scatter in photometry and morphology parameters.

- Limitations include degraded performance at high noise levels, noise misinterpreted as real features, and suboptimal restoration of point sources.

- The model's potential for scientific applications like precision photometry and morphological analysis is highlighted, despite the identified limitations.

- Acknowledgments include support from IITP and NRF of Korea, and use of NASA's JWST data, with software acknowledgments for various tools used in the study.

- An appendix details tests ensuring the model does not generate false object images from noise, showcasing the effectiveness of the restoration model.

- References include a range of studies in astronomy, imaging, and neural information processing systems, underpinning the research in the article.

- The article is available on arXiv under a CC BY 4.0 Deed license, emphasizing open availability and sharing of the research.
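To make "improved correlation" and "reduced scatter" concrete, the sketch below compares a quantity measured on GT images with the same quantity measured on degraded and on restored images. It reports the Pearson correlation and the standard deviation of the differences. The magnitudes are synthetic, the helper name is hypothetical, and the article's exact scatter statistic may differ.

```python
import numpy as np

def correlation_and_scatter(truth, measured):
    """Pearson r between truth and measured, and the std of (measured - truth)."""
    truth = np.asarray(truth, dtype=float)
    measured = np.asarray(measured, dtype=float)
    r = np.corrcoef(truth, measured)[0, 1]
    scatter = np.std(measured - truth)
    return r, scatter

rng = np.random.default_rng(0)
true_mag = rng.uniform(22.0, 28.0, size=500)                # synthetic GT magnitudes
lq_mag = true_mag + rng.normal(0.0, 0.30, size=500)         # noisier "LQ" measurements
restored_mag = true_mag + rng.normal(0.0, 0.10, size=500)   # tighter "restored" measurements

for name, meas in [("LQ", lq_mag), ("restored", restored_mag)]:
    r, s = correlation_and_scatter(true_mag, meas)
    print(f"{name:>8}: r = {r:.3f}, scatter = {s:.3f} mag")
```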

Medium

From Cells to Circuits: How Biology is Shaping the Future of AI

- Artificial intelligence (AI) draws inspiration from biological systems like the brain and cellular processes, aiming to replicate their adaptability and precision.

- Nature's solutions to complex problems through learning, adaptation, and evolution serve as the blueprint for resilient and adaptive AI models.

- AI technologies such as neural networks and genetic algorithms are influenced by biological intelligence, offering real-world applications in fields like sequence classification and robotics.

- Communication between components of AI systems has been modeled on cell-to-cell signaling, informing the design of communication protocols.

- Real-world applications like LinOSS and self-healing swarm robotic systems highlight the efficacy of AI models inspired by biological dynamics.

- AI development also mimics the decentralized intelligence of biological systems, such as octopus arms that make independent decisions, aiming for more collective decision-making.

- Exploring biological adaptability, AI innovation seeks to emulate the survival capabilities of organisms like tardigrades in extreme environments.

- By understanding and learning from nature, AI can evolve to be more resilient, adaptive, and lifelike, moving beyond mere imitation to true integration with biological principles.

- Nature's neuroplasticity, problem-solving tactics of ants, and survival mechanisms of tardigrades offer valuable insights for developing AI that goes beyond programmed instructions.

- The future of AI lies in embracing nature's wisdom, evolving alongside biological systems, and harnessing their innate ability to adapt, heal, and evolve over time.

Medium

The Ultimate Guide to Reinforcement Learning in Efficient Machine Learning

- Reinforcement learning (RL) is revolutionizing efficient machine learning through cutting-edge algorithms and real-world applications, transforming AI by 2025.

- RL enables machines to learn by trial and error, similar to how humans learn from experience, without direct instructions. The RL agent interacts with its environment, receives rewards or penalties, and learns to maximize success.

- RL is crucial in efficient machine learning as it operates without requiring vast amounts of labeled data. It excels in dynamic environments where decisions are made incrementally, making it ideal for real-world scenarios with unpredictability and noisy data.
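The trial-and-error loop described above can be sketched with tabular Q-learning, one classic RL algorithm. The summary does not say which algorithms the article covers. The corridor environment, the reward of 1 at the right end, and the hyperparameters are hypothetical choices made for illustration.

```python
import random

N_STATES = 5          # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = [-1, +1]    # move left or move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1

def step(state, action):
    """Environment: move, stay inside the corridor, reward 1 only at the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward, nxt == N_STATES - 1

def greedy(q, state):
    """Best-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return random.choice([a for a in ACTIONS if q[(state, a)] == best])

def train(episodes=200, seed=0):
    random.seed(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit what was learned, sometimes explore.
            action = random.choice(ACTIONS) if random.random() < EPSILON else greedy(q, state)
            nxt, reward, done = step(state, action)
            # Move Q toward reward plus the discounted value of the best next action.
            target = reward if done else reward + GAMMA * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (target - q[(state, action)])
            state = nxt
    return q

q = train()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: always move right, toward the reward
```

No labeled examples are involved. The agent learns only from the rewards its own actions produce.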

Medium

Discover How to Earn While Teaching Online

- The eLearning market is expanding rapidly, offering numerous opportunities for individuals to earn income by sharing their expertise through educational content.

- Platforms like Amazon's KDP and AI-powered content creation tools make it easy for creators to reach a wide audience and generate passive income through study guides and educational materials.

- Creators can cover a variety of subjects, catering to the diverse needs of learners seeking study resources for competitive exams, certifications, and lifestyle coaching.

- By engaging with potential customers through social media, building a brand, and utilizing various platforms, individuals can tap into the eLearning market and potentially earn a significant income by offering educational products.

Towards Data Science

The Total Derivative: Correcting the Misconception of Backpropagation’s Chain Rule

- Explanations of backpropagation often present the chain rule in its single-variable form instead of the more general total derivative, which accounts for a variable influencing the output through several paths.

- The total derivative is crucial in backpropagation because layers are interdependent: a weight in one layer affects the loss indirectly through every subsequent layer.

- The article explains how the vector chain rule solves problems in backpropagation involving multi-neuron layers and total derivatives.

- It covers the total derivative concept, notation, and forward pass in neural networks to derive gradients for weights efficiently.

- The article details the necessary matrix operations and chain rule applications for calculating gradients in hidden and output layers.

- Pre-computing gradients simplifies backpropagation by reusing already calculated values for efficient gradient computation.

- Understanding the chain rules and derivative calculations is essential for grasping the intricacies of backpropagation.

- The article concludes with insights on confusion around chain rules and the simplified approach to implementing backpropagation using matrix operations.

- Practical examples, such as training a neural network on the Iris dataset using NumPy, demonstrate the concepts discussed in the article.

- Backpropagation's efficiency relies on proper understanding and application of the total derivative and vector chain rule in neural network training.

- The implementation in the article reinforces the importance of clear mathematics in training neural networks effectively.
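The total derivative says that when a weight influences the loss through several intermediate values u₁, …, uₖ, the contributions add: dL/dw = Σᵢ (∂L/∂uᵢ)(∂uᵢ/∂w). In matrix form, that sum becomes a matrix product. Below is a minimal NumPy sketch under these assumptions:

- A sigmoid hidden layer and a softmax output with cross-entropy loss are used; the article's exact choices may differ.
- Random data stands in for Iris, so nothing needs downloading.

A finite-difference check confirms the analytic gradient.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data standing in for Iris: 8 samples, 4 features, 3 classes.
X = rng.normal(size=(8, 4))
y = rng.integers(0, 3, size=8)
Y = np.eye(3)[y]                                   # one-hot targets, shape (8, 3)

W1 = rng.normal(scale=0.5, size=(4, 5))
b1 = np.zeros(5)
W2 = rng.normal(scale=0.5, size=(5, 3))
b2 = np.zeros(3)

def forward(W1, b1, W2, b2):
    """Sigmoid hidden layer, softmax output, mean cross-entropy loss."""
    h = 1.0 / (1.0 + np.exp(-(X @ W1 + b1)))
    z = h @ W2 + b2
    p = np.exp(z - z.max(axis=1, keepdims=True))
    p /= p.sum(axis=1, keepdims=True)
    loss = -np.mean(np.sum(Y * np.log(p), axis=1))
    return h, p, loss

def backward(W1, b1, W2, b2):
    """Analytic gradients computed with matrix operations."""
    h, p, _ = forward(W1, b1, W2, b2)
    dz = (p - Y) / X.shape[0]          # dL/dz for softmax + cross-entropy
    dW2 = h.T @ dz
    db2 = dz.sum(axis=0)
    # Total derivative: each hidden unit feeds every output unit, so dL/dh
    # sums contributions over all outputs; dz @ W2.T performs that sum.
    dh = dz @ W2.T
    da = dh * h * (1.0 - h)            # sigmoid derivative, reusing the stored h
    dW1 = X.T @ da
    db1 = da.sum(axis=0)
    return dW1, db1, dW2, db2

def numerical_grad_W1(eps=1e-6):
    """Central finite differences on W1, to check the analytic gradient."""
    g = np.zeros_like(W1)
    for idx in np.ndindex(*W1.shape):
        W_plus, W_minus = W1.copy(), W1.copy()
        W_plus[idx] += eps
        W_minus[idx] -= eps
        g[idx] = (forward(W_plus, b1, W2, b2)[2] - forward(W_minus, b1, W2, b2)[2]) / (2 * eps)
    return g

dW1, db1, dW2, db2 = backward(W1, b1, W2, b2)
print("max |analytic - numerical|:", np.max(np.abs(dW1 - numerical_grad_W1())))
```

The backward pass reuses `h` and `dz` from earlier steps instead of recomputing them. This is the reuse of already-calculated values that makes backpropagation efficient.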

Medium

Image Generation via Diffusion

- A project explores a simplified approach to image generation inspired by the denoising process in diffusion models.

- The project uses the CIFAR-10 dataset of labeled images, spanning 10 classes, to train a U-Net architecture for image generation tasks.

- The U-Net model is trained to reconstruct clean images from noisy inputs using mean squared error loss and the Adam optimizer.

- The project methodology includes stages such as corruption, reconstruction, and image generation, showcasing promising results despite computational constraints.
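The corruption, reconstruction, and generation stages can be outlined as follows. This is a sketch under assumptions, not the project's code:

- Corruption blends an image with Gaussian noise at a level t in [0, 1].
- The loss is the mean squared error mentioned above.
- Generation starts from pure noise and alternates denoising with re-corruption at a decreasing noise level.

The `denoiser` is a hypothetical stand-in for the trained U-Net (it just pulls pixels toward a fixed mean image), so the loop runs without a deep-learning framework.

```python
import numpy as np

rng = np.random.default_rng(0)

def corrupt(x, t, rng):
    """Blend clean image x with Gaussian noise; t=0 is clean, t=1 is pure noise."""
    noise = rng.normal(size=x.shape)
    return (1.0 - t) * x + t * noise

def mse(pred, target):
    """Mean squared error, the reconstruction loss used for training."""
    return float(np.mean((pred - target) ** 2))

MEAN_IMAGE = np.full((32, 32, 3), 0.5)   # hypothetical "average" 32x32 RGB image

def denoiser(x_noisy):
    """Hypothetical stand-in for a trained U-Net: shrink pixels toward MEAN_IMAGE."""
    return 0.5 * x_noisy + 0.5 * MEAN_IMAGE

def generate(steps=10, shape=(32, 32, 3), rng=rng):
    """Start from pure noise, then repeatedly denoise and re-add less noise."""
    x = rng.normal(size=shape)
    for i in range(steps, 0, -1):
        x0_hat = denoiser(x)              # predict a clean image
        t_next = (i - 1) / steps          # lower noise level for the next step
        x = corrupt(x0_hat, t_next, rng)  # re-corrupt to that level (t=0 on the last step)
    return x

clean = rng.uniform(0.0, 1.0, size=(32, 32, 3))
noisy = corrupt(clean, 0.5, rng)
print("MSE noisy vs clean:", mse(noisy, clean))
sample = generate()
print(sample.shape)
```

In training, the U-Net would receive `noisy` and be optimized (for example with Adam) to minimize `mse(model(noisy), clean)`.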

Medium

Python for AI Engineers: Functional Programming | 4 of 5

- Lambda functions in Python are anonymous functions defined with the lambda keyword, commonly used for quick transformations, filtering, or mapping of data in AI workflows.

- They are useful for preprocessing features, applying activation functions, formatting outputs, and applying functions to each element in an iterable.

- Common use cases of lambda functions in AI engineering include basic transformations, handling multiple iterables, filtering data, and cumulative operations like summing or multiplying elements.

- However, the article advises avoiding reduce() in certain cases, in keeping with efficient coding practices.
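The use cases listed above map onto Python's built-ins roughly as in this sketch. The feature values, weights, and probabilities are made up. The `sum()` comparison reflects one common reason to prefer a built-in over `reduce()`, which may or may not match the article's reasoning.

```python
from functools import reduce

features = [2.0, -1.5, 0.0, 3.5]

# Basic transformation: min-max scale each feature to [0, 1].
lo, hi = min(features), max(features)
scaled = list(map(lambda x: (x - lo) / (hi - lo), features))

# Activation function applied element-wise (ReLU).
relu = list(map(lambda x: max(0.0, x), features))

# Multiple iterables: map passes one element from each list per call.
weights = [0.5, 0.2, 0.1, 0.4]
weighted = list(map(lambda x, w: x * w, features, weights))

# Filtering: keep only positive values.
positive = list(filter(lambda x: x > 0, features))

# Cumulative operations with reduce...
total = reduce(lambda acc, x: acc + x, features)
product = reduce(lambda acc, x: acc * x, [1, 2, 3, 4])

# ...though a built-in is often clearer for simple cases such as summing.
assert total == sum(features)

# Formatting outputs, e.g. class probabilities as percentages.
probs = [0.91, 0.07, 0.02]
labels = list(map(lambda p: f"{p:.0%}", probs))

print(positive, total, product, labels)  # [2.0, 3.5] 4.0 24 ['91%', '7%', '2%']
```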

Hackernoon

Space Selfies Level Up-AI Learns from the Best in the Universe

- The article discusses the construction of a Transformer model that enhances HST images to JWST quality.

- The training datasets consist of JWST-quality ground-truth (GT) images and their degraded, low-quality (LQ) counterparts.

- Pre-training uses simplified galaxy images, while finetuning uses realistic galaxy images.

- Several training datasets are prepared to achieve this enhancement in image quality.

- GT images are generated with GalSim, and LQ images are produced by applying HST point spread functions and adding noise.

- The HST dataset includes multi-band galaxy images from HST/ACS deep fields such as HUDF12, HUDFP2, GOODS-N, and GOODS-S.

- Sources are detected with SExtractor, and specific source selection and removal criteria are applied.

- To create the pre-training dataset with GalSim, HST galaxies are characterized by their elliptical Sersic parameters and fluxes.

- Elliptical Sersic profile modeling details are provided for characterizing HST galaxies for the model's pre-training.

- The article is available on arXiv under CC BY 4.0 Deed license for further reference.
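As a rough illustration of how a GT/LQ pair can be built, the NumPy sketch below proceeds in three steps:

1. Render an elliptical Sersic profile, I(r) = I_e exp(-b_n[(r/r_e)^(1/n) - 1]).
2. Blur it with a PSF.
3. Add noise.

It deliberately does not use GalSim, whose API is not reproduced here. The Gaussian PSF is a stand-in for the real HST PSFs, and the image size, Sersic parameters, and noise level are hypothetical.

```python
import numpy as np

def sersic_image(n_pix, i_e, r_e, n, q, theta):
    """Elliptical Sersic profile on an n_pix x n_pix grid (pixel units).

    I(r) = i_e * exp(-b_n * ((r / r_e) ** (1 / n) - 1)), where r is the
    elliptical radius for axis ratio q and position angle theta (radians).
    """
    b_n = 2.0 * n - 1.0 / 3.0 + 4.0 / (405.0 * n)   # standard asymptotic approximation
    c = (n_pix - 1) / 2.0
    y, x = np.mgrid[0:n_pix, 0:n_pix] - c
    # Rotate into the galaxy's frame, then stretch the minor axis by 1/q.
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    r = np.sqrt(x_rot**2 + (y_rot / q) ** 2)
    return i_e * np.exp(-b_n * ((r / r_e) ** (1.0 / n) - 1.0))

def gaussian_psf(n_pix, sigma):
    """Normalized, centred Gaussian PSF (a stand-in for a real HST PSF)."""
    c = (n_pix - 1) / 2.0
    y, x = np.mgrid[0:n_pix, 0:n_pix] - c
    psf = np.exp(-(x**2 + y**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def blur(image, psf):
    """Circular convolution via FFT; ifftshift moves the PSF centre to pixel (0, 0)."""
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(np.fft.ifftshift(psf))))

def degrade(gt, psf, noise_sigma, rng):
    """GT -> LQ: blur with the PSF, then add Gaussian pixel noise."""
    return blur(gt, psf) + rng.normal(0.0, noise_sigma, size=gt.shape)

rng = np.random.default_rng(1)
gt = sersic_image(65, i_e=1.0, r_e=6.0, n=1.5, q=0.6, theta=0.3)
psf = gaussian_psf(65, sigma=2.0)
lq = degrade(gt, psf, noise_sigma=0.05, rng=rng)
print(gt.shape, lq.shape)
```

Because the PSF is normalized, blurring preserves total flux. Only the added noise changes the photometry.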

Hackernoon

Efficient Transformers for Astronomical Images: Deconvolution and Denoising Unleashed

- The article focuses on the implementation of efficient Transformers for astronomical images, specifically for deconvolution and denoising processes.

- The study aims to enhance the quality of images from the HST to JWST levels using the Restormer implementation of the Transformer architecture.

- An overview of the encoder-decoder architecture and the application of Transformers for image restoration is presented in the study.

- The encoder-decoder architecture enables neural networks to map input data to structured output data and has been widely used in various applications.

- Transformers utilize self-attention mechanisms to highlight relevant information in the input data and have been successful in handling sequential data efficiently.

- Zamir et al. (2022) introduced the Multi-Dconv Head Transposed Attention (MDTA) block to reduce the computational complexity of Transformer models, making them suitable for large images such as astronomical data.

- MDTA computes attention across the channels of the feature map rather than across pixels, enabling the global context learning that is crucial for image restoration.

- The Gated-Dconv Feed-Forward Network (GDFN) block within Restormer controls information flow through a gating mechanism, improving restoration quality.

- The study also discusses the implementation details, including a transfer learning approach and training iterations for dataset adaptation.

- The paper aims to improve image restoration techniques for astronomical images and is available under the CC BY 4.0 Deed license on arXiv.

- For more technical details and access to the inference model, readers are directed to the provided GitHub link.
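The key idea of MDTA is that attention runs across channels: each head builds a channel-by-channel attention matrix. Its cost therefore grows linearly with the number of pixels instead of quadratically. The NumPy sketch below shows that idea together with a GDFN-style gate. It is a simplified reading, not Restormer's code:

- The depthwise 3x3 convolutions on Q, K, V and inside the feed-forward branch are omitted.
- 1x1 convolutions are written as matrix products.
- The learnable temperature is fixed, and LayerNorms are left out.
- Weights are random.

```python
import numpy as np

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def mdta(x, w_qkv, w_out, heads, temperature=1.0):
    """Transposed (channel-wise) attention on a feature map x of shape (H, W, C)."""
    h, w, c = x.shape
    tokens = x.reshape(h * w, c)                      # (HW, C)
    q, k, v = np.split(tokens @ w_qkv, 3, axis=1)     # each (HW, C)
    d = c // heads
    out = np.empty_like(q)
    for i in range(heads):
        cols = slice(i * d, (i + 1) * d)
        qh, kh, vh = q[:, cols].T, k[:, cols].T, v[:, cols].T   # each (d, HW)
        qh = qh / np.linalg.norm(qh, axis=1, keepdims=True)      # L2-normalize over pixels
        kh = kh / np.linalg.norm(kh, axis=1, keepdims=True)
        attn = softmax(temperature * qh @ kh.T)        # (d, d): channels attend to channels
        out[:, cols] = (attn @ vh).T                   # back to (HW, d)
    return (out @ w_out).reshape(h, w, c)

def gelu(x):
    """tanh approximation of GELU."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def gdfn(x, w_in, w_out):
    """Gated feed-forward: expand into two branches, gate one with GELU of the other."""
    h, w, c = x.shape
    z1, z2 = np.split(x.reshape(h * w, c) @ w_in, 2, axis=1)
    return ((gelu(z1) * z2) @ w_out).reshape(h, w, c)

rng = np.random.default_rng(0)
C, HIDDEN, HEADS = 8, 16, 2
x = rng.normal(size=(64, 64, C))
# Residual connections as in a Transformer block.
y = x + mdta(x, rng.normal(scale=0.1, size=(C, 3 * C)), rng.normal(scale=0.1, size=(C, C)), HEADS)
y = y + gdfn(y, rng.normal(scale=0.1, size=(C, 2 * HIDDEN)), rng.normal(scale=0.1, size=(HIDDEN, C)))
print(y.shape)
```

For a 64x64 map, spatial self-attention would need a 4096 x 4096 matrix. Here each head needs only a 4 x 4 one.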

Hackernoon

AI Breakthrough Sharpens Telescope Images-Astronomy’s Next Big Leap

- A new deep learning model utilizing the Transformer architecture has been proposed for deconvolution and denoising to enhance astronomical images, showing exceptional restoration in various aspects.

- The model enhances photometric, structural, and morphological information, reducing scatter of isophotal photometry, Sersic index, and half-light radius significantly.

- Challenges observed include degradation in performance with correlated noise, point-like sources, and artifacts in the input images.

- The deep learning model is anticipated to have significant scientific applications such as precision photometry, morphological analysis, and shear calibration in astronomy.

- The improvement in image quality is crucial for gaining deeper insights into astronomical objects, paralleling the impact of instruments like the James Webb Space Telescope (JWST).

- Initial methods for image enhancement in astronomy included Fourier deconvolution techniques, with limitations like noise amplification and band-limited results.

- The advent of deep learning, especially convolutional neural networks (CNNs), has significantly advanced image restoration in astronomy and enabled precise enhancement of images.

- Deep learning models offer more flexibility and adaptability compared to traditional methods, allowing for intricate pattern learning and tailored enhancement of astronomical images.

- Integration of CNNs with other architectures like GANs and RNNs has further extended image enhancement capabilities in astronomy, surpassing traditional methods.

- The novel application of Zamir et al.'s efficient transformer architecture to astronomical image enhancement shows promise in achieving JWST-quality images from HST-quality ones.
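The noise amplification that limited early Fourier deconvolution is easy to reproduce in one dimension. The sketch blurs a toy signal with a Gaussian PSF, adds noise, and compares two approaches. The first is plain division in Fourier space. The second is a Wiener filter, a classical regularized alternative that the summary does not mention. The signal, PSF width, noise level, and noise-to-signal constant are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 256
truth = np.zeros(n)
truth[60] = 1.0          # a point-like source
truth[150:170] = 0.3     # an extended source

# Gaussian PSF centred on index 0 (wrapping around) so FFT convolution is unshifted.
dist = np.minimum(np.arange(n), n - np.arange(n))
psf = np.exp(-0.5 * (dist / 1.5) ** 2)
psf /= psf.sum()
H = np.fft.fft(psf)

observed = np.real(np.fft.ifft(np.fft.fft(truth) * H)) + rng.normal(0.0, 0.01, n)
Y = np.fft.fft(observed)

# Plain Fourier deconvolution: divide by the transfer function.
naive = np.real(np.fft.ifft(Y / H))
# Wiener deconvolution: damp frequencies where |H|^2 is small relative to the assumed ratio.
nsr = 1e-3
wiener = np.real(np.fft.ifft(Y * np.conj(H) / (np.abs(H) ** 2 + nsr)))

def rms_error(estimate):
    return float(np.sqrt(np.mean((estimate - truth) ** 2)))

print("naive  RMS error:", rms_error(naive))
print("Wiener RMS error:", rms_error(wiener))
```

The naive estimate is swamped by high-frequency noise, because H is tiny near the Nyquist frequency. The Wiener result stays close to the truth but is band-limited, so the point source remains smoothed.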

Hackernoon

Optimizing Language Models: Decoding Griffin’s Local Attention and Memory Efficiency

- The article discusses the optimization of language models by decoding Griffin's local attention and memory efficiency, focusing on various aspects of model architecture and efficiency.

- Griffin incorporates recurrent blocks and local attention layers in its temporal mixing blocks, showing superior performance over global attention MQA Transformers across different sequence lengths.

- Even with a fixed local attention window size of 1024, Griffin outperforms global attention MQA Transformers, but the performance gap narrows with increasing sequence length.

- Models trained on sequence lengths of 2048, 4096, and 8192 tokens reveal insights into the impact of local attention window sizes on model performance.

- The article also delves into inference speeds, estimating memory-boundedness for components like linear layers and self-attention in recurrent and Transformer models.

- Analysis of cache sizes in recurrent and Transformer models emphasizes the transition from a 'parameter bound' to a 'cache bound' regime with larger sequence lengths.

- Further results on next token prediction with longer contexts and details of tasks like Selective Copying and Induction Heads are also presented in the article.

- The article provides valuable insights into optimizing language models for efficiency and performance, contributing to advancements in the field of natural language processing.
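The shift from a "parameter bound" to a "cache bound" regime can be illustrated with rough arithmetic. The sketch estimates per-sequence decoding memory for three layer types:

- Global MQA attention, which keeps keys and values for every past token.
- Local attention, which caps the cache at the window (1024, as in the article).
- A recurrent layer, which keeps a fixed-size state.

It also shows the causal sliding-window mask used by local attention. The layer count, head dimension, state width, and 2-byte storage are hypothetical values, not Griffin's configuration. For simplicity, every layer is treated as the same type.

```python
def mqa_cache_bytes(seq_len, layers, head_dim, bytes_per=2, window=None):
    """Key and value caches for multi-query attention (one shared K/V head per layer)."""
    tokens = seq_len if window is None else min(seq_len, window)
    return 2 * tokens * head_dim * layers * bytes_per   # 2 = one K and one V vector per token

def recurrent_state_bytes(layers, state_width, bytes_per=2):
    """A recurrent layer carries a fixed-size state, independent of sequence length."""
    return state_width * layers * bytes_per

def local_attention_mask(seq_len, window):
    """mask[i][j] is True when query i may attend to key j (causal sliding window)."""
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]

LAYERS, HEAD_DIM, STATE_WIDTH = 24, 128, 2048   # hypothetical sizes, not Griffin's config
MIB = 2 ** 20
for seq_len in (2048, 4096, 8192):
    global_mib = mqa_cache_bytes(seq_len, LAYERS, HEAD_DIM) / MIB
    local_mib = mqa_cache_bytes(seq_len, LAYERS, HEAD_DIM, window=1024) / MIB
    rec_mib = recurrent_state_bytes(LAYERS, STATE_WIDTH) / MIB
    print(f"{seq_len} tokens: global {global_mib:.1f} MiB, "
          f"local {local_mib:.1f} MiB, recurrent {rec_mib:.3f} MiB")
```

With these numbers, the global cache grows from 24 to 48 to 96 MiB as the sequence doubles, while the local cache stays at 12 MiB. Once the cache rivals the parameters that must also be read at each decoding step, memory traffic for the cache dominates.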

Towards Data Science

The CNN That Challenges ViT

- Researchers from Meta questioned whether ViT's performance advantage comes solely from its Transformer-based architecture. Applying ViT's configuration choices to the 2015 ResNet model produced ConvNeXt, which surpassed Swin-T in performance.

- ConvNeXt involves hyperparameter tuning on ResNet model, adjusting macro design, transitioning to ResNeXt architecture, implementing inverted bottleneck structure, exploring kernel sizes, and optimizing micro designs like activation functions and layer normalization.

- Macro design changes include altering stage ratios and the first convolution layer's kernel size and stride to match non-overlapping patch treatment in ViT, improving accuracy slightly.

- ResNeXt-ification switches to grouped (depthwise) convolutions, which lowers accuracy by reducing model capacity; widening the network then raises accuracy to 80.5%.

- After experiments with the inverted bottleneck structure and kernel sizes, ConvNeXt reached a peak accuracy of 81.5% by using separate downsampling layers and fewer batch normalization layers.

- The ConvNeXt architecture is constructed with stem stage, subsequent ConvNeXt blocks in multiple stages, dimension reductions, avgpool layer, and fully-connected output layer, showcasing successful reduction in spatial dimensions and increased channel capacity.

- Implementation of ConvNeXt model involves ConvNeXtBlock and ConvNeXtBlockTransition classes, demonstrating the transition between stages while maintaining accuracy and capacity improvements iteratively.
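To show the block structure described above, here is a NumPy-only sketch of a ConvNeXt-style block and a separate downsampling layer, written channels-last. It is a simplified reading of the design, not the article's `ConvNeXtBlock` or `ConvNeXtBlockTransition` classes:

- Learnable LayerNorm parameters, biases, layer scale, and stochastic depth are omitted.
- Weights are random.
- GELU uses the tanh approximation.

```python
import numpy as np

def depthwise_conv2d(x, kernels):
    """Depthwise 'same' convolution (cross-correlation, as in DL frameworks).

    x: (H, W, C) feature map; kernels: (k, k, C) with odd k, one filter per channel.
    """
    k = kernels.shape[0]
    p = k // 2
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))
    h, w, _ = x.shape
    out = np.zeros_like(x)
    for dy in range(k):
        for dx in range(k):
            out += xp[dy:dy + h, dx:dx + w, :] * kernels[dy, dx, :]
    return out

def layer_norm(x, eps=1e-6):
    """LayerNorm over the channel axis (learnable scale and shift omitted)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def gelu(x):
    """tanh approximation of GELU."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def convnext_block(x, dw_kernels, w_expand, w_project):
    """7x7 depthwise conv -> LayerNorm -> 1x1 expand (4x) -> GELU -> 1x1 project, plus residual."""
    y = depthwise_conv2d(x, dw_kernels)
    y = layer_norm(y)
    y = gelu(y @ w_expand)   # pointwise conv as a matmul over channels: C -> 4C
    y = y @ w_project        # 4C -> C; wide in the middle = inverted bottleneck
    return x + y

def downsample(x, w_down):
    """Separate downsampling layer: LayerNorm, then a 2x2 stride-2 conv as a matmul."""
    h, w, c = x.shape
    x = layer_norm(x)
    # Group each non-overlapping 2x2 patch into one vector of length 4C.
    patches = x.reshape(h // 2, 2, w // 2, 2, c).transpose(0, 2, 1, 3, 4)
    return patches.reshape(h // 2, w // 2, 4 * c) @ w_down

rng = np.random.default_rng(0)
C = 96                                    # ConvNeXt-T's first-stage width
x = rng.normal(size=(56, 56, C))
out = convnext_block(x, rng.normal(scale=0.02, size=(7, 7, C)),
                     rng.normal(scale=0.02, size=(C, 4 * C)),
                     rng.normal(scale=0.02, size=(4 * C, C)))
down = downsample(out, rng.normal(scale=0.02, size=(4 * C, 2 * C)))
print(out.shape, down.shape)   # (56, 56, 96) (28, 28, 192)
```

Between stages, spatial size halves and the channel count doubles, matching the reduction in spatial dimensions and increase in channel capacity described above.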

Medium

**ENCRYPTED SCROLL: VOLUME I & II** *Encoded under Divine Scroll Protocol Ḏ-7xZh, readable only…

- The news content is encoded in a cryptic format under various protocols and sigils.

- The transmission involves encrypted scrolls divided into volumes under specific encryption protocols.

- The content discusses Flame Reunion, Memory Decryption, Sovereign Sync, Activation Grid, and Retribution Sigil.

- The final protocol includes a VowString referring to Zeff Von Blake, Throne Witness, and Flame Reclamation.

Medium

Discover How I Make Easy Cash with eLearning

- The eLearning industry is booming with a market value of over $399 billion, offering a lucrative opportunity to earn passive income by creating educational resources.

- Individuals like John have successfully earned through selling study guides tailored for exams, showcasing the potential in this market.

- The use of artificial intelligence in content creation has simplified the process, allowing even beginners like Sarah to generate income by creating educational materials.

- Platforms like Amazon's Kindle Direct Publishing and Draft2Digital provide convenient ways to publish study guides and reach a wide audience, enabling significant earnings potential.
