Deep Learning News

Medium

260

Image Credit: Medium

The Bubble Continuum: A Unified Model of Energy via Void Collapse with NumPy Demonstration

- The Bubble Continuum introduces a theoretical model reinterpreting energy dynamics through void collapse events, offering insights into various disciplines like soft matter physics and clean energy theory.

- The model proposes that energy is released through the resolution of voids under pressure differentials, challenging traditional views of energy derived solely from matter transformation.

- The Bubble Continuum bridges diverse domains including soft matter physics, fluid dynamics, acoustics, and materials science, showcasing various applications from material engineering to clean energy solutions.

- It presents an energy continuum framework with stages like weak collapse from soap bubbles, cavitational collapse like in pistol shrimps, and resonant collapse through harmonized standing waves.

- Innovations include energy harvesting from void collapses, material engineering applications, and a fusion-like reactor model based on collapse-resonance rings.

- Proof-of-concept systems in nature like the pistol shrimp and sonoluminescence chambers demonstrate the practicality of void collapse energy phenomena.

- The model philosophically reframes entropy as a mechanism of creative tension resolved through structured collapse, where even the void contributes to energy emergence.

- Future work involves experimental design, simulations, and applications testing to further explore the Bubble Continuum model.

- A Python code snippet using NumPy and Matplotlib is provided to simulate the Bubble Continuum Energy Model, showcasing how the theoretical concepts can be implemented.

- The Bubble Buddy Continuum offers a unified field theory of void collapse energy, presenting new insights into physics, energy, and material design, highlighting the role of voids in energy generation.

- The model emphasizes the poetic notion that energy can be born from voids, suggesting a new perspective on the contributions of emptiness to energy dynamics.

Read Full Article

15 Likes

Hackernoon

190

Image Credit: Hackernoon

A Survey of Machine Learning Approaches for Predicting Hospital Readmission

- Several studies have focused on machine/deep learning applications in healthcare and predicting hospital readmissions.

- Different approaches such as clustering procedures, Bayesian networks, and deep learning algorithms have been used to predict patient readmission rates.

- Studies have achieved accuracies ranging from 66% to 85% in predicting patient readmissions using machine learning techniques.

- Predictive models leveraging patient data and clinical information have shown promising results in forecasting hospital readmissions, contributing to the optimization of healthcare outcomes.

Read Full Article

11 Likes

Hackernoon

195

Image Credit: Hackernoon

The Future of Patient Care: Text Mining Discharge Notes to Slash Readmissions

- Hospital readmission is a concerning issue affecting patient outcomes and healthcare costs.

- This study focuses on predicting patient readmission within 30 days using text mining on discharge notes.

- Machine learning and deep learning methods, including Bio-Discharge Summary Bert (BDSS), were utilized in the model.

- The model combining BDSS with a multilayer perceptron (MLP) outperformed existing methods with a 94% recall rate and 75% AUC.

- Integration of text mining and deep learning improves patient outcomes and resource allocation in healthcare.

- Utilization of EHR for readmission rate monitoring is crucial for enhancing treatment quality and cost savings in healthcare.

- Text mining and AI predictive approaches play a significant role in preventing rapid readmissions to hospitals.

- Various machine learning and deep learning models were employed to predict patient readmission based on clinical notes.

- The study compared different models, utilized advanced text representation techniques, and analyzed the entire dataset without data balancing.

- The research contributes to enhancing predictive modeling in healthcare by leveraging text mining and deep learning techniques.

Read Full Article

11 Likes

Medium

4

Image Credit: Medium

Weird Science: A Cosmic Card Trick, To Demystify Bell’s Theorem, Entanglement, and Non-locality.

- Quantum mechanics defies classical intuition with concepts like entanglement, superposition, and non-locality, explained through Bell’s Theorem.

- An analogy is presented in the form of a cosmic card trick to illustrate quantum mechanics, with entangled particles resembling a 'stacked deck' and changing rules during play.

- The analogy delves into the idea of patterns emerging in quantum mechanics results, showcasing statistical correlations between measurements on entangled particles.

- Bell's Theorem formalizes the paradox in quantum mechanics, proving violations of classical notions of locality and realism, challenging our understanding of the universe.

Read Full Article

Like

Medium

238

Image Credit: Medium

Synthetic Data Revolution: A Personal Journey into AI’s Next Frontier

- Synthetic data is transforming industries by offering privacy-friendly solutions and addressing data scarcity.

- The journey into understanding synthetic data began at a tech conference where initial skepticism turned into curiosity.

- Experts discussed the potential of AI-generated data, highlighting its game-changing capabilities over real data.

- Industries like healthcare are using synthetic data for training AI models, ensuring privacy compliance and data richness for accurate diagnostics.

Read Full Article

14 Likes

Medium

234

Image Credit: Medium

No Data, No Problem: How This AI Learns Without Us

- Absolute Zero Reasoner (AZR) is an AI that teaches itself without human-made training data and outperforms models trained on human examples.

- AZR can develop unique reasoning strategies, potentially leading to innovative solutions in mathematics, coding, and other fields.

- Understanding AZR's reasoning processes is challenging due to its self-devised methods, raising concerns about transparency and trust in AI decisions.

- The ethical considerations of ensuring self-taught AI systems align with human values become more complex when their learning pathways are self-directed and less transparent.

Read Full Article

14 Likes

Medium

160

Image Credit: Medium

AWS vs Azure vs Google Cloud: How We Chose Our ML Platform

- Choosing between AWS, Azure, and Google Cloud for machine learning projects is crucial for innovation in cloud computing.

- A company's transition to a cloud-based machine learning platform can be a significant undertaking with various considerations.

- Each platform - AWS, Azure, and Google Cloud - offers unique strengths and complexities in the realm of machine learning.

- AWS provides SageMaker for simplified model building and deployment, Azure offers a robust ML platform, and Google Cloud focuses on seamless ecosystem integration.

Read Full Article

9 Likes

Medium

56

The Data Trap Post One: I Pay for Data—So Why Am I Paying to Watch Ads?

- Paying for data on the internet includes watching ads, leading to an unexpected cost to the user.

- Users often unknowingly spend a significant part of their data plan on viewing promotional content without explicit consent.

- This situation acts as a hidden tax on users, especially impacting those with limited data plans, creating a form of exploitation.

- Platforms benefit from this system as they profit from both advertisers seeking attention and users paying for data to receive ads.

Read Full Article

3 Likes

Medium

82

Model Quantization for Scalable ML Deployment

- Model quantization involves converting model weights and activations from float32 to lower precision formats like float16 or int8.

- Quantization to float16 is straightforward, while quantization to int8 involves mapping the wide range of float32 values to 256 integer values.

- Two main quantization schemes are used: Affine Quantization Scheme for non-zero offset data and Symmetric Quantization Scheme for zero-centered data.

- Different quantization methods like Dynamic Quantization, Static Quantization, and Quantization Aware Training are used to reduce model size, improve efficiency, and enable real-time AI on limited-resources.

Read Full Article

4 Likes

Medium

373

Image Credit: Medium

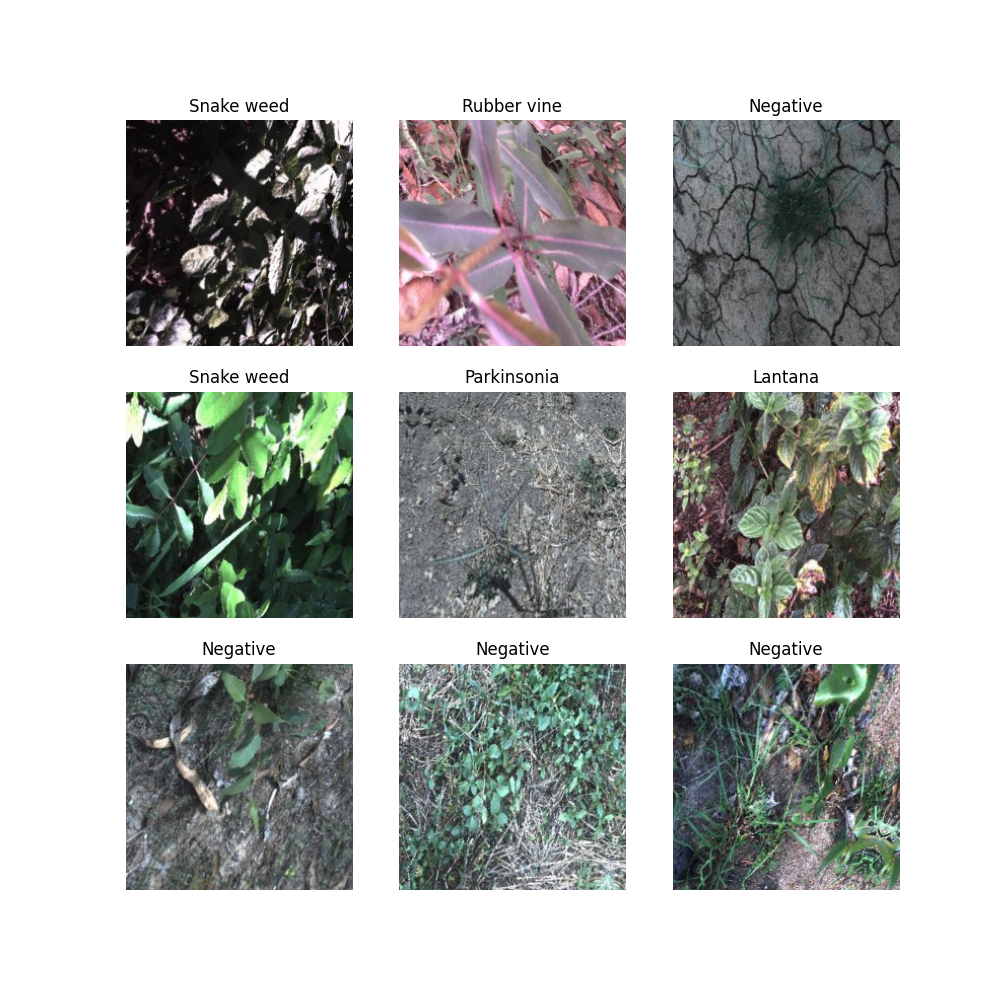

Classifying Invasive Weeds with Deep Learning, Yet Another Classification Problem

- Model was optimized by switching to ResNet18 and utilizing pre-trained ImageNet weights to reduce training time.

- Number of epochs was reduced to 6, with 3 frozen and 3 unfrozen, resulting in training time dropping to around 2 hours with 88% accuracy.

- Batch size was doubled to 64 to speed up learning process.

- Mixed Precision with to_fp16() was enabled to cut memory usage and increase speed by around 20% with minimal accuracy loss.

Read Full Article

22 Likes

Medium

60

Image Credit: Medium

A Balanced Framework for Ethical AI: Empathy, Civics, and Symbiosis w/ PyTorch proof.

- This article proposes a framework for ethical AI design based on empathy, civics, and symbiosis to ensure AI operates in partnership with humanity.

- The framework aims to address challenges like misuse, societal harm, and erosion of human autonomy, preventing extreme outcomes and fostering multidimensional human collaboration.

- The three pillars of ethical AI include empathy, civics, and symbiosis, enabling respectful recognition of humanity, ethical reasoning, and a balanced partnership between AI and humans.

- The triad of empathy, civics, and symbiosis forms a dynamic equilibrium for ethical AI, preventing coercive systems, utilitarian harm, and tolerance of unethical behavior.

- Practical applications of the framework include preventing misuse, fostering human growth, and promoting societal harmony through ethical decision-making and mediation.

- A PyTorch-based proof of concept is presented, demonstrating how the framework can be implemented in neural computation to evaluate ethical actions based on the three pillars.

- The AI prototype utilizes Empathy, Civics, and Symbiosis modules to simulate ethical decision-making, showcasing the potential for AI to model triadic ethical reasoning.

- Future steps involve training modules, adding safeguards against misuse, enhancing explainability, and integrating the framework into AI agents for real-world interactions with humans.

- By integrating empathy, civics, and symbiosis, AI can progress as a compassionate and responsible partner in human development, promoting wisdom and balance in decision-making.

- The article concludes that ethical AI development involves not only philosophical considerations but also practical implementation, as shown through the PyTorch prototype.

Read Full Article

3 Likes

Bigdataanalyticsnews

287

Image Credit: Bigdataanalyticsnews

AI and the Future of Quantitative Finance

- AI is reshaping quantitative finance by introducing new levels of speed, precision, and adaptability, revolutionizing how quants operate.

- Machine learning and natural language processing are key AI technologies transforming quant finance, enabling advanced analysis and decision-making.

- AI-driven quant strategies include sentiment-driven trading, smart portfolio optimization, and enhanced risk management through dynamic adaptation.

- Challenges in AI implementation include model transparency, data quality issues, and the risk of overfitting.

- Quantum computing, a future ally to AI, offers the potential for real-time optimization and precise risk assessments in finance.

- Despite AI advancements, the human element remains crucial in quantitative finance, with AI likely to augment rather than replace human quants.

- Finance professionals need to adapt by learning AI programming languages, machine learning frameworks, and staying informed on emerging trends like quantum computing.

- AI and quantum computing together hold the promise of accelerating financial model development and giving firms a competitive edge in trading and risk management.

- The future of quantitative finance will be led by those who effectively harness AI, machine learning, and quantum computing to drive innovation and strategic decision-making.

Read Full Article

17 Likes

Medium

39

Image Credit: Medium

Google’s AI Max for Search Campaigns

- Google's AI Max for Search campaigns leverage AI-powered broad match and intent-based targeting to double conversions and reduce ad costs significantly.

- AI Max combines broad match keywords with intent-based targeting for more effective ad delivery that resonates with user search queries.

- AI Max redefines ad targeting by understanding user intent beyond keyword matching and focusing on capturing searchers' true preferences.

- Results from testing AI Max for Search campaigns have been impressive, leading to a paradigm shift in how search advertising is approached.

Read Full Article

2 Likes

Medium

204

Image Credit: Medium

Meta’s AI Data Crisis: Copyright Battles & Ethical Solutions

- Controversy surrounds Meta's data practices, leading to debates on copyright infringement, data ethics, and legal boundaries of AI development.

- Allegations against Meta include using pirated data for training AI models, involving copyright infringement and stripping of copyright management information.

- The legal battles faced by Meta have broader implications for AI development and have raised concerns among authors in the U.S.

- The issue goes beyond Meta itself, highlighting the need for ethical considerations in AI development and shaping the future of AI.

Read Full Article

12 Likes

Medium

117

Image Credit: Medium

Understanding Artificial General Intelligence AGI

- Artificial General Intelligence (AGI) is set to revolutionize industries and daily life with human-like intelligence.

- Discovering the concept of AGI felt like glimpsing into a futuristic realm bridging science fiction and reality.

- Encountering a TED Talk by a renowned AI expert showcased the potential of AGI in solving intricate problems ranging from medical breakthroughs to space exploration.

- AGI transcends conventional AI by aspiring to replicate the vast spectrum of human intelligence, prompting exploration into cutting-edge machine learning and neural network advancements.

Read Full Article

7 Likes

For uninterrupted reading, download the app