DevOps News

VentureBeat

Speed is King: How Google’s $32B Wiz play rewrites DevOps security rules

- Google's acquisition of Wiz highlights the importance of speed in modern DevOps cycles while maintaining security.

- By acquiring Wiz, Google gains an AI-infused Cloud Native Application Protection Platform (CNAPP) to enhance threat detection and reduce false positives.

- Wiz's integration with Google will improve risk detection, threat intelligence, and automated remediation for cloud-based applications and models.

- Google's $32 billion price tag signals how urgently fast-paced DevOps environments need AI-driven CNAPPs.

- With enterprises increasingly adopting multi-cloud strategies, Google needs to provide a true CNAPP tool with multi-cloud support after the acquisition.

- CNAPP plays a crucial role in helping DevOps teams reduce risks, block intrusions, and ensure secure CI/CD pipelines.

- Wiz's expansion into container security and software composition analysis positions it as a strong competitor in the application security space.

- Google's goal is to unify CNAPP solutions for end-to-end security from code to cloud, empowering faster development cycles.

- The global CNAPP market is projected to grow significantly, driven by the need for advanced cloud security solutions.

- Enterprises require AI-powered CNAPP platforms to streamline security processes and mitigate risks in multi-cloud environments.

Dev

CockroachDB: live certificate rotation

- CockroachDB supports live certificate rotation in Kubernetes deployments, keeping client connections open without restarting nodes.

- Without automation, rotation means updating the certificate secrets by hand and then getting CockroachDB to read the new files.

- Identifying target pods with common labels enables the tool to refresh certificates automatically.

- The process involves deleting old certificates, saving new ones, adjusting permissions, and triggering a certificate reload.

- A SIGHUP signal notifies CockroachDB of certificate changes without disconnecting clients.

- Updated certificates can be verified in CockroachDB's admin console.

- YAML configurations and a Node.js automation script are available on GitHub for reference and implementation.

- It is recommended to collaborate with Cockroach Enterprise Architects for the initial certificate rotation.

- The automation process streamlines certificate management for CockroachDB in a containerized environment.

- Using a Node.js app, organizations can keep certificate rotation reliable and repeatable.

- Effective certificate rotation is crucial for maintaining security and compliance in a CockroachDB deployment.
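The file-handling part of the process above (delete the old certificate, save the new one, fix permissions, send SIGHUP) can be sketched in Python as below. This is not the article's Node.js tool: it assumes the certificates sit on a filesystem the script can write to (for example inside the pod), and the file names `node.crt` and `node.key` follow CockroachDB's usual naming but are assumptions here. Fetching the secret and finding pods by label are left out.

```python
import os
import signal
import stat
from pathlib import Path
from typing import Mapping, Optional

# Private keys: owner read/write only (0600). CockroachDB checks that key
# files are not readable by group or others.
KEY_MODE = stat.S_IRUSR | stat.S_IWUSR
# Certificates are public material: 0644.
CERT_MODE = KEY_MODE | stat.S_IRGRP | stat.S_IROTH


def rotate_certs(cert_dir: str, new_files: Mapping[str, bytes],
                 pid: Optional[int] = None) -> list:
    """Swap certificate files in cert_dir, then optionally SIGHUP the cockroach process.

    new_files maps file names (e.g. "node.crt", "node.key") to their PEM bytes.
    Returns the sorted list of file names written.
    """
    directory = Path(cert_dir)
    for name, pem in new_files.items():
        path = directory / name
        path.unlink(missing_ok=True)   # 1. delete the old file, if any
        path.write_bytes(pem)          # 2. save the new one
        # 3. adjust permissions; keys must not be group/world readable
        os.chmod(path, KEY_MODE if name.endswith(".key") else CERT_MODE)
    if pid is not None:
        # 4. ask the running node to reload certificates without
        #    dropping client connections
        os.kill(pid, signal.SIGHUP)
    return sorted(new_files)
```

The sketch keeps the delete-then-save order described above; writing to a temporary file and moving it into place with `os.replace` would avoid the brief moment when a file is missing.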

The New Stack

KubeFleet: The Future of Multicluster Kubernetes App Management

- KubeFleet is a cloud native multicluster/multicloud solution designed to facilitate the deployment, management and scaling of applications across multiple Kubernetes clusters.

- Managing applications on multiple clusters increases operational complexity, but KubeFleet aims to streamline multicluster application management by providing advanced scheduling, resource optimization, and policy-based management.

- KubeFleet solves the challenges of multicluster deployment by offering a single control plane for deploying applications across multiple clusters and supporting metrics-based placement strategies.

- KubeFleet is now part of CNCF and is seeking involvement from cloud native practitioners to shape the future of multicluster application management.
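To make "metrics-based placement" concrete, here is a hypothetical Python sketch of a pick-N scheduler: filter member clusters by labels, then rank the survivors by a metric. The `Cluster` type, the `available_cpu` metric, and `pick_clusters` are illustrative names, not KubeFleet's API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Cluster:
    name: str
    labels: Dict[str, str] = field(default_factory=dict)
    available_cpu: float = 0.0  # hypothetical metric reported by each member cluster


def pick_clusters(clusters: List[Cluster], selector: Dict[str, str], n: int) -> List[str]:
    """Return up to n cluster names that match every selector label,
    preferring clusters with the most free CPU (ties broken by name)."""
    eligible = [
        c for c in clusters
        if all(c.labels.get(key) == value for key, value in selector.items())
    ]
    eligible.sort(key=lambda c: (-c.available_cpu, c.name))
    return [c.name for c in eligible[:n]]
```

Separating the filter (hard constraints) from the sort (preferences) mirrors how multicluster schedulers commonly split placement into eligibility and scoring.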

Medium

Decoding Privacy in DevOps: Why Understanding Technical Terms is Key

- Privacy policies often contain technical terms that are widely misunderstood.

- Misunderstanding technical terms can lead to serious vulnerabilities in DevOps.

- The contextual integrity approach can help analyze privacy policies based on appropriate information flow.

- Best practices for addressing privacy challenges in DevOps include prioritizing clarity, context, and continuous learning.
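Contextual integrity (Helen Nissenbaum's framework) judges an information flow by its context: who sends what type of information about whom, to whom, and under which transmission principle. A toy Python encoding of that idea follows; the norms are hypothetical examples, not drawn from the article.

```python
from typing import NamedTuple, Set


class Flow(NamedTuple):
    sender: str
    recipient: str
    subject: str
    info_type: str
    principle: str  # transmission principle, e.g. "with consent"


def is_appropriate(flow: Flow, norms: Set[Flow]) -> bool:
    """A flow is appropriate only if some agreed norm matches it on all five
    parameters, with '*' in a norm acting as a wildcard."""
    for norm in norms:
        if all(n == "*" or n == f for n, f in zip(norm, flow)):
            return True
    return False


# Hypothetical norms for an application in a deployment pipeline
NORMS = {
    Flow("app", "log-store", "*", "request-id", "for debugging"),
    Flow("app", "analytics", "user", "email", "with consent"),
}
```

The point of the model is that the same data (an email address) can be fine in one flow and a violation in another, depending on recipient and principle.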

Dev

7 Must Read Tech Books for Experienced Developers and Leads in 2025

- In 2025, experienced developers and leads can benefit from reading a variety of tech books focusing on both technical and operational skills.

- Key points to keep in mind include learning from others' mistakes, enjoying the reading process, and continuously seeking self-improvement.

- Recommended books encompass various aspects such as automation, DevOps, microservices, team management, and site reliability engineering.

- The Phoenix Project is highlighted as a transformative read that can shift the reader's mindset and improve programming skills.

- Building Microservices: Designing Fine-Grained Systems (2nd edition) is suggested for understanding the challenges and implementation of microservices.

- Team Topologies offers insights into managing and organizing development teams effectively for high performance.

- The Unicorn Project, a sequel to The Phoenix Project, provides valuable insights into technology business improvements while being an enjoyable read.

- Site Reliability Engineering shares Google's approach to high availability and operational excellence, offering practical advice for tech professionals.

- Accelerate: Building and Scaling High-Performing Technology Organizations focuses on measuring software delivery performance and driving higher team performance.

- Continuous Delivery is essential for those looking to implement CI/CD practices effectively for rapid and reliable software delivery.
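Accelerate is best known for four key delivery metrics: deployment frequency, lead time for changes, change failure rate, and time to restore service. The minimal Python sketch below computes them from a deployment log; the `Deployment` record shape is an assumption for illustration, and medians are used where teams often report means.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it degrade service?
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def delivery_metrics(deploys: List[Deployment], period_days: float) -> dict:
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    failures = [d for d in deploys if d.failed]
    restore_times = [d.restored_at - d.deployed_at
                     for d in failures if d.restored_at is not None]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "median_lead_time": median(lead_times) if lead_times else None,
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }
```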

Medium

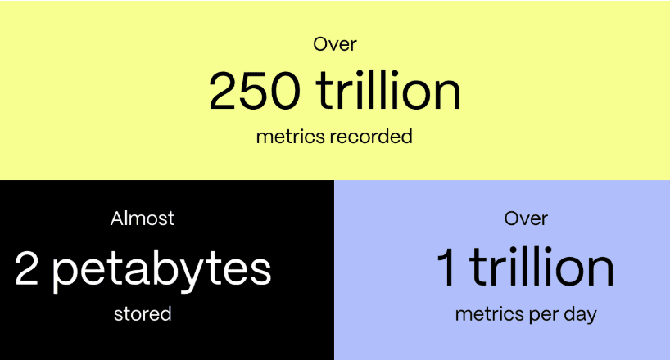

You, Too, Can Scale Postgres to 2 PB and 1 T Metrics per Day

- Timescale Cloud database service is now handling a trillion metrics daily and storing nearly two petabytes of data.

- This feat challenges the common belief that Postgres cannot scale effectively.

- The operation runs entirely on Timescale Cloud without special treatment or hidden infrastructure.

- The workload behind these numbers is Insights, a feature that provides detailed query analytics for Timescale Cloud users.

- Initially collecting a dozen metrics per query, the database now captures significantly more data points.

- The data volume has grown due to an expanding customer base, increased query loads, and enhanced metrics collection.

- Despite the massive growth in data and queries, the architecture largely remains the same as described in the original post.

- The use of continuous aggregates and tiered storage has helped to efficiently manage and query the vast amount of stored data.

- Timescale's tiered storage, hypertables, and continuous aggregates have enabled efficient scaling and processing of massive workloads.

- Insights has showcased that Postgres, when engineered for scale, can effectively handle immense workloads.
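For scale, one trillion metrics per day averages about 11.6 million per second (10^12 / 86,400 s). Continuous aggregates cope with that by precomputing rollups over time buckets so queries read summaries instead of raw rows. The in-memory Python analogue below only illustrates that idea and is not Timescale's implementation; `time_bucket` borrows the name of TimescaleDB's SQL function, and the hourly width and the (timestamp, query id, duration) row shape are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def time_bucket(width_s: int, ts: int) -> int:
    """Floor a Unix timestamp (seconds) to the start of its bucket."""
    return ts - ts % width_s


class HourlyRollup:
    """Per-(bucket, query_id) count/sum/max, updated as rows arrive so
    reads never rescan the raw data."""

    def __init__(self, width_s: int = 3600) -> None:
        self.width_s = width_s
        # (bucket_start, query_id) -> [count, total_ms, max_ms]
        self.buckets: Dict[Tuple[int, str], list] = defaultdict(lambda: [0, 0.0, 0.0])

    def ingest(self, rows: Iterable[Tuple[int, str, float]]) -> None:
        for ts, query_id, duration_ms in rows:
            agg = self.buckets[(time_bucket(self.width_s, ts), query_id)]
            agg[0] += 1
            agg[1] += duration_ms
            agg[2] = max(agg[2], duration_ms)

    def summary(self, bucket_start: int, query_id: str) -> Tuple[int, float, float]:
        """Return (count, mean_ms, max_ms) for one bucket; zeros if it is empty."""
        count, total, peak = self.buckets.get((bucket_start, query_id), (0, 0.0, 0.0))
        return count, (total / count if count else 0.0), peak
```

Storing count and sum rather than the mean keeps the rollup mergeable: two partial buckets can be combined exactly.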

Medium

Introduction to KCP

- KCP is an open-source project that offers a Kubernetes-like control plane for managing applications across multiple clusters and environments.

- Unlike traditional Kubernetes clusters, KCP provides a control plane without being tied to specific nodes or pods, enabling platform engineers to build platforms and SaaS-like services using familiar Kubernetes API capabilities.

- KCP allows organizations to create multiple logical clusters within a single control plane, simplifying management and reducing overhead.

- By decoupling the control plane from the data plane, KCP offers a lightweight, scalable, and multi-tenant solution for efficient Kubernetes management.
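The logical-cluster idea can be pictured as one API store partitioned by cluster name, with no nodes or pods behind it. The Python toy below is a hypothetical model of that isolation, not kcp's code or API; `ControlPlane`, `apply`, and the other names are invented for illustration.

```python
from typing import Dict, List, Tuple


class ControlPlane:
    """One shared store in which every object key carries its logical cluster,
    so tenants can reuse names without colliding."""

    def __init__(self) -> None:
        self._clusters: set = set()
        self._store: Dict[Tuple[str, str, str], dict] = {}

    def create_logical_cluster(self, name: str) -> None:
        # Creating a logical cluster is just bookkeeping: no nodes are provisioned.
        self._clusters.add(name)

    def apply(self, cluster: str, kind: str, name: str, spec: dict) -> None:
        if cluster not in self._clusters:
            raise KeyError(f"unknown logical cluster: {cluster}")
        self._store[(cluster, kind, name)] = spec

    def get(self, cluster: str, kind: str, name: str) -> dict:
        return self._store[(cluster, kind, name)]

    def list_names(self, cluster: str, kind: str) -> List[str]:
        return sorted(n for (c, k, n) in self._store if c == cluster and k == kind)
```

Because a logical cluster is only a key prefix here, creating one costs almost nothing, which is the intuition behind the "lightweight, multi-tenant" claim.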

Dev

Why Do Developers Struggle with Project Management? A Practical Guide to Finding the Right Tool

- Developers often struggle with traditional project management tools that disrupt their workflow with excessive meetings, rigid task structures, and poor integration with development tools.

- Teamcamp offers a developer-friendly project management solution that enhances focus, collaboration, and integrates seamlessly with development tools.

- Challenges faced by developers include excessive meetings, rigid workflows, poor integration with developer tools, lack of progress visibility, and inefficient communication between stakeholders.

- Teamcamp addresses these challenges by providing real-time collaboration, customizable workflows, seamless integration with tools like GitHub, and improved visibility into project progress.

- Teamcamp's features include streamlined project planning, real-time collaboration, customizable workflows, visual progress tracking, seamless integration with developer tools, and comprehensive solutions for different teams within an organization.

- The article presents Teamcamp as the best project management software for developers, citing its developer-centric approach, reduced administrative overhead, better team collaboration, and customizable workflows.

- For 2025, the guide argues that choosing the right project management software is crucial and, after comparing the available tools, recommends Teamcamp for developers.

- Developers need tools that reduce administrative burden, integrate well with development tools, and allow flexible project tracking, all of which Teamcamp provides.

- Teamcamp empowers development teams to streamline workflows, improve collaboration, and boost productivity through its user-friendly interface.

- To address challenges in project management, developers can explore Teamcamp for efficient project planning, collaboration, customizable workflows, and integration with essential developer tools.

Medium

How I Automated My Deployment Process Using GitHub Actions and Docker

- The article discusses how the author automated their deployment process using GitHub Actions and Docker.

- The author faced challenges with image sizes and handling environment variables, which they addressed using a multi-stage build process and ARG/ENV in the Dockerfile.

- The GitHub Actions setup was organized into a clear, modular structure, with reusable custom actions and purpose-specific workflow files.

- Automating the deployment process with GitHub Actions and Docker improved workflow, minimized errors, and enhanced reliability and scalability.
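As a minimal sketch of the build step such a workflow might run, the Python helper below assembles a `docker build` command that selects a multi-stage target and passes build-time values with `--build-arg`. It assumes a Dockerfile whose final stage is named `runtime` and which declares `ARG APP_ENV` (both hypothetical); `GITHUB_SHA` is the commit SHA that GitHub Actions exposes to workflow steps.

```python
import os
from typing import Dict, List


def docker_build_cmd(image: str, build_args: Dict[str, str],
                     target: str = "runtime", context: str = ".") -> List[str]:
    """Build the argv for `docker build`, tagging the image with the short commit SHA."""
    sha = os.environ.get("GITHUB_SHA", "local")  # set by GitHub Actions; "local" elsewhere
    # --target stops at the named stage, so build-only tooling stays out of the final image.
    cmd = ["docker", "build", "--target", target, "-t", f"{image}:{sha[:7]}"]
    # ARG values exist only at build time; the Dockerfile must copy them into
    # ENV (e.g. `ENV APP_ENV=$APP_ENV`) if the running container needs them.
    for key, value in sorted(build_args.items()):
        cmd += ["--build-arg", f"{key}={value}"]
    cmd.append(context)
    return cmd
```

A workflow step could then run it with `subprocess.run(docker_build_cmd("myapp", {"APP_ENV": "production"}), check=True)`; returning the argv instead of running it keeps the helper easy to test.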

Dev

The Problem: PowerShell’s Hashing Illusion

- PowerShell lacks a reliable way to hash complex objects deterministically, causing issues in data comparison and integrity verification.

- Existing object hashing techniques in PowerShell often lead to false positives and false negatives.

- The search for a solid object hashing function in PowerShell yielded no satisfactory results, with most solutions focusing on file hashing.

- Common issues with object hashing in PowerShell include non-deterministic serialization, lack of standard approach, and performance inefficiencies.

- PowerShell's JSON-based hashing is flawed for complex objects due to issues with object structure preservation and type coercion.

- The importance of a reliable object hashing function in PowerShell is emphasized to avoid potential data discrepancies and errors.

- The DataHash class is introduced as a solution for structured and deterministic object hashing in PowerShell, addressing key limitations.

- Key features of the DataHash class include order-preserving serialization, support for complex data structures, and configurable hashing algorithms.

- The planned roadmap includes a v1.0 release of the DataHash class with enhanced capabilities and a future C# rewrite for v2.0 with parallelization.

- The v2.0 implementation aims for branch-slicing parallelization to improve hashing performance while maintaining determinism.
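The core idea behind deterministic object hashing, a canonical, type-tagged serialization fed to a configurable hash algorithm, can be sketched in Python. This is not the DataHash class or its PowerShell code: the encoding format is invented for illustration, and treating plain dictionaries as unordered (sorting their entries) while preserving list order is a design choice of this sketch.

```python
import hashlib
from typing import Any, List


def _encode(obj: Any, out: List[bytes]) -> None:
    """Append a type-tagged, self-delimiting encoding of obj to out."""
    if obj is None:
        out.append(b"n;")
    elif isinstance(obj, bool):               # check bool before int (bool subclasses int)
        out.append(b"b:1;" if obj else b"b:0;")
    elif isinstance(obj, int):
        out.append(b"i:%d;" % obj)
    elif isinstance(obj, float):
        out.append(b"f:" + obj.hex().encode() + b";")  # exact, no decimal rounding
    elif isinstance(obj, str):
        data = obj.encode("utf-8")
        out.append(b"s%d:" % len(data) + data)         # length prefix avoids delimiter clashes
    elif isinstance(obj, (list, tuple)):
        out.append(b"l%d[" % len(obj))                  # order-preserving
        for item in obj:
            _encode(item, out)
        out.append(b"]")
    elif isinstance(obj, dict):
        entries = []
        for key, value in obj.items():
            kb: List[bytes] = []
            vb: List[bytes] = []
            _encode(key, kb)
            _encode(value, vb)
            entries.append((b"".join(kb), b"".join(vb)))
        out.append(b"d%d{" % len(entries))
        for kb_joined, vb_joined in sorted(entries):    # insertion order does not matter
            out.append(kb_joined + vb_joined)
        out.append(b"}")
    else:
        raise TypeError(f"unsupported type: {type(obj).__name__}")


def data_hash(obj: Any, algorithm: str = "sha256") -> str:
    parts: List[bytes] = []
    _encode(obj, parts)
    return hashlib.new(algorithm, b"".join(parts)).hexdigest()
```

Tagging every value with its type is what avoids the JSON-style coercion problem: `1`, `"1"`, and `True` all hash differently.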

Medium

How to Write DevOps (Or Any Tech) Documentation That Actually Helps Your Team

- Good documentation is crucial for DevOps teams to avoid wasted time, frustration, and system failures.

- Writing good DevOps documentation is not as difficult as it seems.

- Outdated, unclear, and jargon-filled documentation can lead to major issues when problem-solving.

- Creating clear and up-to-date documentation can greatly benefit the efficiency of DevOps teams.

Amazon

Run GenAI inference across environments with Amazon EKS Hybrid Nodes

- Amazon EKS Hybrid Nodes allow running generative AI inference workloads across cloud and on-premises environments, offering consistent management and reduced operational complexity.

- EKS Hybrid Nodes support various AI/ML use cases like real-time edge inference, on-premises data residency, and running inference workloads closer to source data.

- The proof of concept deployed a single EKS cluster that ran AI inference both on premises (on EKS Hybrid Nodes) and in the AWS Cloud (with Amazon EKS Auto Mode).

- Prerequisites include setting up Amazon VPC, AWS Site-to-Site VPN connection, on-premises nodes with NVIDIA drivers, and tools like Helm, kubectl, eksctl, and AWS CLI.

- Steps include creating an EKS cluster with EKS Hybrid Nodes and Auto Mode enabled, preparing hybrid nodes, installing NVIDIA device plugin for Kubernetes, and deploying NVIDIA NIM for inference.

- Validating node connectivity, deploying GPU capacity for Auto Mode (such as the g6 instance family), and testing NIM inference were all part of the deployment process.

- Cleanup steps were outlined to avoid ongoing costs, and the post emphasized that EKS Hybrid Nodes simplify AI workload management by unifying the Kubernetes footprint across environments.

- For more detailed guidance, users are directed to the EKS Hybrid Nodes user guide and re:Invent 2024 session (KUB205) explaining hybrid nodes functionality, features, and best practices.

- Explore the Data on EKS project for further information on running AI/ML workloads on Amazon EKS.
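As a sketch of the deployment side, the Python function below builds a Pod manifest that requests a GPU through the `nvidia.com/gpu` resource advertised by the NVIDIA device plugin and, optionally, pins the Pod to hybrid nodes with a node selector. The `eks.amazonaws.com/compute-type: hybrid` label is assumed here and should be checked against the EKS Hybrid Nodes user guide; the image name is a placeholder, not a real NIM image.

```python
import json
from typing import Dict, Optional

# Assumed label for EKS hybrid nodes; verify against the EKS documentation.
HYBRID_SELECTOR = {"eks.amazonaws.com/compute-type": "hybrid"}


def inference_pod(name: str, image: str, gpus: int = 1,
                  node_selector: Optional[Dict[str, str]] = None) -> dict:
    """Return a Pod manifest (as a dict) that requests `gpus` NVIDIA GPUs."""
    spec: dict = {
        "containers": [{
            "name": name,
            "image": image,
            # GPUs are extended resources requested via limits; the NVIDIA
            # device plugin is what makes nvidia.com/gpu schedulable.
            "resources": {"limits": {"nvidia.com/gpu": gpus}},
        }],
    }
    if node_selector:
        spec["nodeSelector"] = dict(node_selector)
    return {"apiVersion": "v1", "kind": "Pod",
            "metadata": {"name": name, "labels": {"app": name}}, "spec": spec}


if __name__ == "__main__":
    # Pipe the output to `kubectl apply -f -`, which accepts JSON manifests.
    pod = inference_pod("llm-inference", "registry.example.com/inference:latest",
                        node_selector=HYBRID_SELECTOR)
    print(json.dumps(pod, indent=2))
```

Leaving `node_selector` unset lets the scheduler place the Pod on cloud GPU capacity instead, which is how one manifest can target either side of the hybrid cluster.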

HashiCorp

Disaster recovery strategies with Terraform

- A 2016 Ponemon Institute study reported an exponential rise in the total cost of unplanned outages, emphasizing the need for robust disaster recovery strategies.

- A 2022 EMA Research study put the mean total cost of unplanned outages at $12,900 per minute, underlining the importance of effective disaster recovery plans.

- Maintaining disaster recovery solutions can be costly and time-consuming, with expenses ranging from hundreds of thousands to millions of dollars annually for enterprises.

- Leveraging infrastructure as code (IaC) like HashiCorp Terraform can simplify the setup, testing, and validation of disaster recovery environments, ensuring cost-efficiency and consistency.

- Key concepts like Recovery Time Objective (RTO) and Recovery Point Objective (RPO) play a vital role in shaping an organization's disaster recovery strategy.

- Popular disaster recovery strategies include Backup & Data Recovery, Pilot Light, Active/Passive, and Multi-Region Active/Active, each varying in complexity and cost.

- Using Terraform within disaster recovery strategies offers automation, repeatability, scalability, cost efficiency, and flexibility in infrastructure provisioning and maintenance.

- Terraform's advantages include minimizing manual interventions, ensuring infrastructure consistency, scalability as needed, reduced infrastructure costs, and multi-cloud support.

- Terraform can be integrated with various disaster recovery strategies such as Backup & Data Recovery, Pilot Light, Active/Passive, and Multi-Region Active/Active to enhance operational efficiency.

- The example illustrated in the article showcases how Terraform can be applied to conduct a region failover within AWS for a web server, demonstrating the practicality and benefits of using Terraform in disaster recovery scenarios.

- Considerations like application install time, DNS propagation delays, and the need for separate backup strategies should be taken into account when utilizing Terraform for disaster recovery infrastructure.
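RTO and RPO reduce to two simple checks, and the EMA figure turns downtime into money: at a mean of $12,900 per minute, a four-hour outage costs about 240 × $12,900 = $3,096,000. The Python sketch below encodes both; `RecoveryPlan` and the timings used with it are hypothetical, not figures from the article.

```python
from dataclasses import dataclass

# Mean cost per minute of unplanned downtime, from the EMA Research 2022 study (USD).
MEAN_COST_PER_MINUTE = 12_900


@dataclass(frozen=True)
class RecoveryPlan:
    name: str
    data_loss_window_min: float  # worst-case minutes of data lost (compare with RPO)
    recovery_time_min: float     # minutes to restore service (compare with RTO)


def downtime_cost(minutes: float, cost_per_minute: float = MEAN_COST_PER_MINUTE) -> float:
    return minutes * cost_per_minute


def meets_objectives(plan: RecoveryPlan, rto_min: float, rpo_min: float) -> bool:
    """A plan qualifies only if it restores service within the RTO and loses
    no more data than the RPO allows."""
    return plan.recovery_time_min <= rto_min and plan.data_loss_window_min <= rpo_min
```

For example, a hypothetical pilot-light plan that restores in 60 minutes with a 15-minute replication lag meets a 2-hour RTO and 30-minute RPO, but not a 30-minute RTO; the gap is what a more expensive Active/Passive or Active/Active setup buys.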

SolarWinds

What Kind of Diagnostic AIOps Is Right for Your Organization?

- Diagnostic AIOps involves providing critical information about IT environments for informed decision-making.

- Anomaly detection systems help organizations monitor large volumes of data efficiently and resolve issues promptly.

- Event correlation systems group related alerts to enhance identification and resolution of underlying issues efficiently.

- Root cause analysis systems utilize machine learning to find the source of IT issues, leading to a more stable IT environment.

- Predictive analytics systems use past data to anticipate issues, minimize downtime, and optimize resource allocation for enhanced operational efficiency.

- Integrated AI-powered tools in IT management improve decision-making, collaboration, and overall IT management processes.

- Considerations for adopting diagnostic AIOps include scalability, diverse contexts, required computing resources, model accuracy, and cost.

- AI assistance is increasingly crucial in managing complex IT environments, and organizations need to determine the best deployment approach.

- The next article in the series will focus on assistive AIOps and how AI is influencing tech recruitment.
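Anomaly detection, the first category above, can start as simply as flagging points far from a trailing baseline. The Python z-score sketch below illustrates the idea; the window of 5 points and the 3-sigma threshold are arbitrary assumptions, and commercial AIOps tools use far richer models.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def zscore_anomalies(values: Sequence[float], window: int = 5,
                     threshold: float = 3.0) -> List[int]:
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` points."""
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu, sigma = mean(baseline), pstdev(baseline)
        if sigma == 0:
            # Flat baseline: any change at all is unusual.
            if values[i] != mu:
                anomalies.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

One known weakness shows up right after a spike: the outlier enters later baselines and inflates sigma, which can hide a second anomaly. That is one reason richer models are used in practice.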

SolarWinds

Get Ready for SolarWinds Day: The Era of Operational Resilience

- SolarWinds Day is an event focused on discussing operational resilience and addressing the challenges of complexity in IT environments.

- Organizations struggle to keep operations running smoothly and lack full-stack observability due to fragmented monitoring tools.

- The event will introduce Squadcast, an AI-powered incident management and collaboration tool, to enhance IT operations.

- SolarWinds Day aims to explore the future of IT management, combining operational resilience and cutting-edge technology.
