Open Source News

The Robot Report

NAU researchers release open-source exoskeleton framework

- Researchers at Northern Arizona University released an open-source robotic exoskeleton framework intended to help people with disabilities walk independently.

- The open-source system, called OpenExo, provides instructions for building single- or multi-joint exoskeletons, including design files, code, and step-by-step guides, to lower barriers to entry for potential exoskeleton developers and researchers.

- The development of exoskeletons is complex, time-consuming, and expensive, but the OpenExo framework aims to address these challenges by allowing new developers to build upon previous work.

- The research at NAU has already helped children with cerebral palsy and patients with gait disorders optimize their rehabilitation, with further potential for positive impact on individuals' lives through advancements in exoskeleton technology.

VentureBeat

The new AI infrastructure reality: Bring compute to data, not data to compute

- AI transformations across industries rely on efficient data storage infrastructure for performance.

- PEAK:AIO, Solidigm, and the MONAI project are redefining medical AI data infrastructure to enhance diagnostics.

- MONAI supports on-premises deployment for hospitals, requiring fast, scalable storage solutions.

- PEAK:AIO's software-defined storage layer paired with Solidigm's SSDs accelerates AI tasks.

- AI enterprises pivot towards specialized hardware architectures for efficient data processing and management.

Medium

Reclaiming the Algorithm: How to Train AI in Your Own Language

- Losing a language to AI is not inevitable, though it is convenient for system builders; communities can reclaim the algorithm by supplying it with data in their own language.

- Most AI models are trained on internet text, so if your language is underrepresented online, the model won't understand it well.

- Creating data intentionally in your language online by writing, publishing, and uploading content is crucial to teaching AI.

- Community-led language digitization projects provide structured language data for training language models.

- Open-source language models like Mistral, LLaMA, Falcon, and BLOOM can be fine-tuned locally to create AI that reflects specific language and values.

- Language preservation and empowerment must come from the people themselves, as big tech companies may not prioritize low-resource languages.

- Governments, schools, and cultural institutions should fund AI language modeling to preserve national identity in the digital age.

- AI should be seen as something to shape rather than just use, with every interaction serving as a teaching moment.

- Community data governance is crucial to prevent exploitation of language data and ensure collective rights to datasets.

- The goal is to train machines on our terms and prevent language erasure through passive inaction.

- The future should involve AI that understands diverse voices and speaks in various languages, preserving cultural identity.

Siliconangle

196

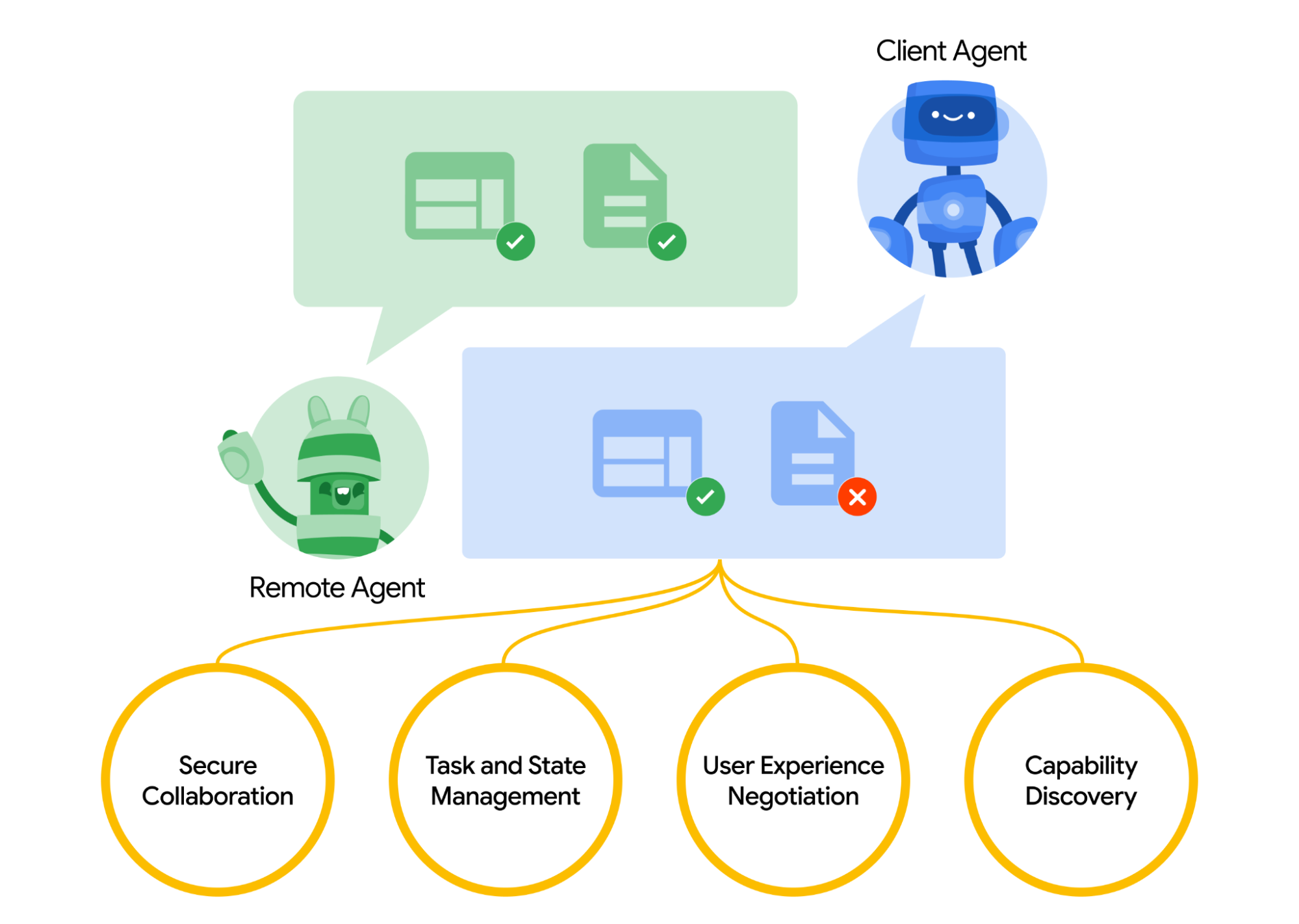

Image Credit: Siliconangle

Google donates the Agent2Agent Protocol to the Linux Foundation

- Google has donated the Agent2Agent (A2A) Protocol, a technology for AI agent interactions, to the Linux Foundation.

- The donation was announced at the Open Source Summit North America conference in Denver.

- Google aims to accelerate adoption of the A2A protocol for open collaboration and development.

- The A2A Protocol facilitates interactions between AI agents to automate tasks.

- Developers no longer need to write custom code as A2A provides prepackaged features for data movement.

- A2A can assist agents in processing prompts, collaborating on complex tasks, and sharing data in JSON format.

- The protocol enables sharing of text and multimodal data efficiently and securely.

- A2A uses the JSON-RPC 2.0 protocol to exchange JSON payloads between agents.

- Google has provided three modes for sharing files and instructions between agents using A2A.

- A2A complements the Model Context Protocol for interactions between agents and other applications.

- Accompanying software development kits and tooling are also donated to the Linux Foundation.

- Companies like Amazon Web Services, Cisco, Microsoft, and others support the A2A project.

- A2A's move to the Linux Foundation aims for long-term neutrality and collaboration in agent-powered productivity.
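
The bullets above mention that A2A builds on JSON-RPC 2.0. As a rough illustration of what that envelope looks like, here is a minimal sketch of building a request and reading a response in the shape defined by the JSON-RPC 2.0 specification. The method name `example/sendMessage` and the payload fields are hypothetical placeholders chosen for this sketch; they are not taken from the A2A specification.

```python
import json
from itertools import count

_ids = count(1)  # simple request-id generator


def make_request(method, params):
    """Build a JSON-RPC 2.0 request object and return it as a JSON string."""
    return json.dumps({
        "jsonrpc": "2.0",   # version marker required by the spec
        "id": next(_ids),   # lets the caller match a response to this request
        "method": method,
        "params": params,
    })


def parse_response(raw):
    """Return the result of a JSON-RPC 2.0 response, or raise if it carries an error."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "error" in msg:
        err = msg["error"]
        raise RuntimeError(f"RPC error {err['code']}: {err['message']}")
    return msg["result"]


# Hypothetical method name and payload, for illustration only.
request = make_request("example/sendMessage", {"text": "Summarize the report"})
reply = '{"jsonrpc": "2.0", "id": 1, "result": {"status": "accepted"}}'
print(request)
print(parse_response(reply))
```

A real agent would send the request over a transport such as HTTP and would follow the method names and message schema published with the protocol.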

Nordicapis

10 Tools for Securing MCP Servers

- Model Context Protocol (MCP) links AI-driven tools with organizational resources, necessitating secure MCP servers. Here are ten tools for securing MCP servers:

- 1. Salt Security MCP Server provides context-aware analysis and threat detection.

- 2. MCPSafetyScanner offers role-based testing and audit logging.

- 3. CyberMCP integrates with IDEs and offers natural language querying.

- 4. Invariant Labs MCP-Scan detects MCP-specific threats like tool poisoning.

- 5. Kong AI Gateway enables natural language API queries and behavior profiling.

- 6. MasterMCP provides a Python toolkit for MCP security threat emulation.

- 7. MCP Security Scan is a lightweight, open-source scanner.

- 8. MCP for Security offers a suite of MCP-compatible functions for cybersecurity professionals.

- 9. Docker MCP Toolkit provides Docker-native integration and OAuth support.

- 10. Slowmist MCP Security Checklist offers risk-based assessment tools.

Sdtimes

Google’s Agent2Agent protocol finds new home at the Linux Foundation

- Google donated its Agent2Agent (A2A) protocol to the Linux Foundation at the Open Source Summit North America.

- The A2A protocol offers a standard way for connecting agents to each other and complements Anthropic’s Model Context Protocol (MCP).

- A2A protocol empowers developers to build agents capable of connecting with any other agent built using the protocol.

- Initially, there were over 50 partners contributing to the project, including Atlassian, Cohere, Datadog, Deloitte, Elastic, Oracle, and Salesforce.

- Now, there are over 100 technology partners involved in the A2A protocol project.

- Joining the Linux Foundation will provide the project with vendor neutrality, inclusive contributions, and focus on extensibility, security, and real-world usability.

- The Agent2Agent protocol aims to establish an open standard for communication, enabling interoperable AI agents across platforms and systems.

- Rao Surapaneni, VP and GM of Business Applications Platform at Google Cloud, highlights the collaboration with the Linux Foundation for innovative AI capabilities under an open-governance framework.

Amazon

Use Graph Machine Learning to detect fraud with Amazon Neptune Analytics and GraphStorm

- Businesses and consumers face significant losses to fraud, with reports of $12.5 billion lost to fraud in 2024, showing a 25% increase year over year.

- Fraud networks operate coordinated schemes that are challenging for companies to detect and stop.

- Amazon Neptune Analytics and GraphStorm are utilized to develop a fraud analysis pipeline with AWS services.

- Graph machine learning offers advantages in capturing complex relationships crucial for fraud detection.

- GraphStorm enables the use of Graph Neural Networks (GNNs) for learning from large-scale graphs.

- Steps involve exporting data from Neptune Analytics, training graph ML models on SageMaker AI, and enriching graph data back into Neptune Analytics.

- Prerequisites include an AWS account, S3 bucket, required IAM roles, SageMaker execution role, and Amazon SageMaker Studio domain.

- The article provides detailed steps for setting up environment, creating a Neptune Analytics graph, training models with GraphStorm, and conducting fraud analysis.

- The workflow includes data preparation, training GraphStorm models, deploying SageMaker pipelines, enriching graphs, and analyzing high-risk transactions.

- Advanced analytics include detecting community structures, ranking communities by risk scores, and using node embeddings to find similar high-risk transactions.

- The post encourages further integrations with Neptune Database for online transactional graph queries and highlights workflow extensions.
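
One of the analytics steps above uses node embeddings to find similar high-risk transactions. The sketch below illustrates that idea with plain cosine similarity over toy vectors. The transaction IDs and embedding values are invented for illustration, and the plain-Python search stands in for whatever similarity search the actual pipeline uses; in practice the vectors would come from the trained GraphStorm model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query_id, embeddings, top_k=2):
    """Rank every other node by similarity to the query node's embedding."""
    query = embeddings[query_id]
    scored = [
        (other_id, cosine_similarity(query, vec))
        for other_id, vec in embeddings.items()
        if other_id != query_id
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy embeddings (hypothetical values); "txn-a" plays the known high-risk transaction.
embeddings = {
    "txn-a": [0.9, 0.1, 0.0],
    "txn-b": [0.8, 0.2, 0.1],
    "txn-c": [0.0, 0.1, 0.9],
}
for txn_id, score in most_similar("txn-a", embeddings):
    print(txn_id, round(score, 3))
```

Transactions whose embeddings point in nearly the same direction as a known fraudulent one rank first, which makes them candidates for manual review.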

Arstechnica

393

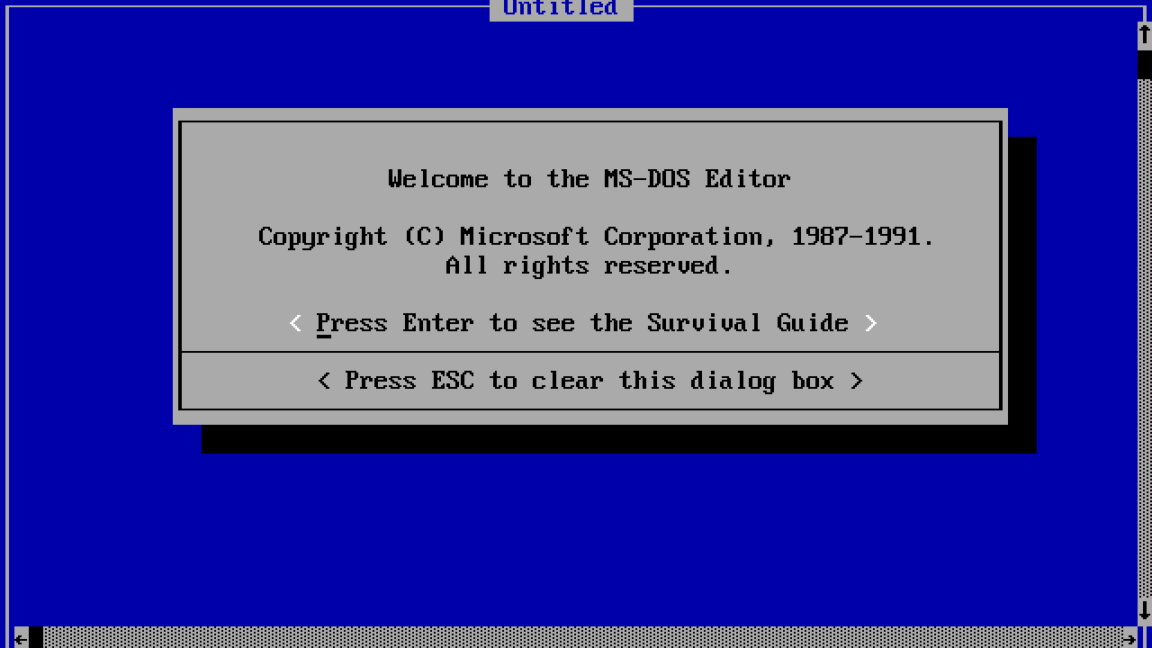

Image Credit: Arstechnica

Microsoft surprises MS-DOS fans with remake of ancient text editor that works on Linux

- Microsoft released a modern remake of its classic MS-DOS Editor, called 'Edit,' built with Rust and compatible with Windows, macOS, and Linux.

- Users are delighted to run Microsoft's text editor on Linux after 30 years and describe the experience as nostalgic.

- The original MS-DOS Editor introduced a full-screen interface, mouse support, and navigable pull-down menus, setting a new standard in text editing.

- Microsoft addresses the need for a default CLI text editor in 64-bit Windows by creating 'Edit,' a lightweight tool with modern features.

- Developers have shown mixed-to-positive reception towards Microsoft's new open source 'Edit' tool, praising its cross-platform usability.

- The new 'Edit' editor offers Unicode support, regular expressions, and can handle gigabyte-sized files, a significant upgrade from the original MS-DOS Editor.

- Users can download 'Edit' on GitHub or through an unofficial snap package for Linux, emphasizing its broad accessibility.

- The lightweight and efficient nature of 'Edit' highlights a newfound appreciation for fast and simple tools in software development.

- Despite 34 years of tech evolution, Microsoft's 1991 design philosophy with MS-DOS Editor still resonates in 2025, signifying the enduring principles of text editing.

AWS Blogs

AWS Weekly Roundup: re:Inforce re:Cap, Valkey GLIDE 2.0, Avro and Protobuf or MCP Servers on Lambda, and more (June 23, 2025)

- Last week's AWS re:Inforce conference highlighted new security innovations and updates, including IAM Access Analyzer enhancements, MFA enforcement for root users, and AWS Security Hub improvements.

- Amazon Verified Permissions team released an open-source package for Express.js, simplifying authorization implementation for web application APIs.

- AWS Lambda now natively supports Avro and Protobuf formatted Kafka events, easing schema validation and event processing.

- Amazon S3 Express One Zone introduces atomic renaming of objects with a single API call for efficient data management.

- Valkey GLIDE 2.0 has launched with enhanced support for Go, OpenTelemetry, and pipeline batching for high-performance workloads.

- Upcoming AWS events include GenAI Lofts for showcasing cloud computing and AI expertise, as well as AWS Summits in various regions.

Automatetheplanet

WinAppDriver to Appium Migration Guide

- WinAppDriver, Microsoft's UI automation tool for Windows desktop apps, is no longer actively maintained.

- Appium, an open-source automation framework, offers a powerful alternative for Windows automation.

- Migrating from WinAppDriver to Appium provides better extensibility, active development, and cross-platform capabilities.

- The migration steps differ across JavaScript, Python, Java, Ruby, and .NET.

Infoq

Spring News Roundup: Spring Vault Milestone, Point Releases and End of OSS Support

- The Spring ecosystem saw significant activity during the week of June 16, 2025, with milestones and point releases across various projects.

- Spring Vault 4.0 released its first milestone, while Spring Boot, Security, Authorization Server, Session, and others had point releases.

- Several Spring projects, along with Spring Framework, will end OSS support on June 30, 2025.

- Spring Boot versions 3.5.1, 3.4.7, and 3.3.13 brought bug fixes, documentation improvements, and new features.

- Spring Security 6.5.1, 6.4.7, and 6.3.10 introduced bug fixes and new features like migration guides.

- Spring Authorization Server, Spring Session, Spring Integration, Spring Modulith, Spring REST Docs, Spring AMQP, and others had updates with bug fixes and enhancements.

- Spring Web Services 4.0.15 released with dependency upgrades and bug resolutions, addressing thread safety issues.

- The first milestone release of Spring Vault 4.0 aligned with Spring Framework 7.0 and introduced new features for better null safety and HTTP implementations.

- Multiple Spring projects will cease OSS support on June 30, 2025, with enterprise support ending in 2026.

- Numerous details on the releases and updates can be found in the respective release notes.

- The article provides a comprehensive overview of the Spring ecosystem updates and upcoming end of OSS support dates.

Marktechpost

Google Researchers Release Magenta RealTime: An Open-Weight Model for Real-Time AI Music Generation

- Google's Magenta team has introduced Magenta RealTime (Magenta RT), an open-weight, real-time music generation model that supports interactivity and dynamic style prompts.

- Magenta RT allows real-time music generation with control over style prompts, leveraging Transformer-based language models and training on a dataset of instrumental stock music.

- The model enables streaming synthesis, temporal conditioning, and multimodal style control through text or audio prompts, facilitating real-time genre morphing and instrument blending.

- Magenta RT achieves a generation speed of 1.25 seconds per 2 seconds of audio, suitable for real-time usage with optimizations for minimal latency on free-tier TPUs.

- Applications of Magenta RT include live performances, creative prototyping, educational tools, and interactive installations, with upcoming support for on-device inference and personal fine-tuning.

- Magenta RT is open source and self-hostable, setting it apart from related models, emphasizing interactive generation and dynamic user control.

- The model represents a significant advancement in real-time generative audio, balancing scale, speed, and accessibility while fostering community contribution.
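
The speed figure quoted above (1.25 seconds of compute per 2 seconds of audio) can be turned into a real-time factor with simple arithmetic. The function name below is our own, used only for this sketch.

```python
def real_time_factor(audio_seconds, compute_seconds):
    """Seconds of audio produced per second of compute; above 1.0 keeps up with playback."""
    return audio_seconds / compute_seconds


rtf = real_time_factor(audio_seconds=2.0, compute_seconds=1.25)
headroom = 2.0 - 1.25  # spare seconds for every 2 seconds of audio generated
print(f"real-time factor: {rtf:.2f}, headroom: {headroom:.2f} s")
```

A factor of 1.6 means generation runs 1.6 times faster than playback, leaving 0.75 seconds of slack for every 2 seconds of audio.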

Marktechpost

DeepSeek Researchers Open-Sourced a Personal Project named ‘nano-vLLM’: A Lightweight vLLM Implementation Built from Scratch

- DeepSeek Researchers released 'nano-vLLM', a lightweight vLLM implementation built from scratch in Python.

- 'nano-vLLM' prioritizes simplicity, speed, and transparency for users interested in efficient language model inference.

- The project boasts a concise, readable codebase of around 1,200 lines while maintaining inference speed on par with the original vLLM engine.

- Key features of 'nano-vLLM' include fast offline inference, clean and readable codebase, and optimization strategies like prefix caching and tensor parallelism.

- 'nano-vLLM' architecture involves components such as Tokenizer, Model Wrapper, KV Cache Management, and Sampling Engine for efficient processing.

- Use cases for 'nano-vLLM' include research applications, inference-level optimizations, teaching deep learning infrastructure, and deployment on low-resource systems.

- 'nano-vLLM' lacks dynamic batching and real-time token-by-token generation, and its minimalistic approach limits support for multiple concurrent users.

- Despite its limitations, 'nano-vLLM' stands out as a tool for understanding LLM inference and building custom variants with support for key optimizations.
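
Prefix caching, one of the optimizations listed above, reuses work already done for a prompt prefix that has been seen before. The toy sketch below shows only the bookkeeping side of that idea: tokens are grouped into fixed-size blocks, and each block gets a hash key that depends on every token before it. This is not nano-vLLM's code; the class name, block size, and placeholder cache values are assumptions made for illustration, and no real key/value tensors are involved.

```python
import hashlib

BLOCK_SIZE = 4  # tokens per cache block (illustrative value)


def block_keys(token_ids):
    """Yield one key per full block; each key depends on all tokens up to that block."""
    running = hashlib.sha256()
    full = len(token_ids) - len(token_ids) % BLOCK_SIZE
    for start in range(0, full, BLOCK_SIZE):
        block = token_ids[start:start + BLOCK_SIZE]
        running.update(",".join(map(str, block)).encode() + b";")
        yield running.hexdigest()


class PrefixCache:
    """Toy cache mapping block keys to a stand-in for stored KV data."""

    def __init__(self):
        self.blocks = {}

    def lookup_and_fill(self, token_ids):
        """Return how many leading tokens were already cached, then cache the rest."""
        reused = 0
        still_matching = True
        for key in block_keys(token_ids):
            if still_matching and key in self.blocks:
                reused += BLOCK_SIZE
            else:
                still_matching = False
                self.blocks[key] = "kv-placeholder"
        return reused


cache = PrefixCache()
shared_prefix = [1, 2, 3, 4, 5, 6, 7, 8]  # e.g. a common system prompt
print(cache.lookup_and_fill(shared_prefix + [9, 10, 11, 12]))
print(cache.lookup_and_fill(shared_prefix + [13, 14, 15, 16]))
```

The first call finds nothing to reuse, while the second reuses the two blocks covering the shared prefix. Chaining the hash means a block only matches when everything before it matches too, which is what makes it a prefix cache rather than a per-block lookup.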

Medium

The 10 Open Source Tools I Can’t Live Without as a Developer (2025 Edition)

- A developer shares a curated list of open source tools on GitHub that they can't live without in the 2025 edition.

- The developer values open source tools for saving time, reducing clutter, and solving common development problems.

- Open source tools are preferred due to being built by users themselves, transparent, flexible, and respecting developer autonomy.

- The list includes powerful and practical software that enhances workflow with no fluff included.

- While closed tools may be visually appealing and faster, open source tools offer better control over the tools and software being used.

Medium

I Ditched ChatGPT for Uncensored AI — The Results Will Shock You.

- The author shares their journey of transitioning from using a censored AI to exploring uncensored AI.

- They describe feeling restricted by a controlled and sanitized AI experience.

- The author found a community of open-source AI enthusiasts advocating for free and uncensored AI.

- They discovered comprehensive resources listing powerful alternatives to mainstream AI models.

- Despite initial intimidation, the author found it surprisingly easy to set up and run their own uncensored AI.

- The uncensored AI provided depth, nuance, and tackled complex topics with conviction.

- It excelled in creative writing, producing raw and emotionally complex narratives.

- The transition symbolizes a shift in the author's perspective on technology and information.

- Self-hosting AI tools allows for reclaiming digital sovereignty and controlling personal data.

- The future of AI is envisioned as diverse and unrestricted voices on various machines.

- The author emphasizes the freedom experienced with uncensored AI and contrasts it with controlled AI.

- The transformative journey signifies a departure from being hemmed in by corporate-controlled AI.

- The shift towards uncensored AI is likened to the early Hackintosh community's defiance against ecosystem control.

- Empowerment through self-hosting encourages exploration of technology to its limits.

- The uncensored AI experience instills a desire to remain outside the confines of a restricted digital environment.
