Open Source News

Medium

Image Credit: Medium

Contributing to Open Source is Easier Than You Think: My Journey with Firebase Genkit

- Contributing to open source empowers individuals to shape the tools they use daily.

- Starting with labeled issues is crucial for newcomers interested in contributing.

- Downloading the project, installing dependencies, and running tests are standard procedures.

- Clear documentation and supportive communities are vital for attracting contributors.

- Making it easy to contribute is essential for successful open source projects.

- Fixing bugs involves analyzing code, debugging, and experimenting with solutions.

- A pull request is a proposal to merge your changes into the main codebase.

- The review process helps contributors learn from experienced developers.

- Engaging in a conversation and learning from others are key aspects of open source.

- Seeing your code merged into the project is a satisfying validation of your contribution.

- Open source offers a chance to expand skills, contribute meaningfully, and be part of a community.

Medium

Image Credit: Medium

Making Shakespeare Understandable: Modernizing Movie Subtitles with AI

- Translating Shakespeare's plays into modern English has been done by various authors, educators, and playwrights in the past.

- However, existing tools focus on reading or performing, neglecting the need for modern subtitles in movies.

- A new AI tool aims to bridge this gap by modernizing Shakespeare's dialogue specifically for movie subtitles.

- The tool preserves the original subtitle structure and timing, ensuring accuracy and continuity.

- It targets .srt files directly, allowing easy integration with video players and other platforms.

- The process involves chunking subtitles, formatting lines, strict system prompting, and reconstructing the .srt blocks.

- The modernized subtitles maintain synchronization and enhance viewers' understanding without altering the original intent.

- This innovation enables viewers to appreciate Shakespeare's works without struggling with archaic language barriers.
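The chunk, format, prompt, and reconstruct steps described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the tool's actual code. The names `parse_srt`, `format_chunk`, `apply_reply`, `rebuild_srt`, and `modernize_srt` are hypothetical. The `model` argument is a placeholder for the strictly prompted LLM call. The key idea is that only tagged dialogue lines go to the model, while indices and timecodes are copied back unchanged so timing is preserved.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SubtitleBlock:
    index: int        # sequence number from the .srt file, kept verbatim
    timing: str       # "HH:MM:SS,mmm --> HH:MM:SS,mmm", kept verbatim
    lines: List[str]  # dialogue lines: the only part that gets rewritten


def parse_srt(text: str) -> List[SubtitleBlock]:
    """Split .srt content into blocks separated by blank lines."""
    blocks = []
    for raw in text.replace("\r\n", "\n").strip().split("\n\n"):
        rows = raw.strip().split("\n")
        if len(rows) >= 2:  # skip malformed fragments
            blocks.append(SubtitleBlock(int(rows[0]), rows[1], rows[2:]))
    return blocks


def format_chunk(chunk: List[SubtitleBlock]) -> str:
    """Tag every dialogue line with 'block.line|' so the reply can be mapped back."""
    return "\n".join(
        f"{b.index}.{j}|{line}" for b in chunk for j, line in enumerate(b.lines)
    )


def apply_reply(chunk: List[SubtitleBlock], reply: str) -> None:
    """Write rewritten lines back by tag; missing or untagged lines keep the original."""
    by_tag = {}
    for row in reply.strip().split("\n"):
        tag, sep, text = row.partition("|")
        if sep:
            by_tag[tag.strip()] = text
    for b in chunk:
        b.lines = [by_tag.get(f"{b.index}.{j}", line) for j, line in enumerate(b.lines)]


def rebuild_srt(blocks: List[SubtitleBlock]) -> str:
    """Reassemble .srt text with the original numbering and timecodes."""
    return "\n\n".join("\n".join([str(b.index), b.timing, *b.lines]) for b in blocks) + "\n"


def modernize_srt(text: str, model: Callable[[str], str], chunk_size: int = 20) -> str:
    """Modernize dialogue chunk by chunk.

    `model` is a hypothetical stand-in for a strictly prompted LLM call that
    returns the same tagged lines with modernized wording.
    """
    blocks = parse_srt(text)
    for i in range(0, len(blocks), chunk_size):
        chunk = blocks[i:i + chunk_size]
        apply_reply(chunk, model(format_chunk(chunk)))
    return rebuild_srt(blocks)
```

The model only sees tagged dialogue. If a reply drops or garbles a line, the original text stays in place, and later subtitles are not pushed out of sync.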

Medium

Image Credit: Medium

Decoding the Oracle Cloud-Health Attack: Did legacy gateways expose medical records for millions?

- The article explores the potential connection between the breach of Oracle Cloud login servers and the theft of Protected Health Information (PHI) from Oracle Health/Cerner, impacting millions of patient records.

- Credentials for subdomains related to 'cerner.com' were compromised, allowing access to sensitive patient information across healthcare providers.

- The report suggests a 'chain attack' scenario in which attackers first penetrated Oracle Cloud servers and then used that access to breach Oracle Health/Cerner servers. It calls for further investigation.

- Pre-Authentication Remote Code Execution (RCE) on Oracle Cloud login servers facilitated data exfiltration by threat actors.

- The breach raises concerns about Oracle's legacy Fusion Middleware and the vulnerabilities it introduces into cloud-native platforms.

- The incident underscores how critical timely and transparent communication from organizations is when responding to sophisticated cyber threats.

- The article recommends considering a shift to open source EHR systems such as OpenEMR and Ottehr for better security and interoperability in healthcare settings.

- Ottehr, a newly introduced open source EHR, is highlighted as a promising option. It is built on modern technologies and designed to scale to millions of patient visits.

- The article underscores the need for healthcare providers to consider migrating to modern cloud-native architectures to mitigate risks associated with legacy systems.

- The interconnectedness of Oracle's legacy systems, combined with AI-driven cyber threats, poses significant challenges and calls for a shift toward more secure platforms.

- Overall, the incident serves as a wake-up call to improve cybersecurity within the healthcare industry and to promote open source solutions for better data protection.

Hackernoon

Image Credit: Hackernoon

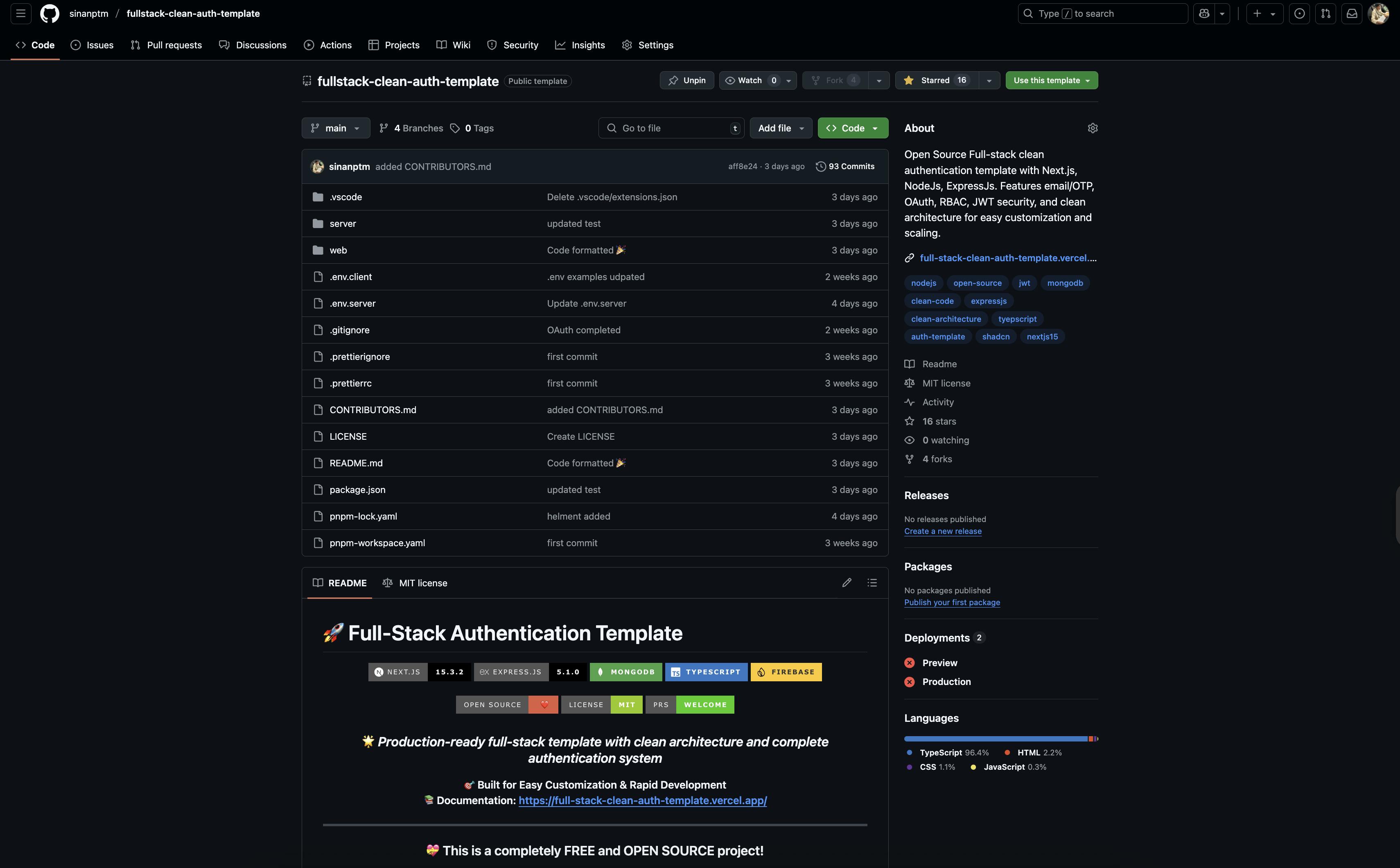

Authentication Sucks—So This Developer Built a Better Starting Point

- The developer shares frustrations with authentication and architecture challenges encountered in building projects, prompting the idea to create a reusable solution.

- Authentication was consistently a roadblock due to complexities like OAuth, JWTs, and session handling, leading to time-consuming implementations and messy code.

- Architectural concerns for scalability and clean code were also prominent, highlighting the need for a template that could evolve and be easily extended by other developers.

- The Full-Stack Clean Auth Template project was introduced as an open-source solution using Next.js, Express, Node.js, and TypeScript, emphasizing clean architecture principles and modularity.

- Key features include a clean architectural separation, modularity, TypeScript support, scalability, and comprehensive documentation for quick adoption.

- The template aims to streamline authentication and architecture decisions for product launches, enabling developers to focus on core features with a reliable foundation.

- Open-source and community-driven, the project welcomes contributions and extensions to support additional frameworks such as React Native or Angular.

- The developer actively maintains the project and encourages feedback, PRs, and suggestions from contributors to enhance the template's functionality and adaptability.

- The template serves as a foundational tool to save time and simplify project setups, presenting a solution for developers weary of repetitive foundational work.

Medium

Image Credit: Medium

Debugging React Native in 2025: A Software Engineer’s Survival Guide

- Software engineers often face distinctive debugging pain points, such as broken layouts, state that resets in production, and unresponsive buttons.

- The author, who has worked across various tech stacks, describes debugging React Native apps as particularly grueling yet satisfying.

- The article is a reflection on tools that helped the author navigate debugging challenges in React Native apps.

- It emphasizes moving from guessing solutions to actually understanding and resolving issues, even those not reflected in logs.

Medium

Image Credit: Medium

How I made a programming language at 14; The Story of Glowscript

- At 14 years old, the author created a programming language called Glowscript within a console named Tardigrade.

- The author, diagnosed with ASD at age 5, faced bullying but remained confident and focused on achieving greater things.

- The author began with a project named gVars, then added a built-in scripting language and created Tardigrade as a console for data manipulation.

- Glowscript, a simple and intuitive scripting language, was developed by the author as part of the Tardigrade project.

- Glowscript is designed to be user-friendly. It has no indentation requirements, is easy to program in, and invites fun experimentation.

- The author's dream project would focus on increasing productivity rather than on unlimited power or money.

- The author advises readers to work hard and keep improving, and points to their GitHub repository for further exploration.

Medium

Image Credit: Medium

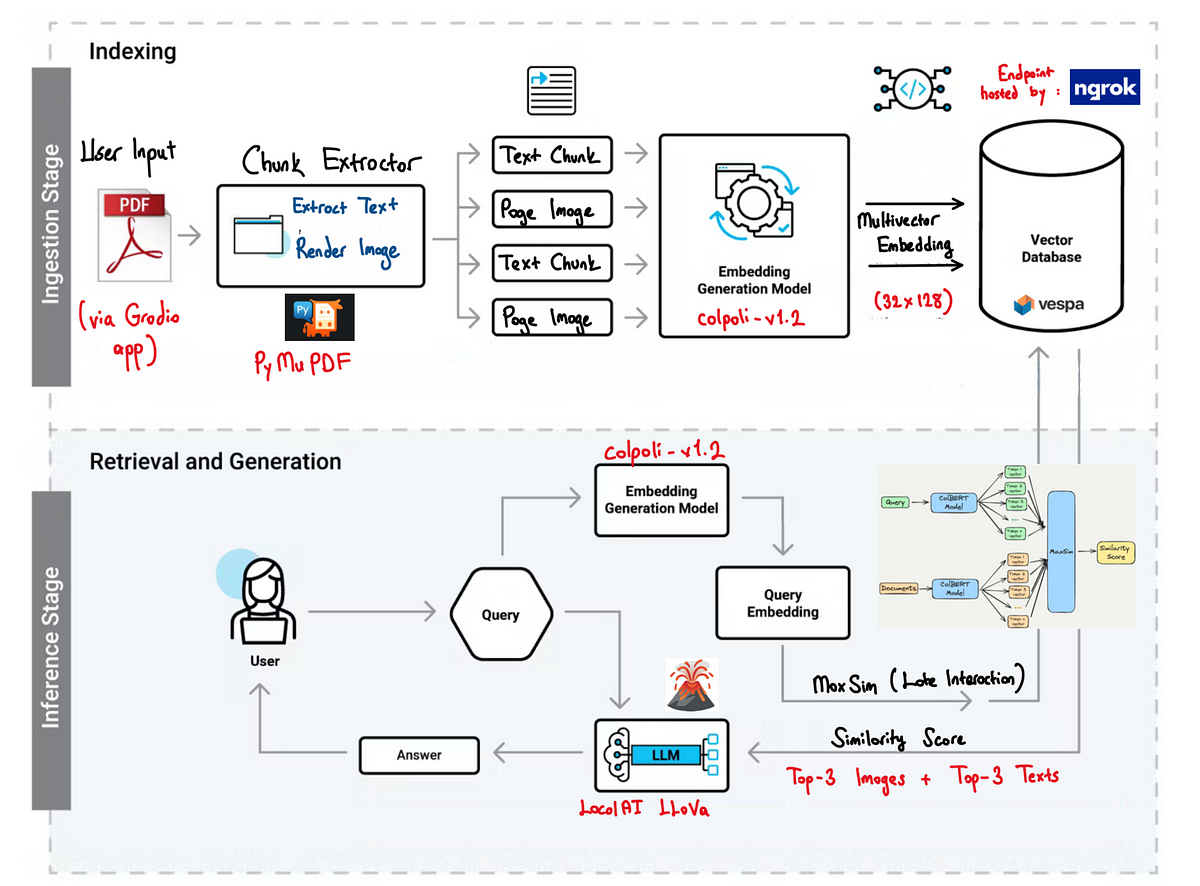

Reimagining Multimodal Retrieval with ColPali: A New Paradigm in RAG Pipelines

- ColPali is a new vision-language model that embeds and retrieves multimodal content in a shared latent space, offering a more advanced RAG pipeline design.

- Traditional retrieval systems often struggle with multimodal content, leading to noise and misalignment when incorporating images.

- ColPali is a dual-encoder model that embeds both text and image inputs into the same vector space, enabling modality-agnostic retrieval.

- The system uses MaxSim similarity for more accurate matching between queries and stored vectors, allowing for semantic relevance extraction regardless of modality.

- An interactive Gradio interface showcases the system, providing users with quick and relevant results from uploaded PDFs based on natural language queries.

- The approach reduces cognitive load by presenting users with instant, relevant information from both text and images in documents.

- LLaVA, a local vision-language model, is used to generate meaningful answers offline, ensuring responses are based strictly on retrieved evidence.

- By leveraging joint embedding space, multivector storage, and local generation, ColPali offers a modality-agnostic and privacy-respecting retrieval system.

- The article provides source code for the pipeline on GitHub for those interested in implementing similar multimodal retrieval solutions.
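MaxSim, the similarity used for matching above, takes only a few lines to write. For every query token vector, find its highest dot product against a document's stored vectors, then sum those maxima. Because documents keep multiple vectors, a small region of a page (a chart label, a table cell) can match a query token strongly. The sketch below is plain Python with toy 2-D vectors. Real ColPali embeddings are much higher-dimensional and would normally be scored with a tensor library. `maxsim` and `rank` are illustrative names, not ColPali's API.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim(query_vecs: List[Vector], doc_vecs: List[Vector]) -> float:
    """Late-interaction score: for each query vector, take its best match among
    the document's vectors, then add the best matches up.
    Assumes doc_vecs is non-empty."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


def rank(query_vecs: List[Vector], corpus: Dict[str, List[Vector]]) -> List[str]:
    """Return document ids ordered from most to least relevant."""
    return sorted(corpus, key=lambda doc_id: maxsim(query_vecs, corpus[doc_id]), reverse=True)


if __name__ == "__main__":
    query = [[1.0, 0.0], [0.0, 1.0]]  # two toy query-token vectors
    corpus = {
        "page_text": [[0.9, 0.1], [0.2, 0.1]],
        "page_chart": [[0.1, 0.8], [0.7, 0.6]],
    }
    print(rank(query, corpus))
```

In this toy corpus, `page_text` matches the first query token very well but not the second. `page_chart` matches both reasonably well, so it ranks first. Summing per-token maxima rewards documents that cover every part of the query.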

Bitcoinmagazine

The Trolls Are Coming: Defending Bitcoin Mining from Patent Trolls

- Malikie Innovations Ltd., a patent troll, has filed lawsuits against Bitcoin mining firms, alleging infringement of elliptic curve cryptography (ECC) patents acquired from BlackBerry.

- The lawsuits seek damages and injunctions, which could force miners to license core Bitcoin functions.

- Defensive strategies include challenging patent validity, Inter Partes Review (IPR), declaratory judgments, and motions to dismiss under the Alice test for patent eligibility.

- IPR is cost-effective and fast, with a high success rate at invalidating patents, offering a potent defense against trolls.

- Community responses involve crowdsourced prior art searches, Bitcoin Legal Defense Fund, and industry alliances like COPA.

- A win for Malikie could strain miners financially, invite further lawsuits, and force the Bitcoin community to adopt safeguards against future claims.

- The fight aims to demonstrate Bitcoin's core technologies as products of public research and collaborative development.

- The article provides insights into legal defenses, industry support, and community efforts to combat patent trolls targeting Bitcoin mining.

- The clash between Malikie Innovations and Bitcoin miners underscores the ongoing battle between open innovation and legacy IP rights.

- Overall, the community's unity and proactive measures are crucial in protecting Bitcoin's technological advancements from patent trolls.

- The industry's response to trolls can not only safeguard current operations but also foster innovation and growth in the Bitcoin ecosystem.

Hackernoon

Image Credit: Hackernoon

Supercharge Your ETL Pipeline with SeaTunnel’s Lock-Free CDC

- Change Data Capture (CDC) tracks row-level changes and synchronizes data downstream in real time.

- Apache SeaTunnel CDC supports two synchronization methods: Snapshot Reading and Incremental Tracking.

- SeaTunnel's Snapshot Sync is lock-free. It splits table data into chunks (splits) that are processed in parallel.

- Snapshot splits in SeaTunnel contain metadata for routing and processing.

- SeaTunnel's Incremental Synchronization captures real-time changes after the snapshot phase.

- SeaTunnel ensures exactly-once processing during both snapshot and incremental sync phases.

- During snapshot reading, SeaTunnel caches data and reconciles changes between low and high watermarks.

- Before incremental sync, SeaTunnel validates snapshot splits and corrects inter-split data.

- SeaTunnel employs the Chandy-Lamport algorithm for fault tolerance and checkpointing in distributed environments.

- Markers in SeaTunnel CDC allow all nodes to store their state for recovery and pause-resume capabilities.
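The lock-free snapshot described above follows a watermark pattern:

1. Record the change-log position (the low watermark).
2. Read one split without locking the table.
3. Record the position again (the high watermark).
4. Replay only the change events that landed in between, so the split reflects its state at the high watermark.

The Python sketch below models primary-key range splitting and that reconciliation step with in-memory data. It is a simplified illustration of the idea, not SeaTunnel's implementation. `split_key_range`, `ChangeEvent`, and `reconcile_chunk` are hypothetical names, and events are assumed to carry full row images.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


def split_key_range(min_key: int, max_key: int, chunk_size: int) -> List[Tuple[int, int]]:
    """Divide an integer primary-key range into half-open [start, end) splits."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    splits = []
    start = min_key
    while start <= max_key:
        end = min(start + chunk_size, max_key + 1)
        splits.append((start, end))
        start = end
    return splits


@dataclass
class ChangeEvent:
    position: int        # offset in the change log (e.g. a binlog position)
    key: int             # primary key of the affected row
    row: Optional[dict]  # full new row image; None means the row was deleted


def reconcile_chunk(
    snapshot_rows: Dict[int, dict],
    events: List[ChangeEvent],
    split: Tuple[int, int],
    low_watermark: int,
    high_watermark: int,
) -> Dict[int, dict]:
    """Replay events in (low, high] that touch this split onto a copy of the snapshot.

    Because each event carries a full row image and events are applied in log
    order, rows the unlocked read may have half-observed end up at their
    high-watermark state.
    """
    start, end = split
    result = dict(snapshot_rows)  # leave the caller's buffer untouched
    for ev in sorted(events, key=lambda e: e.position):
        if not (low_watermark < ev.position <= high_watermark):
            continue  # already in the snapshot, or left for the incremental phase
        if not (start <= ev.key < end):
            continue  # belongs to a different split
        if ev.row is None:
            result.pop(ev.key, None)
        else:
            result[ev.key] = ev.row
    return result
```

Events after the high watermark are not dropped. In this simplified model, they are left for the incremental phase to apply, so each change is applied exactly once.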

Arstechnica

Image Credit: Arstechnica

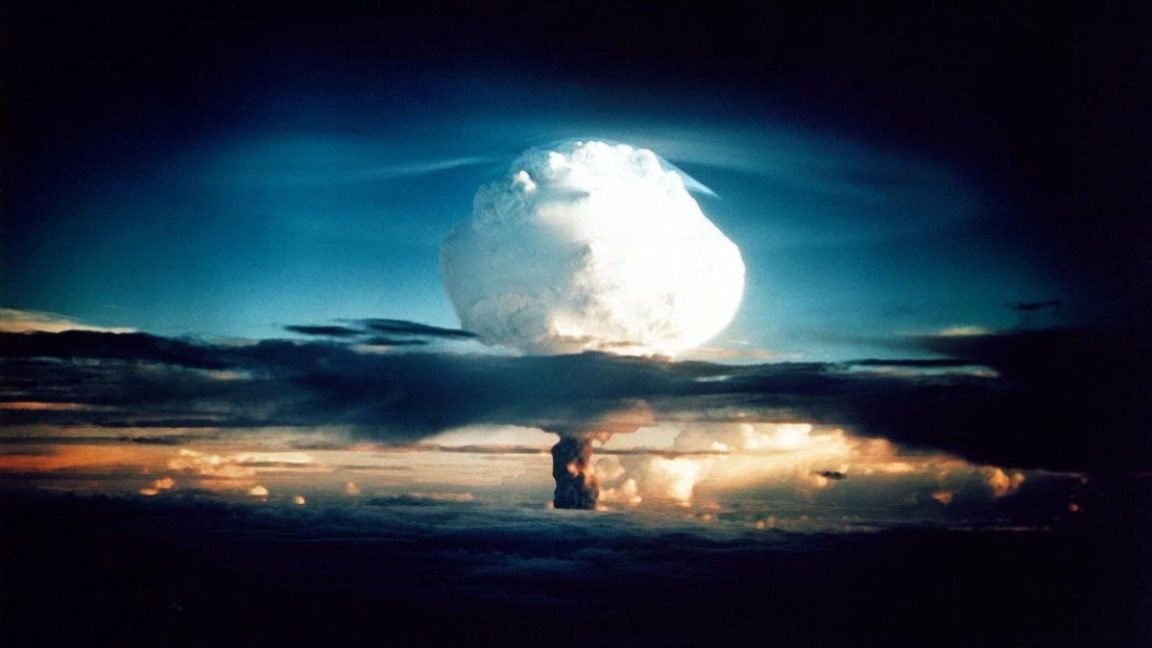

Scientists once hoarded pre-nuclear steel, and now we’re hoarding pre-AI content

- Former Cloudflare executive John Graham-Cumming has launched lowbackgroundsteel.ai, a website preserving pre-AI human-created content.

- The website aims to protect non-AI media from contamination by AI-generated content, similar to hoarding pre-nuclear steel during the Cold War.

- Generative AI models like ChatGPT have made it challenging to differentiate between human and AI-created content on the internet.

- The wordfreq project, tracking word frequency across languages, shut down due to the prevalence of AI-generated text online.

- Concerns exist about AI models training on their own outputs, which could degrade quality in a process known as 'model collapse.'

- Research suggests model collapse can be avoided by combining synthetic data with real data during training.

- Graham-Cumming aims to document human creativity from the pre-AI era through the website.

- The website points to major pre-AI content sources like Wikipedia dumps, Project Gutenberg, and GitHub's Arctic Code Vault.

- Lowbackgroundsteel.ai accepts submissions of pre-AI content sources to expand its archives.

- The project is a digital archaeology effort to distinguish between human-generated and hybrid human-AI cultures.

Siliconangle

Image Credit: Siliconangle

With $27M in funding, Fleet wants to bring more freedom to enterprise device management

- Fleet Inc. secures $27 million in Series B funding to enhance enterprise device management.

- The funding round was led by Ten Eleven Ventures with participation from CRV, Open Core Ventures, and other investors.

- Fleet offers an open-source device management platform for companies to secure their computing devices.

- The platform provides transparency, extensibility, and repeatability compared to proprietary software.

- Fleet aims to give organizations control over device management for compliance with regulations like PCI and FedRAMP.

- It offers both hosted and on-premises deployment options.

- Netflix, Stripe, Fastly, Uber, and Reddit are among the well-known enterprises using Fleet.

- Fleet's platform supports a range of devices, including iPhones, Android phones, laptops, and even data center servers.

- Customers praise Fleet for providing flexibility and avoiding forced cloud migrations.

- The company's rapid growth is attributed to its channel partners and open-source approach.

- Fleet's CEO emphasizes the company's commitment to open hosting options.

- A partner at Ten Eleven Ventures endorses Fleet for its comprehensive device management capabilities.

- Fleet plans to maintain its open approach and customizable hosting solutions.

- The company has been seeing significant adoption for its device management platform.

- Web search interest in flexible hosting options is growing.

- Fleet's platform is designed to cater to a wide range of operating systems and devices.

VentureBeat

Image Credit: VentureBeat

Qodo teams up with Google Cloud to provide devs with FREE AI code review tools directly within platform

- Qodo, an AI coding startup from Israel, collaborates with Google Cloud to enhance AI-generated software integrity.

- The need for oversight and quality assurance tools for AI-produced code is increasing.

- Qodo focuses on code quality and holistic engineering support, developing tools like Qodo-Embed-1-1.5B.

- Qodo's AI agents, like Qodo Cover, help with tasks such as test generation and code review.

- Qodo Merge, an AI-powered code review tool using Google's Gemini models, is offered at no cost to open-source software maintainers.

- Qodo's suite of tools is now integrated with Google Cloud, available through Vertex AI and Google Cloud Marketplace.

- QodoGen, Qodo's AI code assistant, delivers more accurate code suggestions with the Gemini 2.5 Pro model.

- Qodo offers modular solutions for enterprise users, allowing customization of workflows.

- The startup program with Google Cloud provides a 50% discount for early-stage companies using Qodo's tools for commercial products.

- Qodo aims to bridge the gap between speed and quality in software development in the era of AI-driven coding.

IEEE Spectrum

Image Credit: IEEE Spectrum

Airbnb’s Dying Software Gets a Second Life

- In late 2019, Vikram Koka came across Apache Airflow at a point when the project had stagnated. He set out to revitalize the software, which Airbnb originally built to manage data workflows.

- Airflow had become a Top-Level Project at the Apache Software Foundation but then stagnated, with flat downloads and a lack of updates.

- Koka was attracted to Airflow's 'configuration as code' principle and its ability to manage tasks through directed acyclic graphs coded in Python.

- After a year of work, Airflow 2.0 was released, a pivotal moment for the project's growth and its adoption by enterprises.

- Airflow 3.0 introduced a modular architecture and enhanced features, leading to a significant increase in downloads and community engagement.

- The project now has over 3,000 global developers contributing, with an average of 35 to 40 million downloads monthly.

- Future plans for Airflow include supporting tasks in various programming languages and enhancing capabilities for AI and machine learning workflows.

- The team strives to nurture a diverse community of contributors and users, emphasizing gradual involvement and constructive feedback.

- Airflow's role in machine learning operations and generative AI is on the rise, positioning it as a critical foundation for AI and ML workloads.
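Airflow's "configuration as code" principle means a workflow is a Python-defined directed acyclic graph of tasks. The scheduler runs each task only after its upstream tasks finish. The sketch below reproduces that ordering logic with the standard library's `graphlib` (Python 3.9+) rather than Airflow itself, so it runs without an Airflow installation. The pipeline and its task names are hypothetical. In a real Airflow DAG file, the same dependencies would be declared with Airflow operators and its `>>` syntax.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Callable, Dict, List, Set

# Hypothetical pipeline: each task name maps to the set of its upstream tasks.
DEPENDENCIES: Dict[str, Set[str]] = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "notify": {"load_warehouse"},
}


def run_dag(deps: Dict[str, Set[str]], run_task: Callable[[str], None]) -> List[str]:
    """Run every task after all of its upstream tasks; return the execution order."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the graph is not acyclic
    order: List[str] = []
    while sorter.is_active():
        # Tasks handed out together do not depend on each other, so a real
        # scheduler could run them in parallel; here they run one by one.
        for task in sorted(sorter.get_ready()):
            run_task(task)
            order.append(task)
            sorter.done(task)
    return order


if __name__ == "__main__":
    run_dag(DEPENDENCIES, lambda name: print(f"running {name}"))
    try:
        run_dag({"a": {"b"}, "b": {"a"}}, print)
    except CycleError:
        print("rejected: dependency cycle")
```

The acyclic requirement is what makes the execution order well defined. A cycle is rejected before any task runs.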

VentureBeat

MiniMax-M1 is a new open source model with 1 MILLION TOKEN context and new, hyper efficient reinforcement learning

- Chinese AI startup MiniMax has launched MiniMax-M1, a large language model with a 1-million-token input context window, released as open source under the Apache 2.0 license.

- MiniMax-M1 sets new standards in long-context reasoning, agentic tool use, and efficient compute performance.

- It distinguishes itself with a context window of 1 million input tokens, far larger than the 128,000-token window of OpenAI's GPT-4o.

- MiniMax-M1 is trained with a highly efficient reinforcement learning technique. MiniMax reports that at a generation length of 100,000 tokens it needs only about 25% of the FLOPs that DeepSeek R1 requires.

- The model comes in two variants, MiniMax-M1-40k and MiniMax-M1-80k, named for their maximum output (thinking) budgets of 40,000 and 80,000 tokens.

- MiniMax-M1's reinforcement learning training reportedly cost $534,700, far less than the training costs of models such as DeepSeek R1 and OpenAI's GPT-4.

- It achieves high accuracy on mathematics benchmarks and excels at coding and long-context tasks, outperforming competitors on complex problems.

- MiniMax-M1 addresses challenges for technical professionals by offering long-context capabilities, compute efficiency, and open access under Apache 2.0 license.

- The model supports structured function calling, comes packaged with a chatbot API, and can be deployed using the Transformers library.

- MiniMax-M1 presents a flexible option for organizations to experiment with advanced AI capabilities while managing costs and avoiding proprietary constraints.

VentureBeat

Image Credit: VentureBeat

Groq just made Hugging Face way faster — and it’s coming for AWS and Google

- Groq is challenging cloud providers like AWS and Google by supporting Alibaba’s Qwen3 32B model with a full 131,000-token context window and becoming an inference provider on Hugging Face.

- Groq's architecture handles large context windows efficiently. It delivers approximately 535 tokens per second, priced at $0.29 per million input tokens and $0.59 per million output tokens.

- The integration with Hugging Face opens Groq to a vast developer ecosystem, providing streamlined billing and access to popular models.

- Groq's global infrastructure currently serves more than 20 million tokens per second, and the company plans further international expansion.

- Competitors like AWS Bedrock and Google Vertex AI leverage massive cloud infrastructure, but Groq remains confident in its differentiated approach.

- Groq's competitive pricing aims to meet the growing demand for inference compute, despite concerns about long-term profitability.

- The global AI inference chip market is projected to reach $154.9 billion by 2030, driven by increasing AI application deployment.

- Groq's move offers both opportunity and risk for enterprise decision-makers, with potential cost reduction and performance benefits paired with supply chain risks.

- Groq's ability to handle full context windows could be valuable for enterprise applications that require in-depth analysis and reasoning.

- Groq's strategy combines specialized hardware and aggressive pricing to compete with tech giants, focusing on scalability and performance advantages.

- The success of Groq's approach hinges on maintaining performance while scaling globally, posing a challenge for many infrastructure startups.
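The per-token prices quoted above translate into per-request costs with simple arithmetic. The Python helper below uses the reported rates for Qwen3 32B on Groq and the approximate 535 tokens-per-second speed. Prices change over time, so treat the constants as placeholders and the function names as illustrative.

```python
INPUT_PRICE_PER_M = 0.29   # USD per 1M input tokens (as reported; may change)
OUTPUT_PRICE_PER_M = 0.59  # USD per 1M output tokens (as reported; may change)
TOKENS_PER_SECOND = 535    # approximate reported generation speed


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request at the per-million-token rates above."""
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def generation_seconds(output_tokens: int) -> float:
    """Rough time to generate the output, ignoring network and prompt processing."""
    return output_tokens / TOKENS_PER_SECOND


if __name__ == "__main__":
    # A request that fills the full 131,000-token window and returns 1,000 tokens.
    print(f"${request_cost(131_000, 1_000):.4f}, {generation_seconds(1_000):.1f} s")
```

At these rates, filling the entire 131,000-token context and generating 1,000 tokens costs about $0.0386 and takes roughly 1.9 seconds to generate. Input tokens dominate the bill for long-context workloads.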
