Big Data News

TechBullion

Building the Future with Data Engineering: Uma Uppin on Crafting Scalable Infrastructure, Tracking Business Metrics, and Embracing an AI-Driven World

- Uma Uppin delves into the evolving field of data engineering, exploring how it forms the backbone of data-driven organizations today.

- Uma provides a detailed look at the strategies that enable businesses to turn raw data into actionable insights.

- She defines the critical components of data infrastructure and data quality and explores why tracking user metrics such as acquisition, retention, and churn matters.

- Data engineering captures events when a new user onboards to a product, tracks that user's activity on the product, and builds pipelines that compute the metrics across various time windows.

- She advocates starting with a data infrastructure team and an analytics team, so that the analytics team can swiftly build pipelines to analyze different stages of the funnel.

- It then becomes essential to establish a data engineering function that builds foundational core dimensions and metrics.

- Observability is a vital element in building effective monitoring systems, allowing teams to maintain oversight and respond quickly to issues.

- Two main types of alerting and monitoring systems are identified.

- Organizations can build several key metrics to help them understand a product’s success.

- With AI rapidly advancing, a company’s success will depend on its ability to build and integrate generative AI applications.
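
To make the metric pipelines described above concrete, here is a minimal Python sketch that computes acquisition, retention, and churn over fixed time windows from raw events. The event shape `(user_id, event_type, day)`, the window size, and the metric definitions are assumptions chosen for illustration; this is not Uma Uppin's method or an industry-standard definition.

```python
from collections import defaultdict
from typing import Iterable

# Hypothetical event shape: (user_id, event_type, day_number).
# event_type is "onboard" when a new user signs up, "active" for later usage.
Event = tuple[str, str, int]


def user_metrics(events: Iterable[Event], window_days: int = 7) -> dict[int, dict[str, int]]:
    """Compute acquisition, retention, and churn for fixed-size time windows.

    Illustrative definitions (assumptions, not a standard):
      acquired: users who onboarded in the window
      retained: users active in both the previous window and this one
      churned:  users active in the previous window but not in this one
    """
    onboarded: dict[int, set[str]] = defaultdict(set)
    active: dict[int, set[str]] = defaultdict(set)
    for user_id, event_type, day in events:
        window = day // window_days
        if event_type == "onboard":
            onboarded[window].add(user_id)
        # Every event, including onboarding, counts as activity in its window.
        active[window].add(user_id)

    if not active:
        return {}
    report: dict[int, dict[str, int]] = {}
    for window in range(min(active), max(active) + 1):
        previous = active.get(window - 1, set())
        current = active.get(window, set())
        report[window] = {
            "acquired": len(onboarded.get(window, set())),
            "retained": len(current & previous),
            "churned": len(previous - current),
        }
    return report


events = [
    ("a", "onboard", 0), ("b", "onboard", 2),
    ("a", "active", 8), ("c", "onboard", 9),
    ("b", "active", 15), ("c", "active", 16),
]
for window, metrics in user_metrics(events).items():
    print(window, metrics)
```

A production pipeline would run the same logic as scheduled SQL or batch jobs over an event table; the in-memory version only shows how each metric falls out of per-window sets of active users.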

Precisely

Modern Data Architecture: Data Mesh and Data Fabric 101

- Data mesh and data fabric are two modern data architectures that serve to enable better data flow, faster decision-making, and more agile operations.

- Data mesh is a decentralized approach to data management, designed to shift creation and ownership of data products to domain-specific teams.

- Data fabric is a unified approach to data management, creating a consistent way to manage, access, and share data across distributed environments.

- Data mesh empowers subject matter experts and domain owners to curate, maintain, and share data products that impact their domain.

- Data fabric weaves together different data management tools, metadata, and automation to create a seamless architecture.

- Data mesh accelerates innovation and improves alignment between data and business objectives, while data fabric offers advanced analytics, faster decisions and improved compliance and security.

- Implementing either data mesh or data fabric requires a cultural shift toward valuing and using data, strong governance frameworks, investment in metadata management practices, and self-service tools.

- Modern architectures like data mesh and data fabric can help unlock the potential of your data.

- Although data mesh focuses on decentralization and data fabric takes a more unified approach, both converge on the same goal: empowering your organization to be agile, data-driven, and able to respond to market changes and make informed decisions in real time.

Siliconangle

Image Credit: Siliconangle

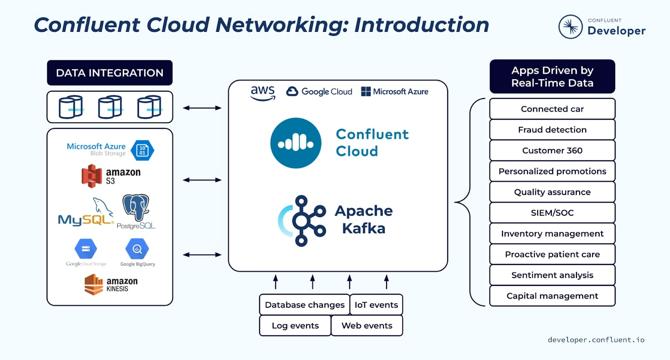

Data streaming leader Confluent posts strong Q3 results, boosts year-end forecast

- Data streaming software provider Confluent Inc. reported strong Q3 results, beating expectations on both revenue and earnings.

- Adjusted earnings per share for Q3 were 10 cents, beating analysts' expectations of 5 cents per share.

- Subscription revenue grew by 27% YoY to $239.9 million, and cloud service revenue increased by 42% YoY to $130 million.

- Confluent raised its full-year 2024 subscription revenue guidance and expects positive non-GAAP operating margin and free cash flow margin for 2024.
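
The growth rates above also imply the year-ago figures. This short Python sketch backs them out; because the reported percentages are rounded, the results (roughly $188.9 million in subscription revenue and $91.5 million in cloud service revenue) are approximations, not numbers Confluent reported.

```python
def prior_year_value(current: float, yoy_growth_pct: float) -> float:
    """Back out the year-ago value from a current value and its YoY growth rate."""
    return current / (1 + yoy_growth_pct / 100)


# Q3 figures from the summary above, in millions of US dollars.
subscription_prior = prior_year_value(239.9, 27)
cloud_prior = prior_year_value(130.0, 42)

print(f"Implied year-ago subscription revenue: ${subscription_prior:.1f}M")
print(f"Implied year-ago cloud service revenue: ${cloud_prior:.1f}M")
```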

Amazon

Streamline AI-driven analytics with governance: Integrating Tableau with Amazon DataZone

- Amazon DataZone users can now query data stored in the service from business analysis tools such as Tableau, thanks to integration with the Amazon Athena JDBC driver. The integration promises to streamline workflows through a JDBC connection, giving users a secure and intuitive connection process for their big data analytics needs.

- According to Ali Tore, Senior Vice President of Advanced Analytics at Salesforce, the collaboration represents a unique opportunity to empower businesses with data integration and collaboration capabilities. Tore says the partnership with Amazon makes it easier for organisations to access data held across different platforms, a major draw for business leaders looking to make informed decisions in real time.

- Tableau is one of dozens of partner analytics tools that now integrate directly with Amazon DataZone; others include DBeaver and Domino.

- Amazon DataZone is AWS's data management service for cataloguing and governing data. With the Tableau integration, it aims to simplify sharing data within a project, creating data products, and accessing data, with a view to building an environment that encourages data sharing, tailored solutions, and generative AI potential.

- To begin querying and visualising their subscribed Amazon DataZone data, users download the Athena JDBC driver for Tableau, copy the JDBC connection string from the Amazon DataZone portal, and follow the platform's detailed instructions to configure both tools.

- With single sign-on, Tableau users can build their own governed datasets by directly accessing their subscribed Amazon DataZone assets. They can easily query, visualise, and share data while remaining subject to the access controls that Amazon DataZone provides.

- Amazon DataZone has expanded its offerings, seeking to provide users with increased functionality in how they can access, analyze, and visualize their subscribed data. The integration is available now in all AWS commercial regions.

- These recent enhancements enable seamless integration between Amazon DataZone and Tableau. By leveraging Amazon DataZone's data governance capabilities, the two services help break down data silos while giving business analysts access to advanced analytical techniques tailored to their specific requirements. Organisations can work together to generate valuable business insights while maintaining security and control over distributed data.

- The Tableau integration fits Amazon's long-term focus on enabling customers to make informed decisions from data while maintaining security and control over data generated across multiple platforms.

- The integration is expected to help companies break down silos, facilitate collaboration, boost productivity, and make better-informed business decisions.

Amazon

Expanding data analysis and visualization options: Amazon DataZone now integrates with Tableau, Power BI, and more

- Amazon DataZone now integrates with popular BI and analytics tools such as Tableau, Power BI, Excel, SQL Workbench, and DBeaver, allowing data users to seamlessly query their subscribed data lake assets. The integration empowers data users to analyze governed data within Amazon DataZone using familiar tools, boosting productivity and flexibility.

- Amazon DataZone enables data users to locate and subscribe to data from multiple sources within a single project, and it integrates natively with AWS services such as Amazon Athena, Amazon Redshift, and Amazon SageMaker.

- With the launch of JDBC connectivity, Amazon DataZone expands its support for data users, allowing them to work in their preferred environments while keeping access secure and governed within Amazon DataZone.

- Amazon DataZone strengthens its commitment to empowering enterprise customers with secure, governed access to data across the tools and platforms they rely on. For example, Guardant Health uses Amazon DataZone to democratize data access across its organization.

- To get started, download and install the latest Athena JDBC driver for your tool of choice. After installation, copy the JDBC connection string from the Amazon DataZone portal into the JDBC connection configuration to establish a connection from your tool.

- Amazon DataZone continues to expand its offerings, providing you with more flexibility to access, analyze, and visualize your subscribed data. With support for the Athena JDBC driver, you can now use a wide range of popular BI and analytics tools.

- The integration supports secure and governed data access in Amazon DataZone projects on AWS, on premises, and from third-party sources.

- This new JDBC connectivity feature lets data flow seamlessly into these tools, supporting productivity across teams.

- This feature is supported in all AWS commercial regions where Amazon DataZone is currently available.

- The integration ensures that subscribed data remains secure and accessible to authorized users.

Siliconangle

Eversource, EY use process mining to cut backlogs and boost customer service

- Eversource Energy has used automation and process mining to reduce manual work and improve operations and customer service.

- Process backlogs were costing Eversource money, which prompted the company to begin its automation journey.

- Eversource partnered with Ernst & Young to implement a proof of concept and build foundational capabilities for automation.

- Through intelligent automation and process mining, Eversource gained granular insights, reduced burdens on employees, and optimized operations.

Amazon

Simplify data ingestion from Amazon S3 to Amazon Redshift using auto-copy

- Amazon Redshift is a cloud data warehouse that can analyze exabytes of data, and data ingestion is the process of getting data into it. To simplify loading data from Amazon S3, Amazon Redshift launched auto-copy support. By creating auto-copy jobs, you can set up continuous file ingestion rules that track your Amazon S3 paths and automatically load new files into Amazon Redshift without additional tools or custom solutions.

- The auto-copy feature uses S3 event integration to load data automatically from Amazon S3, and a job can be set up quickly with a single SQL statement from a JDBC/ODBC client.

- Auto-copy jobs ingest data automatically and incrementally from an Amazon S3 location, so users can load data without building a custom pipeline or using an external framework.

- Automatic ingestion is enabled by default on auto-copy jobs. Files already present at the S3 location when the job is created are not picked up by the job.

- Amazon Redshift auto-copy jobs keep track of loaded files to minimize data duplication, and they automatically handle errors caused by bad-quality data files.

- Customers can also load a single Amazon Redshift table from multiple data sources, maintaining a separate data pipeline for each source/target combination.

- To monitor and troubleshoot auto-copy jobs, Amazon Redshift provides a range of system tables that show summary details, exception details, error details, and the status and details of each file processed by an auto-copy job.

- Key considerations when using auto-copy: existing files in the Amazon S3 prefix are not loaded, and the MAXERROR parameter, manifest files, and key-based access control are not supported.

- GE Aerospace, which uses AWS analytics and Amazon Redshift to generate critical insights that drive important business decisions, noted that auto-copy simplifies its data pipelines, accelerates analytics solutions, and lets its teams spend more time adding value through data.

- Overall, the Amazon Redshift auto-copy feature offers a simple, effective way to ingest data from Amazon S3: users can begin loading data into Redshift with simple SQL commands and keep their warehouse up to date.
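
The file-tracking behavior described above (pre-existing files skipped, each new file loaded once) can be modeled in a few lines. This is a toy Python model under stated assumptions, not Redshift's implementation: the class `AutoCopyJobModel` and its `poll` method are hypothetical, real jobs are created and managed with SQL inside Redshift, and error handling for bad files is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class AutoCopyJobModel:
    """Toy model of auto-copy file tracking, not Redshift's implementation.

    Files already under the prefix when the job is created are treated as a
    baseline and never loaded; every later file is loaded at most once.
    """
    prefix: str
    keys_at_creation: set[str]
    baseline: set[str] = field(init=False)
    loaded: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        # Snapshot of pre-existing files under the prefix; these are skipped.
        self.baseline = {k for k in self.keys_at_creation if k.startswith(self.prefix)}

    def poll(self, current_keys: set[str]) -> list[str]:
        """Return the new files under the prefix that should be loaded now."""
        to_load = sorted(
            key for key in current_keys
            if key.startswith(self.prefix)
            and key not in self.baseline
            and key not in self.loaded
        )
        # Remember what was loaded so later polls skip it (no duplicates).
        self.loaded.update(to_load)
        return to_load


job = AutoCopyJobModel("sales/", {"sales/2024-01.csv"})
print(job.poll({"sales/2024-01.csv", "sales/2024-02.csv", "other/x.csv"}))
print(job.poll({"sales/2024-01.csv", "sales/2024-02.csv", "sales/2024-03.csv"}))
```

The first poll returns only the file that arrived after the job was created; the second returns only the newest file, because the earlier ones are either in the baseline or already loaded.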

Cloudera

#ClouderaLife Employee Spotlight: Julia Ostrowski

- Julia Ostrowski is the Director of Enterprise Entitlement at Cloudera, where she has worked since joining via Hortonworks in 2019.

- Her responsibilities include reviewing the entire entitlement business process.

- Julia is deeply involved in various philanthropic initiatives, both within Cloudera and in her own free time.

- Julia mentors up to four colleagues at a time under Cloudera’s mentorship program.

- She drives value during her day-to-day work and is always open to leveraging Cloudera's extensive giving and volunteering programs.

- Julia is also involved in Cloudera’s Teen Accelerator Program, an initiative organized by Cloudera’s volunteer group, Cloudera Cares.

- Cloudera’s Teen Accelerator Program offers students a six-week paid internship program at Cloudera and 1:1 employee mentorship.

- Julia is part of a group of Cloudera employees who help Second Harvest distribute food to people in need.

- She fosters dogs and cats for local animal rescues, and occasionally they find a permanent home with her.

- Julia appreciates the flexibility Cloudera gave her to explore a new position in IT, a role she truly enjoys.

SiliconCanals

Belgian startup Wobby secures €1.1M for its AI information platform for knowledge workers

- Antwerp-based startup Wobby has secured €1.1M in seed funding for its AI-powered information platform.

- The investment came from Belgian and international funds including Shaping Impact Group’s SI3 fund, V-Ventures, and imec.istart.

- Wobby's platform aims to make data-driven decisions accessible to knowledge workers by centralizing research and reporting tools.

- The funds will be used to expand into new European markets and initiate commercial activities in the United States.

Siliconangle

TigerEye’s new AI Analyst offers enhanced decision-making in sales and revenue operations

- TigerEye Labs Inc. has launched its conversational AI Analyst, a service designed to provide professionals with faster, smarter decision-making capabilities.

- The AI Analyst is time-aware and allows teams to ask complex questions about opportunities, forecasts, revenue, metrics, and segmentation.

- It combines large language models with business intelligence to deliver reliable insights and instant answers.

- The service helps teams make proactive decisions, optimize business functions, track changes, and forecast future performance.

Amazon

Improve OpenSearch Service cluster resiliency and performance with dedicated coordinator nodes

- Amazon OpenSearch Service has released dedicated coordinator nodes for its OpenSearch domains.

- Without dedicated coordinator nodes, the data nodes in Amazon OpenSearch Service also coordinate data-related requests.

- This leads to hotspots and resource scarcity, which can cause node failures.

- With dedicated coordinator nodes, request coordination and dashboard hosting are handled by the coordinator nodes, while request processing is left to the data nodes.

- This results in more resilient and scalable domains.

- Adding coordinator nodes leads to higher indexing and search throughput.

- Additionally, it results in more resilient clusters, as each node has a specific function.

- Dedicated coordinator nodes separate a cluster’s coordination capacity from the data storage capacity.

- With dedicated coordinator nodes, you can achieve up to a 90% reduction in the number of IP addresses reserved by the service in your VPC.

- AWS recommends setting the number of coordinator nodes to 10% of the number of data nodes.
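
Applying the 10% guideline above to a concrete cluster size is simple arithmetic, sketched here in Python. Rounding up and keeping at least one coordinator node are assumptions of this sketch, not documented rules; integer arithmetic is used so the ceiling is not affected by floating-point rounding.

```python
def recommended_coordinator_nodes(data_nodes: int, percent: int = 10) -> int:
    """Suggest a dedicated coordinator node count from a percentage guideline.

    Rounding up and a minimum of one coordinator are assumptions of this sketch.
    """
    if data_nodes < 1:
        raise ValueError("data_nodes must be at least 1")
    # Ceiling of data_nodes * percent / 100 using integer math only.
    return max(1, (data_nodes * percent + 99) // 100)


for n in (6, 20, 45):
    print(f"{n} data nodes -> {recommended_coordinator_nodes(n)} coordinator node(s)")
```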

Amazon

Control your AWS Glue Studio development interface with AWS Glue job mode API property

- AWS Glue Jobs API is a robust and versatile interface that allows data engineers and developers to programmatically manage and run ETL jobs in order to automate, schedule, and monitor large-scale data processing.

- You can use the AWS Glue Jobs API to create script, visual, or notebook jobs programmatically.

- The AWS Glue job mode property determines whether a job is a script, visual, or notebook job.

- AWS Glue users, whether extract, transform, and load (ETL) developers, data scientists, or data engineers, can choose the mode that best fits their preferences.

- The JobMode property has been introduced to improve your user interface experience.

- You can use the CreateJob API to create AWS Glue script, visual, or notebook jobs.

- You can use AWS CloudFormation to create different types of AWS Glue jobs by specifying the JobMode parameter with the AWS::Glue::Job resource.

- This post demonstrates how the AWS Glue Jobs API works with the newly introduced job mode property.

- The new property, named JobMode, describes the mode of an AWS Glue job.

- The new console experience helps users search for and discover jobs based on JobMode.
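
As a sketch of how the JobMode property fits into a CreateJob request, the Python below assembles the request parameters and validates the mode. The values `SCRIPT`, `VISUAL`, and `NOTEBOOK` follow the three modes described above; the job name, role ARN, script path, and Glue version are placeholder assumptions, and the function `build_create_job_request` is hypothetical.

```python
from typing import Any

# The three job modes described above, as CreateJob JobMode values.
VALID_JOB_MODES = {"SCRIPT", "VISUAL", "NOTEBOOK"}


def build_create_job_request(
    name: str, role_arn: str, script_location: str, job_mode: str
) -> dict[str, Any]:
    """Assemble keyword arguments for an AWS Glue CreateJob call (hypothetical helper)."""
    mode = job_mode.upper()
    if mode not in VALID_JOB_MODES:
        raise ValueError(f"Unsupported JobMode {job_mode!r}; expected one of {sorted(VALID_JOB_MODES)}")
    return {
        "Name": name,
        "Role": role_arn,
        # "glueetl" is the command name for Spark ETL jobs.
        "Command": {"Name": "glueetl", "ScriptLocation": script_location, "PythonVersion": "3"},
        "GlueVersion": "4.0",  # placeholder version choice
        "JobMode": mode,
    }


request = build_create_job_request(
    "demo-visual-job",
    "arn:aws:iam::123456789012:role/ExampleGlueRole",  # placeholder role
    "s3://example-bucket/scripts/demo.py",             # placeholder script path
    "visual",
)
print(request["JobMode"])
```

With boto3 installed and AWS credentials configured, the dictionary could be passed as `boto3.client("glue").create_job(**request)`; the JobMode parameter requires a boto3 release recent enough to include it.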

Amazon

How BMW streamlined data access using AWS Lake Formation fine-grained access control

- BMW has implemented AWS Lake Formation’s fine-grained access control (FGAC) in its Cloud Data Hub (CDH), saving up to 25% on compute and storage costs.

- BMW wanted to centralise data from its various business units and countries in the CDH to break down data silos and to improve decision-making across its global operations, but the initial set-up of CDH supported only coarse-grained access control.

- AWS Lake Formation is a service that centralises the data lake creation and management process and includes fine-grained access control, which enables granular control of access to data lake resources at the table, column, and row levels.

- The BMW CDH has been a success since BMW began building it in a strategic collaboration with Amazon Web Services (AWS) in 2020.

- BMW CDH is built on Amazon Simple Storage Service (Amazon S3) and allows users to discover datasets, manage data assets, and consume data for their use cases.

- BMW CDH follows a decentralized, multi-account architecture to foster agility, scalability, and accountability.

- BMW uses the AWS Glue Data Catalog as the CDH's technical metadata catalog and Amazon S3 to store data assets.

- The CDH uses the AWS Resource Access Manager (AWS RAM). With Lake Formation, BMW can control access to data assets at different granularities such as permissions at the table, column, or row level.

- BMW can save up to 25% on compute and storage costs by using AWS Lake Formation, which provides finer data access management within the Cloud Data Hub.

- Integrating Lake Formation has enabled BMW to reduce storage costs, cut the higher compute expenses previously incurred for data processing and drift detection, and avoid project delays caused by time-consuming provisioning processes and governance overhead.
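
To illustrate what table-, column-, and row-level access control means in practice, here is a toy Python model. Lake Formation enforces these permissions itself; this sketch does not use its API, and the `Grant` class, `read_with_grant` function, and sample vehicle table are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable

Row = dict[str, Any]


@dataclass(frozen=True)
class Grant:
    """Hypothetical permission: one table, allowed columns, and a row filter."""
    table: str
    columns: frozenset[str]
    row_filter: Callable[[Row], bool]


def read_with_grant(table: str, rows: list[Row], grant: Grant) -> list[Row]:
    """Apply table-, row-, and column-level checks to an in-memory table."""
    # Table level: no access at all unless the grant names this table.
    if grant.table != table:
        raise PermissionError(f"no grant on table {table!r}")
    return [
        # Column level: keep only the granted columns, in original order.
        {col: value for col, value in row.items() if col in grant.columns}
        for row in rows
        # Row level: drop rows the filter rejects.
        if grant.row_filter(row)
    ]


vehicles = [
    {"vin": "V1", "country": "DE", "mileage": 12000, "owner_email": "a@example.com"},
    {"vin": "V2", "country": "US", "mileage": 8000, "owner_email": "b@example.com"},
]
germany_analyst = Grant(
    table="vehicles",
    columns=frozenset({"vin", "country", "mileage"}),
    row_filter=lambda row: row["country"] == "DE",
)
print(read_with_grant("vehicles", vehicles, germany_analyst))
```

Under coarse-grained access, the only options would be granting or denying the whole table; fine-grained grants let one copy of the table serve users who may each see a different slice, which is the kind of duplication the cost savings above come from avoiding.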

Siliconangle

Dell PowerStore and Intel drive storage cost efficiency and sustainability for AHEAD clients

- AHEAD Inc. partners with Dell Technologies Inc. to provide cost-efficient storage solutions to its clients.

- Dell PowerStore, which uses Intel Xeon processors, offers low-latency performance and applies data compression techniques to improve efficiency.

- Dell backs PowerStore with a five-to-one data reduction guarantee, ensuring predictable cost efficiencies for customers.

- The focus on enhancing the PowerStore platform has also reduced its power consumption, making it a more sustainable storage solution.
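
A five-to-one data reduction ratio means five units of logical data occupy one unit of physical capacity. This short Python sketch does that arithmetic; the capacities are made-up example values, and it assumes the full 5:1 ratio applies to the workload.

```python
def physical_tb_needed(logical_tb: float, reduction_ratio: float = 5.0) -> float:
    """Physical capacity needed to hold logical_tb at a given data reduction ratio."""
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")
    return logical_tb / reduction_ratio


def effective_tb(physical_tb: float, reduction_ratio: float = 5.0) -> float:
    """Logical data that physical_tb can hold at a given data reduction ratio."""
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")
    return physical_tb * reduction_ratio


print(physical_tb_needed(100))  # 100 TB of logical data at 5:1
print(effective_tb(50))         # 50 TB of physical capacity at 5:1
```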

Siliconangle

Dremio throws its support to Polaris data catalog and expands deployment options for Iceberg lakehouse

- Dremio Corp. has thrown its support behind Snowflake Inc.'s Polaris data catalog, becoming a third-party endorser of the system, which is based on the open-source Apache Iceberg table format.

- The endorsement is significant because Snowflake and Databricks are competing to become the open-source Iceberg platform of choice for artificial intelligence development.

- Dremio says that over time it plans to adopt Polaris as its main catalog and integrate advanced features not available to users of the Unity Catalog managed service.

- Dremio has also made its data catalog available for on-premises, cloud, and hybrid cloud deployment, and says it is the only lakehouse provider to support all three options.

- Both Snowflake and Databricks are committed to supporting the Iceberg format, and both companies intend to eventually offer their catalogs freely as open source.

- However, write capabilities, column- and row-level access control, and automated table management will require Dremio's commercial catalog offering, which is based on the open-source Project Nessie.

- Dremio's catalog also enables centralized data governance with role-based access control and supports constructs such as branching, version control, virtual development environments, and time travel.

- Dremio intends to merge its catalog with Polaris and add read/write capabilities to it, while retiring its Project Nessie offering.

- "Dremio differentiates itself by supporting hybrid environments," said Kevin Petrie, VP of research at BARC GmbH.

- "Do you want the open-source catalog backed by one or two vendors or by the community? We believe customers will go with one that's community-backed," said Read Maloney, Dremio's CMO.
