Data Science News

Analyticsindiamag

OpenAI to Lease 4.5 GW Compute from Oracle for Stargate

- OpenAI is planning to lease 4.5 gigawatts of computing power from Oracle for its Stargate project to meet the AI firm's substantial computational needs.

- The lease represents an enormous amount of power: one gigawatt is roughly the output of a nuclear reactor and can serve about 750,000 homes, so 4.5 gigawatts is enough for roughly 3.4 million American homes.

- Oracle's recently disclosed cloud agreement, projected to generate $30 billion in annual revenue from fiscal 2028 onwards, includes the Stargate arrangement with OpenAI.

- Oracle plans to expand its data centre infrastructure across the US to support OpenAI's increasing demand, with potential locations in Texas, Michigan, Wisconsin, Wyoming, New Mexico, Georgia, Ohio, and Pennsylvania.

Analyticsindiamag

TCS to Push AI Transformation for 60 Singapore SMEs, Startups

- Tata Consultancy Services (TCS) is launching an innovation centre in Singapore to drive AI initiatives for 60 SMEs and startups.

- The facility at Changi Business Park will facilitate collaboration with experts to develop, prototype, and scale AI solutions.

- TCS plans to recruit 50 recent graduates from local universities to support the centre's functions in data science and cybersecurity.

- The centre aims to provide technology consulting expertise to SMEs and serve as a platform for fintech companies and start-ups to showcase products.

Analyticsindiamag

The AI Foundry by Tredence: Building Real-World Intelligence with GenAI

- The AI Foundry by Tredence is hosting an event on July 26 in Bengaluru focused on agentic systems and generative AI.

- The event will include sessions on designing and orchestrating multi-agent AI architectures for autonomous task execution and decision-making at scale.

- Highlights of the agenda include talks by Tredence co-founders, a design thinking workshop, and a tour of the Tredence AI Experience Centre.

- The event targets developers, AI engineers, and product teams working on GenAI, agent-based solutions, or orchestration platforms.

Analyticsindiamag

‘Every Single AI Researcher Making $10-100 Million is a Dota 2 Player’

- Top AI researchers making millions credit their success to playing Dota 2.

- Dota 2 serves as a talent filter for elite AI researchers globally.

- Achieving success in Dota 2 requires strategic planning and split-second decision-making.

Medium

Image Credit: Medium

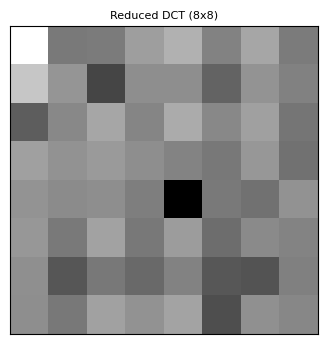

(Part II) A Cloud platform for Images: A Friendly Guide to Perceptual Hashing

- Explore the powerful Perceptual Hash, a revolutionary image hashing technology.

- Unlike traditional hashing algorithms, p-hash focuses on image structure and essence.

- It uses the Discrete Cosine Transform to create a unique and robust fingerprint.

- P-hash handles image variations elegantly, creating a canonical hash for all orientations.

- This technology powers many daily features, advancing towards conceptual hashing in AI.
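The DCT-based fingerprint described above can be sketched roughly as follows. This is a minimal illustration of the commonly described pHash recipe, not the article's implementation: it assumes the image has already been converted to a 32×32 grayscale grid, the function names (`low_freq_dct`, `phash`, `hamming`) and the synthetic test images are hypothetical, and it does not attempt the orientation handling mentioned in the summary.

```python
import math

def low_freq_dct(pixels, keep=8):
    """Return the keep x keep lowest-frequency 2D DCT-II coefficients.

    `pixels` is assumed to be a square grid (list of rows) of grayscale values.
    The coefficients are left unnormalized, which does not affect the hash.
    """
    n = len(pixels)
    # Precompute the cosine basis for the frequencies we keep.
    cos = [[math.cos(math.pi * (2 * i + 1) * u / (2 * n)) for i in range(n)]
           for u in range(keep)]
    return [[sum(pixels[x][y] * cos[u][x] * cos[v][y]
                 for x in range(n) for y in range(n))
             for v in range(keep)]
            for u in range(keep)]

def phash(pixels, keep=8):
    """Build a (keep * keep)-bit hash: one bit per low-frequency coefficient."""
    flat = [c for row in low_freq_dct(pixels, keep) for c in row]
    ac = flat[1:]                      # skip the DC term (overall brightness)
    median = sorted(ac)[len(ac) // 2]  # threshold taken from the AC terms only
    bits = 0
    for c in flat:
        bits = (bits << 1) | (1 if c > median else 0)
    return bits

def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

# Synthetic 32x32 "images" (hypothetical data, for illustration only).
size = 32
base = [[128 + 100 * math.sin(x / 5) * math.cos(y / 7) + 20 * math.sin(x * y / 40)
         for y in range(size)] for x in range(size)]
brighter = [[p + 5 for p in row] for row in base]    # uniform brightness shift
inverted = [[255 - p for p in row] for row in base]  # photographic negative

print("base vs brighter:", hamming(phash(base), phash(brighter)))
print("base vs inverted:", hamming(phash(base), phash(inverted)))
```

A small Hamming distance suggests two images share the same coarse structure. A uniform brightness shift only changes the DC term, so the hash is essentially unaffected, whereas the negative flips nearly every bit.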

Medium

Image Credit: Medium

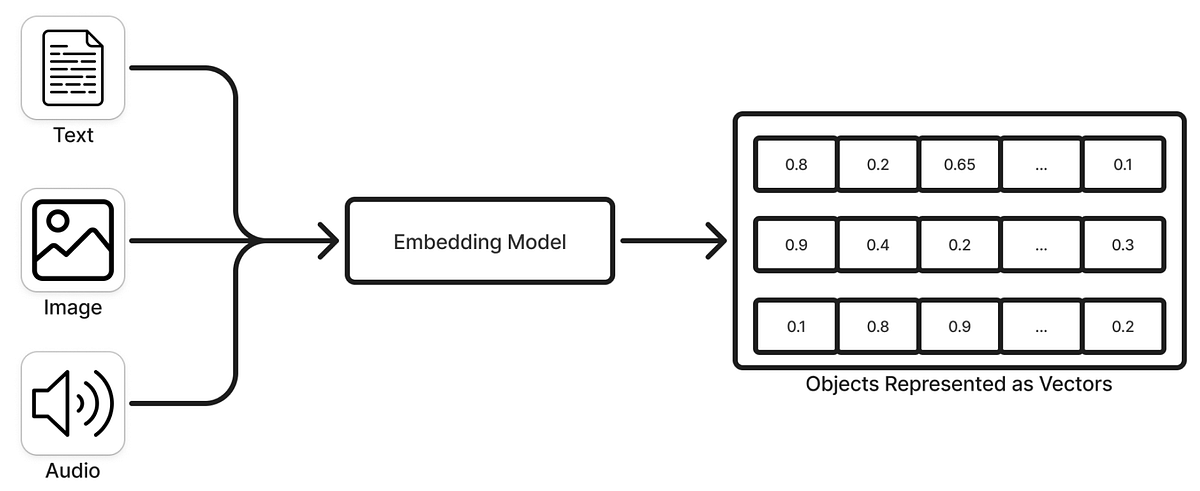

The Secret Maps Behind YouTube and Spotify: Understanding Vectors and Vector Databases (Part 1)

- YouTube uses vectors to analyze and recommend videos with similar content: machine learning models convert video data into numeric representations called embeddings.

- These vectors are stored in specialized systems known as vector databases designed to efficiently manage high-dimensional vectors at scale.

- Vector databases support functions like semantic similarity search, enabling AI applications to deliver smart recommendations and experiences without custom retrieval logic.

- Popular vector search tools, such as the Milvus database and the Faiss library, are commonly used for semantic search, recommendation engines, and image similarity tasks, showcasing their importance in AI applications.
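The core operation behind the similarity search described above can be sketched in a few lines. This is a brute-force illustration, not how Milvus or Faiss work internally; production systems typically rely on approximate nearest-neighbor indexes to scale. The item names and three-dimensional embeddings are made up for the example, and `cosine_similarity` and `top_k` are hypothetical helper names.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the names of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]

# Toy "vector store": hypothetical embeddings, real ones have hundreds of dimensions.
index = {
    "guitar_lesson": [0.9, 0.1, 0.0],
    "piano_tutorial": [0.8, 0.3, 0.1],
    "pasta_recipe": [0.0, 0.2, 0.9],
    "bread_baking": [0.1, 0.1, 0.8],
}

query = [0.85, 0.2, 0.05]  # embedding of a video the user just watched (assumed)
results = top_k(query, index, k=2)
print(results)
```

Because the query points in nearly the same direction as the two music vectors, those are the items returned, which is the behavior a recommendation feature depends on.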

VentureBeat

Dust hits $6M ARR helping enterprises build AI agents that actually do stuff instead of just talking

- Dust, an AI platform, grows annual revenue to $6 million.

- Shift in enterprise AI adoption from chatbots to advanced systems.

- Dust's AI agents automate business tasks like updating CRM records and creating GitHub issues.

- Utilizes Model Context Protocol for secure data access and specialized orchestration.

- Represents trend of AI-native startups leveraging advanced AI capabilities for practical implementation.

Analyticsindiamag

Generative AI Needs Its iTunes Moment for AI Copyright

- The ongoing debate regarding copyright enforcement and AI technologies has highlighted the need for specific regulations to address how AI uses copyrighted content.

- Rishi Agarwal, from TeamLease Regtech, suggests that generative AI should adopt a model similar to how the music industry monetized digital music through platforms like iTunes.

- He proposes a system where publishers can register their content with AI platforms, triggering microtransactions when the content is referenced by AI models, thereby compensating creators for their work.

- Agarwal emphasizes the importance of implementing fair monetization models to ensure all stakeholders receive a fair share of the revenue generated through AI-generated content.

VentureBeat

HOLY SMOKES! A new, 200% faster DeepSeek R1-0528 variant appears from German lab TNG Technology Consulting GmbH

- German lab TNG Technology Consulting GmbH has introduced DeepSeek-TNG R1T2 Chimera.

- The model delivers fast inference with shorter responses and reduced compute costs.

- R1T2 integrates three parent models, maintaining high reasoning while reducing inference cost.

- Built with the Assembly-of-Experts method, R1T2 inherits strengths from several pre-trained models.

Analyticsindiamag

Indian IT Set for Tepid Q1 as Global Risks Mount

- Indian IT faces challenges as AI reshapes the industry, impacting growth and predictability.

- Q1 FY26 expected to see muted growth due to global risks and US economic slowdown.

- Top IT firms project slower growth, with Tier-2 firms likely to outperform. Limited valuations expected.

Educba

Benefits of Big Data

- Big Data empowers organizations with smarter decisions, enhanced efficiency, and competitive edge.

- It enables cost reduction, personalized customer experiences, innovation, and competitive advantage.

- Big Data transforms healthcare, smart cities, supply chain management, HR practices, and more.

- The future of Big Data includes real-time analytics growth, automated pipelines, and ethical data use.

Analyticsindiamag

Accenture Dares to Reinvent, But Will the Gamble Pay Off?

- Accenture to integrate core services into Reinvention Services, led by Manish Sharma.

- Aims to strengthen position as AI leader, providing seamless client experiences worldwide.

- Experts view the move as bold, but emphasize the cultural and integration challenges involved.

- Success hinges on internal transformation, accountability, and innovation in AI era.

Analyticsindiamag

Zoho Opens First AI & Robotics R&D Centre in Kerala, Brings Tech Jobs to Rural Kollam

- Zoho Corporation has launched its first AI and robotics research and development centre in the rural village of Neduvathoor in Kerala's Kollam district.

- The new centre, located in a 3.5-acre IT park, currently employs 250 workers with plans for further expansion, emphasizing Zoho's goal of creating high-tech jobs in rural areas.

- Zoho has recruited 40 graduates from the region for training in AI, machine learning, robotics, and engineering to promote local talent development.

- The aim of the centre is to boost rural development by bringing advanced technology work to areas that are not traditionally part of India's IT growth, potentially inspiring similar initiatives by other companies.

Analyticsindiamag

How This GCC Powers EV Innovation Through Virtual Twins

- India's electric vehicle (EV) sector is projected to expand significantly in 2025, with domestic automakers launching new models, mainly in the premium segment.

- Despite a global slowdown in EV demand, India's EV sales have risen by 20%, with ambitious targets set by the government for 2030 to increase EV market share in various vehicle categories.

- Dassault Systèmes in India is leveraging virtual twin technology and AI-driven simulations to refine vehicle performance, advance autonomous driving, and simulate real-world scenarios for more efficient transportation.

- The 3DEXPERIENCE Lab in India supports startups and entrepreneurs in developing transformative solutions in healthcare, cleantech, and mobility by providing access to advanced simulation, design, and collaboration capabilities.

Analyticsindiamag

How This Company Powers EV Innovation Through Virtual Twins

- India is focusing on electric, autonomous, and connected vehicles as part of its net-zero carbon target by 2070.

- India's EV sector is expected to expand significantly by 2025, with new models being launched and ambitious targets set for EV adoption by 2030.

- Dassault Systèmes is using virtual twin technology and AI-driven simulations to enhance vehicle performance, autonomous driving, and traffic management.

- The company's 3DEXPERIENCE platform is aiding in the development of EV products, encouraging indigenous innovation and cross-industry collaboration.
