Databases

Amazon

Build an AI-powered text-to-SQL chatbot using Amazon Bedrock, Amazon MemoryDB, and Amazon RDS

- Text-to-SQL is a valuable approach leveraging large language models to automate SQL code generation for various data exploration tasks like analyzing sales data and customer feedback.

- The article discusses building an AI text-to-SQL chatbot using Amazon RDS for PostgreSQL and Amazon Bedrock, with Amazon MemoryDB for accelerated semantic caching.

- Amazon Bedrock, with foundation models from leading AI companies like AI21 Labs and Amazon, assists in generating embeddings and translating natural language prompts into SQL queries for data interaction.

- Utilizing semantic caching with Amazon MemoryDB enhances performance by reusing previously generated responses, reducing operational costs and improving scalability.

- Implementing parameterized SQL safeguards against SQL injection by keeping parameter values separate from the SQL syntax, so user input is never executed as code.

- The article highlights Table Augmented Generation (TAG) as a method to create searchable embeddings of database metadata, providing structural context for precise SQL responses aligned with data infrastructure.

- The solution architecture includes creating a PostgreSQL database on Amazon RDS, using Streamlit for the chat application, Amazon Bedrock for SQL query generation, and leveraging AWS Lambda for interactions.

- The step-by-step guide covers prerequisites, deploying the solution with CDK, loading data to the RDS, testing the text-to-SQL chatbot application, and cleaning up resources efficiently.

- By following best practices like caching, parameterized SQL, and table augmented generation, the solution showcases enhanced SQL query accuracy and performance in diverse scenarios.

- Authors Frank Dallezotte and Archana Srinivasan provide insights into leveraging AWS services for scalable solutions and optimizing AI and ML workloads with Amazon RDS and Amazon Bedrock.

- The demonstration shows the text-to-SQL application handling complex JOINs across multiple tables, highlighting its versatility and performance.
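The parameterized-SQL point can be illustrated with a minimal sketch. It uses Python's built-in `sqlite3` module so it runs without a database server. The article's solution targets PostgreSQL on Amazon RDS, where drivers such as psycopg use `%s` placeholders instead of `?`. The `orders` table and its rows are hypothetical.

```python
import sqlite3


def make_orders_db() -> sqlite3.Connection:
    """Create an in-memory database with a small, hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
    conn.executemany(
        "INSERT INTO orders (customer, total) VALUES (?, ?)",
        [("alice", 120.0), ("bob", 75.5), ("alice", 30.0)],
    )
    return conn


def orders_for_customer(conn: sqlite3.Connection, customer: str) -> list:
    # The SQL text is fixed; the user-supplied value travels separately as a
    # bound parameter, so it is never parsed as SQL syntax.
    sql = "SELECT id, total FROM orders WHERE customer = ? ORDER BY id"
    return conn.execute(sql, (customer,)).fetchall()


conn = make_orders_db()
print(orders_for_customer(conn, "alice"))         # [(1, 120.0), (3, 30.0)]
# A classic injection payload is treated as an ordinary, non-matching string.
print(orders_for_customer(conn, "x' OR '1'='1"))  # []
```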
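Semantic caching can be sketched as a lookup keyed by embedding similarity rather than exact text, so a paraphrased question can reuse previously generated SQL. In the article, Amazon Bedrock embeddings and Amazon MemoryDB vector search fill these roles. The sketch below substitutes a plain in-memory list, a linear cosine-similarity scan, toy three-dimensional vectors, and a hypothetical threshold of 0.9, so it illustrates the idea rather than the article's implementation.

```python
import math
from typing import Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Maps question embeddings to previously generated SQL (hypothetical sketch)."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold
        self._entries: list = []  # (embedding, sql) pairs

    def get(self, embedding: Sequence[float]) -> Optional[str]:
        """Return the SQL of the most similar entry at or above the threshold."""
        best_sql: Optional[str] = None
        best_score = self.threshold
        for cached, sql in self._entries:
            score = cosine_similarity(embedding, cached)
            if score >= best_score:
                best_sql, best_score = sql, score
        return best_sql

    def put(self, embedding: Sequence[float], sql: str) -> None:
        self._entries.append((list(embedding), sql))


cache = SemanticCache(threshold=0.9)
cache.put([1.0, 0.0, 0.0], "SELECT SUM(total) FROM orders")
print(cache.get([0.99, 0.05, 0.0]))  # close paraphrase: returns the cached SQL
print(cache.get([0.0, 1.0, 0.0]))    # unrelated question: None, so call the model
```

On a miss, the application would generate SQL with the model and then `put` the new embedding and query so later paraphrases can hit the cache.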

Dbi-Services

SQLDay 2025 – Wrocław – Sessions

- The SQLDay conference in Wrocław began with a series of sessions covering cloud, DevOps, Microsoft Fabric, AI, and more.

- Morning Kick-Off: The day opened with a presentation from the sponsors, acknowledging their support for the event.

- Key Sessions: Sessions included discussions on Composable AI, migrating SQL Server databases to Microsoft Azure, Fabric monitoring, DevOps in legacy teams, productivity solutions, and Azure SQL Managed Instance features.

- Insights: The sessions provided insights on integrating AI into enterprise architecture, best practices for database migration, monitoring tools for Microsoft Fabric, challenges of introducing DevOps in legacy systems, productivity solutions using AI, and updates on Azure SQL Managed Instance features.

Dbi-Services

SQLDay 2025 – Wrocław – Workshops

- SQLDay 2025 in Wrocław featured pre-conference workshops on various Microsoft Data Platform topics.

- Workshop sessions included topics such as Advanced DAX, Execution Plans in Depth, Becoming an Azure SQL DBA, Enterprise Databots, Analytics Engineering with dbt, and more.

- Sessions catered to different professionals, ranging from experienced Power BI users to SQL Server professionals and those transitioning to cloud environments.

- SQLDay workshops offered valuable insights into Azure, Power BI, SQL Server, and data engineering, providing attendees with practical knowledge and hands-on experiences.

Dbi-Services

223

Image Credit: Dbi-Services

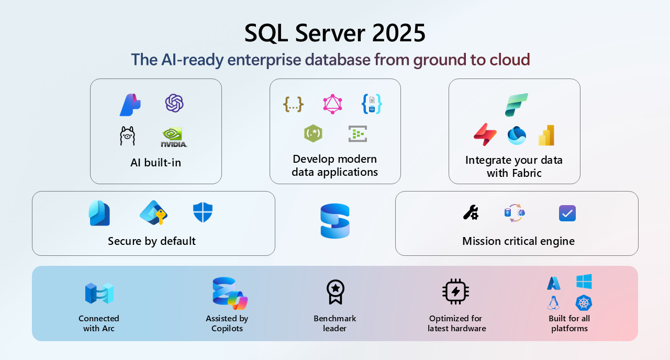

SQL Server 2025 Public Preview and SSMS 21 now available

- SQL Server 2025 public preview and SSMS 21 are now available for download.

- Key changes in SQL Server 2025 include a native vector data type, vector indexes, optimized locking, and REST API enhancements.

- SSMS 21, based on Visual Studio 2022, offers built-in Copilot integration and a dark theme.

- The author encourages readers to install SSMS 21 and provide feedback, and plans further blog posts showcasing the new features of SQL Server 2025.

Dev

Spark Augmented Reality (AR) Filter Engagement Metrics

- The author completed a SQL challenge on interviewmaster.ai that involved analyzing engagement metrics for branded AR filters.

- Query 1: Retrieved AR filters with user interactions in July 2024 and sorted by total interaction count.

- Query 2: Obtained total interactions per AR filter in August 2024, filtered for filters with over 1000 interactions.

- Query 3: Identified top 3 AR filters with the most interactions in September 2024.
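Queries 2 and 3 follow a common aggregate-then-filter pattern. The following is a minimal sketch of both, assuming a hypothetical schema (`ar_filters`, `filter_engagements`) and made-up sample rows rather than the challenge's actual tables. It uses Python's `sqlite3` so it is self-contained.

```python
import sqlite3

SCHEMA = """
CREATE TABLE ar_filters (filter_id INTEGER PRIMARY KEY, filter_name TEXT NOT NULL);
CREATE TABLE filter_engagements (
    filter_id INTEGER NOT NULL REFERENCES ar_filters(filter_id),
    interaction_date TEXT NOT NULL,  -- date-only ISO-8601 string, e.g. '2024-08-15'
    interaction_count INTEGER NOT NULL
);
"""

# Query 2: total interactions per filter in August 2024, keeping totals above 1000.
AUGUST_OVER_1000 = """
SELECT f.filter_name, SUM(e.interaction_count) AS total
FROM filter_engagements AS e
JOIN ar_filters AS f ON f.filter_id = e.filter_id
WHERE e.interaction_date BETWEEN '2024-08-01' AND '2024-08-31'
GROUP BY f.filter_id, f.filter_name
HAVING SUM(e.interaction_count) > 1000
ORDER BY total DESC
"""

# Query 3: the three filters with the most interactions in September 2024.
TOP3_SEPTEMBER = """
SELECT f.filter_name, SUM(e.interaction_count) AS total
FROM filter_engagements AS e
JOIN ar_filters AS f ON f.filter_id = e.filter_id
WHERE e.interaction_date BETWEEN '2024-09-01' AND '2024-09-30'
GROUP BY f.filter_id, f.filter_name
ORDER BY total DESC
LIMIT 3
"""


def run_demo():
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO ar_filters VALUES (?, ?)",
                     [(1, "Sparkle"), (2, "Retro"), (3, "Neon"), (4, "Pixel")])
    conn.executemany(
        "INSERT INTO filter_engagements VALUES (?, ?, ?)",
        [
            (1, "2024-08-03", 800), (1, "2024-08-20", 400), (2, "2024-08-10", 900),
            (1, "2024-09-01", 50), (2, "2024-09-05", 300),
            (3, "2024-09-12", 500), (4, "2024-09-30", 100),
        ],
    )
    return (conn.execute(AUGUST_OVER_1000).fetchall(),
            conn.execute(TOP3_SEPTEMBER).fetchall())


august, september = run_demo()
print(august)     # [('Sparkle', 1200)]
print(september)  # [('Neon', 500), ('Retro', 300), ('Pixel', 100)]
```

`HAVING` filters after aggregation, which is why the 1000-interaction threshold cannot go in the `WHERE` clause.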

Dbi-Services

PostgreSQL 17-18 major upgrade – blue-green migration with minimal downtime

- PostgreSQL 18 Beta 1 introduces features such as improved read I/O and new options for pg_createsubscriber and pg_upgrade, which make major upgrades smoother through a blue-green migration based on logical replication.

- pg_createsubscriber automates setting up logical replication by converting a physical standby server into a logical replica without copying initial table data, simplifying the process, especially for large databases.

- PostgreSQL 18 Beta 1 adds the --all flag to pg_createsubscriber for creating logical replicas for all databases in an instance with a single command, streamlining replication setup in multi-database environments.

- PostgreSQL 18 also adds a --swap option to pg_upgrade for faster upgrades, and extends pg_stat_subscription_stats to report logical replication write conflicts, improving monitoring.

- The article outlines a step-by-step blue-green migration process starting with setting up the blue (primary) environment with PostgreSQL 17, configuring replication settings, creating a physical replica (green environment), and transitioning to logical replication.

- The guide covers creating a publication for all tables, using pg_createsubscriber for logical replication setup, upgrading the green environment to PostgreSQL 18 using pg_upgrade with the --swap method, and ensuring successful logical replication post-upgrade.

- Cutover involves stopping new writes on the primary, confirming that the subscriber has caught up to the latest LSN, dropping the subscription, and running the failover commands that switch application traffic to the upgraded instance.

- The article concludes with advice on using blue-green migrations for major version jumps, automating the process with tools such as Ansible playbooks, involving development teams in post-upgrade testing, and accounting for the limitations of logical replication.
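The cutover step hinges on confirming that the subscriber has caught up. The following is a minimal sketch, assuming the LSNs have already been fetched as text, for example from `pg_current_wal_lsn()` on the publisher after writes stop and from `latest_end_lsn` in `pg_stat_subscription` on the subscriber. An LSN such as `16/B374D848` is a 64-bit position written as two hexadecimal halves, so it must be compared numerically, not as a string.

```python
def parse_lsn(lsn: str) -> int:
    """Convert PostgreSQL's 'XXXXXXXX/YYYYYYYY' LSN text form into a 64-bit integer."""
    high, low = lsn.strip().split("/")
    return (int(high, 16) << 32) | int(low, 16)


def subscriber_caught_up(publisher_lsn: str, subscriber_lsn: str) -> bool:
    """True when the subscriber has reached at least the publisher's final LSN."""
    return parse_lsn(subscriber_lsn) >= parse_lsn(publisher_lsn)


# Plain string comparison would get this wrong: "0/9" sorts after "0/10" as text,
# but 0x9 is smaller than 0x10.
print(subscriber_caught_up("0/10", "0/9"))                 # False
print(subscriber_caught_up("16/B374D848", "16/B374D9A0"))  # True
```

Inside PostgreSQL, the `pg_lsn` type already supports comparison operators, so the same check can also be written directly in SQL.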

Dev

Rediscovering ACID – The Foundation of Reliable Databases

- Engineers are rediscovering the importance of ACID properties in databases like PostgreSQL and MySQL.

- ACID stands for Atomicity, Consistency, Isolation, and Durability, ensuring reliable transactions.

- Atomicity guarantees that transactions complete entirely or not at all, exemplified through money transfers.

- Consistency enforces rules like constraints and foreign keys, Isolation prevents transaction interference, and Durability ensures data persists post-commit.
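The money-transfer illustration of atomicity can be reproduced with Python's built-in `sqlite3` module. In this sketch, which uses a hypothetical `accounts` table, the credit is applied before the debit and a `CHECK` constraint makes an overdraft fail. The example therefore shows an already-applied credit being rolled back.

```python
import sqlite3


def make_bank() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # The CHECK constraint forbids negative balances (a consistency rule).
    conn.execute(
        "CREATE TABLE accounts (id TEXT PRIMARY KEY, "
        "balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> bool:
    """Move `amount` from src to dst as one transaction; return False if rolled back."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes the block
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
    except sqlite3.IntegrityError:
        return False
    return True


def balances(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))


conn = make_bank()
print(transfer(conn, "alice", "bob", 30), balances(conn))   # True {'alice': 70, 'bob': 80}
print(transfer(conn, "alice", "bob", 500), balances(conn))  # False {'alice': 70, 'bob': 80}
```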

Dev

Python for Oracle on ARM Linux | Part 2 - "shell like" SQL scripts

- Python can be a powerful alternative to shell scripting for Oracle DBAs, providing better readability and cross-platform compatibility.

- By using Python's subprocess module, DBAs can automate tasks like running SQL commands, managing script execution, and spooling outputs.

- Key benefits include integration with Python capabilities like data parsing, reporting, and automation frameworks.

- The essential Python module needed for these tasks is subprocess, which allows running programs such as sqlplus.

- Key variables to set up include the connection string, the SQL file path, the output file path, and the command arguments.

- The subprocess module is called with shell=True to run the SQL*Plus command from the UNIX shell and execute the SQL scripts.

- An example SQL script provided in the article demonstrates data retrieval and JSON processing for weather data.

- A Python script 'py_ora.py' is created to run SQL files via SQL*Plus, redirecting outputs to a specified file for review.

- Running 'py_ora.py' in a miniconda environment successfully executes the SQL file and captures the expected outputs.

- The approach showcased emphasizes the use of Python over traditional shell scripting for Oracle DBA tasks, enhancing automation and maintainability.
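The approach can be sketched as a small helper. The article calls `subprocess` with `shell=True`. This sketch instead passes the command as a list and feeds the SQL file on standard input, which avoids shell quoting issues. The connection string is a hypothetical placeholder. `sqlplus -S` starts SQL*Plus in silent mode.

```python
import subprocess

# Hypothetical connection details; replace with your own.
CONNECT_STRING = "scott/tiger@//dbhost:1521/ORCLPDB1"
SQLPLUS_COMMAND = ["sqlplus", "-S", CONNECT_STRING]


def run_sql_file(command: list, sql_file: str, output_file: str) -> int:
    """Run `command` with sql_file on stdin, spooling stdout and stderr to output_file.

    Returns the command's exit code.
    """
    with open(sql_file, "rb") as script, open(output_file, "wb") as out:
        completed = subprocess.run(
            command, stdin=script, stdout=out, stderr=subprocess.STDOUT
        )
    return completed.returncode
```

For example, `run_sql_file(SQLPLUS_COMMAND, "weather.sql", "weather.log")` (hypothetical file names) would run the script through SQL*Plus and leave its output in the log file for review.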

Siliconangle

How Dell is riding the AI wave while serving its massive installed base

- Dell Technologies, led by founder Michael Dell, has successfully navigated tech waves by focusing on consistent growth and profitability.

- To capture the AI opportunity, Dell is focusing on AI factory systems, leveraging its global channel, and modernizing core infrastructure.

- Dell's revenue trajectory depicts key milestones, strategic pivots, and a focus on positioning itself in the AI wave.

- The company's valuation has surged due to its AI focus, capital return strategy, and stock performance, reflecting market confidence in its direction.

- Dell's revenue engine is divided between Client Solutions Group (55% revenue) and Infrastructure Solutions Group (45% revenue), emphasizing the importance of commercial PCs and enterprise gear.

- The company's margin dynamics have shifted towards AI-optimized servers and storage, with a focus on margin expansion through higher-value products.

- Dell's strategy includes focusing on storage refresh cycles, software partnerships, and leveraging their hardware scale in the AI era.

- The article discusses Dell's peer comparison in terms of revenue multiples and provides insights into its valuation and market positioning.

- Dell's strategy to tap into the AI-driven demand involves leveraging its installed base, offering customer choice, and aiming for end-to-end AI factory solutions.

- The article also touches upon Dell's AI spending focus, highlighting the growing importance of enterprise AI and Dell's approach to addressing this market.

- In conclusion, Dell faces execution risks in GPU supply, facility retrofits, software offerings, and competitive challenges, but aims to differentiate through end-to-end AI solutions and channel partnerships.

Dev

Understanding Database Indexes: A Library Analogy

- Indexes in databases are crucial for efficient data retrieval, similar to how a library catalog is essential for finding books quickly.

- In a database, without indexes, searching for specific data requires a full table scan, which is computationally expensive.

- By creating indexes on columns, databases can significantly speed up queries by skipping irrelevant rows.

- Indexes work by mapping column values to row locations, typically using a B-tree data structure for rapid lookups.

- Different types of indexes, like Primary Key, Unique, and Composite Indexes, serve various purposes in optimizing database queries.

- While indexes improve query performance by enabling faster reads, they can slow down write operations and consume additional storage space.

- Without indexes, database searches would involve scanning every row, leading to slower query times, especially in large datasets.

- Analogous to a library catalog, indexes guide database searches to specific data efficiently, saving time and resources.

- Understanding index types and implementing them correctly can enhance database query optimization for improved performance.

- Database indexes play a vital role in transforming slow searches into quick lookups, improving overall data retrieval efficiency.
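The effect of an index can be observed with SQLite's `EXPLAIN QUERY PLAN`, which reports whether a query scans the whole table or searches a B-tree index. Below is a minimal sketch using Python's built-in `sqlite3`. The `books` table extends the library analogy and is hypothetical. The exact plan wording varies between SQLite versions.

```python
import sqlite3


def plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return the 'detail' column of EXPLAIN QUERY PLAN, joined into one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE books (id INTEGER PRIMARY KEY, title TEXT, author TEXT)")
    conn.executemany(
        "INSERT INTO books (title, author) VALUES (?, ?)",
        [(f"Title {i}", f"Author {i % 100}") for i in range(10_000)],
    )
    lookup = "SELECT title FROM books WHERE author = ?"
    before = plan(conn, lookup, ("Author 7",))  # no index yet: full table scan
    conn.execute("CREATE INDEX idx_books_author ON books (author)")
    after = plan(conn, lookup, ("Author 7",))   # now a search through the index
    return before, after


before, after = demo()
print("before:", before)
print("after: ", after)
```

The trade-off mentioned above applies here too: every later `INSERT` or `UPDATE` on `author` must also maintain `idx_books_author`.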

Dev

What is SQL and Why Does It Matter

- SQL is a powerful tool for anyone who works with data, enabling communication with relational databases to show, add, change, or remove data.

- SQL is not just about databases but about asking questions, similar to Google Search but for a company's private data.

- SQL is widely used in various companies and tools, including Excel, Google Sheets, Power BI, and Tableau, making it an essential skill in today's data-driven world.

- Learning SQL helps in breaking down problems, asking the right questions, and spotting patterns, outliers, and insights faster, making it a valuable skill for data-related tasks.
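The four basic operations the summary mentions (show, add, change, remove) map onto `SELECT`, `INSERT`, `UPDATE`, and `DELETE`. The following is a minimal sketch using Python's built-in `sqlite3` and a hypothetical `customers` table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")

# Add
conn.executemany("INSERT INTO customers (name, city) VALUES (?, ?)",
                 [("Ana", "Lisbon"), ("Ben", "Leeds"), ("Chen", "Lisbon")])
# Change
conn.execute("UPDATE customers SET city = ? WHERE name = ?", ("Porto", "Ben"))
# Remove
conn.execute("DELETE FROM customers WHERE name = ?", ("Chen",))
# Show: ask a question of the data
rows = conn.execute("SELECT name, city FROM customers ORDER BY name").fetchall()
print(rows)  # [('Ana', 'Lisbon'), ('Ben', 'Porto')]
```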

Dev

🚀 Seamless Data Magic: From Notion to Database with MCP Server & AI Agents

- The goal is to create a seamless connection between non-engineering contributors managing data in Notion and engineers requiring formatted data for database insertion.

- A key challenge is that values entered in Notion, such as 'Japan' or 'Tokyo', are plain text, while the database expects foreign keys that reference separate tables.

- The tech stack includes Notion for data entry, MCP Server for bridging Notion and external tools, Cursor Agent for automation, and TypeScript for data handling.

- The solution uses the Cursor Agent to infer the matching foreign keys from these semantic values, retrieve structured data from Notion, and convert it into a payload ready for database insertion.
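The core step turns human-readable values such as 'Japan' or 'Tokyo' into foreign-key IDs, and it can be sketched deterministically. In the article this inference is delegated to the Cursor Agent and the code is TypeScript. The Python below uses plain lookup tables instead. The table contents, the Notion property names (`Name`, `Country`, `City`), and the payload fields are all hypothetical.

```python
# Hypothetical lookup tables (normalized name -> primary key); in practice these
# would be loaded from the referenced database tables.
COUNTRIES = {"japan": 1, "france": 2}
CITIES = {"tokyo": 10, "osaka": 11, "paris": 20}


def normalize(value: str) -> str:
    """Make lookups tolerant of stray whitespace and letter case."""
    return value.strip().casefold()


def resolve_fk(value: str, table: dict, label: str) -> int:
    """Map a human-readable value to its foreign-key ID, failing loudly if unknown."""
    key = normalize(value)
    if key not in table:
        raise ValueError(f"unknown {label}: {value!r}")
    return table[key]


def to_payload(row: dict) -> dict:
    """Convert one Notion-style row into an insert payload with foreign keys."""
    return {
        "name": row["Name"],
        "country_id": resolve_fk(row["Country"], COUNTRIES, "country"),
        "city_id": resolve_fk(row["City"], CITIES, "city"),
    }


print(to_payload({"Name": "Tokyo office", "Country": " Japan ", "City": "TOKYO"}))
# {'name': 'Tokyo office', 'country_id': 1, 'city_id': 10}
```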

Dev

SQL Project in VS: Why column order matters?

- SQL Projects in Visual Studio allow managing database schema as code and integration with CI/CD pipelines.

- Adding a new column in the middle of a table in a SQL Project is treated as a breaking change: the deployment rebuilds the table through a temporary table, which is slower and risks locking or downtime.

- Best practice is to append new columns at the end of the table to ensure faster, non-blocking deployments without the need for temp tables, making deployments safer and more predictable.

- Understanding the impact of column order in SQL Projects can help avoid performance issues, delays in deployment, and production downtime, ensuring effective and efficient use of SSDT.
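SQLite can serve as an analogy for why a mid-table column is costly. Its `ALTER TABLE ... ADD COLUMN` can only append, so placing a column in the middle means rebuilding the table by copying every row into a new one. This resembles the temporary-table rebuild the article describes for SSDT. This sketch is not the script SSDT generates, and the `customers` table is hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
    conn.execute("INSERT INTO customers (name, email) VALUES ('Ana', 'ana@example.com')")
    conn.commit()
    return conn


def column_names(conn: sqlite3.Connection, table: str) -> list:
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]


def append_column(conn: sqlite3.Connection) -> None:
    # Appending only changes the schema; existing rows are not copied.
    conn.execute("ALTER TABLE customers ADD COLUMN phone TEXT")


def insert_column_in_middle(conn: sqlite3.Connection) -> None:
    # Placing 'phone' between 'name' and 'email' requires a full rebuild:
    # create a new table, copy every row, drop the old one, rename the new one.
    conn.executescript(
        """
        BEGIN;
        CREATE TABLE customers_new (id INTEGER PRIMARY KEY, name TEXT, phone TEXT, email TEXT);
        INSERT INTO customers_new (id, name, email) SELECT id, name, email FROM customers;
        DROP TABLE customers;
        ALTER TABLE customers_new RENAME TO customers;
        COMMIT;
        """
    )


appended = make_db()
append_column(appended)
print(column_names(appended, "customers"))  # ['id', 'name', 'email', 'phone']

rebuilt = make_db()
insert_column_in_middle(rebuilt)
print(column_names(rebuilt, "customers"))   # ['id', 'name', 'phone', 'email']
```

On a large table the rebuild path copies every row, which is the cost the article recommends avoiding by appending new columns at the end.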

Amazon

Amazon DynamoDB data modeling for Multi-tenancy – Part 3

- This article is the third in a series focusing on Amazon DynamoDB data modeling for a multi-tenant issue tracking application.

- It covers the validation of the data model for performance and security, along with extending the model for new access patterns and requirements.

- Validating the data model includes observing scaling patterns, using distributed load testing, and testing tenant isolation.

- Metrics play a crucial role in data model validation, and tools like Amazon CloudWatch Contributor Insights and AWS X-Ray are recommended.

- Key metrics to monitor include most accessed keys and most throttled keys, helping to detect performance issues and bottlenecks.

- Testing for tenant isolation involves creating test cases with different IAM credentials to prevent unauthorized cross-tenant access.

- Extending the data model can be achieved by overloading existing Global Secondary Indexes (GSIs), creating additional GSIs, or adding new tables.

- Considerations such as table per tenant vs. shared table, GSI proliferation costs, on-demand vs. provisioned capacity, and storage optimization are vital for cost efficiency.

- The article concludes by emphasizing the importance of having a scalable and secure DynamoDB data model for multi-tenancy, with recommendations for further learning resources.

- The authors, Dave Roberts, Josh Hart, and Samaneh Utter, bring expertise in areas such as SaaS solutions architecture and DynamoDB.
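Amazon CloudWatch Contributor Insights produces the "most accessed" and "most throttled" key rankings from live traffic. As an offline illustration only, the same two rankings can be computed from an access log. The log format below, a list of `(partition_key, was_throttled)` pairs such as might be collected during a load test, is a hypothetical assumption.

```python
from collections import Counter
from typing import Iterable


def hottest_keys(events: Iterable, n: int = 3):
    """Rank partition keys by accesses and by throttled requests.

    `events` is a hypothetical access log of (partition_key, was_throttled) pairs.
    """
    events = list(events)  # allow a generator to be traversed twice
    accessed = Counter(pk for pk, _ in events)
    throttled = Counter(pk for pk, was_throttled in events if was_throttled)
    return accessed.most_common(n), throttled.most_common(n)


log = (
    [("TENANT#acme", False)] * 5
    + [("TENANT#acme", True)] * 3
    + [("TENANT#globex", False)] * 2
    + [("TENANT#initech", False)]
)
most_accessed, most_throttled = hottest_keys(log, n=2)
print(most_accessed)   # [('TENANT#acme', 8), ('TENANT#globex', 2)]
print(most_throttled)  # [('TENANT#acme', 3)]
```

A single tenant key dominating both lists, as here, is the kind of hot-partition signal the article suggests watching for.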

Amazon

Amazon DynamoDB data modeling for Multi-Tenancy – Part 2

- This article is the second in a series focusing on Amazon DynamoDB data modeling for a multi-tenant SaaS application, particularly an issue tracking application.

- It covers key aspects like selecting a primary key design, creating a data schema, and implementing access patterns using the AWS CLI.

- Considerations for selecting a primary key for multi-tenancy are discussed, emphasizing tenant isolation and DynamoDB's throughput limits.

- The article stresses the importance of using a composite primary key for 'get all' requirements in multi-tenant scenarios.

- It advises against long, descriptive attribute names, because attribute names count toward DynamoDB's 400 KB item size limit.

- The article demonstrates using tenantId in the primary key and strategies to distribute data across partitions to leverage DynamoDB's scalability.

- Best practices for data schema design in SaaS are outlined, focusing on performance optimization and avoiding filter expressions.

- Modeling item collections, summaries, secondary indexes, transactions, and aggregations are discussed to meet various access patterns efficiently.

- Use of DynamoDB Streams and AWS Lambda for aggregations and ensuring tenant isolation in Global Secondary Indexes (GSIs) are highlighted.

- The article concludes by summarizing key takeaways on query efficiency, tenant isolation, GSI usage, and implementation using AWS CLI.
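The key-design ideas can be sketched without AWS access. The sketch places the tenant in the partition key, uses a typed prefix in the sort key, and applies one common way to spread a tenant's items across partitions (a stable hash suffix, often called write sharding). The attribute names `PK`/`SK`, the `TENANT#`/`ISSUE#` prefixes, and the shard count are hypothetical, not necessarily the article's schema. `query_tenant_issues` is an in-memory stand-in for a DynamoDB `Query` with `KeyConditionExpression` `PK = :pk AND begins_with(SK, :prefix)`.

```python
import hashlib

SHARD_COUNT = 4  # hypothetical number of write shards per tenant


def issue_keys(tenant_id: str, issue_id: str) -> dict:
    """Composite primary key: the tenant in the partition key, the issue in the sort key."""
    return {"PK": f"TENANT#{tenant_id}", "SK": f"ISSUE#{issue_id}"}


def sharded_partition_key(tenant_id: str, issue_id: str, shards: int = SHARD_COUNT) -> str:
    """Spread one tenant's items over several partition keys with a stable hash suffix."""
    digest = hashlib.sha256(issue_id.encode("utf-8")).digest()
    shard = int.from_bytes(digest[:4], "big") % shards
    return f"TENANT#{tenant_id}#{shard}"


def query_tenant_issues(items: list, tenant_id: str) -> list:
    """In-memory stand-in: exact match on PK, prefix match on SK."""
    pk = f"TENANT#{tenant_id}"
    return sorted(i["SK"] for i in items if i["PK"] == pk and i["SK"].startswith("ISSUE#"))


table = [issue_keys("acme", "1"), issue_keys("acme", "2"), issue_keys("globex", "1")]
print(query_tenant_issues(table, "acme"))  # ['ISSUE#1', 'ISSUE#2']
```

`hashlib` is used instead of Python's built-in `hash()` because string hashing is randomized per process, which would scatter the same item across shards between runs. With sharding, reading all of a tenant's issues means querying each shard suffix and merging the results.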
