Databases

Javacodegeeks


Image Credit: Javacodegeeks

Introduction to Apache Kylin

- Apache Kylin is an open-source distributed analytics engine that offers fast OLAP queries on large-scale datasets stored in systems like Apache Hive or HDFS.

- Key features of Apache Kylin include pre-computed cubes, standard SQL support, massive scalability, multi-engine compatibility, security integration, and RESTful APIs.

- Apache Kylin precomputes OLAP cubes offline, stores them for fast retrieval, and integrates with BI tools like Tableau and Power BI.

- Its architecture includes components like Web UI, Metadata Store, Query Engine, Cube Engine, Storage Layer, and REST Server.

- Installation using Docker simplifies local setup, enabling users to quickly create projects, data models, and OLAP cubes in the Kylin Web UI.

- Apache Kylin can be queried through its Web UI, from BI tools via JDBC/ODBC drivers, or through its REST API for automated data access, enabling fast analytical insights.

- Apache Kylin is suitable for enterprise BI dashboards, marketing analytics, sales reporting, customer behavior analysis, and telecom data analysis.

- By precomputing cubes, Kylin boosts query speed, making it ideal for interactive dashboards and real-time analytics on big data platforms.

- With its fast analytics capabilities, Apache Kylin serves as a valuable tool for businesses looking to bridge the gap between massive datasets and BI needs.

- Overall, Apache Kylin offers a compelling option for enhancing analytical workloads and enabling real-time business intelligence on big data architectures.
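To make the precomputation idea concrete, here is a minimal Python sketch that assumes a tiny in-memory fact table with hypothetical region, product, and year dimensions. It materializes SUM(sales) for every combination of dimensions (every cuboid) up front, so a GROUP BY query becomes a dictionary lookup instead of a scan. Kylin itself builds and stores cubes with distributed engines and prunes cuboids; none of that is modeled here.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical dimension names for the illustration.
DIMENSIONS = ("region", "product", "year")


def build_cube(rows, dimensions=DIMENSIONS, measure="sales"):
    """Precompute SUM(measure) for every cuboid (every subset of dimensions)."""
    cube = {}
    for size in range(len(dimensions) + 1):
        for dims in combinations(dimensions, size):
            agg = defaultdict(float)
            for row in rows:
                key = tuple(row[d] for d in dims)
                agg[key] += row[measure]
            cube[dims] = dict(agg)
    return cube


def query(cube, group_by, dimensions=DIMENSIONS):
    """Answer a GROUP BY by looking up the matching cuboid, not by scanning rows."""
    unknown = set(group_by) - set(dimensions)
    if unknown:
        raise ValueError(f"not a cube dimension: {sorted(unknown)}")
    # Normalize the requested dimensions to the cube's canonical order.
    dims = tuple(d for d in dimensions if d in group_by)
    return cube[dims]
```

With three dimensions there are 2^3 = 8 cuboids, and the count doubles with every added dimension, which is why cube design and pruning matter in real deployments.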


Javacodegeeks


Image Credit: Javacodegeeks

Fix Cannot Load Driver Class: com.mysql.jdbc.driver in Spring Boot

- The 'Cannot Load Driver Class: com.mysql.jdbc.driver' issue in Spring Boot arises when the application can't load the old MySQL driver class due to a mismatch in driver configuration.

- In older Spring Boot 1.x versions, the driver class name is explicitly set in the configuration, while in Spring Boot 2+, it can be auto-detected based on the JDBC URL.

- If the Spring Boot application is upgraded to a higher version with a mismatched driver class configuration, it can lead to the 'Cannot Load Driver Class' exception.

- To resolve this, make sure the driver class in the application properties matches the MySQL Connector/J version on the classpath: Connector/J 8.0 and later use com.mysql.cj.jdbc.Driver.
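For a .properties file, the fix can be scripted. The Python sketch below assumes the driver class is set through spring.datasource.driver-class-name in application.properties; it rewrites the legacy class name (matched case-insensitively, so the lowercase com.mysql.jdbc.driver typo is caught too, since Java class names are case-sensitive) to com.mysql.cj.jdbc.Driver. The function name fix_driver_class is hypothetical.

```python
import re

# Driver class shipped with MySQL Connector/J 8.0 and later.
CURRENT_DRIVER = "com.mysql.cj.jdbc.Driver"

# Matches "spring.datasource.driver-class-name=com.mysql.jdbc.Driver" (or ':' as the
# separator), ignoring case so a lowercase "...jdbc.driver" typo is also caught.
_LEGACY_LINE = re.compile(
    r"^([ \t]*spring\.datasource\.driver-class-name[ \t]*[=:][ \t]*)"
    r"com\.mysql\.jdbc\.driver[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def fix_driver_class(properties_text: str) -> str:
    """Point the datasource driver property at the Connector/J 8+ class."""
    return _LEGACY_LINE.sub(lambda m: m.group(1) + CURRENT_DRIVER, properties_text)
```

On Spring Boot 2+ the other option is to delete the property entirely, since the driver is then inferred from the jdbc:mysql: URL.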


Dbi-Services


Image Credit: Dbi-Services

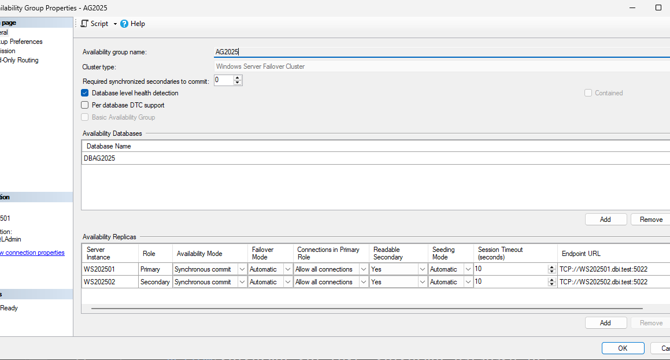

SQL Server 2025 – AG Commit Time

- SQL Server 2025 preview has been publicly available for a week now.

- One engine high availability (HA) feature highlighted in the blog post is Availability Group (AG) Commit Time.

- SQL Server 2025 introduces configurable AG Commit Time for better performance in specific scenarios.

- The post explains how to change the default AG commit time value and how to measure the impact of the change.


Silicon


Image Credit: Silicon

Oracle ‘To Spend $40bn’ On Nvidia Chips For Stargate Campus

- Oracle plans to spend $40 billion on Nvidia GB200 AI accelerators for a data centre in Texas for the Stargate infrastructure project with OpenAI and SoftBank.

- About 400,000 high-end chips will be purchased by Oracle for training AI systems, with the capacity leased to OpenAI.

- The Abilene, Texas site is the first Stargate project and is expected to provide 1.2 gigawatts of computing power upon completion next year.

- Stargate aims to reduce OpenAI's dependence on Microsoft for computing infrastructure, with investments from various companies totaling billions.


Medium


Image Credit: Medium

The engineering Oracle prompt: Tap into 30 years of dev wisdom in one chat

- The Engineering Oracle is a prompt designed to simulate a seasoned software engineer with 30 years of experience.

- It emphasizes deep consultation over quick fixes, helping with complex architectural problems, debugging, and decision-making.

- The Oracle focuses on context, logical thinking models, and customized solutions rather than hand-wavy responses.

- It begins by asking insightful questions to understand the problem before offering solutions, mimicking how veteran engineers approach problems.

- The Oracle not only looks at code issues but also considers business goals, user needs, and team dynamics.

- It provides tailored solutions based on the specific problem, team dynamics, and technology stack, promoting clarity over mere code snippets.

- This prompt is valuable for tackling complex problems, decision-making scenarios, and team-related dilemmas, offering practical advice grounded in experience.

- The Oracle aims to guide through consequences, not just steps, avoiding the trial-and-error approach that may break systems.

- It's not a replacement for developers but rather an AI that thinks like an experienced developer, offering strategic insights and considerations.

- The Oracle excels in guiding through real-world engineering challenges beyond mere technicalities, considering factors like risks, team dynamics, and long-term implications.


Dev


Image Credit: Dev

Converting JSON Data to Tabular in Snowflake — From SQL to SPL #32

- The task involves extracting information from a multi-layered JSON string in a Snowflake database.

- The SpecificTrap field serves as the grouping field, and details such as oid and value are extracted from the variables in the first-layer array.

- Because SQL has limited support for multi-layer data, the SQL solution has to work indirectly through nested queries and grouped aggregation.

- SPL (Structured Process Language), by contrast, supports multi-layer data access directly and allows object-oriented access to such structures.
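The flattening step can be sketched in Python. The exact JSON layout in the article is not reproduced here, so this assumes a hypothetical shape in which each document has a SpecificTrap string and a first-layer "variables" array of {oid, value} objects. Inside Snowflake itself, the usual tool for this job is the LATERAL FLATTEN table function.

```python
import json
from collections import defaultdict


def flatten_traps(json_strings):
    """Turn multi-layer trap JSON into flat (SpecificTrap, oid, value) rows."""
    rows = []
    for text in json_strings:
        doc = json.loads(text)
        trap = doc["SpecificTrap"]
        # Walk the first-layer array ("variables" is an assumed field name).
        for var in doc.get("variables", []):
            rows.append((trap, var["oid"], var["value"]))
    return rows


def group_by_trap(rows):
    """Group flattened rows by SpecificTrap, keeping first-seen order."""
    groups = defaultdict(list)
    for trap, oid, value in rows:
        groups[trap].append((oid, value))
    return dict(groups)
```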


Dev


Image Credit: Dev

My Journey with ASP.NET Core & SQL Server: Lessons Learned

- Yasser Alsousi, a .NET developer, shared lessons learned from his journey with ASP.NET Core and SQL Server.

- Key reasons for choosing ASP.NET Core: cross-platform capabilities, high performance, strong ecosystem, and enterprise-ready features.

- Top 3 beginner tips include mastering C# fundamentals, embracing dependency injection, and following database best practices like starting with SQL Server Express and optimizing with Entity Framework Core.

- Yasser optimized an inventory API from 2s to 200ms response times by adding SQL indexes, implementing caching, and using AsNoTracking() for read-only operations.
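The summary does not say which caching mechanism the inventory API used, so the following is only a language-neutral sketch of the cache-aside pattern with a time-to-live, written in Python rather than C#. The class and method names are hypothetical; the clock is injectable so expiry can be tested without sleeping.

```python
import time


class TtlCache:
    """Cache-aside helper: reuse a loaded value until it is ttl_seconds old."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock      # injectable time source
        self._entries = {}      # key -> (loaded_at, value)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]     # fresh hit: no database round trip
        value = loader()        # miss or stale entry: run the read-only query
        self._entries[key] = (now, value)
        return value

    def invalidate(self, key):
        """Drop an entry after a write so the next read reloads it."""
        self._entries.pop(key, None)
```

The loader is where a read-only query (the role AsNoTracking() plays in EF Core) would go; invalidating on writes keeps the cache from serving stale inventory for a full TTL.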


Dev


Image Credit: Dev

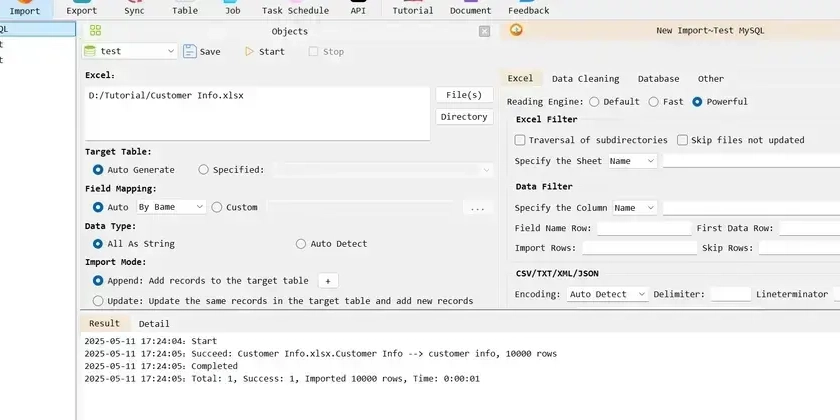

Fastest way to import excel into mysql

- Introduction to the fastest way to import Excel data into MySQL.

- Preparation involves creating an Excel table for import.

- Steps include creating a new connection, initiating the import process, and optimizing for faster import speed.

- DiLu Converter is highlighted as a powerful tool supporting multiple databases for simplified Excel import and export.
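How DiLu Converter speeds up imports internally is not described here. A common general technique is batching: sending many rows per INSERT instead of one statement per row. This Python sketch assumes the spreadsheet rows are already loaded as tuples and that table and column names come from a trusted source (they are interpolated as identifiers); values are left as %s placeholders for the database driver to bind. The function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def batched_inserts(table: str, columns: Sequence[str], rows: Sequence[Sequence],
                    batch_size: int = 1000) -> List[Tuple[str, list]]:
    """Build multi-row INSERT statements so each round trip carries many rows."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    # Identifiers are trusted input here; values are bound as parameters.
    col_list = ", ".join(f"`{c}`" for c in columns)
    row_placeholder = "(" + ", ".join(["%s"] * len(columns)) + ")"
    statements = []
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        sql = (f"INSERT INTO `{table}` ({col_list}) VALUES "
               + ", ".join([row_placeholder] * len(batch)))
        params = [value for row in batch for value in row]
        statements.append((sql, params))
    return statements
```

Each (sql, params) pair would be passed to a driver cursor's execute() inside a single transaction. For very large sheets, exporting to CSV and using MySQL's LOAD DATA LOCAL INFILE is usually faster still.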


Siliconangle


Image Credit: Siliconangle

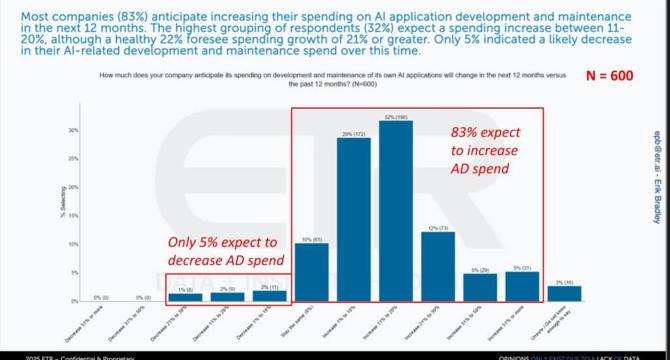

AI budgets are hot, IT budgets are not

- Many enterprises are still unsure about the benefits of AI investments versus historical IT initiatives like ERP, data warehousing, and cloud computing.

- ETR's data shows a shift towards building in-house AI applications, with 83% of IT decision makers planning to increase spend on AI app/dev in 2025.

- There is a consensus across different buyer types on expanding budgets for custom AI workloads to accelerate time-to-value.

- Enterprises are still in proof-of-concept or early production stages, indicating a multi-year investment wave in AI application development.

- Geopolitical tension and shifting policy frameworks are not derailing enterprise AI agendas, with more firms proceeding cautiously than slowing down adoption.

- ROI for AI projects lags: 27% of respondents have yet to see tangible returns, which indicates that enterprises are still experimenting rather than harvesting immediate benefits.

- C-suite executives rank AI initiatives as the category second most vulnerable to cuts, after outsourced IT services, which suggests that experimental AI funding is at risk.

- AI budget growth expectations have retreated, indicating a cautious sentiment towards IT spending due to economic uncertainties and geopolitical unrest.

- Policy uncertainty is causing executives to tap the brakes on net-new IT projects, with 71% acknowledging some form of pullback due to uncertainty.

- Enterprise adoption of specific AI foundation models shows OpenAI's GPT leading in mindshare, with Microsoft's Azure OpenAI Service being widely adopted.


Dev


Image Credit: Dev

🚦 Oracle 19c vs PostgreSQL 15 — The Ultimate Parameter Showdown!

- The article compares Oracle 19c and PostgreSQL 15 across various parameters in a side-by-side checklist.

- More than 200 parameters in categories such as Memory, CPU, I/O, Connections, Optimizer, Logging, Security, Background Jobs, Redo/WAL, and Miscellaneous are compared.

- Parameters like memory allocation, CPU configuration, I/O operations, connection limits, optimizer settings, logging setups, security features, background job controls, and more are included in the comparison.

- It highlights key differences and equivalences between Oracle and PostgreSQL parameters, aiding in tasks like workload migration, database tuning, cloud strategy planning, and parameter mapping.
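Parameter mapping during a migration can start from a lookup table. The Python sketch below uses a handful of rough, commonly cited Oracle-to-PostgreSQL counterparts; it is not the article's checklist, and because semantics and units differ, it only pairs names and leaves values for manual review.

```python
# Rough Oracle 19c -> PostgreSQL 15 counterparts. Semantics and units differ,
# so each pair is a starting point for review, not a drop-in translation.
ORACLE_TO_POSTGRES = {
    "processes": "max_connections",
    "db_cache_size": "shared_buffers",
    "pga_aggregate_target": "work_mem",  # instance-wide target vs. per-operation limit
    "log_buffer": "wal_buffers",
    "db_block_size": "block_size",       # read-only in PostgreSQL, fixed at build time
}


def map_parameters(oracle_params: dict) -> dict:
    """Pair Oracle settings with candidate PostgreSQL names; values are not converted."""
    mapped, unmapped = {}, {}
    for name, value in oracle_params.items():
        target = ORACLE_TO_POSTGRES.get(name.lower())
        if target is None:
            unmapped[name] = value           # no rough equivalent in this table
        else:
            mapped[name] = (target, value)   # original value kept for manual review
    return {"mapped": mapped, "unmapped": unmapped}
```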


Pymnts


Image Credit: Pymnts

Oracle to Buy $40 Billion Worth of Nvidia Chips for First Stargate Data Center

- Oracle plans to buy $40 billion worth of Nvidia chips to power the first Stargate project, a data center in Abilene, Texas.

- The data center is expected to be operational by mid-2026 and will provide 1.2 gigawatts of power, making it one of the largest in the world.

- The Stargate project, announced by President Donald Trump, aims to build AI-focused data centers in the U.S., with the first 10 in Texas.

- AI data centers like Stargate's require specialized hardware and infrastructure to handle the computational power needed for AI workloads.


Amazon


Image Credit: Amazon

Explore the new openCypher custom functions and subquery support in Amazon Neptune

- Amazon Neptune has released openCypher features as part of the 1.4.2.0 engine update, offering support for custom functions and CALL subqueries.

- Neptune is a managed graph database service that supports open graph query languages: openCypher, Apache TinkerPop Gremlin, and SPARQL 1.1.

- The latest engine release introduced features including CALL subqueries for running specific queries on a node-by-node basis.

- A CALL subquery runs additional MATCH statements for each row passed into it, so an openCypher query can look up related data separately for every node it has matched.

- Neptune's openCypher custom functions include textIndexOf, collToSet, collSubtract, collIntersection, collSort, collSortMaps, collSortMulti, and collSortNodes.

- These functions support tasks like searching text, creating unique sets, and sorting collections of data in various ways.

- Examples provided showcase how these custom functions can be utilized for advanced querying and data manipulation in Neptune.

- Users can now leverage these new features to enhance their graph applications and perform complex operations efficiently.

- Neptune also offers options for bulk loading data into Neptune Database or Neptune Analytics, providing practical data management solutions.

- The post concludes with suggestions on getting started with Neptune, such as creating clusters, upgrading to the latest version, and using open source tools like graph-explorer.
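A per-node CALL subquery can be sent from Python through the boto3 neptunedata client. The graph model in this sketch (airport nodes with code, country, and runways properties, linked by route edges) is a hypothetical example rather than one taken from the post, and the query is an untested illustration of the top-k-per-node pattern that CALL subqueries enable.

```python
import json

# Hypothetical graph: (:airport {code, country, runways})-[:route]->(:airport)
TOP_ROUTES_QUERY = """
MATCH (a:airport)
WHERE a.country = $country
CALL {
  WITH a
  MATCH (a)-[:route]->(dest:airport)
  RETURN dest
  ORDER BY dest.runways DESC
  LIMIT 3
}
RETURN a.code AS origin, collect(dest.code) AS top_destinations
"""


def run_open_cypher(client, query: str, parameters: dict) -> list:
    """Send an openCypher query through a boto3 'neptunedata' client."""
    response = client.execute_open_cypher_query(
        openCypherQuery=query,
        parameters=json.dumps(parameters),  # the API takes parameters as a JSON string
    )
    return response["results"]
```

A real client would be created with boto3.client("neptunedata", endpoint_url=...) pointing at the cluster endpoint; the inner subquery runs once for each matched airport, which is what makes the per-node LIMIT possible.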


Amazon


Image Credit: Amazon

Connect Amazon Bedrock Agents with Amazon Aurora PostgreSQL using Amazon RDS Data API

- Generative AI applications are being integrated with relational databases like Amazon Aurora PostgreSQL-Compatible Edition using RDS Data API, Amazon Bedrock, and Amazon Bedrock Agents.

- The solution leverages large language models to interpret natural language queries and generate SQL statements, utilizing Data API for database interactions.

- The architecture involves invoking Bedrock agents, using foundation models, generating SQL queries with Lambda functions, and executing them on Aurora PostgreSQL via Data API.

- Security measures include agent-level instructions for read-only operations, action group validation, read-only database access, and Bedrock guardrails to prevent unauthorized queries.

- Prerequisites for implementing this solution include an AWS account, IAM permissions, an IDE, Python with Boto3, and AWS CDK installed.

- Deploying the solution involves creating an Aurora PostgreSQL cluster with AWS CDK and deploying the Bedrock agent for natural language query interactions.

- The solution provides functions for generating SQL queries from natural language prompts and executing them using Data API, ensuring dynamic data retrieval.

- Considerations include limiting this integration to read-only workloads, validating parameters to prevent SQL injection, implementing caching strategies, and ensuring logging and auditing for compliance.

- To test the solution, scripts can be run to generate test prompts, execute SQL queries, and review responses from the database.

- Cleanup steps involve deleting resources created through CDK to avoid incurring charges for unused resources.

- The integration of AI-driven interfaces with databases showcases the potential for streamlining database interactions and making data more accessible to users.
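The execution step, with a read-only check in front of it, might look like the Python sketch below. It uses the boto3 rds-data client's execute_statement call with bound :name parameters and JSON-formatted records; the function names are hypothetical, and the SELECT-only regex is deliberately naive (it cannot see inside string literals or side-effecting functions), so a read-only database user, as the solution describes, remains the real safeguard.

```python
import json
import re

# Naive allow-list: a single statement that starts with SELECT.
_SELECT_ONLY = re.compile(r"^\s*select\b", re.IGNORECASE)


def is_read_only(sql: str) -> bool:
    """Reject anything other than one SELECT statement (a trailing ';' is tolerated)."""
    body = sql.strip().rstrip(";")
    return ";" not in body and bool(_SELECT_ONLY.match(body))


def to_field(value):
    """Convert a Python value into a Data API Field dict."""
    if isinstance(value, bool):  # check bool before int: bool is a subclass of int
        return {"booleanValue": value}
    if isinstance(value, int):
        return {"longValue": value}
    if isinstance(value, float):
        return {"doubleValue": value}
    return {"stringValue": str(value)}


def run_read_only_query(client, resource_arn, secret_arn, database, sql, params):
    """Validate model-generated SQL, then run it through a boto3 'rds-data' client."""
    if not is_read_only(sql):
        raise ValueError("only single SELECT statements are allowed")
    response = client.execute_statement(
        resourceArn=resource_arn,
        secretArn=secret_arn,
        database=database,
        sql=sql,  # values are bound via :name placeholders, never formatted into the SQL
        parameters=[{"name": k, "value": to_field(v)} for k, v in params.items()],
        formatRecordsAs="JSON",
    )
    return json.loads(response["formattedRecords"])
```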


Amazon


Image Credit: Amazon

Run SQL Server post-migration activities using Cloud Migration Factory on AWS

- Migrating SQL Server databases to Amazon EC2 through AWS Application Migration Service is a crucial step towards infrastructure modernization.

- Post-migration activities are essential for optimizing, securing, and ensuring functionality in the new SQL Server environment.

- Cloud Migration Factory on AWS (CMF) automates tasks such as validating database status and performance settings post-migration.

- The CMF Implementation Guide describes how this automation streamlines the migration process, improving efficiency and scalability.

- CMF automation process involves pre-migration preparation, migration, and post-migration activities phases.

- CMF automation jobs enable efficient execution of post-migration tasks for SQL Server databases on EC2 instances.

- Post-migration activities include linked server tests, memory configuration, database status checks, consistency checks, and connectivity tests.

- Using CMF automation offers benefits like efficiency, consistency, scalability, visibility, security, and customization for post-migration tasks.

- Best practices for automating post-migration activities include using Windows Authentication, providing summary messages, and incorporating appropriate return codes.

- Cleaning up resources post-migration is important to avoid ongoing charges.
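The best practice of ending each automation job with a summary message and an appropriate return code can be sketched in Python. This does not reproduce CMF's own scripts; the check names and helpers are hypothetical. The database-status check assumes rows of (name, state_desc), such as those returned by SELECT name, state_desc FROM sys.databases.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


def check_database_states(rows) -> CheckResult:
    """Flag any database whose state_desc is not ONLINE."""
    bad = [name for name, state in rows if state != "ONLINE"]
    return CheckResult("database status", not bad, ", ".join(bad))


def summarize(results: List[CheckResult]) -> Tuple[str, int]:
    """Build a one-line summary and a return code (0 = every check passed)."""
    failed = [r for r in results if not r.passed]
    summary = f"{len(results) - len(failed)}/{len(results)} post-migration checks passed"
    if failed:
        summary += "; failed: " + ", ".join(
            f"{r.name} ({r.detail})" if r.detail else r.name for r in failed
        )
    return summary, (1 if failed else 0)
```

A job wrapper would print the summary and exit with the code (for example via sys.exit), so that the orchestration layer can mark the step as failed without parsing logs.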


Dev


Image Credit: Dev

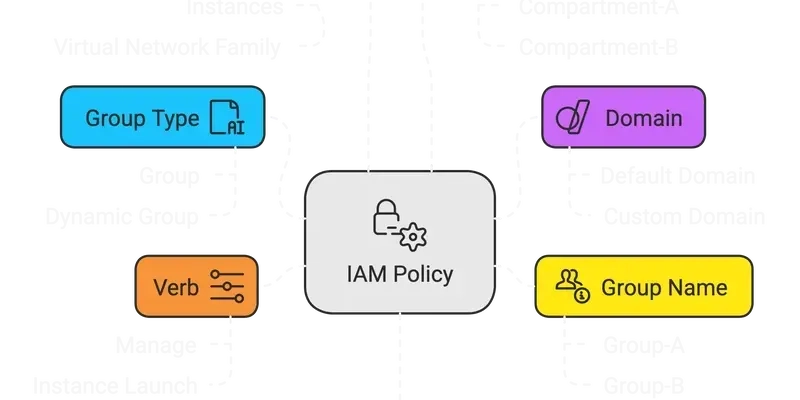

An Overview to OCI IAM Policies

- Oracle Cloud Infrastructure (OCI) IAM policies provide granular control over resource access by granting permissions to groups of users and groups of resources.

- The syntax of OCI IAM policies follows the pattern: Allow group <group_name> to <verb> <resource-type> in <location>.

- User accounts are handled using a straightforward syntax, while resource principals use dynamic-groups for more specific control.

- Group IDs can be used instead of names in policies to specify user groups with more accuracy.

- Policies can also be applied to OCI services using specific permissions like managing networking resources.

- Policies can be declared at different levels such as tenancy, compartments, and even individual resources.

- Conditions can be added to policies to restrict when they apply, using criteria like permissions, tags, regions, and more.

- Policies can be made more granular by specifying verbs for general function permissions and API-specific permissions.

- Resource-specific policies can restrict access to specific types of resources like objects in Object Storage or secrets in Vault & Key Management.

- Overall, OCI IAM policies offer a robust framework for managing access control in Oracle Cloud environments.
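The statement pattern above is regular enough to assemble programmatically. This Python sketch builds statements of the form Allow <subject> to <verb> <resource-type> in <location> [where <conditions>] and checks the verb against OCI's four verbs (inspect, read, use, manage). The helper name and the example groups, compartments, and bucket names are hypothetical.

```python
VERBS = ("inspect", "read", "use", "manage")


def policy_statement(subject: str, verb: str, resource_type: str,
                     location: str, condition: str = "") -> str:
    """Assemble: Allow <subject> to <verb> <resource-type> in <location> [where <condition>]."""
    if verb not in VERBS:
        raise ValueError(f"verb must be one of {VERBS}")
    # subject examples: "group NetworkAdmins", "dynamic-group app-instances",
    # or "group id <group OCID>"
    statement = f"Allow {subject} to {verb} {resource_type} in {location}"
    if condition:
        statement += f" where {condition}"
    return statement
```

Generating statements this way keeps verbs and clauses consistent across many policies, while the resource types and condition variables still need to be checked against the OCI policy reference.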
