Databases

Alvinashcraft

Dew Drop – May 7, 2025 (#4414)

- Microsoft introduces new Surface Copilot+ PCs including Surface Pro and Surface Laptop

- .NET CLI tool can now be turned into a local MCP Server for GitHub Copilot in VS Code

- Fedora Linux becomes an official WSL distro, Nested App Authentication now available in Microsoft 365

- New updates in Kubernetes v1.33, Node v24.0.0 released, and Windows experiences improved

Dev

Indexing in MySQL for PHP Developers: Do’s and Don’ts

- MySQL indexing is a crucial aspect for PHP developers to optimize database performance.

- Indexing helps in quick searching and retrieval of data by creating a structured way of accessing information.

- Do’s of MySQL indexing include indexing columns used in WHERE, ORDER BY, and GROUP BY clauses.

- Utilizing composite indexes for multiple column queries can enhance performance.

- Indexing foreign keys speeds up join processes for table relationships.

- Regularly analyzing queries with EXPLAIN and optimizing indexes is essential for efficient database operations.

- Avoid over-indexing to prevent negative impacts on INSERT, UPDATE, and DELETE operations.

- Avoid indexing low-cardinality columns, where a few values repeat across most rows; such indexes rarely narrow a search.

- Be cautious about wrapping indexed columns in functions or expressions in WHERE clauses, since this can prevent MySQL from using the index.

- Keep data types compatible between indexed columns and the values they are compared with; a mismatch can force MySQL into a full table scan.
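
The advice about checking plans with EXPLAIN and about functions in WHERE clauses can be tried out in a few lines. The sketch below is illustrative only: it uses Python's built-in sqlite3 module as a stand-in for MySQL (SQLite's EXPLAIN QUERY PLAN plays the role of MySQL's EXPLAIN), and the orders table, its columns, and the index name are hypothetical.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the planner's description of how it would run `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The human-readable detail text is the fourth column of each row.
    return " | ".join(row[3] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER, "
    "status TEXT, created_at TEXT, note TEXT)"
)
# Hypothetical composite index covering the WHERE and ORDER BY columns.
conn.execute(
    "CREATE INDEX idx_orders_customer_created "
    "ON orders (customer_id, created_at)"
)

# Filtering on the bare column lets the planner search the index.
indexed_plan = query_plan(
    conn,
    "SELECT * FROM orders WHERE customer_id = ? ORDER BY created_at",
    (42,),
)

# Wrapping the column in a function hides it from the index.
wrapped_plan = query_plan(
    conn,
    "SELECT * FROM orders WHERE abs(customer_id) = ?",
    (42,),
)

print("bare column:   ", indexed_plan)
print("wrapped column:", wrapped_plan)
```

In MySQL the equivalent check would be to run EXPLAIN on the same statements and look at the key column of the output; exact plans differ between engines and versions.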

Dbi-Services

M-Files IMPACT Global Conference 2025 – Day 1

- The annual M-Files Customer and Partner Conference is being held in Athens, Greece, offering attendees a chance to network and learn about the latest M-Files product developments.

- Keynote sessions by M-Files CEO Jay Bhatt and Founder Antti Nivala discussed strategic themes like reducing content friction, providing a horizontal platform with vertical applications, automating workflows, and leading in applied AI.

- The event highlighted the M-Files product vision and roadmap for 2025, focusing on AI innovation, user experience, enhancing knowledge work, and achieving rapid time to value at scale.

- Sessions throughout the day covered collaborations with Microsoft, customer success stories, and technical and business-related topics, culminating in an evening gathering to wrap up day 1 of the conference.

Dev

How to Manage Polymorphic Associations in SQL Efficiently?

- Introduction to Polymorphic Associations in SQL: Managing relationships in relational databases can be complex, especially with entities associated with multiple other entities.

- The Problem with Current Implementation: Reliance on VARCHAR columns for relationships lacks elegance and data integrity. String manipulation for parsing ownership and referencing IDs from different tables makes data integrity maintenance challenging.

- Advantages of Using Foreign Key Constraints: Foreign key constraints ensure data integrity, lead to cleaner queries, and ease maintenance compared to string-based relationships.

- Exploring Alternatives: Using a junction table or separate tables for different owners can provide more robust designs and enhance readability and maintainability in SQL implementations.
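
One way to picture the "separate tables for different owners" alternative is sketched below. It is an assumption-laden illustration, not the article's schema: the posts, photos, and comments tables and the two link tables are hypothetical, and SQLite (via Python's sqlite3 module) stands in for whichever database is actually used. Each link table carries real foreign keys, so the database itself rejects a reference to a missing owner.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript(
    """
    CREATE TABLE posts    (id INTEGER PRIMARY KEY, title TEXT NOT NULL);
    CREATE TABLE photos   (id INTEGER PRIMARY KEY, url   TEXT NOT NULL);
    CREATE TABLE comments (id INTEGER PRIMARY KEY, body  TEXT NOT NULL);

    -- One link table per owner type instead of a VARCHAR like 'post:1'.
    CREATE TABLE post_comments (
        comment_id INTEGER PRIMARY KEY REFERENCES comments(id),
        post_id    INTEGER NOT NULL    REFERENCES posts(id)
    );
    CREATE TABLE photo_comments (
        comment_id INTEGER PRIMARY KEY REFERENCES comments(id),
        photo_id   INTEGER NOT NULL    REFERENCES photos(id)
    );
    """
)


def link_is_rejected(conn, comment_id, post_id):
    """Try to attach a comment to a post; report whether the FK blocked it."""
    try:
        conn.execute(
            "INSERT INTO post_comments (comment_id, post_id) VALUES (?, ?)",
            (comment_id, post_id),
        )
    except sqlite3.IntegrityError:
        return True
    return False


conn.execute("INSERT INTO posts (id, title) VALUES (1, 'Hello')")
conn.execute("INSERT INTO comments (id, body) VALUES (10, 'Nice post')")
conn.execute("INSERT INTO comments (id, body) VALUES (11, 'Orphan')")

valid_rejected = link_is_rejected(conn, 10, 1)      # post 1 exists
orphan_rejected = link_is_rejected(conn, 11, 999)   # post 999 does not
link_count = conn.execute("SELECT COUNT(*) FROM post_comments").fetchone()[0]
```

With a string-based owner column, the second insert would succeed silently and leave a dangling reference; here the constraint stops it.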

HRM Asia

Day 1 of HR Tech Asia 2025: Building tech fluency and inclusive AI for the future of work

- HR Tech Asia 2025 took place from 5 to 8 May in Singapore, emphasizing (Re)Align, (Re)Define & (Re)Invent.

- Gan Siow Huang stressed the importance of tech adoption for organisations and of implementing AI inclusively.

- Brian Sommer highlighted AI's impact on HR and the need for AI fluency in decision-making.

- A panel discussed digital maturity, critical skills, and business value delivery in HR.

- Quah emphasized HR's role in understanding business trends for strategic talent management.

- MacInnis noted AI's potential to democratize talent access globally.

- Muthusamy discussed progressing HR capabilities towards advanced digital functions at Visa.

- Lalchandani highlighted agility's importance in navigating global uncertainties for business resilience.

- Kung Teong Wah tackled job redesign myths and shared successful transformation strategies.

- Dr. Corrie Block discussed love's transformative impact on leadership and workplace culture.

- Harkanwal Virdi and Rachna Sampayo focused on AI's role in automating tasks, personalized employee experiences, and workforce design.

Analyticsindiamag

Oracle and Andhra Pradesh Government to Train 400,000 Students in AI, Cloud and Data Science

- Oracle has partnered with the Andhra Pradesh State Skill Development Corporation to provide skills development training in AI, Cloud, and Data Science to 400,000 students.

- The initiative will offer free, structured digital training through Oracle MyLearn, covering over 300 hours of content including Oracle Cloud Infrastructure, AI services, Data Science, APEX, DevOps, and Security.

- The collaboration aims to enhance employability and equip students for technology-driven careers, with a focus on creating a highly skilled IT workforce in Andhra Pradesh.

- Students will have access to tailored learning paths, certifications, and digital badges to showcase their job readiness, contributing to Oracle's mission of empowering youth with future-ready skills in India.

Global Fintech Series

New Oracle Cloud Services Help Retail Financial Institutions Modernize Lending and Collections

- Oracle introduced new cloud services, Oracle Banking Retail Lending Servicing Cloud Service and Oracle Banking Collections Cloud Service, to help retail financial institutions modernize their lending operations.

- These services are part of the Oracle Banking Cloud Services retail banking portfolio, aiming to accelerate digital transformation and improve borrower experiences.

- Oracle Banking Retail Lending Servicing Cloud Service optimizes loan product management and operations, while Oracle Banking Collections Cloud Service supports the complete collection lifecycle and management of delinquencies.

- The cloud-native services enable financial institutions to modernize with less risk, automate processes, and deliver enhanced digital services to customers, all built on the security and scalability of Oracle Cloud Infrastructure.

Dbi-Services

13

Image Credit: Dbi-Services

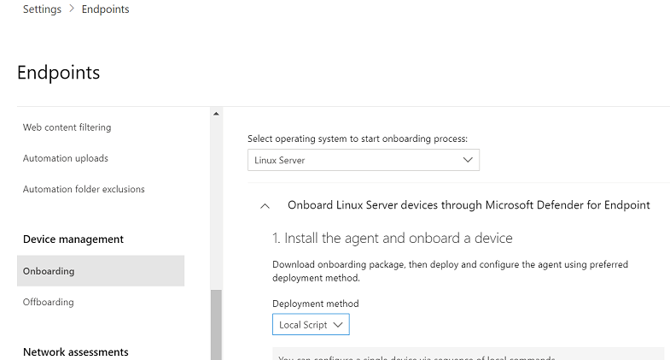

Setting up Microsoft Defender & Arc on Linux

- Setting up Microsoft Defender and Arc on a Linux server may seem counterintuitive but has its benefits.

- The setup is based on Microsoft's official best practices for Defender on Linux.

- Generate an onboarding script from the Microsoft Azure portal for Linux servers.

- Download and save the onboarding package for deployment on each server.

- Different setup steps are provided for RPM-based distributions like CentOS and SLES, as well as Debian and Ubuntu.

- Commands for installing necessary dependencies, adding repositories, and installing packages are outlined.

- Configuration steps for enabling real-time protection and connecting to Arc are detailed.

- Benefits of using Defender on Linux include real-time threat protection and cross-platform support.

- Defender also offers advanced threat detection, vulnerability checks, centralized management, and compliance assistance.

- Newly added Linux machines may take some time to appear in the Defender and Azure Arc portals while synchronization completes.

Alvinashcraft

Dew Drop – May 6, 2025 (#4413)

- TechBash 2025 Keynotes Announced, Docker MCP Catalog and Toolkit introduced for AI agents, and Teams AI Library and MCP support updated.

- Web & Cloud Development highlights include error handling in Minimal API apps, Azure services comparison, and improving WebStorm's performance.

- Insights on migrating Xamarin apps to .NET MAUI, nuances of StringComparison.InvariantCulture, and best practices for shared libraries in .NET apps.

- AI updates cover remote MCP server registration, Azure ML features, Generative AI for SDKs, and the benefits of vibe coding.

- Discussions on observability, Flutter DataGrids for trading apps, Android Material 3 design leak, and exploring mirror fabric for 3D printing.

- Podcasts cover AI code generation, Agile history, cybersecurity, developer trends, and insights on microservices.

- Events and community updates, along with database insights on MySQL, SQL Server ML integration, Postgres, and SharePoint Roadmap Pitstop.

- PowerShell release announcement and diverse topics like OpenAI's evolution, Windows 11 Insider builds, wind energy lawsuits, and the 20th anniversary of Open Document Format.

- Additional link collections featuring AWS updates, reading lists, and book recommendations on user experience design and writing.

Medium

Easy Ways to Speed Up SQL Queries

- Adding indexes can help your database find data quicker, especially for commonly searched columns.

- Cleaning up joins, using INNER JOIN, and ensuring columns have indexes can speed up SQL queries.

- Examining the query plan with tools like EXPLAIN in MySQL can help identify and fix any slowdowns.

- Limiting the amount of data pulled and using WHERE to filter early can also improve query performance.

Dev

How to Select N Messages with Sum of Verbosity Less than N?

- To select messages in SQL whose total verbosity stays below a limit N, a recursive approach or a cumulative-sum strategy can be used.

- The process involves creating a Common Table Expression (CTE) to recursively build the result set while ensuring the total verbosity stays under the specified threshold.

- The SQL solution described is compatible with databases like PostgreSQL and SQLite, leveraging features such as CTEs for clarity and modularity.

- By adjusting the threshold value '70' in the query, the solution can be applied to different scenarios where the sum of a specific column needs to be limited.
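
The cumulative-sum strategy can be sketched as follows. This is not the article's query: it swaps the recursive CTE for a window-function running total, runs on SQLite through Python's sqlite3 module (SQLite 3.25 or newer is assumed for window functions), and uses a hypothetical messages table with made-up rows. Only the threshold of 70 comes from the summary above. It also assumes verbosity values are positive, so that the running total only grows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE messages (id INTEGER PRIMARY KEY, verbosity INTEGER)")
conn.executemany(
    "INSERT INTO messages (id, verbosity) VALUES (?, ?)",
    [(1, 20), (2, 30), (3, 15), (4, 10), (5, 40)],  # illustrative data
)

# Running total in id order; keep rows while the total stays under the cap.
RUNNING_SUM_SQL = """
    SELECT id, verbosity, running_total
    FROM (
        SELECT id,
               verbosity,
               SUM(verbosity) OVER (ORDER BY id) AS running_total
        FROM messages
    )
    WHERE running_total < :max_total
    ORDER BY id
"""


def messages_under(conn, max_total):
    """Return (id, verbosity, running_total) rows below `max_total`."""
    return conn.execute(RUNNING_SUM_SQL, {"max_total": max_total}).fetchall()


selected = messages_under(conn, 70)
print(selected)  # [(1, 20, 20), (2, 30, 50), (3, 15, 65)]
```

Changing the argument to `messages_under` plays the same role as editing the threshold value in the original query.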

HRKatha

Oracle to skill 4 lakh students in Andhra Pradesh, make them employable

- Oracle and Andhra Pradesh State Skill Development Corporation (APSSDC) collaborate to provide skills development training to four lakh students in Andhra Pradesh to enhance their employability.

- The training will be delivered through Oracle MyLearn platform, offering access to digital training material and foundational certifications in various technologies like Oracle Cloud Infrastructure, AI services, Data Science, DevOps, and Security.

- The initiative aims to create a highly-skilled IT workforce in Andhra Pradesh, offering training in cloud technologies, professional-level certifications, and additional training tailored to individual learning levels and educational goals.

- Students will also earn badges showcasing their readiness for specialized job roles, facilitating their job placement within the state.

Dev

Substring from a Column of Strings — From SQL to SPL #25

- Retrieve the word ‘EN’ and the subsequent string of numbers from the DESCRIPTION field in a database table tbl.

- If the string does not contain ‘EN’, return null. The string of numbers may include digits and special characters like '/', '-', '+', '%', or '_'.

- In SQL, the task can be completed using CROSS APPLY with XML syntax, but the code is complex and lengthy.

- In SPL (Structured Process Language), the task can be achieved with string-splitting functions and ordered calculation functions, using simple, easy-to-understand code.
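
For readers who want to see the extraction rule itself, here is a minimal sketch in Python rather than SQL or SPL. It is neither the article's SQL nor its SPL code: the sample DESCRIPTION values are invented, and the pattern assumes that 'EN' starts a word and may be separated from the number string by optional whitespace, which the summary does not specify.

```python
import re

# 'EN' at a word start, optional whitespace, then digits and / - + % _
EN_PATTERN = re.compile(r"\bEN\s*[0-9/\-+%_]+")


def extract_en(description):
    """Return 'EN' plus the following number string, or None if absent."""
    match = EN_PATTERN.search(description)
    return match.group(0) if match else None


# Hypothetical DESCRIPTION values for illustration only.
samples = [
    "Steel pipe EN 10216-2 seamless",
    "Flange EN1092-1/11",
    "Bolt M8 DIN 933",
]
results = [extract_en(text) for text in samples]
print(results)  # ['EN 10216-2', 'EN1092-1/11', None]
```

Returning None mirrors the "return null" requirement for rows that do not contain 'EN'.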

Dev

How to Write a SELECT SQL Query for Oracle 19c Groups?

- This article discusses how to write a SELECT SQL query in Oracle 19c to identify the latest account numbers from specific groups based on previous account numbers.

- The dataset comprises Account Number and Previous Number columns, allowing for the determination of the latest account number in each group.

- The query utilizes Common Table Expressions (CTEs) and functions like REGEXP_SUBSTR and ROW_NUMBER to isolate the latest account numbers for distinct groups.

- By leveraging SQL techniques and structured querying, users can efficiently extract the most recent records from hierarchical data structures in Oracle 19c.
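
The underlying idea, following each chain of previous numbers to find the newest account in a group, can be shown outside the database. The Python sketch below is not the article's Oracle query and does not use REGEXP_SUBSTR or ROW_NUMBER; it assumes each row holds one account number and at most one previous number, and the sample rows are invented.

```python
def latest_accounts(rows):
    """Map each group's original account number to its latest one.

    `rows` is an iterable of (account_number, previous_number) pairs,
    where previous_number is None for the first account in a group.
    """
    previous_of = {account: previous for account, previous in rows}
    # Any account that appears as someone's previous number was replaced.
    superseded = {p for p in previous_of.values() if p is not None}

    latest = {}
    for account in previous_of:
        if account in superseded:
            continue  # not the newest in its chain
        # Walk back to the start of the chain, guarding against cycles.
        root, seen = account, {account}
        while previous_of.get(root) is not None and previous_of[root] not in seen:
            root = previous_of[root]
            seen.add(root)
        latest[root] = account
    return latest


# Hypothetical data: two groups, A1 -> A2 -> A3 and B1 -> B2.
rows = [("A3", "A2"), ("A2", "A1"), ("A1", None), ("B2", "B1"), ("B1", None)]
groups = latest_accounts(rows)
print(groups)  # {'A1': 'A3', 'B1': 'B2'}
```

In the database, the same walk would typically be expressed with a recursive or hierarchical query rather than application code.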

Dev

How to Create a PHP Registration Form as a Single Page Application?

- Creating a multi-page registration form in PHP can be cumbersome, especially with slow load times due to new HTTP requests for each page.

- A Single Page Application (SPA) approach enhances user experience by loading HTML once and using JavaScript for smooth navigation.

- Benefits of SPA include faster navigation, improved user experience, and reduced server load through fewer requests.

- Converting a PHP registration form to an SPA involves setting up the project, creating form pages with JavaScript, and handling form submission efficiently.
