Databases

Dev

Image Credit: Dev

How to Implement Mixed Computations Between Oracle and MySQL with esProc

- Logical data warehouses often require configuring views and preprocessing data before mixed computations across multiple sources can run.

- DuckDB provides a lightweight solution but lacks a native Oracle connector, making custom development complex.

- esProc is a lightweight solution that supports the common JDBC interface, allowing mixed computations between various relational databases.

- esProc can be used to perform cross-database mixed computations by connecting to Oracle and MySQL, loading data, and executing scripts.
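
esProc scripts are written in its own SPL language, which the summary does not show. As a rough Python analogue of the same idea, the sketch below uses two separate in-memory SQLite databases as hypothetical stand-ins for the Oracle and MySQL sources (in practice each would be reached through its own driver), pulls rows from each, and joins and aggregates them in application memory. The table names and data are made up for illustration.

```python
import sqlite3
from collections import defaultdict

def make_source(ddl, insert_sql, rows):
    """Create an in-memory SQLite database standing in for one real source."""
    conn = sqlite3.connect(":memory:")
    conn.execute(ddl)
    conn.executemany(insert_sql, rows)
    conn.commit()
    return conn

# Hypothetical "Oracle" source: customer master data.
oracle_like = make_source(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT)",
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "EU"), (2, "US"), (3, "EU")],
)

# Hypothetical "MySQL" source: order transactions.
mysql_like = make_source(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)",
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(10, 1, 100.0), (11, 2, 50.0), (12, 3, 25.0), (13, 1, 75.0)],
)

def revenue_by_region(customers_conn, orders_conn):
    """Join rows from two independent connections in memory, then aggregate."""
    # Load the small dimension table from the first source into a dict.
    region_of = dict(customers_conn.execute("SELECT id, region FROM customers"))
    # Stream the fact rows from the second source and sum per region.
    totals = defaultdict(float)
    for customer_id, amount in orders_conn.execute("SELECT customer_id, amount FROM orders"):
        region = region_of.get(customer_id)
        if region is not None:  # inner-join semantics: skip orders without a customer
            totals[region] += amount
    return dict(sorted(totals.items()))

print(revenue_by_region(oracle_like, mysql_like))  # {'EU': 200.0, 'US': 50.0}
```

Loading the smaller table into a dictionary and streaming the larger one is a simple in-memory hash join; it avoids defining views or staging data in a central warehouse, at the cost of moving the rows to the computing process.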

VoltDB

Image Credit: VoltDB

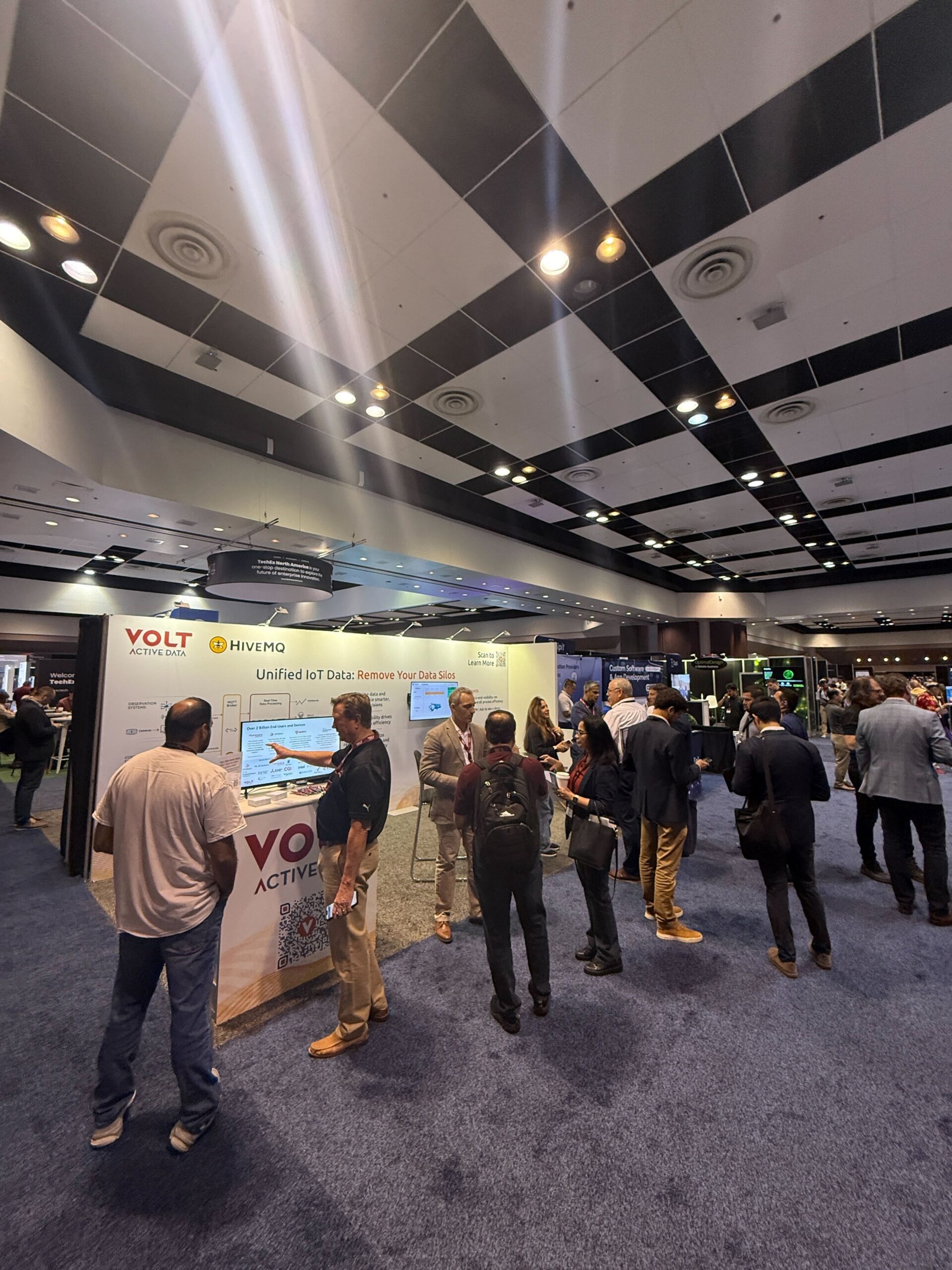

From Tsunami to Transformation: 6 Key Takeaways from IoT Tech Expo North America 2025

- IoT Tech Expo North America 2025 highlighted the convergence of Industrial IoT, AI, and edge computing, emphasizing the need for enterprises to adapt quickly to stay relevant.

- Edge computing is evolving to become the primary point of value creation in real-time systems, with a projected 400% increase in edge devices in the next five years.

- AI and IoT were lauded as a potent combination driving industrial transformation, with AI at the edge enabling autonomy and responsive decision-making.

- Predictive maintenance is maturing, shifting towards data-centric scheduling and dynamic predictive models based on AI and machine learning.

- Resilience is fundamental in complex edge networks, leading to the rise of self-healing systems that adapt and recover autonomously.

- Agentic AI, capable of real-time decision-making at the edge, is emerging as a key aspect, enabling automation in sectors like manufacturing.

- Data overload emerged as a significant challenge, underscoring the need to clean data and break down silos to obtain actionable, real-time insights.

- The expo stressed intentional innovation, urging businesses to focus on solving real problems, collecting relevant data, and applying intelligence for decision-making.

- The call to action is to re-architect for a future of distributed, intelligent decision-making to stay ahead of the curve in the face of upcoming technological advancements.

Dbi-Services

Image Credit: Dbi-Services

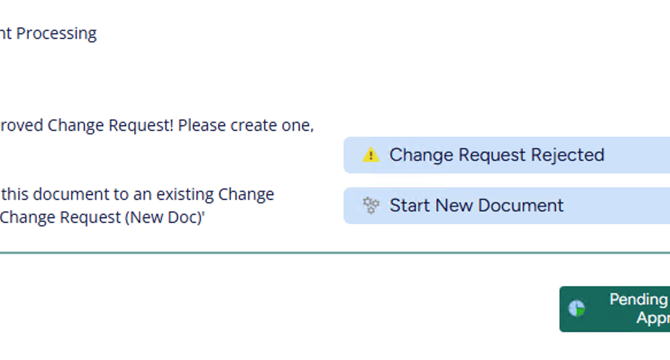

M-Files Online June 2025 (25.6) Sneak Preview

- The June 2025 release of M-Files Client includes new features like Visual Workflows, Global Search, Co-authoring improvements, and M-Files URL enhancements.

- The Visual Workflows feature lets users see a workflow's status in a graphical view.

- Global Search enables searching across all accessible vaults and provides quicker access to different vaults from the home screen.

- Co-authoring improvements now make co-authoring the default behavior when opening documents in Microsoft 365 Office desktop applications.

Siliconangle

Image Credit: Siliconangle

Beyond walled gardens: How Snowflake navigates new competitive dynamics

- Snowflake is evolving by embracing open data and extending its governance model across an open data ecosystem, while sharpening its message of simplicity.

- The company is focusing on Systems of Intelligence (SOIs) to become a high-value part of the artificial intelligence-optimized software stack.

- Snowflake's shift from an analytic warehouse to enabling intelligent data apps is highlighted, signaling a strategic pivot.

- The company is improving its platform to cater not only to traditional analytic workload customers but also data engineering and data science personas.

- Snowflake's post-walled-garden strategy aims to turn cooperative openness into control of the System of Intelligence layer, where it faces competition from various application vendors.

- The industry is shifting towards Systems of Intelligence, reframing competitive debates and emphasizing the integration of analytics with operational applications.

- Snowflake's strategic focus is on binding analytic insight to operational action before competitors to secure a strong position in the System of Intelligence layer.

- At Snowflake Summit 2025, the company introduced various features aligned with an engagement-intelligence-agency model, aiming to expand its total addressable market.

- Snowflake's approach includes simplifying data access, extending governance, enhancing data pipelines, and focusing on offering a comprehensive AI application framework.

- Snowflake's key challenges include reclaiming data engineering workloads, closing the ingest gap with competing connectors, and solidifying its position in the System of Intelligence high ground.

- The company is working to secure control of the rich, governed knowledge graph that it sees as the source of enterprise value in an agent-driven future.

Dev

Image Credit: Dev

How to Configure Docker Volumes for MySQL

- Data written inside a container is lost when the container is removed, so Docker provides volumes to persist data independently of any single container.

- Volumes are crucial for databases like MySQL to ensure persistent storage.

- You can create, list, inspect, and remove volumes using Docker commands.

- Running a MySQL container with a volume mounted at its data directory keeps the database files on the volume, so they survive the container being removed and re-created.
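
The volume workflow described above maps onto the standard Docker CLI subcommands. The sketch below wraps them in a small Python helper that only builds the argument lists, so it runs without Docker installed; pass a list to `subprocess.run(argv, check=True)` to execute it. The volume name `mysql-data`, container name `mysql-db`, the `mysql:8.0` tag and the password are hypothetical choices; `/var/lib/mysql` is the data directory used by the official MySQL image.

```python
import shlex

VOLUME = "mysql-data"   # hypothetical volume name
CONTAINER = "mysql-db"  # hypothetical container name
IMAGE = "mysql:8.0"     # official MySQL image; choose the tag you need

def volume_commands(volume):
    """Docker CLI invocations for managing a named volume."""
    return {
        "create": ["docker", "volume", "create", volume],
        "list": ["docker", "volume", "ls"],
        "inspect": ["docker", "volume", "inspect", volume],
        "remove": ["docker", "volume", "rm", volume],
    }

def run_mysql(volume, container, image, root_password):
    """`docker run` that mounts the named volume at MySQL's data directory."""
    return [
        "docker", "run", "-d",
        "--name", container,
        "-e", f"MYSQL_ROOT_PASSWORD={root_password}",
        "-v", f"{volume}:/var/lib/mysql",  # database files live on the volume
        image,
    ]

if __name__ == "__main__":
    for name, argv in volume_commands(VOLUME).items():
        print(f"{name:8} {shlex.join(argv)}")
    print(f"{'run':8} {shlex.join(run_mysql(VOLUME, CONTAINER, IMAGE, 'change-me'))}")
```

Because a named volume is not deleted by `docker rm`, removing the container and repeating the same `docker run` command reattaches the existing data.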

Mjtsai

Pair Networks Price Increase

- Pair Networks, a hosting provider, significantly increased its rates from $88 to $159 per year, with the change taking effect upon service renewal starting January 1, 2025.

- The company also announced the launch of new Pair Platinum Mail services, replacing the current free email offerings. The transition to the new email service bundles will increase the cost from $159 to $639 starting June 1, 2025.

- Customers expressed dissatisfaction with Pair's approach, citing the lack of flexibility in purchasing mailboxes and the perceived marketing rigidity of the new service offerings.

- This sudden increase in prices, coupled with changes in services, has prompted customers to explore alternative hosting options or consider migrating their websites to different servers.

VoltDB

Image Credit: VoltDB

Predictive vs. Preventive Maintenance: What’s the Difference—and Why It Matters

- Preventive maintenance is a scheduled approach involving routine inspections and part replacements at set intervals, while predictive maintenance uses real-time data to forecast maintenance needs based on equipment condition.

- Preventive maintenance reduces unexpected breakdowns but may lead to unnecessary maintenance, whereas predictive maintenance minimizes downtime by addressing developing faults before they cause failures.

- Preventive maintenance relies on scheduled triggers and has lower data dependence, while predictive maintenance requires real-time data platforms, sensors, and analytics, making it more complex and suitable for critical systems.

- The ideal strategy often involves a combination of preventive and predictive maintenance, evolving from preventive to predictive as data capabilities grow, with Volt Active Data highlighted for enabling predictive maintenance through real-time data processing.
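
To make the contrast concrete, here is a minimal sketch of the two trigger styles, assuming hypothetical hourly vibration readings. The preventive rule services at a fixed interval; the predictive rule fires when the rolling mean of recent readings crosses a threshold. Real predictive systems typically use trained models rather than a fixed threshold, so treat this as a simplification.

```python
from collections import deque

def preventive_schedule(total_hours, interval_hours):
    """Time-based: service at fixed intervals, regardless of equipment condition."""
    return list(range(interval_hours, total_hours + 1, interval_hours))

def predictive_triggers(readings, window, threshold):
    """Condition-based: service when the rolling mean of a sensor reading
    exceeds `threshold`. `readings` holds one value per hour; returns the
    (1-based) hours at which maintenance would be triggered."""
    recent = deque(maxlen=window)
    triggers = []
    for hour, value in enumerate(readings, start=1):
        recent.append(value)
        if len(recent) == window and sum(recent) / window > threshold:
            triggers.append(hour)
            recent.clear()  # start a fresh window so one episode yields one trigger
    return triggers

# Hypothetical vibration readings: stable, then a developing fault, then repaired.
readings = [1.0] * 6 + [2.0, 3.0, 4.0, 4.5] + [1.0] * 4

print("preventive:", preventive_schedule(len(readings), interval_hours=4))  # [4, 8, 12]
print("predictive:", predictive_triggers(readings, window=3, threshold=3.0))  # [10]
```

The fixed schedule services at hours 4, 8 and 12 whatever the readings show, while the condition-based rule fires once, at hour 10, when the degradation actually appears in the data.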

Amazon

Image Credit: Amazon

Real-time Iceberg ingestion with AWS DMS

- Real-time Iceberg ingestion with AWS DMS enables low-latency access to fresh data with reduced complexity and improved efficiency.

- AWS DMS simplifies the migration of various data stores, enabling migrations into the AWS Cloud or between cloud and on-premises setups.

- Iceberg, an open table format, facilitates large-scale analytics on data lakes with ACID support, schema evolution, and time travel.

- Etleap customers benefit from Iceberg by achieving low latency, operational consistency, and simplifying data replication across multiple data warehouses.

- Exactly-once processing in low-latency pipelines is ensured using Flink's two-phase commit protocol, maintaining data integrity and fault tolerance.

- Iceberg table maintenance includes tasks like data file compaction and snapshot expiration to ensure high query performance and storage efficiency.

- Etleap integrates Iceberg tables with query engines such as Amazon Athena, Amazon Redshift, and Snowflake, making data queryable across platforms.

- Combining AWS tools with Iceberg's features yields reliable, low-latency data pipelines that support real-time operational requirements and data lake modernization.

- The architecture demonstrated in the post enables streaming changes from operational databases to Iceberg with end-to-end latencies of under 5 seconds.

- Caius Brindescu, a Principal Engineer at Etleap with expertise in Java backend development and big data technologies, highlights the benefits of Iceberg ingestion.
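
The exactly-once guarantee mentioned above rests on a two-phase commit: rows are staged when a checkpoint starts and only published once the checkpoint is confirmed. The toy model below is loosely based on the phases of Flink's two-phase commit sinks (write, pre-commit, commit, abort); it is an in-memory illustration, not the Flink or Iceberg API, and `ToyTable` and `TwoPhaseSink` are hypothetical names. De-duplicating commits by checkpoint id is one common way to make a retried commit harmless.

```python
from dataclasses import dataclass, field

@dataclass
class ToyTable:
    """Stand-in for an Iceberg table: an append-only list of snapshots."""
    snapshots: list = field(default_factory=list)  # (checkpoint_id, rows) per commit
    committed_ids: set = field(default_factory=set)

    def atomic_append(self, checkpoint_id, rows):
        # Idempotent: replaying a commit for the same checkpoint adds nothing.
        if checkpoint_id in self.committed_ids:
            return
        self.snapshots.append((checkpoint_id, list(rows)))
        self.committed_ids.add(checkpoint_id)

    def rows(self):
        return [row for _, batch in self.snapshots for row in batch]

class TwoPhaseSink:
    def __init__(self, table):
        self.table = table
        self.buffer = []   # rows written since the last checkpoint
        self.pending = {}  # checkpoint_id -> staged rows, not yet visible

    def write(self, row):
        self.buffer.append(row)

    def pre_commit(self, checkpoint_id):
        """Phase 1, on checkpoint: stage buffered rows; readers see nothing yet."""
        self.pending[checkpoint_id] = self.buffer
        self.buffer = []

    def commit(self, checkpoint_id):
        """Phase 2, after the checkpoint is confirmed: publish staged rows atomically."""
        rows = self.pending.pop(checkpoint_id, None)
        if rows is not None:
            self.table.atomic_append(checkpoint_id, rows)

    def abort(self, checkpoint_id):
        """Checkpoint failed: drop staged rows; the source replays them later."""
        self.pending.pop(checkpoint_id, None)

table = ToyTable()
sink = TwoPhaseSink(table)
sink.write("a")
sink.write("b")
sink.pre_commit(1)
sink.commit(1)
sink.write("c")
sink.pre_commit(2)
sink.abort(2)                  # checkpoint 2 fails; staged "c" is discarded
sink.write("c")                # the source rewinds and replays "c"
sink.pre_commit(3)
sink.commit(3)
table.atomic_append(3, ["c"])  # a retried commit after a restart is a no-op
print(table.rows())            # ['a', 'b', 'c']
```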

Amazon

Image Credit: Amazon

Migrate Google Cloud SQL for PostgreSQL to Amazon RDS and Amazon Aurora using pglogical

- PostgreSQL is a popular open-source relational database for many developers and can be easily deployed on AWS with services like Amazon RDS and Aurora PostgreSQL-Compatible Edition.

- Migrating a PostgreSQL database from Google Cloud SQL to Amazon RDS or Aurora can be accomplished using the pglogical extension for logical replication.

- The pglogical extension replicates data changes efficiently and is resilient to network faults, functioning with both RDS for PostgreSQL and Aurora PostgreSQL.

- The steps involve setting up the primary Cloud SQL instance, configuring logical replication with the pglogical extension, and creating provider and subscriber nodes.

- Limitations of pglogical include the need for workarounds for sequences, primary key changes, extensions, materialized views, and DDLs during migration.

- The setup also supports replicating from a lower PostgreSQL version to a higher one.

- The use of surrogate keys, manual extension replication, and considerations for schema changes and large objects are discussed to address limitations in replication.

- The post provides detailed steps, commands, and precautions for migrating from Google Cloud SQL to Amazon RDS for PostgreSQL or Aurora PostgreSQL.

- Authors include Sarabjeet Singh, Kranthi Kiran Burada, and Jerome Darko, who specialize in database solutions and migrations at AWS.
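
The provider/subscriber setup can be sketched with pglogical's SQL functions (`create_node`, `replication_set_add_all_tables`, `create_subscription`, `show_subscription_status`). The Python helper below only generates the statements to run on each side; node names, DSNs and the `public` schema are hypothetical. It assumes logical decoding is already enabled (on Cloud SQL via the `cloudsql.logical_decoding` and `cloudsql.enable_pglogical` flags, on RDS or Aurora via `rds.logical_replication` and `pglogical` in `shared_preload_libraries`); check the post for the exact parameter steps.

```python
def sql_literal(value):
    """Quote a string as a SQL literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"

def provider_sql(node_name, dsn, schemas):
    """Statements for the source (Cloud SQL) database."""
    schema_array = "ARRAY[" + ", ".join(sql_literal(s) for s in schemas) + "]"
    return [
        "CREATE EXTENSION IF NOT EXISTS pglogical;",
        f"SELECT pglogical.create_node(node_name := {sql_literal(node_name)}, dsn := {sql_literal(dsn)});",
        # Put every table of the listed schemas into the 'default' replication set.
        f"SELECT pglogical.replication_set_add_all_tables('default', {schema_array});",
    ]

def subscriber_sql(node_name, dsn, subscription, provider_dsn):
    """Statements for the target (RDS or Aurora) database."""
    return [
        "CREATE EXTENSION IF NOT EXISTS pglogical;",
        f"SELECT pglogical.create_node(node_name := {sql_literal(node_name)}, dsn := {sql_literal(dsn)});",
        f"SELECT pglogical.create_subscription(subscription_name := {sql_literal(subscription)}, "
        f"provider_dsn := {sql_literal(provider_dsn)});",
        f"SELECT * FROM pglogical.show_subscription_status({sql_literal(subscription)});",
    ]

# Hypothetical endpoints; credentials are omitted.
source_dsn = "host=cloudsql-host port=5432 dbname=appdb user=replicator"
target_dsn = "host=rds-host port=5432 dbname=appdb user=replicator"
for stmt in provider_sql("provider", source_dsn, ["public"]):
    print(stmt)
for stmt in subscriber_sql("subscriber", target_dsn, "cloudsql_to_rds", source_dsn):
    print(stmt)
```

By default `create_subscription` copies data but not the schema, so the table definitions (for example from `pg_dump --schema-only`) usually need to exist on the target first, which ties in with the DDL limitations listed above.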

Amazon

Image Credit: Amazon

Upgrade your Amazon DynamoDB global tables to the current version

- Amazon DynamoDB is a serverless NoSQL database with single-digit millisecond performance that offers global tables for replicating data across AWS Regions.

- The Current version of global tables (2019.11.21) is more efficient and user-friendly compared to the Legacy version (2017.11.29).

- Operational benefits of the Current version include improved availability, operational efficiency, and cost effectiveness.

- The Current version offers lower costs, consuming up to 50% less write capacity than the Legacy version for common operations.

- Upgrading to the Current version requires meeting prerequisites, such as consistent TTL settings and GSI configurations across all replicas.

- Considerations before upgrading involve understanding behavior differences between versions, like changes in conflict resolution methods.

- The upgrade can be started with a single click in the AWS Management Console and proceeds without interrupting availability.

- Post-upgrade validation involves confirming data accessibility and conducting conflict resolution tests across regions.

- Common challenges during the upgrade process include inconsistencies in settings and permissions, which can be mitigated through proper auditing and tooling updates.

- AWS recommends using the Current version for enhanced cost efficiency, automated management, and multi-region resilience.
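
Since inconsistent settings between replicas are a common upgrade blocker, a pre-upgrade audit can simply compare each setting across Regions. The sketch below assumes you have already collected per-Region settings into plain dictionaries (a hypothetical shape; in practice you might assemble it from DescribeTable and DescribeTimeToLive calls in each Region) and reports every setting whose value differs.

```python
def find_inconsistencies(replicas):
    """Return every setting whose value differs between replicas.

    `replicas` maps region -> settings dict (hypothetical shape). The result
    maps setting name -> {region: value} for each mismatched setting.
    """
    all_keys = set().union(*(settings.keys() for settings in replicas.values()))
    problems = {}
    for key in sorted(all_keys):
        values = {region: settings.get(key) for region, settings in replicas.items()}
        first, *rest = values.values()
        if any(value != first for value in rest):
            problems[key] = values
    return problems

# Hypothetical audit data: TTL is enabled in only one Region.
replicas = {
    "us-east-1": {"ttl_attribute": "expires_at", "gsis": frozenset({"by_customer"})},
    "eu-west-1": {"ttl_attribute": None, "gsis": frozenset({"by_customer"})},
}
print(find_inconsistencies(replicas))
# {'ttl_attribute': {'us-east-1': 'expires_at', 'eu-west-1': None}}
```

Comparing with `!=` rather than string representations keeps set-valued settings such as GSI names order-independent.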

Cloudblog

Image Credit: Cloudblog

From data lakes to user applications: How Bigtable works with Apache Iceberg

- The latest Bigtable Spark connector version offers enhanced support for Bigtable and Apache Iceberg, enabling direct interaction with operational data for various use cases.

- Users can leverage the Bigtable Spark connector to build data pipelines and to support ML model training, ETL/ELT, and real-time dashboards that read Bigtable data directly from Apache Spark.

- Integration with Apache Iceberg facilitates working with open table formats, optimizing queries and supporting dynamic column filtering.

- Through Data Boost, high-throughput read jobs can be executed on operational data without affecting Bigtable's performance.

- Use cases include accelerated data science by enabling data scientists to work on operational data within Apache Spark environments, and low-latency serving for real-time updates and serving predictions.

- The Bigtable Spark connector simplifies reading and writing data from Bigtable using Apache Spark, with the option to create new tables and perform batch mutations for higher throughput.

- Apache Iceberg's table format simplifies analytical data storage and sharing across engines like Apache Spark and BigQuery, complementing Bigtable's capabilities.

- Combining advanced analytics with both Bigtable and Iceberg enables powerful insights and machine learning models while ensuring high availability and real-time data access.

- User applications like fraud detection and predictive maintenance can benefit from utilizing Bigtable Spark connector in combination with Iceberg tables for efficient data processing.

- The integration of Bigtable, Apache Spark, and Iceberg allows for accelerated data processing, efficient data pipelines handling large workloads, and low-latency analytics for user-facing applications.

Dev

Image Credit: Dev

Safeguarding Your PostgreSQL Data: A Practical Guide to pg_dump and pg_restore

- PostgreSQL users can rely on pg_dump and pg_restore utilities for data backup and recovery.

- pg_dump exports a PostgreSQL database into a script or archive file from which the database can be recreated.

- Key options for pg_dump include connection settings, output control, and selective backup features.

- Backup strategies like full database, specific tables, schema-only, and plain text backups are discussed.

- pg_restore and psql are used for restoring backups in various formats.

- Restoration scenarios cover custom/archive formats and plain text dumps.

- Advanced tips address permissions, ownership issues, and choosing the right backup format.

- Recommendations include automating backups, which is crucial for data safety, and using tools such as Chat2DB to simplify tasks, optimize queries, and improve productivity.

- Understanding and effectively using pg_dump and pg_restore is essential for data protection and management in PostgreSQL.
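
The backup and restore scenarios above can be expressed as command lines built from standard pg_dump, pg_restore and psql options (`-h`, `-p`, `-U`, `-F`, `-f`, `-t`, `-s`, `-d`, `--no-owner`). The Python helpers below are a sketch that only assembles the argument lists; database, file and user names are hypothetical, and passwords are expected to come from `~/.pgpass` or `PGPASSWORD`. Run a list with `subprocess.run(argv, check=True)`.

```python
def pg_dump_cmd(dbname, outfile, *, host="localhost", port=5432, user="postgres",
                fmt="c", tables=(), schema_only=False):
    """Build a pg_dump argv. fmt: 'c' custom, 'd' directory, 't' tar, 'p' plain SQL."""
    argv = ["pg_dump", "-h", host, "-p", str(port), "-U", user, "-F", fmt, "-f", outfile]
    for table in tables:
        argv += ["-t", table]  # dump only the named tables
    if schema_only:
        argv.append("-s")      # object definitions only, no data
    argv.append(dbname)
    return argv

def restore_cmd(dumpfile, target_db, *, plain=False, host="localhost", port=5432,
                user="postgres", no_owner=True):
    """Build the matching restore command for a dump file."""
    conn = ["-h", host, "-p", str(port), "-U", user, "-d", target_db]
    if plain:
        # Plain-text dumps are SQL scripts, replayed with psql.
        return ["psql", *conn, "-f", dumpfile]
    argv = ["pg_restore", *conn]
    if no_owner:
        argv.append("--no-owner")  # objects end up owned by the restoring user
    argv.append(dumpfile)
    return argv

print(pg_dump_cmd("shop", "shop.dump"))
print(pg_dump_cmd("shop", "schema.sql", fmt="p", schema_only=True))
print(restore_cmd("shop.dump", "shop_copy"))
print(restore_cmd("schema.sql", "shop_copy", plain=True))
```

The custom format (`-F c`) is compressed by default and lets pg_restore restore objects selectively or in parallel with `-j`, which is why it is a common default; plain SQL is easier to inspect and edit.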

Sdtimes

Image Credit: Sdtimes

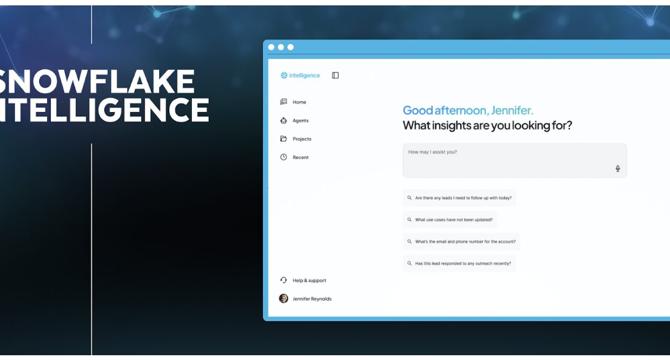

Snowflake introduces agentic AI innovations for data insights

- Snowflake has introduced new agentic AI innovations to bridge the gap between enterprise data and business activity, creating connected and trusted AI and ML workflows.

- Snowflake Intelligence, powered by intelligent data agents, offers a natural language experience for generating actionable insights from structured and unstructured data, utilizing Snowflake Openflow and LLMs from Anthropic and OpenAI.

- Snowflake's AI capabilities have expanded with solutions like SnowConvert AI and Cortex AISQL, enabling faster migrations from legacy platforms to Snowflake and providing generative AI tools for extracting insights from various data sources.

- CData Software has launched the CData Snowflake Integration Accelerator, offering no-code data integration tools for Snowflake customers to enhance data ingestion, transformations, and live connectivity with Snowflake data.

Dev

Image Credit: Dev

Postgres vs. MySQL: DDL Transaction Difference

- Database schema changes require careful planning and execution, making DDL transaction handling crucial for database management systems.

- Transactional DDL in PostgreSQL 17 allows DDL statements in multi-statement transaction blocks for atomic commit or rollback.

- In PostgreSQL, DDL is fully transactional except for a few commands, such as CREATE DATABASE and CREATE TABLESPACE, that cannot run inside a transaction block.

- MySQL 8 introduced Atomic DDL, which provides statement-level atomicity but does not support multi-statement DDL transactions.

- In MySQL 8, each DDL statement implicitly commits any active transaction before it executes.

- MySQL's atomic DDL is limited to the InnoDB storage engine, where it provides crash-safe, statement-level atomicity.

- In PostgreSQL, transactional DDL allows for complete rollback of all DDL operations within a transaction.

- Because MySQL 8 supports only statement-level atomicity, a block of several DDL statements cannot be undone as a unit.

- PostgreSQL's DDL handling supports multi-statement transactions and savepoints, providing fine-grained control over schema changes.

- In summary, PostgreSQL 17 offers full transactional DDL with multi-statement support, while MySQL 8 provides atomic DDL at the statement level.
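
Transactional DDL is easy to observe directly. Because PostgreSQL needs a running server, the sketch below uses SQLite (bundled with Python's standard library), which also supports transactional DDL, as a stand-in; the same `BEGIN`, `SAVEPOINT` and `ROLLBACK TO SAVEPOINT` statements behave the same way in PostgreSQL. In MySQL 8, each `CREATE TABLE` would implicitly commit, so the first `ROLLBACK` would leave both tables in place.

```python
import sqlite3

# isolation_level=None puts the sqlite3 module in autocommit mode, so the
# BEGIN / SAVEPOINT / ROLLBACK statements below run exactly as written.
conn = sqlite3.connect(":memory:", isolation_level=None)

def table_names(c):
    rows = c.execute("SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")
    return [name for (name,) in rows]

# 1) Several DDL statements rolled back as a single unit.
conn.execute("BEGIN")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY)")
conn.execute("CREATE TABLE audit_log (id INTEGER PRIMARY KEY, account_id INTEGER)")
conn.execute("ROLLBACK")
print(table_names(conn))  # []

# 2) A savepoint undoes only part of a schema change.
conn.execute("BEGIN")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY)")
conn.execute("SAVEPOINT add_log")
conn.execute("CREATE TABLE audit_log (id INTEGER PRIMARY KEY)")
conn.execute("ROLLBACK TO SAVEPOINT add_log")  # discards audit_log only
conn.execute("COMMIT")
print(table_names(conn))  # ['accounts']
```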

VentureBeat

Image Credit: VentureBeat

CockroachDB’s distributed vector indexing tackles the looming AI data explosion enterprises aren’t ready for

- Cockroach Labs' latest update focuses on distributed vector indexing and agentic AI at distributed SQL scale, promising a 41% efficiency gain along with core database improvements.

- Building on a decade-long reputation for resilience, CockroachDB emphasizes survivability to meet mission-critical needs, especially in the AI era.

- Vector-capable databases for AI systems have become commonplace in 2025, yet distributed SQL remains crucial for large-scale deployments.

- CockroachDB's C-SPANN vector index utilizes the SPANN algorithm to handle billions of vectors across a distributed system.

- The index is nested within existing tables, enabling efficient similarity searches at scale by creating a hierarchical partition structure.

- Security features in CockroachDB 25.2 include row-level security and configurable cipher suites to address regulatory requirements and enhance data protection.

- Nearly 80% of technology leaders feel unprepared for new regulations, reflecting growing concern over the financial impact of outages caused by security vulnerabilities.

- The rise of AI-driven workloads introduces 'operational big data,' demanding real-time performance and consistency for mission-critical applications.

- Efficiency improvements in CockroachDB 25.2, like generic query plans and buffered writes, enhance database performance and optimize query execution.

- Leaders in AI adoption must consider investing in distributed database architectures to handle the anticipated data traffic growth from agentic AI.
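
The partitioning idea behind C-SPANN can be illustrated in miniature. The sketch below is not C-SPANN, which is hierarchical, distributed and maintains its partitions incrementally; it is a single-level, IVF-style index with hand-picked centroids (hypothetical data). Each vector is stored in the partition of its nearest centroid, and a query scans only the `nprobe` closest partitions instead of every vector.

```python
import math
from collections import defaultdict

class PartitionedIndex:
    """Toy single-level partitioned vector index (IVF-style)."""

    def __init__(self, centroids):
        self.centroids = [tuple(c) for c in centroids]
        self.partitions = defaultdict(list)  # centroid index -> [(key, vector)]

    def _nearest_centroids(self, vector, n):
        order = sorted(range(len(self.centroids)),
                       key=lambda i: math.dist(vector, self.centroids[i]))
        return order[:n]

    def add(self, key, vector):
        # Each vector is stored only in the partition of its closest centroid.
        (best,) = self._nearest_centroids(vector, 1)
        self.partitions[best].append((key, tuple(vector)))

    def search(self, query, k=1, nprobe=1):
        # Scan only the `nprobe` partitions closest to the query.
        candidates = []
        for p in self._nearest_centroids(query, nprobe):
            candidates.extend(self.partitions[p])
        candidates.sort(key=lambda item: math.dist(query, item[1]))
        return [key for key, _ in candidates[:k]]

index = PartitionedIndex(centroids=[(0, 0), (10, 10)])
for key, vec in {"a": (1, 1), "b": (0, 2), "c": (9, 9), "d": (11, 10)}.items():
    index.add(key, vec)

print(index.search((8, 8), k=2))  # ['c', 'd'] (only the (10, 10) partition is scanned)
print(index.search((1, 0)))       # ['a']
```

Scanning fewer partitions is what makes the search cheap, but it is also why such indexes are approximate: a true nearest neighbor just across a partition boundary can be missed unless `nprobe` is raised.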
