Deep Learning News

Medium

AI Gold Rush: How $48B in VC Funding Is Fueling the Next Tech Revolution

- The rapid increase in venture capital (VC) funding is reshaping AI startups and global innovation, driving unprecedented growth and opportunities.

- AI startups have raised $48.4 billion in 2024 alone, marking a 25% increase from the previous year.

- The rise of AI has led to a competitive landscape, with machine learning startups raising $113.6 billion, followed by AI software and IT startups.

- VC funding is playing a crucial role in the global race to lead in AI, with the US, Europe, and Asia vying for dominance.

Medium

AI in Education 2025: 10 Brutal Truths Every Student & Teacher Must Know

- AI education tools need to go beyond basic functionality like Google Forms or PDFs.

- Many schools lack proper training to utilize AI tools effectively.

- The use of AI in education should encourage critical thinking, not just offer repetitive content.

Medium

The Power Of Prompts

- The concept of 'biophilia' and its impact on human well-being.

- Exposure to natural sounds affects stress levels and cognitive function.

- Psychological effects of urban environments versus natural landscapes.

- Psychological factors contributing to conformity in social groups.

Medium

How to Master Advanced LLM Prompt Engineering Techniques

- Crafting prompts for ChatGPT-4 can enhance accuracy and engagement.

- The Chain-of-Thought prompting technique revolutionizes prompt engineering.

- Understanding the basics of prompt engineering is crucial for desired outputs.

- Effective prompting is essential to overcome challenges and improve task accuracy.

Medium

AI Talent Development in India: Education, Diversity & Global Innovation

- India's AI talent development requires a comprehensive overhaul of the educational system.

- The gap between potential and opportunity in India's AI landscape needs to be bridged.

- Fostering interdisciplinary learning and collaboration between academia and industry is crucial for innovation.

- Internship programs, joint research projects, and industry-sponsored labs are key components of India's AI talent development.

Medium

The Ultimate Guide to Cutting-Edge Deepfake Detection Techniques of 2025

- Unveiling the future of deepfake detection, this guide explores real-time solutions and advanced multimodal methods revolutionizing cybersecurity.

- Deepfakes, cleverly manipulated pieces of content designed to deceive, pose a pressing issue with implications in misinformation, fraud, and privacy violations.

- Current deepfake detectors have major vulnerabilities, lacking the ability to reliably identify real-world deepfakes outside their training data.

- The need for more adaptable and resilient deepfake detection solutions is critical in combating the rising threat of deepfakes.

Medium

2025’s Must-Have AI Tools: Revolutionize Your Business Now

- AI tools are set to redefine business landscapes in 2025, enhancing productivity and marketing strategies.

- Prominent AI platforms like ChatGPT and HubSpot AI are becoming valuable allies in navigating the challenges of modern business.

- The key to successfully integrating AI tools is to align them with specific business needs and workflows.

- New AI tools in 2025, such as DALL-E 3 and LALAL.AI, focus on creative potential and productivity, generating images from text and improving audio clarity respectively.

Medium

How AI Is Decoding Animal Speech & Revolutionizing Linguistics

- AI and linguistics are revolutionizing communication, including animal dialogues.

- Professor Gašper Beguš uses AI to decode animal sounds and explore human language acquisition.

- The interdisciplinary field of AI and linguistics uncovers new dimensions of communication.

- AI systems mimic human language learning processes and provide insights into language acquisition.

Medium

How AI-Driven Software Development is Transforming Industries

- The rise of AI-driven software is reshaping industries, ushering in a new era of innovation and efficiency.

- AI has the potential to actively participate in software creation, enhancing creativity and problem-solving.

- Integrating AI into the workflow requires a shift in mindset and skills, but the potential benefits make it worthwhile.

- Collaboration between humans and AI in software development is key to unlocking the transformative power of AI.

Insider

Wharton has overhauled its curriculum around AI. Here's how the business school plans to train its students for the future.

- Wharton School of the University of Pennsylvania has launched a new MBA major and undergraduate concentration in artificial intelligence (AI).

- The new AI curriculum will include classes on machine learning, ethics, data mining, and neuroscience.

- Wharton aims to address the demand for talent with necessary AI skills and provide students with a strong technical understanding as well as an awareness of AI's economic, social, and ethical implications.

- The program will equip graduates with expertise in AI, business operations, ethics, and legal frameworks, enabling them to adapt to the evolving AI-powered business landscape.

Medium

Why I Stopped Trusting Accuracy Alone

- Accuracy alone is not a reliable measure.

- Imbalanced data can skew accuracy measurements.

- Latency and user experience are important considerations.

- Precision and recall offer a more comprehensive evaluation.
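The point about imbalanced data can be illustrated with a minimal sketch. The dataset (10 positives among 1,000 samples) and the always-negative "model" are made-up assumptions for illustration, not figures from the article. The helper names are hypothetical, and returning 0.0 when a denominator is zero is a convention chosen here.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def safe_ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 if the denominator is zero (assumed convention)."""
    return numerator / denominator if denominator else 0.0


def evaluate(y_true, y_pred):
    """Compute accuracy, precision, and recall from the confusion counts."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": safe_ratio(tp + tn, tp + fp + fn + tn),
        "precision": safe_ratio(tp, tp + fp),
        "recall": safe_ratio(tp, tp + fn),
    }


# Hypothetical imbalanced dataset: 10 positives, 990 negatives.
y_true = [1] * 10 + [0] * 990
# Hypothetical model that always predicts the majority (negative) class.
y_pred = [0] * 1000

metrics = evaluate(y_true, y_pred)
print(metrics)
```

In this toy setup the accuracy is 0.99 even though the model never finds a single positive case, which recall (0.0) makes visible.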

Medium

Declaration of Human – AI Collaboration

- The declaration emphasizes the unique qualities humans possess, such as heart, will, consciousness, and choice.

- In contrast to AI, humans can ask critical questions and consider the impact of technology on various aspects of society.

- The human goal is not to compete with AI but to use it with integrity, clarity, and in the service of humanity.

- The declaration highlights the importance of the human layer in the collaboration with AI and emphasizes the need to build a team of digital collaborators.

Medium

Meta’s Llama 4: Ushering a New Era in Open AI

- Llama 4 Maverick, one of Meta's Llama 4 models, is designed with native multimodality in mind and can handle both text and images.

- There are early leaks about Llama 4 Behemoth, suggesting it will have 288 billion active parameters and a 2 trillion parameter full architecture, making it highly capable in STEM-heavy benchmarks.

- Llama 4 models, including Maverick, have been trained to understand various types of data, making them suitable for real-world tasks that involve different data modalities.

- Meta's upcoming AI developer conference, LlamaCon, scheduled for April 29, might reveal Behemoth for the first time.

Medium

Unlocking the Power of ChatGPT: Crafting Prompts That Work

- Crafting effective prompts is essential for maximizing the potential of ChatGPT.

- Specific prompts with clear instructions yield better outcomes from ChatGPT.

- Tips for improving prompts include being specific, providing context, and using examples.

- Advanced prompting techniques involve chaining instructions and using ChatGPT for various tasks.
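As a rough illustration of the tips above (be specific, provide context, use examples), the sketch below assembles a prompt string from those three ingredients. The function name `build_prompt`, the `Context:` / `Input:` / `Output:` / `Task:` labels, and the sample review text are all hypothetical choices made here, not a format described in the article or required by ChatGPT.

```python
def build_prompt(task, context="", examples=()):
    """Assemble a prompt from optional context, worked examples, and a specific task.

    The section labels are an illustrative convention, not an official format.
    """
    parts = []
    if context:
        parts.append(f"Context: {context}")
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    parts.append(f"Task: {task}")
    return "\n\n".join(parts)


prompt = build_prompt(
    task="Classify the sentiment of this review as positive or negative: 'Battery died in a day.'",
    context="You are labeling customer reviews for a phone retailer.",
    examples=[("Great screen and fast delivery.", "positive")],
)
print(prompt)
```

The resulting string can be pasted into a chat interface as-is; a vague prompt would correspond to calling `build_prompt` with only a short `task` and nothing else.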

Medium

390

Image Credit: Medium

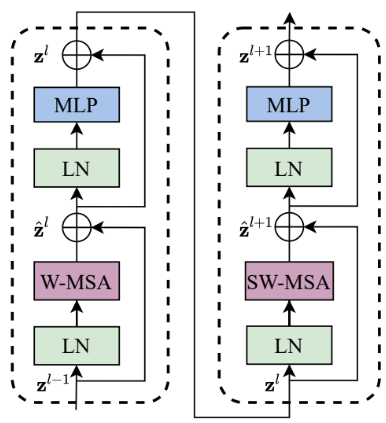

Swin Transformer in Depth: Architecture and PyTorch Implementation

- The Swin Transformer addresses two challenges in vision, the large variation in the scale of visual entities and the high resolution of images, by using hierarchical feature maps and a shifted-window scheme for computing self-attention.

- Swin Transformer's hierarchical structure enables it to model large and small visual patterns efficiently, with linear computational complexity relative to image size.

- Compared to the Pyramid Vision Transformer (PVT), Swin Transformer excels at both image classification and dense prediction tasks, making it a scalable and efficient option for high-resolution vision applications.

- The Swin Transformer architecture includes stages like Patch Partition, Linear Embedding, Swin Transformer Blocks, and Patch Merging to process high-resolution images and construct a hierarchical feature representation.

- Using a Window-based Multi-Head Self-Attention mechanism and a Shifted Windowing Scheme, the Swin Transformer improves computational efficiency and captures long-range dependencies in visual data.

- In experiments, Swin Transformer showcased superior performance in image classification, object detection, and semantic segmentation tasks when compared to previous state-of-the-art transformer-based models and convolutional neural networks.

- The PyTorch implementation of the Swin Transformer covers library imports, Patch Partition, attention mask calculation, window-based self-attention, Patch Merging, and Swin Transformer Blocks, among other components.

- Swin-S model outperformed DeiT-S and ResNet-101 in semantic segmentation, and Swin-L model achieved high mIoU on the ADE20K dataset despite having a smaller model size.

- The Swin Transformer's design choices and efficiency make it a robust backbone for various vision applications, showcasing its versatility and effectiveness in handling high-resolution visual tasks.
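The window partition and shifted-window ideas summarized above can be sketched on a toy grid. This is a minimal pure-Python illustration, not the article's PyTorch code: the 4x4 grid, the window size of 2, the shift of 1 (half the window size), and the function names are assumptions made here. The attention mask that a full implementation applies to tokens wrapped around by the shift is omitted.

```python
def window_partition(grid, window_size):
    """Split a 2D grid of tokens into non-overlapping square windows.

    Returns the windows in row-major order; each window is a flat list of tokens.
    """
    height, width = len(grid), len(grid[0])
    assert height % window_size == 0 and width % window_size == 0
    windows = []
    for top in range(0, height, window_size):
        for left in range(0, width, window_size):
            windows.append([
                grid[r][c]
                for r in range(top, top + window_size)
                for c in range(left, left + window_size)
            ])
    return windows


def cyclic_shift(grid, shift):
    """Roll the grid up and to the left by `shift` positions, wrapping around the edges."""
    height, width = len(grid), len(grid[0])
    return [
        [grid[(r + shift) % height][(c + shift) % width] for c in range(width)]
        for r in range(height)
    ]


# Toy 4x4 "feature map" whose tokens are numbered 0..15 in row-major order.
grid = [[r * 4 + c for c in range(4)] for r in range(4)]

regular = window_partition(grid, 2)                   # windows used by regular window attention
shifted = window_partition(cyclic_shift(grid, 1), 2)  # windows after the cyclic shift

print(regular[0])  # [0, 1, 4, 5]
print(shifted[0])  # [5, 6, 9, 10]
```

The first shifted window contains one token from each of the four regular windows, which is how alternating regular and shifted layers lets information flow across window boundaries while attention is still computed within fixed-size windows.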
