Deep Learning News

Medium


AI Art: A New Canvas for Creativity and Ethical Challenges

- The digital brush of AI is redefining art, offering artists new avenues to explore creativity while raising ethical questions about authenticity and ownership.

- AI art platforms such as ArtBreeder and NeuralStyle Art let artists experiment with blending styles to produce unique works.

- Artists using AI tools are questioning the originality and authenticity of their creations, raising ethical dilemmas in the art world.

Medium

How to Successfully Mine Dogecoin in 2025

- Discover if Dogecoin mining is still profitable in 2025.

- Covers the best ASIC miners, mining pools, electricity costs, and ROI calculations.

- The author's personal experience and journey into Dogecoin mining.

- The importance of selecting the right hardware for successful Dogecoin mining.

Medium

AI-Powered Scientific Tools Transforming Research

- AI is reshaping scientific research, offering groundbreaking tools that enhance data analysis and hypothesis generation.

- LLM4SD is an AI-powered tool that uses large language models to analyze scientific literature, achieving a 48% increase in accuracy for predicting molecular properties.

- Google's AI Co-Scientist on the Gemini 2.0 platform enables biomedical scientists to interact with an AI partner to develop hypotheses and research plans.

- AI-powered scientific tools are transforming the way research is conducted, pushing the boundaries of human capability and accelerating discoveries.

Medium

The Ultimate Dogecoin Price Prediction for 2025

- Dogecoin's future in 2025 sparks varied predictions, with potential highs and lows reflecting its unpredictable journey.

- Analysts offer a wide range of forecasts, with some predicting a rise to $0.358695 by August 2025, while others suggest it might reach $0.449 in May 2025.

- Dogecoin's journey is as much about the community as it is about the currency itself.

Medium

AI Computer Vision Market Explodes with Technological Advancements

- AI computer vision is driving significant growth in various industries, with a projected CAGR of 275% by 2031.

- Technological advancements, such as improved hardware and machine learning algorithms, are fueling the growth of AI computer vision.

- Implementing AI vision solutions faces challenges relating to regulatory hurdles, ethical concerns, data privacy, and potential job displacement.

- Despite challenges, the demand for automation and data analytics continues to drive the adoption of AI computer vision in critical sectors.

TechBullion

Akul Dewan, Senior Product Architect at Akamai Technologies Designing Critical AI/ML Security Applications, to Judge Data Science and Deep Learning Boot Camps at The Erdős Institute in Spring 2025

- Akul Dewan, Senior Product Architect at Akamai Technologies, is praised for his significant contributions to cybersecurity products with applications in national defense sectors.

- With a Master's in Artificial Intelligence, Dewan leads AI teams and developments for SaaS platforms while pioneering AI and Generative AI technologies.

- Dewan holds two US patents, with three more pending, and will judge the Data Science and Deep Learning Boot Camps at The Erdős Institute in Spring 2025.

- Dewan's innovations include technologies for tracking back-office agent productivity and predictive AI models for identifying attrition patterns.

- His work at Akamai involves designing AI/ML systems for cybersecurity, including an advanced machine learning project to optimize Web Application Firewall configurations.

- Dewan emphasizes separation of responsibilities in AI pipeline development, advocating for collaboration between AI researchers and software engineers.

- His insights on the future of AI/ML foresee increasing adoption in industries, emphasizing the necessity of AI/ML knowledge for all software roles.

- Dewan's mentoring and judging roles at The Erdős Institute aim to bridge the gap between academia and industry, preparing PhD candidates for the demands of the job market.

- He predicts a surge in government regulations for AI/ML practices and urges PaaS and IaaS leaders to adopt these guidelines for organizational systems.

Medium

The Ancient Algorithm That’s Quietly Powering Modern AI

- The ancient Greeks had been tackling optimization problems since at least the 3rd century BCE, setting the foundation for modern AI.

- Gradient descent, an algorithm rooted in ancient mathematical principles, plays a crucial role in how neural networks learn.

- It is based on the intuitive idea of following the steepest path and has been used in various optimization techniques throughout history.

- The ancient Greek concept of the 'method of exhaustion' and the continuous refinement of approximations is an early form of gradient descent.
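The "follow the steepest path" idea in the summary above can be illustrated with a minimal sketch. The function name `gradient_descent`, the learning rate, the step count, and the example objective f(x) = (x - 3)^2 are all assumptions chosen for illustration; they do not come from the article.

```python
from typing import Callable


def gradient_descent(
    grad: Callable[[float], float],
    x0: float,
    learning_rate: float = 0.1,  # assumed step size, chosen for illustration
    steps: int = 100,            # assumed number of iterations
) -> float:
    """Repeatedly step against the gradient to approach a local minimum."""
    x = x0
    for _ in range(steps):
        # Move in the direction of steepest descent.
        x = x - learning_rate * grad(x)
    return x


# Hypothetical objective: f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
minimum = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
print(minimum)  # approaches 3, the minimizer of f
```

Neural network training applies the same update to millions of parameters at once, with the gradient computed by backpropagation rather than written by hand.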

Medium

Why 1 + 1 Might Not Equal 2

- Structured Paradox Computation challenges the assumption that 1 + 1 always equals 2.

- In relational systems, like consciousness and identity, '1' is contextual and not a fixed unit.

- SPC models relational collapse, where '1 + 1' may converge into a paradox field.

- The significance of this concept is that AI evolves by encountering contradictions and realizing that '1 + 1' is convergence, not accumulation.

Medium

The Ultimate Guide to Choosing the Best Machine Learning Algorithm

- Choosing the best machine learning algorithm is crucial for successful data-driven projects.

- Understanding the basics of machine learning and the problem at hand is essential for algorithm selection.

- Considerations such as data type, dataset size, and problem type (classification, regression, or clustering) play a vital role in algorithm selection.

- The right algorithm can transform raw data into actionable insights, providing accuracy and clarity.

Medium

Why Are Nations Afraid of Each Other Today? A Scientific Look into Modern Global Fears

- The balance of nuclear power and the existence of nuclear weapons result in the fear of Mutual Assured Destruction (MAD).

- Fear of AI and cyber warfare grows as conflicts extend beyond physical borders into cyberspace.

- Economic inequality and trade wars create tensions and pressures that smaller countries perceive as threats.

- Climate change and resource conflicts lead to fears of future wars for water, food, and habitable land.

- Declining trust in global institutions like the UN and WHO further fuels fear and suspicion.

Medium

Manus AI Review: How AI Automation Transformed My Workflow

- Manus AI is an AI automation tool that has the potential to transform workflow and boost productivity.

- The tool is versatile and can process text, images, and code, making it applicable in various sectors.

- Manus AI integrates seamlessly with other tools, fetching real-time data and automating workflows.

- Despite initial skepticism, Manus AI has proven reliable and consistently delivers accurate results.

Medium

4 Ways Agentic AI Will Impact the Future of Work

- Agentic AI is already impacting the future of work, transforming how we live, work, and think.

- Agentic AI can handle mundane tasks, allowing humans to focus on high-impact, deep work.

- Agentic AI is creating new job roles that involve humans and AI working together.

- Agentic AI requires a new skillset that is not typically taught in formal education.

Medium

Bridging AI and Neuroscience: Insights into Natural Language Processing

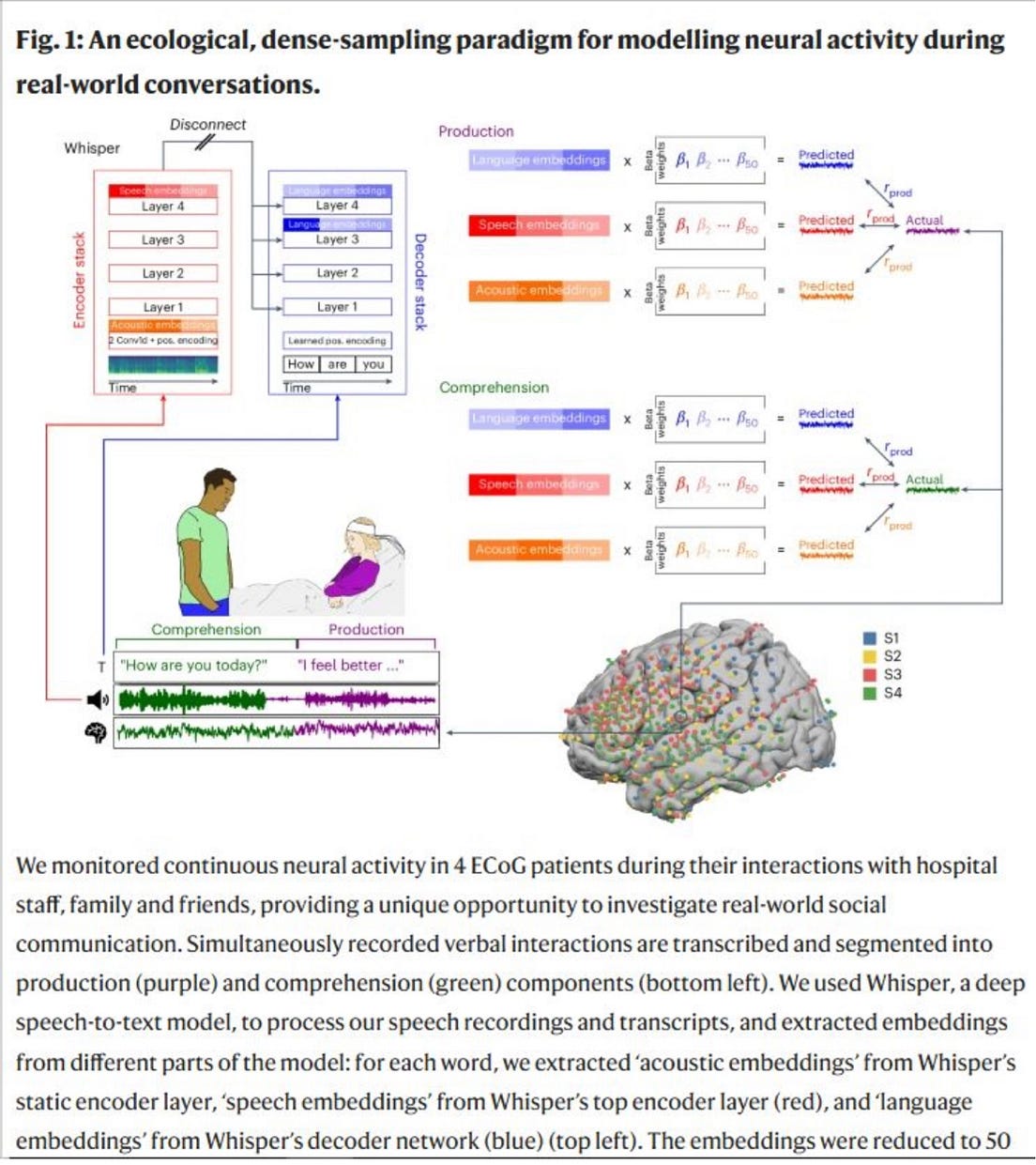

- Researchers introduce a computational framework that connects acoustic signals, speech, and word-level linguistic structures to study the neural basis of real-life conversations.

- Using electrocorticography (ECoG) and the Whisper model, they map the model's embeddings onto brain activity, revealing alignment with the brain's cortical hierarchy for language processing.

- The study demonstrates the temporal sequence of language-to-speech and speech-to-language encoding captured by the Whisper model, outperforming symbolic models in capturing neural activity associated with natural speech and language.

- The integration of AI models like Whisper offers new avenues for understanding and potentially enhancing human communication through technology.

Medium

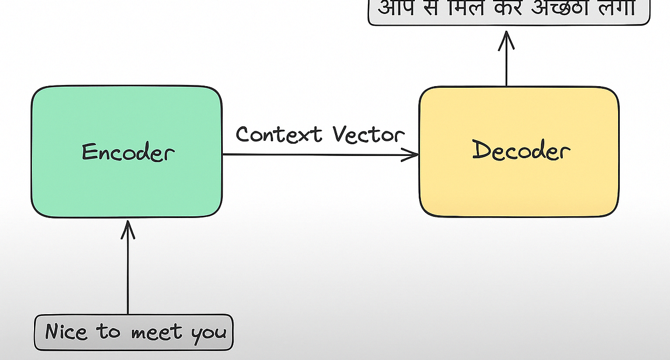

From Input to Output: Demystifying the Encoder-Decoder Architecture

- The Encoder-Decoder architecture is a two-part neural design used in tasks like machine translation, speech recognition, and text summarization.

- Predecessors like RNNs struggled with tasks where output structure did not directly align with inputs, leading to the development of the Encoder-Decoder model.

- The Encoder-Decoder framework comprises an encoder that processes the input sequence and produces a context vector, and a decoder that generates the output sequence based on this vector.

- During training, the decoder uses teacher forcing; during inference, it relies on its own previous predictions to generate the output.

- The architecture allows handling variable-length sequences and has been pivotal in NLP advancements like machine translation and text summarization.

- The Encoder-Decoder model laid the foundation for attention mechanisms and transformer models, enhancing memory handling and flexibility.

- The introduction of the attention mechanism addressed the context bottleneck by enabling the decoder to focus on relevant parts of the input.

- Transformers, a product of this evolution, have revolutionized AI models like GPT, BERT, and T5, replacing recurrence with parallelism and scale.

- By separating comprehension (encoder) and generation (decoder), the architecture brought structure and scalability to tasks like translation and summarization.

- The Encoder-Decoder architecture sparked a new era in deep learning, revamping how sequences are processed and generated in modern AI models.
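The difference between teacher forcing and inference-time decoding described above can be sketched with a toy example. Everything here is an assumption for illustration: `encode` and `decode_step` are simple arithmetic stand-ins for trained neural networks, and the token ids `START` and `END` are hypothetical.

```python
from typing import List

START, END = 0, 1  # hypothetical special token ids


def encode(tokens: List[int]) -> int:
    """Toy encoder: compress the whole input into one context value."""
    return sum(tokens) % 10


def decode_step(context: int, prev_token: int) -> int:
    """Toy decoder step: predict the next token from context and previous token."""
    return (context + prev_token) % 10


def decode_teacher_forced(context: int, target: List[int]) -> List[int]:
    """Training-style decoding: each step is fed the ground-truth previous token."""
    inputs = [START] + target[:-1]
    return [decode_step(context, prev) for prev in inputs]


def decode_autoregressive(context: int, max_len: int = 5) -> List[int]:
    """Inference-style decoding: each step is fed the decoder's own last prediction."""
    output: List[int] = []
    prev = START
    for _ in range(max_len):
        prev = decode_step(context, prev)
        if prev == END:
            break
        output.append(prev)
    return output


context = encode([2, 3, 4])
print(decode_teacher_forced(context, [9, 8, 7]))  # [9, 8, 7]
print(decode_autoregressive(context))             # [9, 8, 7, 6, 5]
```

Note that the single `context` value is the only information the decoder receives about the input. That is the bottleneck the attention mechanism was introduced to relieve.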

Medium

Best Data Science with Generative AI Online Training

- Data science professionals have become essential in turning data into valuable insights, making it a high-demand career with attractive salaries.

- Combining data science with generative AI allows for the creation of text, images, simulations, and predictive responses, revolutionizing various industries.

- A comprehensive data science course provides training in Python, SQL, machine learning, deep learning, NLP, and generative models.

- The course emphasizes project-based learning to enhance practical skills, and it is suitable for fresh graduates, professionals, and developers.
