Neural Networks News

Medium

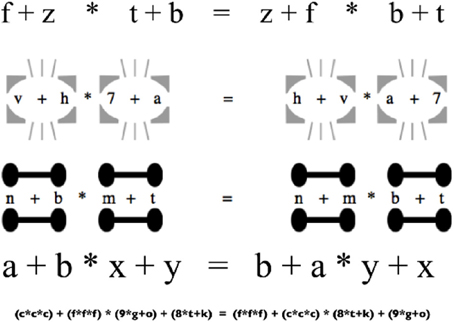

Blending Neural Networks with Symbolic Knowledge

- Blending neural networks with symbolic knowledge enhances AI systems by combining data-driven learning with structured knowledge.

- Neural networks excel at pattern recognition but often lack true understanding and face challenges in generalization and transparency.

- Knowledge graphs offer structured knowledge that can be reasoned with, providing context and relationships.

- The synergy of neural networks and knowledge graphs addresses limitations of data-driven AI, enhancing reasoning and perception.

- Hybrid AI models combine the strengths of neural networks in perception with knowledge graphs in reasoning, creating powerful AI systems.

- The integration of neural networks and knowledge graphs is being applied in various sectors and shows promising results.

- Challenges in integrating these two paradigms include architectural design complexities and knowledge graph maintenance.

- Advancements in automated knowledge graph construction and sophisticated reasoning techniques are paving the way for more seamless integration.

- The future of AI lies in weaving neural networks and knowledge graphs together to create more intelligent and trustworthy systems.

- This hybrid approach unlocks a new generation of AI that mirrors human understanding and interaction with the world.

Medium

AI Strategy Through the Lens of Network Power

- AI strategy is viewed through the lens of networks, emphasizing the importance of connections and empowerment.

- The focus shifts from what AI can do to what it connects and who it empowers.

- The effectiveness of AI lies in the network it operates within.

- One example describes a retail organization that benefited from AI demand forecasting only once its network was connected.

- The key was not just having the insight but also leveraging the network effectively.

- The true value emerged when people collaborated within the connected network.

- In a connected era, success is attributed to those who understand and operate within networks.

- AI should be allowed to excel at its functions, but it's crucial for individuals to leverage and integrate it effectively.

Hackernoon

Brainwaves to Bytes: How AI Networks are Rewiring the Mind-Machine Interface

- Neural interfaces are enabling the control of devices with brainwaves, converting them into digital instructions.

- Brainwaves are electrical signals from neurons categorized into delta, theta, alpha, beta, and gamma waves, each representing different cognitive states and intentions.

- Neural interfaces capture brainwaves through non-invasive methods like EEG using scalp electrodes.

- Invasive methods, like Neuralink's implantation of threads in the brain, offer higher resolution at the expense of surgery.

- AI algorithms process raw brainwave signals with techniques like filtering, feature extraction, and machine learning to decode intentions.

- Decoded brainwave patterns control software or hardware interfaces in real-time, enabling tasks like cursor movement, speech synthesis, and prosthetic control.

- Neuralink's system streams neural signals with minimal latency for computer software control, demonstrating the current state of brain-machine interface technology.

- Challenges persist in adapting AI models to signal variability, ensuring data privacy, maintaining implant stability, and addressing ethical concerns.

- Future implications include aiding the treatment of neurological diseases, enhancing human-computer interaction, and expanding accessibility and healthcare.

- The brain-computer revolution, driven by AI and innovative platforms like Neuralink, signifies a new era where thoughts directly interface with the digital world.
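
The filtering and feature-extraction steps described above can be sketched with a small band-power calculation. This is only an illustration, not the pipeline of any real device: the band edges are commonly quoted approximate values (conventions vary), the signal is synthetic, and the function names are hypothetical.

```python
import cmath
import math

# Approximate, commonly quoted band edges in Hz (an assumption; sources differ).
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def band_powers(signal, sample_rate):
    """Sum spectral power per band using a naive discrete Fourier transform."""
    n = len(signal)
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2):
        freq = k * sample_rate / n
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)
        )
        power = abs(coeff) ** 2
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                powers[name] += power
    return powers


def dominant_band(signal, sample_rate):
    """Return the name of the band holding the most power (a crude feature)."""
    powers = band_powers(signal, sample_rate)
    return max(powers, key=powers.get)


# Synthetic one-second "recording": a pure 10 Hz sine sampled at 128 Hz.
sample_rate = 128
synthetic = [math.sin(2 * math.pi * 10 * t / sample_rate) for t in range(sample_rate)]
print(dominant_band(synthetic, sample_rate))
```

A real decoder would feed features like these into a trained model rather than picking the largest band directly.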

Medium

The Rise of Neural Networks: Unlocking the Power of Deep Learning

- Machine learning initially focused on teaching computers to learn from data using manual rules and statistical techniques.

- Neural networks, inspired by the human brain, revolutionized AI by enabling complex tasks like image recognition through deep learning.

- Modern deep learning systems use neural networks to identify patterns in large datasets with minimal human input.

- Neural networks consist of layers of neurons that recognize data patterns and relationships through interconnected computations.

- The perceptron, a fundamental unit in neural networks, processes inputs through weight multiplication and activation functions.

- Deep learning handles complex problems by using networks with multiple hidden layers that learn intricate data patterns.

- Architectures like CNNs and RNNs handle spatial or temporal data through specialized components and non-linear activation functions.

- Deep learning training adjusts weights via backpropagation and optimization algorithms like gradient descent.

- Deep learning applications have reshaped industries, with companies using it for search engines, recommendations, and self-driving technology.

- Despite its successes, deep learning faces challenges like interpretability, robustness, and scalability.

- The outlook for deep learning is optimistic, helped by advances in hardware, open datasets, and AI accessibility.
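
The weighted-sum-plus-activation computation mentioned above can be sketched as a single artificial neuron and a small layer. This is a minimal sketch under stated assumptions: the sigmoid activation is chosen for illustration (the classic perceptron uses a step function), and the weights and function names are made up.

```python
import math


def sigmoid(z):
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Multiply inputs by weights, add the bias, then apply the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def layer(inputs, weight_rows, biases):
    """A layer is several neurons reading the same inputs."""
    return [neuron(inputs, row, b) for row, b in zip(weight_rows, biases)]


# Two inputs -> hidden layer of two neurons -> one output neuron.
# All weights below are arbitrary illustrative values.
hidden = layer([0.5, -1.0], [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.1])
output = neuron(hidden, [1.5, -0.5], 0.2)
print(output)
```

Stacking more calls to `layer` gives the "multiple hidden layers" the summary refers to; training would then adjust the weights rather than fixing them by hand.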

Medium

Struggling to Choose the Right Machine Learning Model? Here Are 7 Practical Tips to Help You Decide

- The article offers seven practical tips for choosing among the many available machine learning models.

- Identify the problem type (classification, regression, clustering, and so on) before selecting a model.

- Consider the dataset's structure, especially for high-dimensional data, to avoid overfitting and poor generalization.

- Choose models suited to your dataset's feature characteristics for improved performance.

- Factor in time, computing power, and resources available when selecting a model for efficiency and effectiveness.

- Focus on generalization rather than just training accuracy to ensure your model performs well on unseen data.

- Evaluate performance with cross-validation, regularization, and monitoring metrics like validation loss and test accuracy.

- Define success metrics based on your problem to guide model selection and training.

- Consider different evaluation strategies like probability scores, precision, recall, or ranking quality based on the problem.

- The article concludes with an invitation for feedback, suggests upcoming topics, and emphasizes the importance of understanding model selection.
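
Two of the evaluation ideas above, cross-validation and precision/recall, can be sketched in a few lines of plain Python. This is an illustrative sketch, not the article's code: the function names are hypothetical, and in practice a library such as scikit-learn would normally handle both.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size


def precision_recall(y_true, y_pred):
    """Compute precision and recall for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Each sample lands in the validation set exactly once across the folds.
for train, validation in k_fold_indices(10, 3):
    print(len(train), len(validation))
```

Averaging a metric over the folds gives an estimate of performance on unseen data, which is the generalization the tips emphasize over training accuracy.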

Hackernoon

What If Your LLM Is a Graph? Researchers Reimagine the AI Stack

- The global knowledge graph market is projected to grow from $1.06 billion in 2024 to $6.93 billion by 2030, a CAGR of 36.6%.

- Market signals indicate a growing adoption of graph technology in various industries.

- The graph landscape is evolving rapidly, with new graph database engines, Graph RAG variants, and applications at scale.

- Building and evaluating knowledge graphs as durable assets is emphasized, highlighting the importance of understanding their value.

- Knowledge graphs are considered organizational CapEx, contributing to data governance and AI applications.

- Knowledge graphs now sit behind widely used products such as the Samsung Galaxy S25 and ServiceNow, in both cases through acquisitions.

- Graph technology is crucial for pragmatic AI, providing the essential truth layer for trustworthy AI adoption.

- Various Graph RAG variants like OG-RAG, NodeRAG, and GFM-RAG are emerging, enhancing retrieval-augmented generation for LLMs.

- Graph database engines are evolving, and advancements in standardization and performance are observed in the market.

- New capabilities in graph analytics and visualization are introduced, along with developments in Graph Foundation Models and LLM applications.
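
The market projection quoted above can be sanity-checked with the standard compound annual growth rate formula. This is a back-of-the-envelope sketch: it assumes six compounding years (2024 to 2030) and uses the rounded endpoints from the summary, so a small gap to the quoted 36.6% is expected.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Endpoints in billions of dollars, taken from the summary above.
implied = cagr(1.06, 6.93, 2030 - 2024)
print(f"Implied CAGR: {implied:.1%}")
```

The implied rate comes out between 36 and 37 percent, which is consistent with the quoted figure.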

Medium

How Does ChatGPT Work? A Beginner-Friendly Overview

- ChatGPT is an AI language model developed by OpenAI that works using the GPT (Generative Pre-trained Transformer) architecture.

- The model is pre-trained with vast amounts of data from various sources like books, websites, and forums to generate human-like responses.

- ChatGPT is built on a Transformer, whose layers of attention mechanisms let it focus on the most relevant parts of a sentence.

- The model undergoes fine-tuning with supervised learning to improve responses and avoid offensive or incorrect outputs.
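
The attention mechanism mentioned above can be illustrated with scaled dot-product attention for a single query. This is a toy sketch with made-up two-dimensional vectors and hypothetical function names; it shows the general technique, not ChatGPT's actual implementation.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Weight each value by how well its key matches the query."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys
    ]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# The query matches the first key, so the first value gets the larger weight.
weights, output = attend(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights, output)
```

In a full Transformer, queries, keys, and values are learned projections of the token embeddings, and many such attention heads run in parallel in every layer.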

Medium

How Does AI Learn?

- Machine Learning (ML) is a core part of AI, allowing machines to learn from data instead of being manually programmed.

- AI learning is akin to teaching a child through examples rather than instructions.

- To teach AI to recognize cats in photos, examples of photos labeled “cat” and “not cat” are shown to enable learning of patterns like pointy ears, fur, and whiskers.

- AI uses neural networks, mimicking the way human brains work with layers of neurons working together to learn from data patterns.

Medium

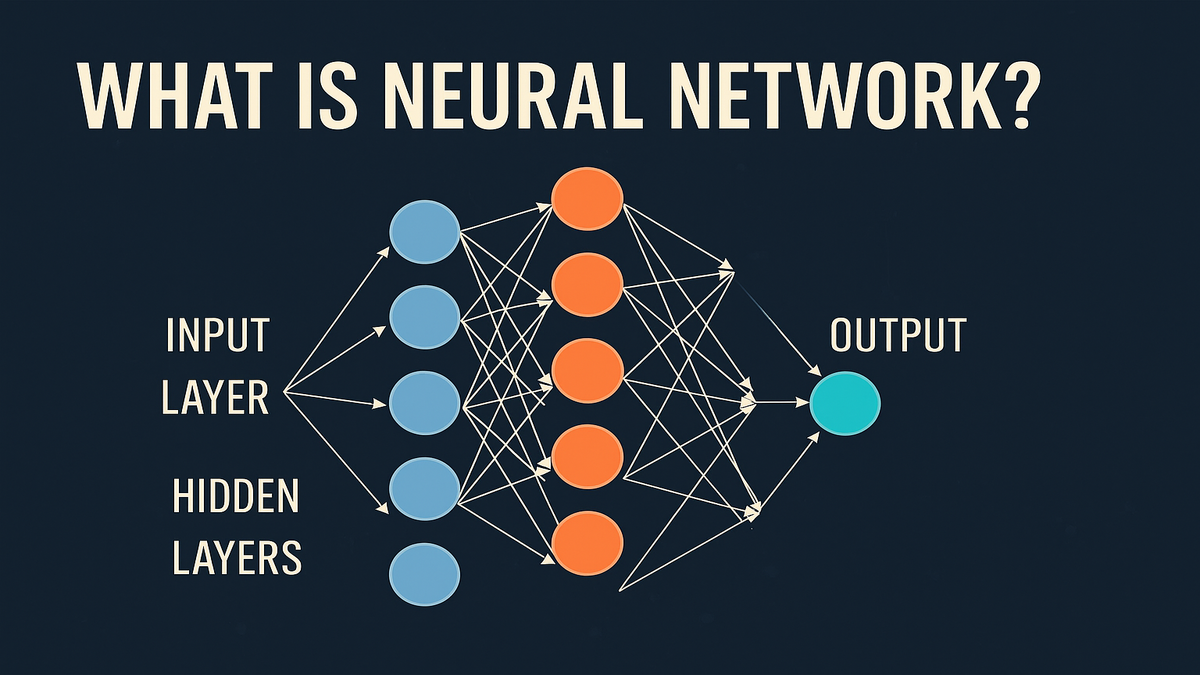

What Is Neural Network? Simplified for Everyone

- A neural network is a set of algorithms modeled after the human brain, allowing machines to learn from data, recognize patterns, and make decisions.

- Neural networks are crucial in modern AI applications like voice recognition, self-driving cars, and medical diagnostics.

- They consist of layers where data enters, processes through mathematical operations in hidden layers, and gives a final result.

- Neural networks learn through training by adjusting internal settings to reduce errors between their guess and the correct answer.
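
The idea of "adjusting internal settings to reduce errors" can be sketched with gradient descent on the simplest possible model, a single weight and bias. The data, learning rate, and epoch count below are illustrative assumptions; real networks apply the same update to many weights via backpropagation.

```python
def train_linear(samples, learning_rate=0.05, epochs=500):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        # Move each setting a small step in the direction that lowers the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1; training should recover those values.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear(data)
print(w, b)
```

Each pass compares the model's guess with the correct answer and nudges `w` and `b` accordingly, which is the training loop the summary describes in words.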

Semiengineering

Connecting AI Accelerators

- Experts discussed the various ways AI accelerators are being applied today.

- Efforts are being made in photonics to connect elements at a chiplet level or between NPUs for parallelization.

- Connectivity and data movement pose challenges in connecting accelerators.

- Arm is involved in open standards to ensure proper specs and interfaces for AI accelerators.

- The industry is domain-specific, driving the adoption of different interconnect technologies.

- Future scenarios include supercomputers in cars and smartphones, with accelerated decision-making systems.

- Uncertainty remains in how chiplets will plug together and how AI evolution will impact decision-making.

- Developing standards and hardware in parallel makes it hard to ensure that designs are fully thought through and that security measures are in place.

- Questions such as how well neural networks fit a given accelerator, and what the implications are for human life, remain central to AI accelerator development.

- The industry faces challenges in adapting to rapid advancements in AI technology.

Hackernoon

PreCorrector Takes the Lead: How It Stacks Up Against Other Neural Preconditioning Methods

- The article discusses a novel approach, PreCorrector, in preconditioner construction, utilizing neural networks to outperform classical numerical preconditioners.

- The authors note the challenge of selecting effective preconditioners, since the choice depends on the specific problem and requires theoretical and numerical understanding.

- Li et al. introduced a method using GNN and a new loss function to approximate matrix factorization for efficient preconditioning.

- Rudikov et al. proposed FCG-NO, which combines neural operators with the conjugate gradient method for solving PDEs and is reported to be computationally efficient.

- Kopanicáková and Karniadakis proposed hybrid preconditioners combining DeepONet with iterative methods for solving parametric equations.

- The HINTS method by Zhang et al. combines relaxation methods with DeepONet for solving differential equations effectively and accurately.

- PreCorrector aims to reduce κ(A) by developing a universal transformation for sparse matrices, showing superiority over classical preconditioners on complex datasets.

- Future work includes theoretical analysis of the loss function, variations of the target objective, and extending PreCorrector to different sparse matrices.

- The article provides references to related works on iterative methods, neural solvers, and preconditioning strategies for linear systems.

- The appendix details the training data, additional experiments with the ICt(5) preconditioner, and the correction coefficient α.

- The paper is available on arXiv under a CC BY 4.0 license, which permits sharing with attribution.

Hackernoon

PreCorrector Proves Its Worth: Classical Preconditioners Meet Their Neural Match

- A new approach called PreCorrector has been developed to enhance classical preconditioners for linear systems using neural networks.

- PreCorrector outperforms classical preconditioners like IC(0) and ICt(1) in constructing better preconditioners for complex linear systems.

- The neural design of PreCorrector improves the trade-off between efficiency and memory, achieving speed-ups over traditional preconditioners.

- PreCorrector generalizes well across different grids and datasets, maintaining quality when transferred to new ones at inference time.

Hackernoon

Teaching Old Preconditioners New Tricks: How GNNs Supercharge Linear Solvers

- Large linear systems in computational science often use iterative solvers with preconditioners.

- A novel approach using graph neural networks (GNNs) for preconditioner construction outperforms classical methods and neural network-based preconditioning.

- The approach uses GNNs to learn a correction to well-established preconditioners from linear algebra.

- Extensive experiments demonstrate the superiority of the proposed approach and loss function over classical preconditioners.
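
To show where a preconditioner enters an iterative solver, here is a minimal preconditioned conjugate gradient sketch. It uses a Jacobi (diagonal) preconditioner purely as a simple stand-in; it is not the GNN-based method or the incomplete Cholesky preconditioners discussed above, and the small matrix is made up for illustration.

```python
def mat_vec(a, x):
    """Dense matrix-vector product."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def jacobi_pcg(a, b, tol=1e-10, max_iter=100):
    """Solve a x = b for symmetric positive definite a; return (x, iterations)."""
    n = len(b)
    inv_diag = [1.0 / a[i][i] for i in range(n)]  # Jacobi preconditioner M^-1
    x = [0.0] * n
    r = list(b)  # residual b - a x for the zero initial guess
    z = [d * ri for d, ri in zip(inv_diag, r)]  # preconditioned residual
    p = list(z)
    rz = dot(r, z)
    if rz == 0.0:
        return x, 0
    for iteration in range(1, max_iter + 1):
        ap = mat_vec(a, p)
        alpha = rz / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        if dot(r, r) ** 0.5 < tol:
            return x, iteration
        z = [d * ri for d, ri in zip(inv_diag, r)]
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter


# Small symmetric positive definite system, chosen only for illustration.
A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
rhs = [1.0, 2.0, 3.0]
solution, iterations = jacobi_pcg(A, rhs)
print(solution, iterations)
```

A learned preconditioner would replace the `inv_diag` scaling with the application of a better approximation to the inverse of `A`, with the aim of cutting the number of iterations.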

Medium

Regularisation: A Deep Dive into Theory, Implementation and Practical Insights

- The blog delves deep into regularisation techniques, providing intuitions, math foundations, and implementation details to bridge theory and code for researchers and practitioners.

- High bias leads to oversimplification and underfitting, resulting in poor performance on both training and test data.

- High variance causes overfitting: the model performs well on training data but fails to generalize to unseen data.

- The bias-variance tradeoff shows the inverse relationship between bias and variance.

- A good model finds a balance between bias and variance for optimal performance on unseen data.

- Bias and underfitting are related but not interchangeable concepts, as are variance and overfitting.

- Different regularisation techniques like L1, L2, and Elastic Net aim to mitigate overfitting by penalizing large weights.

- Regularisation helps find the sweet spot between overfitting and underfitting in models.

- The blog explains how to apply L1, L2, and Elastic Net regularisation in practice, with implementation details.

- The blog also touches upon Dropout, Early Stopping, Max Norm Regularisation, Batch Normalisation, and Noise Injection as regularisation techniques.
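
The L1, L2, and Elastic Net penalties named above can be written as small functions that are added to a model's loss. This is a sketch of one common formulation, not necessarily the blog's: scaling conventions differ between libraries (for example, some put a factor of 1/2 on the L2 term), and the parameter names `lam` and `l1_ratio` are assumptions.

```python
def l1_penalty(weights, lam):
    """L1 (lasso) penalty: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 (ridge) penalty: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def elastic_net_penalty(weights, lam, l1_ratio):
    """Blend of L1 and L2; l1_ratio = 1 is pure L1, l1_ratio = 0 is pure L2."""
    l1_term = sum(abs(w) for w in weights)
    l2_term = sum(w * w for w in weights)
    return lam * (l1_ratio * l1_term + (1.0 - l1_ratio) * l2_term)


def regularised_loss(data_loss, weights, lam, l1_ratio=0.5):
    """Total loss = data loss + penalty, so large weights cost more."""
    return data_loss + elastic_net_penalty(weights, lam, l1_ratio)


weights = [1.0, -2.0]
print(l1_penalty(weights, 0.1), l2_penalty(weights, 0.1))
```

Because the penalty grows with the size of the weights, minimizing the total loss pushes the model toward smaller weights, which is how these methods mitigate overfitting.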

Medium

Types of AI: ANI, AGI, and ASI Explained Simply

- ANI (Artificial Narrow Intelligence) is designed to perform a specific task well, such as Siri, Google Translate, Netflix recommendations, and Gmail spam filters.

- AGI (Artificial General Intelligence) aims to think, learn, and reason like humans, but it is currently just a future vision.

- ASI (Artificial Superintelligence) is a theoretical AI surpassing human intelligence in all aspects, still in the realm of science fiction.

- Understanding these AI types - ANI, AGI, and ASI - prepares us for future opportunities and challenges in the AI landscape.
