Cloud News

Dev

🚀 Amazon ECR Now Supports IPv6 for Private and Public Registries

- Amazon ECR now supports IPv6 for both private and public registries, enabling users to pull container images over IPv6 using AWS SDK or Docker/OCI CLI.

- The new dual-stack endpoints automatically resolve to IPv4 or IPv6 addresses based on client/network configuration, simplifying transitions away from NAT-based networking.

- IPv6 support offers virtually unlimited IP addresses, improved routing efficiency, easier scaling across container fleets, and better compatibility with modern cloud-native tools.

- To get started, users need to update their endpoint URL, ensure IPv6 compatibility in their VPC/network, and continue using Docker/OCI CLI or AWS SDK without changes in command syntax.
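
For the network-compatibility step above, one quick check is to see which address families an endpoint hostname resolves to from the client's network. This is a minimal sketch, not taken from the article: `classify_families` and `resolve_families` are hypothetical helper names, and the hostname you pass in is whatever endpoint you are actually using.

```python
import socket


def classify_families(addrinfo):
    """Map getaddrinfo() result tuples to a sorted list of 'IPv4'/'IPv6' labels."""
    labels = {socket.AF_INET: "IPv4", socket.AF_INET6: "IPv6"}
    return sorted({labels[fam] for fam, *_ in addrinfo if fam in labels})


def resolve_families(hostname, port=443):
    """Resolve a hostname and report which IP families it returns addresses for."""
    infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    return classify_families(infos)
```

Calling `resolve_families("<your-registry-endpoint>")` from inside the VPC shows whether the client sees IPv6 addresses. A result without `"IPv6"` suggests the client, resolver, or endpoint is still IPv4-only.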

Dev

Scaling and troubleshooting Amazon EKS just got easier with MCP on Anthropic Claude

- Troubleshooting Amazon EKS just got easier with MCP on Anthropic Claude, simplifying Kubernetes operations for Platform/DevOps Engineers.

- The transition from VMs to containers and microservices makes it harder to maintain operational excellence and manage Kubernetes upgrades.

- Amazon EKS Auto Mode offers improved functionality over AWS Fargate, ensuring clusters and components are up to date with the latest patches.

- EKS Auto Mode simplifies Kubernetes operations, but applications still need to be tested for compatibility with upcoming Kubernetes versions.

- Integrating an MCP server with Amazon EKS Auto Mode can streamline troubleshooting processes using Claude Desktop UI, reducing operational overhead.

- The idea of connecting an MCP server to EKS for troubleshooting purposes could enhance operational efficiency for Engineers.

- With tools like Claude, running kubectl commands is automated, eliminating the need to search for commands and switches on CNCF webpages.

- Using tools like MCP could potentially reduce the reliance on certifications like CKA and CKAD for Kubernetes administrators.

- A GitHub repo by Alexi-led showcases an impressive project integrating MCP with EKS Auto Mode, making troubleshooting and scaling seamless.

- Automated processes like scaling with Karpenter through Claude demonstrate the potential of simplifying Kubernetes management with integrated tools.

Hackernoon

Tired of Broken Chatbots? This AI Upgrade Fixes Everything

- Function calling in AI allows models to understand when to use external tools or services to complete tasks, bridging the gap between human language and computer systems.

- Before function calling, AI struggled with generating exact API calls or parsing responses, leading to unreliable interactions and inaccurate results.

- Function calling makes AI more reliable, capable of accessing real-time data, controlling devices, and performing calculations, enhancing user experience with natural language requests.

- Function calling is like giving an AI access to a toolbox: it can execute a function such as making coffee from the parameters provided, much as a barista works from an order.

- The core mechanics of function calling involve structured function definitions, intent recognition, parameter extraction, response formatting, and context awareness for seamless interactions.

- Scaffolding code for function calling involves defining functions, implementing them, processing messages, handling function calls, and incorporating responses into natural language conversations.

- A practical example of function calling in action is demonstrated through a weather checking function using Azure OpenAI, where the AI recognizes the need for weather data and calls the appropriate function to provide real-time information.

- Function calling with OpenAI differs slightly from Azure OpenAI in client initialization and model referencing, showcasing variations in implementation strategies between the two platforms.

- The transformative nature of function calling allows AI to book appointments, access real-time data, control devices, process payments, and perform various actions, augmenting its capacity to interact with the physical world.

- Function calling revolutionizes AI, enabling it to go beyond passive interactions and engage actively with users, setting the stage for a future where natural language becomes a universal interface for digital systems.
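
The mechanics summarized above (a structured function definition, parameter extraction, and a formatted response) can be sketched without any vendor SDK. This is an illustration under assumptions rather than the article's code: `get_weather` returns fixed stand-in data, and the tool-call shape (a `name` plus a JSON string of `arguments`) follows the OpenAI-style convention.

```python
import json

# Structured definition the model would see (JSON Schema for the parameters).
TOOLS = [{
    "name": "get_weather",
    "description": "Return current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}]


def get_weather(city, unit="celsius"):
    # Stand-in implementation with fixed data; a real one would call a weather API.
    return {"city": city, "temperature": 21, "unit": unit}


# Maps the function names the model may request to local callables.
REGISTRY = {"get_weather": get_weather}


def handle_tool_call(call):
    """Run the function the model asked for and return the result as a JSON string."""
    func = REGISTRY.get(call["name"])
    if func is None:
        return json.dumps({"error": f"unknown function: {call['name']}"})
    try:
        args = json.loads(call["arguments"])  # arguments arrive as a JSON string
        return json.dumps(func(**args))
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON or wrong parameter names are reported back, not raised.
        return json.dumps({"error": str(exc)})
```

The returned string is what would be appended to the conversation as the tool result, so the model can phrase the final answer in natural language.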

Hackernoon

This One Python Tool Fixed My AI's Function-Calling Chaos

- Using Pydantic for type validation, JSON Schema for structural definition, and proper error handling can create robust applications with consistent AI outputs, reducing the parsing complexity.

- Inconsistent AI function outputs can lead to errors and edge cases, making it crucial to ensure precise data structure alignment.

- The tension between natural language flexibility and structured data rigidity can be resolved by implementing a validation layer like Pydantic.

- Pydantic bridges the gap between dynamic data and strict function expectations by enforcing type annotations and validating data formats.

- Pydantic helps convert AI outputs to expected formats, handling discrepancies and ensuring consistency in data processing.

- Implementing Pydantic validation in function calling workflows significantly reduces error rates and accelerates development by eliminating the need for defensive code.

- Using Pydantic models to define JSON Schema for AI understanding and validating AI outputs streamlines the input-output pipeline and enhances data reliability.

- The process of converting natural language inputs to validated data outputs through Pydantic validation demonstrates a reliable framework for consistent function outputs.

- Pydantic validation ensures that data arriving at functions matches expectations, reducing errors and increasing development efficiency.

- The pattern of using Pydantic with AI function calling can be applied in various domains like customer support, calendar management, e-commerce, healthcare, and finance for structured data extraction.

- AI function calling with strict output validation acts as a bridge between natural language complexity and system orderliness, enabling reliable, structured data processing.
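
The validation layer described above might look like the following sketch. It assumes Pydantic v2 (`model_validate_json`, `model_json_schema`). The `WeatherArgs` model and the `parse_arguments` helper are hypothetical names chosen for illustration, not the article's code.

```python
from typing import Literal

from pydantic import BaseModel, Field, ValidationError


class WeatherArgs(BaseModel):
    """Arguments the model is expected to produce for a weather lookup."""
    city: str = Field(min_length=1, description="City name")
    unit: Literal["celsius", "fahrenheit"] = "celsius"
    days: int = Field(default=1, ge=1, le=7)


def parse_arguments(raw_json):
    """Return (args, None) on success or (None, error_text) on validation failure."""
    try:
        return WeatherArgs.model_validate_json(raw_json), None
    except ValidationError as exc:
        return None, str(exc)


# JSON Schema that could be sent to the model as the function's parameter definition.
SCHEMA = WeatherArgs.model_json_schema()
```

Because one model class both generates the schema and validates the output, the definition the AI sees and the check applied to its answer cannot drift apart. The error text can also be sent back to the model to request a corrected call.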

Medium

Behind the Cloud — The TRUTH About TeraBox.

- TeraBox, a cloud storage platform offering 1TB of free storage, raises concerns among cybersecurity experts due to potential risks associated with the 'freemium' model and monetization strategies.

- The business model of TeraBox revolves around ad support, referral programs, and premium upsells, with the hidden cost being users' data, leading to cybersecurity vulnerabilities and potential privacy breaches.

- Cybersecurity experts warn about the risks of state-sponsored data collection and unauthorized access to sensitive information through platforms like TeraBox, highlighting the importance of switching to providers with better encryption and governance policies.

- The article underscores the need for users, especially tech professionals, developers, and privacy-conscious individuals, to be cautious about the potential consequences of using free cloud storage services like TeraBox, where personal data privacy could be compromised.

Medium

Automating Google Cloud Image Upgrades with a Custom Renovate Datasource

- Building VM images with tools like Packer allows for baking dependencies and versioning images for precise control and automation.

- A proposed image naming convention includes using semver or Unix timestamp with a triple hyphen separator to adhere to Google Cloud naming restrictions.

- Creating a custom datasource for Google Cloud images in Renovate involves building a cloud function that interacts with GCP Compute API to retrieve and format image versions.

- Deploying the cloud function on GCP with Terraform, setting up Renovate to access it securely, and using a custom Renovate manager allows for automated upgrading of GCP images based on versioning conventions.
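
As a rough sketch of the formatting step inside such a cloud function: the code below assumes image names of the form `<prefix>---<version>`, where a semver is written with hyphens (for example `1-2-3`) because dots are not allowed in Google Cloud image names. That exact encoding is an assumption, as are the helper names. The `releases` list with `version` entries is the shape Renovate custom datasources read.

```python
import re

SEPARATOR = "---"  # triple hyphen between image name and version


def version_from_image_name(image_name, prefix):
    """Extract a version from '<prefix>---<version>', or None if it does not match."""
    head, sep, tail = image_name.partition(SEPARATOR)
    if head != prefix or not sep:
        return None
    if re.fullmatch(r"\d+-\d+-\d+", tail):  # hyphenated semver, e.g. 1-2-3
        return tail.replace("-", ".")
    if re.fullmatch(r"\d+", tail):          # Unix timestamp, e.g. 1714000000
        return tail
    return None


def to_renovate_payload(image_names, prefix):
    """Build the {'releases': [{'version': ...}]} body for a custom datasource."""
    versions = (version_from_image_name(name, prefix) for name in image_names)
    return {"releases": [{"version": v} for v in versions if v]}
```

In a real function, the list of names would come from the Compute API's image listing. That call is left out here so the sketch stays self-contained.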

Medium

298

Image Credit: Medium

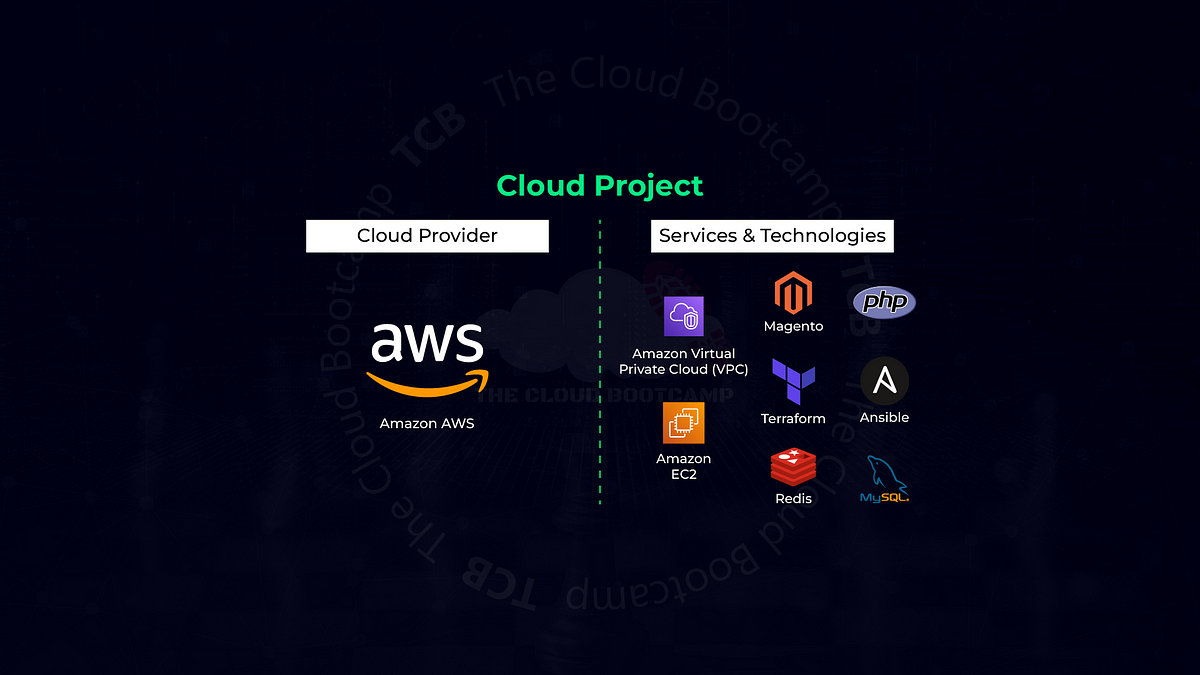

Implementation of an E-Commerce System on AWS in an automated way using Terraform and Ansible

- Infrastructure for an E-Commerce system was provisioned on AWS using Terraform and Ansible.

- Designed architecture with single VPC, public and private subnets, EC2 instance, RDS, and Redis.

- Provisioned infrastructure with Terraform using modules for VPC, Compute, Database, and Security Groups.

- Configured EC2 instance with Ansible for system updates, software installations, Magento setup, and optimization.

Medium

The AWS Lambda 200MB Deployment Limit Will Waste Your Time. Here’s What to Do Instead.

- The AWS Lambda 200MB deployment limit can waste time and hinder progress.

- Struggles with deployment due to the size limit can lead to significant delays and frustration.

- Switching to Fargate + ECR can provide a solution, allowing for containerized deployment without the size constraints.

- In conclusion, choose the appropriate tool based on requirements to avoid unnecessary time wastage and focus on shipping features efficiently.

Medium

248

Image Credit: Medium

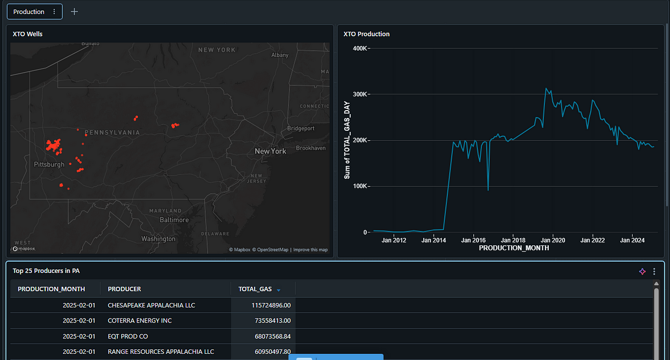

From Shale to Scale: Wrangling Oil & Gas Data with GCP and Databricks

- The article discusses using GCP and Databricks to analyze Oil & Gas data, particularly focusing on Pennsylvania production data available from 1980 to February 2025.

- By uploading data to GCP buckets and utilizing Databricks, the article explains how to clean, combine, and analyze large datasets at scale.

- The project involves working with production data for each well and inventory data for each producer, totaling about 14 Excel files with 1.06 GB of data.

- Creating a GCP storage bucket, setting up access for Databricks, and organizing data into clean tables are key steps in the process.

- The article also covers generating visualizations like maps using Plotly and Folium, and creating interactive dashboards on Databricks.

- The dashboard showcases well locations, monthly production, and top producers, demonstrating the value of leveraging public data and powerful tools for insights.

- The project highlights the transformation of unstructured data into actionable insights through cloud storage, PySpark, and Databricks.

- It encourages exploration of technology-infrastructure-energy intersections and offers to connect for further collaborative ideas or projects.

- The article concludes by emphasizing the potential of public datasets for valuable discoveries and invites readers to engage in data-driven explorations.

- For more information or collaboration, the author can be reached at linkedin.com/in/dmitryabrown.

Medium

401

Image Credit: Medium

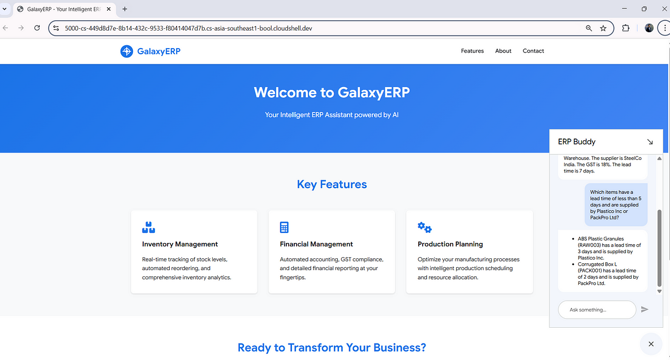

From Bootcamp to ERP Revolution: Building an AI Agent with MCP & Vertex AI

- Model Context Protocol (MCP) serves as a universal connector for AI agents, simplifying the integration of ERP systems with AI tools.

- The goal was to create an AI agent for GalaxyERP using Vertex AI by uploading inventory data and setting grounding confidence for accurate responses.

- Challenges included handling complex GST queries and limited cloud credits, which were overcome by creating custom playbooks and utilizing Google Cloud Free Tier.

- The key takeaway is that AI enhances human capabilities rather than replacing them, and developers are encouraged to explore MCP's potential for enterprise systems.

Medium

A Simple Way to Set Up Google OAuth for N8N.

- Setting up Google OAuth for n8n can be a daunting process, but it only needs to be done once for all Google connections in n8n.

- Key steps include creating credentials in Google Cloud, setting up the OAuth consent screen, and adding redirect URLs.

- Ensuring correct redirect URIs is crucial, as even small mismatches can cause connection issues.

- Once OAuth setup is complete, connecting Google services to n8n for automation becomes straightforward and enables various automation possibilities.
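
Because redirect URI matching is exact, a small comparison helper can show which part of the URL differs between the value registered in Google Cloud and the one n8n actually sends. This is a hypothetical sketch: the helper name and the example callback URL in the usage note are illustrative, so copy the real redirect URL from your n8n credential screen.

```python
from urllib.parse import urlsplit


def redirect_uri_mismatches(registered, actual):
    """List the URL components that differ between two redirect URIs (empty if identical)."""
    if registered == actual:
        return []
    a, b = urlsplit(registered), urlsplit(actual)
    diffs = [name for name in ("scheme", "netloc", "path", "query")
             if getattr(a, name) != getattr(b, name)]
    return diffs or ["other"]
```

For example, comparing `https://n8n.example.com/rest/oauth2-credential/callback` with the same URL plus a trailing slash reports a `path` difference, which is the kind of small mismatch that breaks the connection.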

Medium

[SOLVED] How to fetch real-time google cloud run job logs?

- Fetching real-time logs for a specific Google Cloud Run job by periodically polling the Cloud Logging API with filters runs into API rate limits.

- An alternative and more efficient approach is to set up a Log Sink to route logs to Pub/Sub. This allows relevant logs filtered by job name to be forwarded to a Pub/Sub topic, enabling real-time log processing by a Django backend without hitting API rate limits.

- Another option is to use a Log Sink to send logs to BigQuery for structured storage and analysis, which also avoids polling the Logging API and hitting its rate limits.

- Implementing these approaches can ensure real-time log monitoring for long-running jobs and scalability for multiple users and pipelines on Google Cloud Run.
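
The Log Sink to Pub/Sub route can be sketched from the backend's side as follows. This is a minimal illustration under assumptions: it presumes a push subscription delivering the standard `{"message": {"data": ...}}` body with a base64-encoded JSON LogEntry, and the function names are hypothetical. The filter string is what the sink would use to select a single job's entries.

```python
import base64
import json


def build_job_filter(job_name):
    """Cloud Logging filter selecting entries from one Cloud Run job."""
    return f'resource.type="cloud_run_job" AND resource.labels.job_name="{job_name}"'


def decode_push_message(body):
    """Decode a Pub/Sub push request body into the LogEntry dict it carries."""
    data = body["message"]["data"]  # base64-encoded JSON LogEntry
    return json.loads(base64.b64decode(data).decode("utf-8"))


def log_line(entry):
    """Pick a displayable line from a LogEntry (text or structured payload)."""
    if "textPayload" in entry:
        return entry["textPayload"]
    return json.dumps(entry.get("jsonPayload", {}))
```

A Django view receiving the push request would parse the request body as JSON, pass it to `decode_push_message`, and forward `log_line(...)` to connected users. No Logging API calls are made on the read path.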

Digitaltrends

The RTX 5060 is coming to laptops. Here’s what we know about Nvidia’s mainstream GPU

- The Nvidia RTX 5060 is set to launch in May for laptops, stirring excitement among fans and enthusiasts despite drama around availability of Nvidia graphics cards.

- Many laptop brands are already advertising RTX 50-series laptops, with anticipated RTX 5060 options across various models.

- Reports suggest that there will be RTX 5060 laptops available from every major OEM.

- Key features of Acer Predator Helios Neo 16S include a 16-inch 2560 x 1600 OLED display, Intel Core Ultra 9 275HX processor, and thin design with various ports.

- The ASUS ROG Zephyrus G14 offers a 14-inch 2880 x 1800 OLED display, an AMD Ryzen AI 9 HX 370 processor, and an aluminum body, along with high-quality speakers and an RGB backlit keyboard.

- The Dell Alienware 16 Area-51 features a 16-inch QHD+ display, an Intel Core Ultra 9 275HX processor, a distinctive liquid teal design, and dual cameras with Windows Hello support.

- Gigabyte AERO X16 boasts a 16-inch 2560 x 1600 IPS WQXGA display, AMD Ryzen AI 9 HX 370 processor, and Lunar White and Space Gray color options with a one zone RGB backlit keyboard.

- These upcoming RTX 5060 laptops offer diverse specifications including impressive displays, powerful processors, ample storage, connectivity options, and unique design features.

- Consumer anticipation is high for the release of RTX 5060 laptops, with each brand offering its twist on the mainstream GPU in upcoming laptop models.

- Overall, Nvidia's RTX 5060 is expected to provide a range of options for laptop gamers and creators, with different brands incorporating the GPU into their unique laptop designs.

Digitaltrends

9

Image Credit: Digitaltrends

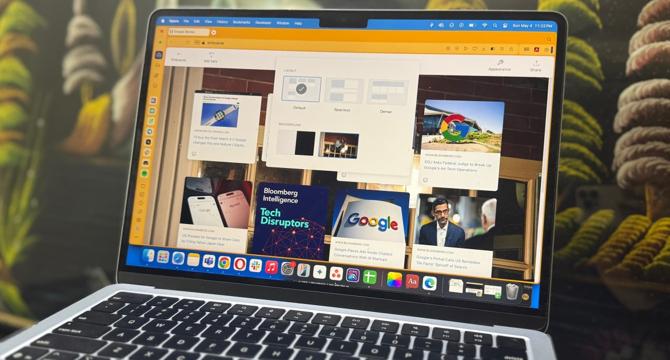

I tried a hidden tool in Edge and Opera. Now it’s hard to go back to Safari

- Safari lacks a visual diary feature for organizing content effectively, compared to Opera and Microsoft Edge.

- Chromium-based browsers are favored for extensions but face challenges in syncing between desktop and mobile.

- Safari's tab group organization can be overwhelming for heavy users, leading to resource consumption.

- Edge and Opera excel in content organization with features like Collections and Pinboards, offering greater flexibility and convenience.

- Opera's Pinboards function like Pinterest boards, allowing easy saving, customization, and sharing of web content.

- Customizable Pinboards in Opera sync across devices and enable sharing via custom URLs without login requirements.

- In Microsoft Edge, Collections provide a convenient side panel for organizing and saving web content with features like incognito mode and Copilot integration.

- Collections in Edge allow quick copy of items with headlines, sources, URLs, and thumbnails for easy reference.

- Edge's Collections sync across devices and offer features for deep research queries, providing a seamless cross-platform experience.

- The author finds Edge and Opera's organizational tools essential for cataloging research effectively and states a reluctance to return to Safari for daily browsing.

Digitaltrends

Whatever GPU you buy, make sure it’s not the RTX 5060 8GB

- The launch of Nvidia's RTX 50 series has been plagued with issues such as melting cables, crashes, missing ROPs, and high pricing.

- The 8GB version of the RTX 5060 Ti sold out quickly, raising concerns about the upcoming release of the RTX 5060 with only 8GB VRAM.

- In 2025, insufficient VRAM has become a real problem, impacting performance. The 8GB and 16GB versions of the RTX 5060 Ti showed noticeable performance differences.

- Tests revealed that the 8GB card performed significantly worse in games compared to the 16GB version, with lower FPS and frame time issues.

- The 8GB RTX 5060 Ti struggled in games like Spider-Man 2 and Warhammer 40,000, showing poorer performance than the 16GB model.

- TechSpot's review highlighted performance gaps between the 8GB and 16GB variants, with the latter outperforming by over 80% in some cases.

- Amid inflated GPU costs and tariff impacts, purchasing GPUs with inadequate VRAM, like the 8GB 5060 Ti, is not justifiable for gamers.

- Nvidia and AMD are urged not to release GPUs with less than 12GB VRAM in 2025, emphasizing the importance of sufficient memory for gaming performance.

- Consumers are advised to avoid GPUs with insufficient VRAM and push for better stock availability to send a message to manufacturers.

- The GPU market faces challenges with high prices and stock shortages, making it difficult for gamers to acquire suitable graphics cards.
