DevOps News

Dev

Platform Engineering: The Next Evolution in DevOps

- Platform Engineering is the practice of designing and maintaining internal platforms that provide reusable and standardized tools, environments, and workflows for developers.

- Platform engineering introduces an abstraction layer, reducing complexity and making it easier for developers to focus on building applications rather than managing infrastructure.

- A platform engineering stack typically includes self-service portals, infrastructure as code, Kubernetes and container orchestration, CI/CD pipelines, and observability & monitoring.

- Platform engineering offers standardized developer workflows, self-service capabilities, security and compliance by default, automated infrastructure management, and integrated monitoring and logging. The resulting benefits include faster deployment cycles, reduced operational overhead, improved developer experience, and better scalability.

Dev

Why Kubernetes Is Everywhere — But Setting It Up Still Kinda Sucks (Unless You Follow This Guide)

- Kubernetes has become the backbone of modern cloud-native infrastructure — the control plane for running containerized applications at scale.

- Financial institutions, healthcare platforms, retail giants, AI startups, and gaming companies all use Kubernetes to deploy applications efficiently.

- Setting up a realistic Kubernetes cluster at home or in a lab can be complex, requiring physical hardware, networking setup, service exposure, and troubleshooting.

- A Kubernetes blog series is available to help users with practical, real-world walkthroughs to properly set up and optimize their clusters.

Dev

What the Heck Is Linux, and Why You Should Bother Learning It (Especially Red Hat) in 2025

- Linux is an open-source operating system that is used widely in various domains.

- Red Hat Enterprise Linux (RHEL) is a commercially supported Linux distribution that is popular in enterprise environments.

- Linux is used in web servers, cloud platforms, smartphones, and supercomputers.

- Learning Linux, especially Red Hat, in 2025 can offer career opportunities in cloud computing, cybersecurity, and system administration.

Dev

127

Image Credit: Dev

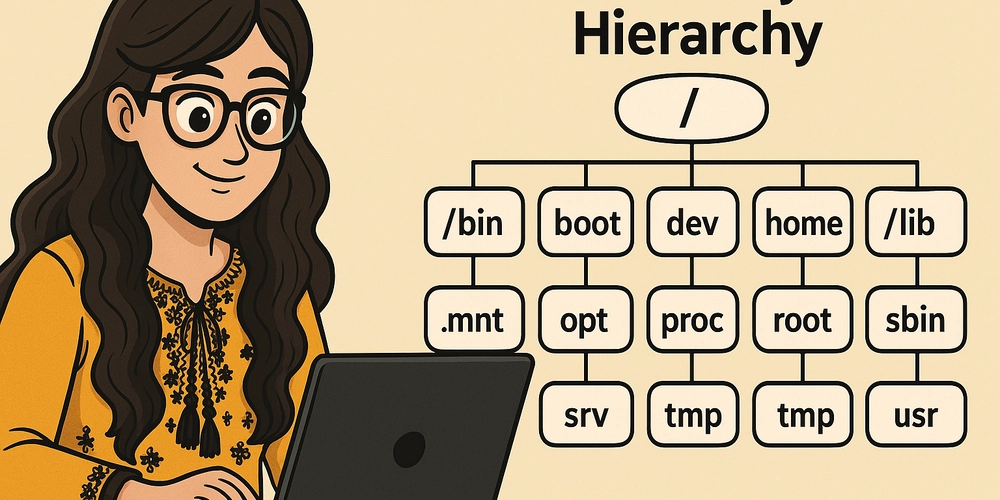

Understanding the Linux File System Hierarchy

- The Linux File System Hierarchy is a fundamental topic in Linux and is essential for system administrators.

- The root directory (/) serves as the starting point for all files and directories.

- Key directories like /bin, /sbin, /etc, /home, /var, /tmp, /usr, /boot, /dev, /proc, /mnt, and /media have specific purposes and usage.

- Understanding the file system hierarchy is important for tasks like user management, troubleshooting, and package installations, making it crucial for the RHCSA exam.
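As a rough illustration of the directory roles listed above, the following minimal sketch maps a path to its top-level directory and a one-line purpose. The descriptions are short paraphrases of the conventional roles, and the names `FHS_PURPOSES`, `top_level_dir`, and `describe` are made up for this example rather than taken from the article.

```python
from pathlib import PurePosixPath

# Conventional roles of the top-level directories named in the summary.
FHS_PURPOSES = {
    "/": "root of the whole hierarchy",
    "/bin": "essential user commands",
    "/sbin": "essential system administration commands",
    "/etc": "host-specific configuration files",
    "/home": "user home directories",
    "/var": "variable data such as logs and spools",
    "/tmp": "temporary files",
    "/usr": "shareable programs, libraries, and documentation",
    "/boot": "boot loader files and kernel images",
    "/dev": "device files",
    "/proc": "virtual filesystem exposing process and kernel information",
    "/mnt": "mount point for temporarily mounted filesystems",
    "/media": "mount points for removable media",
}


def top_level_dir(path: str) -> str:
    """Return the top-level directory of an absolute POSIX path."""
    parts = PurePosixPath(path).parts
    if not parts or parts[0] != "/":
        raise ValueError(f"expected an absolute path, got {path!r}")
    # parts looks like ('/', 'etc', 'ssh', ...); the root alone is ('/',).
    return "/" if len(parts) == 1 else "/" + parts[1]


def describe(path: str) -> str:
    """Look up the conventional purpose of the directory a path lives under."""
    return FHS_PURPOSES.get(top_level_dir(path), "not covered in this summary")


if __name__ == "__main__":
    for example in ("/etc/ssh/sshd_config", "/var/log/messages", "/opt/tool"):
        print(example, "->", describe(example))
```

A lookup table like this is only a study aid; on a real system, `man hier` gives the distribution's own description of each directory.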

Amazon

Accelerate large-scale modernization of .NET, mainframe, and VMware workloads using Amazon Q Developer

- Legacy software applications pose challenges for businesses due to maintenance, security, and compliance issues, driving the need for modernization using new frameworks and cloud services.

- Traditional software modernization processes are labor-intensive, costly, and time-consuming, hindering the adoption of cloud technologies for critical applications.

- Amazon Q Developer, a generative AI-powered assistant, offers intelligent automation and scalability for large-scale modernization and migration of .NET, mainframe, and VMware workloads.

- Q Developer uses AI and autonomous agents to analyze, plan, and implement workload-specific modernization steps, enabling cross-functional teams to transform multiple workloads simultaneously.

- By automating complex processes, Q Developer accelerates modernization, improves scalability, and enhances the quality of transformations, leading to faster and more cost-effective outcomes.

- Teams using Amazon Q Developer can modernize applications up to four times faster, realize cost savings, and streamline migration planning compared to traditional methods.

- Q Developer facilitates collaboration through task parallelization and web-based interfaces, enabling cross-functional teams to work together efficiently on large-scale projects.

- The expertise of Q Developer agents in languages, frameworks, and domains ensures high-quality modernization outcomes, enhancing security, compliance, and operational performance.

- Amazon Q Developer transformation capabilities are currently available in preview, offering a unified web experience and IDE support for organizations looking to modernize their workloads.

- To explore the Amazon Q Developer transformation capabilities, interested users can visit the Q Developer web page for demonstrations, documentation, and workload-specific transformation insights.

- Krishna Parab and Elio Damaggio lead the product marketing and development efforts for Amazon Q Developer transformation capabilities, bringing extensive experience in AI, tech, and product management.

Dev

Lift Scholarship Application is now Open!

- The Linux Foundation has opened applications for the Lift Scholarship program.

- The scholarship program grants free access to training courses and certification exams for deserving candidates.

- There are 16 categories available for applicants to choose from based on their experience.

- Applicants are advised to avoid using AI-generated content or copied responses when completing the application.

The New Stack

Harness Kubernetes Costs With OpenCost

- The rising complexity and costs associated with Kubernetes have become a common concern for many organizations.

- Cloud Foundry Korifi, built on Kubernetes, simplifies application deployment and management by abstracting away complexities.

- OpenCost, an open source CNCF project, provides cost visibility and optimization capabilities for cloud infrastructure.

- Korifi streamlines application deployment, supports various languages, automates networking, and enhances the developer experience.

- OpenCost provides insight into cloud spending, supports multiple providers, aids data-driven decision-making, and encourages community-driven development.

- Installing Korifi and OpenCost on a local Kubernetes cluster involves using Helm and following specific configurations.

- OpenCost UI displays cost information per pod and namespace, aiding in accountability, optimization, and anomaly identification.

- Utilizing open source tools like OpenCost with Korifi allows for monitoring costs at a granular level, aiding in better resource allocation decisions.

- The integration of OpenCost with Kubernetes clusters offers insights into infrastructure costs beyond just cloud provider charges.

- Understanding and optimizing costs through tools like OpenCost and Korifi helps engineering teams make informed decisions for resource allocation.
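To make the per-pod and per-namespace view concrete, here is a minimal sketch of rolling pod-level costs up to namespace totals. It does not call OpenCost; the function name `cost_by_namespace`, the tuple layout, and every namespace, pod name, and dollar figure in `SAMPLE_POD_COSTS` are invented for illustration only.

```python
from collections import defaultdict

# Invented sample rows: (namespace, pod, cost in dollars for some window).
# These are NOT real OpenCost output; they only illustrate the roll-up.
SAMPLE_POD_COSTS = [
    ("cf", "korifi-api", 1.25),
    ("cf", "korifi-controllers", 0.75),
    ("opencost", "opencost", 0.5),
]


def cost_by_namespace(pod_costs):
    """Sum per-pod costs into a {namespace: total_cost} dictionary."""
    totals = defaultdict(float)
    for namespace, _pod, cost in pod_costs:
        totals[namespace] += cost
    return dict(totals)


if __name__ == "__main__":
    totals = cost_by_namespace(SAMPLE_POD_COSTS)
    # Print the most expensive namespaces first.
    for namespace, total in sorted(totals.items(), key=lambda kv: -kv[1]):
        print(f"{namespace}: ${total:.2f}")
```

Grouping by namespace first is one common way to assign spend to a team before drilling into individual pods.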

SolarWinds

SolarWinds and Turn/River Capital: Supercharging Innovation and Operational Resilience

- SolarWinds, a trusted provider of IT management software, has partnered with Turn/River Capital to enhance innovation and operational resilience for its customers.

- This partnership will enable SolarWinds to continue driving observability, improving database management, and streamlining IT service desk operations.

- SolarWinds aims to deliver operational resilience, helping enterprises adapt, sustain, and grow in times of business turmoil.

- With the support of Turn/River Capital, SolarWinds will develop customer-centric solutions and leverage state-of-the-art AI and security tools to enhance incident response, observability functions, and service desk tasks.

SiliconANGLE

VMware ups Tanzu’s gen AI support, sheds Kubernetes dependence

- Broadcom Inc.'s VMware Tanzu division has added generative artificial intelligence capabilities and support for Model Context Protocol to its platform-as-a-service, while distancing the platform from Kubernetes.

- Anthropic's Model Context Protocol (MCP) gives AI models a structured way to interact with external data, allowing Tanzu users to build gen AI applications faster.

- Broadcom's AI-ready PaaS optimized for private cloud aims to facilitate the development and deployment of gen AI and agentic applications for enterprises.

- Gartner forecasts a significant increase in agentic AI adoption by 2028, with Broadcom focusing on simplifying agent implementation through common data services.

- Tanzu's Spring AI platform supports gen AI patterns, enables faster iterations, and offers integrated observability and utilities for AI model evaluation.

- The refreshed Tanzu platform includes automated container builds, external model connections, and application updates without ticketing, all intended to enhance developer productivity.

- Tanzu disassociates from Kubernetes, emphasizing the importance of an 'IaaS dial tone' and highlighting Kubernetes as a low-level technology not suited for application platforms.

- Tanzu will integrate with Anthropic's Claude LLM for proxy requests, governance controls, and efficient model usage, while leveraging Kubernetes runtime components.

- The focus is on simplifying the platform for users, unifying essential components like service mesh and observability while dissociating from Kubernetes complexity.

- Broadcom aims to provide an efficient solution for building and deploying gen AI applications, emphasizing the shift away from Kubernetes dependence towards an IaaS framework.

SiliconANGLE

Operant AI introduces AI Gatekeeper for runtime protection across hybrid cloud environments

- Operant AI Inc. has introduced AI Gatekeeper, a new product providing runtime artificial intelligence protection.

- AI Gatekeeper offers comprehensive runtime defense for enterprises deploying AI applications and agents across hybrid and private clouds.

- The solution includes trust scores, agentic access controls, and threat blocking for enhanced security.

- AI Gatekeeper enables visibility into runtime threats and provides protection for MCP and AI nonhuman identities.

Dev

Building a Self-Healing CI/CD Pipeline on GitLab

- Auto-resume from stuck jobs. Improve resilience. Save time.

- A fully open-source system that allows your pipelines to automatically resume from the last successful stage — without manual intervention.

- Each stage of your GitLab pipeline records progress to .ci-progress.json (shared volume), and a Python watchdog script checks these files to trigger a new pipeline if the current one is stuck or timed out.

- Key features include resuming pipelines from the last good stage, JSON-based per-pipeline tracking, retry limits with max-age protection, and compatibility with hybrid GitLab runner setups.
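The watchdog logic described above can be sketched roughly as follows. This is not the article's script: the record fields (`started_at`, `updated_at`, `retries`, `completed_stages`), the stage names, and the default thresholds are all assumptions made for illustration, and the step that actually triggers a new GitLab pipeline is deliberately left out.

```python
import json

# Hypothetical stage order; a real pipeline would define its own.
STAGES = ["build", "test", "deploy"]


def next_action(progress, now, timeout_s=1800, max_retries=3, max_age_s=86400):
    """Decide what a watchdog should do with one pipeline's progress record.

    Returns (action, stage), where action is "done", "wait", "give_up",
    or "resume", and stage is only set for "resume".
    """
    remaining = [s for s in STAGES if s not in progress["completed_stages"]]
    if not remaining:
        return ("done", None)
    if now - progress["started_at"] > max_age_s:
        return ("give_up", None)  # max-age protection
    if now - progress["updated_at"] <= timeout_s:
        return ("wait", None)  # still making progress recently
    if progress["retries"] >= max_retries:
        return ("give_up", None)  # retry limit reached
    # Stuck: resume from the first stage that has not completed.
    return ("resume", remaining[0])


if __name__ == "__main__":
    # In the described setup this JSON would be read from .ci-progress.json.
    record = json.loads(
        '{"started_at": 0, "updated_at": 100, "retries": 0,'
        ' "completed_stages": ["build"]}'
    )
    print(next_action(record, now=2000))
```

Keeping the decision in a pure function makes the retry and age limits easy to test without a running GitLab instance.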

SolarWinds

Over-Emphasis on Degrees Is Harming the Tech Industry

- Job postings in the tech industry often prioritize university degrees, creating a barrier to entry for skilled individuals without formal credentials.

- The over-emphasis on degrees in hiring harms diversity within organizations and limits the range of voices and experiences.

- The lack of diversity in the tech industry excludes low-income earners, single parents, and other marginalized groups, hindering innovation and market response.

- To improve the situation, organizations should revise job descriptions, focus on relevant skills and practical experience, offer diversified training programs, and promote a culture of inclusion.

Dev

From Legacy Systems to Cloud-Native: Lessons Learned During Our Digital Shift

- Transitioning from legacy infrastructure to a cloud-native ecosystem involves a full mindset shift.

- Key takeaways: audit existing systems, embrace containerization and microservices, empower developer autonomy, prioritize observability over monitoring, and shift security left.

- Moving to cloud-native requires a cultural change with increased collaboration, automation, and end-to-end ownership of code.

- CorporateOne's digital transformation journey has resulted in faster, more resilient, and more user-friendly systems.

Dev

LAMP Stack (WEB STACK) Implementation in AWS

- The LAMP stack consists of Linux, Apache, MySQL, and PHP, facilitating dynamic web hosting and app development.

- Linux serves as the foundational OS, while Apache handles web page serving and supports advanced features.

- MySQL is used for data storage and management, with MariaDB as a compatible alternative.

- PHP processes dynamic content, interacts with databases, and supports web app development.

- LAMP stack applications include CMS, e-commerce sites, web apps, and custom web solutions.

- Prerequisites for LAMP stack implementation in AWS include launching an Ubuntu EC2 instance and setting up security groups.

- Installation steps cover setting up Apache, MySQL, and PHP, creating virtual hosts, and enabling PHP on the website.

- Configuring Apache virtual hosts allows for multiple websites on a single server and proper site hosting.

- Serving PHP pages by default involves adjusting Apache's DirectoryIndex directive so that index.php takes precedence over index.html.

- Creating a temporary phpinfo() script confirms that PHP is working; the script should be removed afterward because it exposes server configuration details.

- The LAMP stack's versatility offers developers a reliable environment for building and deploying web applications.
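The DirectoryIndex change mentioned above amounts to moving `index.php` to the front of the directive's file list. The sketch below shows that reordering on a single configuration line; the helper name `prioritize_index_php` is hypothetical, and the sample line is only an assumed example of what such a directive might look like, not a quote from the article.

```python
def prioritize_index_php(line: str) -> str:
    """Move index.php to the front of an Apache DirectoryIndex line.

    Lines that are not DirectoryIndex directives are returned unchanged.
    If index.php is missing from the list, it is added at the front.
    """
    tokens = line.split()
    if not tokens or tokens[0].lower() != "directoryindex":
        return line
    indent = line[: len(line) - len(line.lstrip())]
    others = [t for t in tokens[1:] if t != "index.php"]
    return indent + " ".join([tokens[0], "index.php", *others])


if __name__ == "__main__":
    # Assumed example directive; the real file contents may differ.
    before = "    DirectoryIndex index.html index.cgi index.pl index.php index.htm"
    print(prioritize_index_php(before))
```

Apache has to be reloaded before a change like this takes effect, and editing the file by hand works just as well for a single server.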

OpenStack

How OpenStack Powers BT Group’s 5G Network Transformation

- BT Group leads the UK's shift to open-source 5G infrastructure with Canonical's OpenStack.

- 5G required a distributed architecture and network function virtualization, driving BT Group to adopt open-source solutions.

- Implementing OpenStack allowed BT Group to deploy 5G to over 75% of the UK population.

- BT Group needed to virtualize network functions and chose Canonical for expertise in OpenStack deployment.

- Canonical's telco-grade OpenStack distribution enabled cost-effective and scalable operations for BT Group.

- Extensive training empowered BT Group's team to operate OpenStack effectively.

- The collaboration between BT Group and Canonical led to successful 5G deployment across the UK.

- 5G transformation enhanced network performance, customer experience, and scalability for BT Group.

- BT Group's 5G network allowed for seamless connectivity in high-density areas like sports stadiums.

- EE was recognized as the UK's Best Mobile Network following the successful 5G deployment.
