DevOps News

Dev

The Self-Hosting Rabbit Hole

- The article weighs convenience against over-optimization and how each affects a project's momentum.

- The author transitioned from a cloud-hosted analytics platform to Coolify, a PaaS for self-hosting codebases.

- Platforms like Heroku and Vercel offer convenience by abstracting away the complexity of the underlying AWS infrastructure.

- Coolify simplifies server management by providing a web interface and automated deployment.

- Self-hosting with Coolify streamlines deployment and removes much of the manual configuration.

- The article highlights the benefits of self-hosting for learning and cost optimization.

- Installing Coolify on a fresh server is recommended to avoid conflicts and streamline setup.

- Beware of vendor lock-in risks when platforms control both tooling and infrastructure.

- The author explores the trend of self-hosting open-source software for learning and customization.

- The article compares Plausible's cloud version with self-hosting it, weighing the convenience of each when it comes to maintenance and updates.

Siliconangle

AI in application development: Maturity matters more than speed

- AI in application development requires operational maturity. Without solid pipelines and DevOps discipline, AI can amplify existing inefficiencies instead of fixing them.

- Adopting AI to accelerate application development is no longer optional, but doing it well requires infrastructure automation, a mature API strategy, and a strong data management strategy.

- Successful AI adoption in software development is about enabling velocity through maturity. AI should be seen as an augmentation layer, not a replacement for good engineering practices.

- Building internal platforms and embracing platform engineering is crucial: it abstracts infrastructure complexity away from developers so they can focus on delivering business value through AI.

Dev

Using Grammatical Evolution to Discover Test Payloads: A New Frontier in API Testing

- Grammatical Evolution (GE), combined with Genetic Algorithms (GA), opens up a beautifully chaotic new approach to payload discovery in API testing.

- Genome, Grammar, Fitness Function, and Operators are the core components of Grammatical Evolution.

- Grammatical Evolution combines meaningful payload structure with randomness, producing injections, formatting errors, anomalies, and payloads that trigger memory or delay issues.

- The system tracks payloads, finds vulnerabilities, and offers regression discovery, making it useful for QA engineers, security testers, and automation engineers.
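The genotype-to-phenotype mapping at the core of GE can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the article's system: the JSON-like payload grammar, the 8-bit codons, and the helper names `map_genome`, `random_genome`, and `mutate` are hypothetical, and the fitness function (which would score each payload by how the API under test responds) is left out. Each codon selects a production with `codon % number_of_choices`, the standard GE mapping rule, and the genome wraps around if codons run out.

```python
import random

# Hypothetical payload grammar: each non-terminal maps to a list of productions,
# and each production is a list of symbols (non-terminals or literal text).
GRAMMAR = {
    "<payload>": [["{", '"name": ', "<value>", "}"]],
    "<value>": [["<string>"], ["<number>"], ["<attack>"]],
    "<string>": [['"alice"'], ['""'], ['"' + "A" * 64 + '"']],
    "<number>": [["0"], ["-1"], ["99999999999999999999"]],
    "<attack>": [['"\' OR 1=1 --"'], ['"<script>alert(1)</script>"'], ["null"]],
}


def map_genome(genome, grammar=GRAMMAR, start="<payload>", max_wraps=2):
    """Map integer codons to a payload string via a leftmost derivation.

    Returns None if the codons (re-read up to `max_wraps` extra times) run out
    before every non-terminal has been expanded.
    """
    pending = [start]
    output = []
    used = 0
    budget = len(genome) * (max_wraps + 1)
    while pending:
        symbol = pending.pop(0)
        if symbol not in grammar:
            output.append(symbol)  # terminal: emit it as-is
            continue
        choices = grammar[symbol]
        if len(choices) == 1:
            production = choices[0]  # no decision to make, so no codon is consumed
        else:
            if used >= budget:
                return None  # invalid individual
            codon = genome[used % len(genome)]  # wrap around the genome
            used += 1
            production = choices[codon % len(choices)]  # the GE mapping rule
        pending = list(production) + pending
    return "".join(output)


def random_genome(length, rng):
    """Initialise an individual as random 8-bit codons."""
    return [rng.randrange(256) for _ in range(length)]


def mutate(genome, rate, rng):
    """Point mutation: each codon is replaced with probability `rate`."""
    return [rng.randrange(256) if rng.random() < rate else c for c in genome]


if __name__ == "__main__":
    rng = random.Random(7)
    parent = random_genome(6, rng)
    print(map_genome(parent))
    print(map_genome(mutate(parent, 0.5, rng)))
```

Because the grammar constrains every derivation, even heavily mutated genomes still yield structurally plausible payloads, which is what lets evolution explore "weird but well-formed" inputs instead of pure noise.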

Dev

Stream Logs from Docker to Grafana Cloud with Alloy

- Setting up logging inside containers can be annoying, but Grafana Alloy simplifies the process.

- A Flask server running inside a Docker container can send logs to Grafana Cloud using Grafana Alloy without host volumes.

- The process involves setting up Grafana Cloud, creating a Flask app with logging, and configuring Alloy and Loki.

- With this setup, logs from the Flask app are sent to Grafana Cloud, making it easier to manage and analyze the logs.
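On the application side, the usual container pattern is to log to stdout and let the container runtime and a collector pick the stream up, so no host volume is needed. The sketch below uses only Python's standard `logging` module, not the article's exact Flask or Alloy configuration, to emit one JSON object per line, a shape LogQL's `json` parser can split into fields. The `JsonFormatter` class, `configure_logging` helper, and field names are assumptions.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one line of JSON."""

    def format(self, record):
        entry = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "msg": record.getMessage(),
        }
        if record.exc_info:
            entry["exc"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def configure_logging(stream=sys.stdout, level=logging.INFO):
    """Route all logging to `stream` (stdout by default) so the container runtime captures it."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    root = logging.getLogger()
    root.handlers[:] = [handler]  # replace any previously configured handlers
    root.setLevel(level)
    return root


if __name__ == "__main__":
    configure_logging()
    log = logging.getLogger("app")
    log.info("request handled path=%s status=%d", "/health", 200)
    try:
        1 / 0
    except ZeroDivisionError:
        log.exception("handler failed")
```

In a Flask app, calling `configure_logging()` at startup, before `app.logger` is first accessed, should keep Flask from adding its own default handler, so its records propagate to the same JSON handler.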

Solarwinds

5 Things We Learned About Operational Resilience at SolarWinds Day

- Operational Resilience is Strengthened with Strong Incident Response

- Aligning Business and Technology Is Critical to Success

- Tool Sprawl, Silos, and Fragmentation Are Communication Issues

- AI Continues to Become More Central to Effective IT Operations

Amazon

Simplifying Code Documentation with Amazon Q Developer

- Amazon Q Developer's /doc agent automates README generation and updates, reducing time spent on documentation.

- The /doc agent uses generative AI to analyze the codebase, and it respects .gitignore files when deciding what to document.

- Users can create new READMEs or update existing ones with Amazon Q's /doc agent in their IDE.

- For projects without documentation, selecting 'Create a README' initiates the process.

- The /doc agent scans the source files, summarizes them, and generates documentation for the selected folder.

- Documentation syncing helps keep the documentation aligned with code changes.

- A feedback loop lets users iteratively refine the generated documentation until it is comprehensive.

- For modular projects, documentation can be created at different levels, for example for the whole project or for an individual folder.

- Maintaining a hierarchical documentation structure keeps each document specific and manageable.

- Amazon Q Developer's /doc agent helps automate and streamline documentation management for software projects.

Dev

When One Tech Stack Isn’t Enough: Orchestrating a Multi-Language Pipeline with Local FaaS

- The article discusses orchestrating a multi-language pipeline using a local-first FaaS approach to handle real-time data processing across various systems.

- The scenario involves using different languages and tools like Rust, Go, Python, Node.js, and Shell Script for different nodes in the pipeline.

- Node 1 (Rust) focuses on high-performance ingestion, utilizing Rust's memory safety features and ownership model for handling high event throughput.

- Node 2 (Go) deals with filtering and normalization, leveraging Go's concurrency features and ability to compile into a single binary for deployment flexibility.

- Node 3 (Python) handles ML classification, benefiting from Python's ecosystem for data science tasks and ease of loading pretrained models.

- Node 4 (Node.js) manages external service notifications, utilizing Node.js's event-driven nature for real-time hooks like Slack notifications, webhooks, etc.

- Node 5 (Shell Script) focuses on archiving logs using classic CLI tools like tar and gzip, known for their reliability and efficiency in handling file archiving.

- Data adapters play a crucial role in handling different data formats and ensuring seamless communication between nodes while keeping the underlying data format complexities abstracted.

- By adopting a local-first FaaS mindset and unifying the pipeline into a single computational graph, the article highlights significant reductions in friction between teams working with different languages and tools.

- The approach allows each team to focus on their specific node's functionality without being heavily dependent on other teams, thereby streamlining the overall data processing workflow.

- Overall, the local-first FaaS approach proves beneficial for managing a multi-language environment, providing flexibility and efficiency in handling diverse tasks across the data processing pipeline.
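The data-adapter idea can be illustrated without the FaaS runtime itself. In this hypothetical Python sketch, each node declares the wire format it emits, and a small adapter table decodes that format for the next node, so no node needs to know what its upstream neighbour speaks. The formats, node functions, and `run_pipeline` helper are illustrative assumptions; in the article the nodes are separate Rust, Go, Python, Node.js, and shell components, which these single-process Python functions only stand in for.

```python
import csv
import io
import json

# Decoders turn a node's wire format into a list of dict records.
DECODERS = {
    "json-lines": lambda raw: [json.loads(line) for line in raw.splitlines() if line.strip()],
    "csv": lambda raw: list(csv.DictReader(io.StringIO(raw))),
}


def encode_json_lines(records):
    return "\n".join(json.dumps(r, sort_keys=True) for r in records)


def encode_csv(records):
    if not records:
        return ""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(records[0]))
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


# Encoders turn a list of dict records back into a wire format.
ENCODERS = {"json-lines": encode_json_lines, "csv": encode_csv}


def run_pipeline(raw, fmt, nodes):
    """Pass `raw` (in format `fmt`) through `nodes`, a list of (fn, output_format) pairs.

    Each hop decodes the previous output with the matching adapter, calls the
    node on plain records, then encodes the result in that node's own format.
    """
    for fn, out_fmt in nodes:
        records = DECODERS[fmt](raw)
        raw, fmt = ENCODERS[out_fmt](fn(records)), out_fmt
    return raw, fmt


# Hypothetical stand-ins for the article's nodes (all Python here).
def ingest(records):
    """Ingestion node stand-in: pass events through unchanged."""
    return records


def normalize(records):
    """Filter/normalize node stand-in: drop events without a level, tidy fields."""
    return [
        {"user": r["user"].strip().lower(), "level": r["level"].upper()}
        for r in records
        if r.get("level")
    ]


def classify(records):
    """Classification node stand-in: a fixed rule instead of a pretrained model."""
    return [dict(r, alert="yes" if r["level"] == "ERROR" else "no") for r in records]


if __name__ == "__main__":
    events = '{"user": " Alice ", "level": "error"}\n{"user": "bob", "level": ""}\n'
    out, fmt = run_pipeline(
        events, "json-lines", [(ingest, "csv"), (normalize, "json-lines"), (classify, "csv")]
    )
    print(fmt)
    print(out)
```

Swapping a node's output format only requires an entry in the adapter tables, which mirrors the article's point that adapters keep format details out of each team's node.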

Read Full Article

3 Likes

Dev

187

Image Credit: Dev

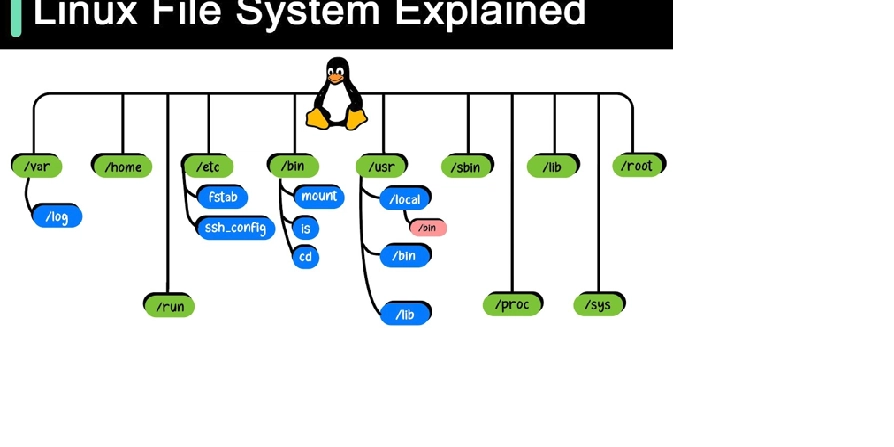

Mastering the Backbone of Linux: The File System Structure You Must Know

- The Linux file system serves as the backbone of the operating system, providing a structured way to store, manage, and access data essential for businesses and IT professionals.

- Its hierarchical design, guided by the Filesystem Hierarchy Standard (FHS), ensures consistency and predictability across Linux distributions.

- Key directories explored in the Linux file system include the root directory, user directories, system configuration, boot files, binary executables, device files, process information, temporary files, logs and variable data, mount points, and libraries.

- The root directory (/) is the top of the hierarchy: every other path, including the critical paths of enterprise systems, sits beneath it, which helps IT professionals keep the file hierarchy organized.

- User directories give employees isolated workspaces under /home, while /root is the home directory of the root (administrator) account, used by IT for privileged tasks.

- System configuration (/etc) holds critical configuration files, such as network settings and application configurations, that businesses and IT teams depend on.

- Boot files (/boot) include the kernel and the GRUB bootloader files, which enterprises manage in dual-boot setups for testing and operations.

- Binary executables live in /bin (essential commands for all users) and /sbin (system administration commands).

- Device files (/dev) are special files that represent hardware such as storage devices, which businesses and IT work with during setup and troubleshooting.

- Process information (/proc) is a virtual filesystem exposing live kernel and process data, including real-time performance metrics that help businesses optimize resource utilization and help system administrators diagnose performance issues.
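As a small illustration of how /proc exposes live metrics as plain text, the Python sketch below parses `/proc/meminfo` into a dictionary and derives a memory-used percentage. It assumes a Linux host; the `parse_meminfo` and `memory_used_percent` names are hypothetical, and the `MemAvailable` field is only reported by kernels 3.14 and later.

```python
from pathlib import Path


def parse_meminfo(text):
    """Turn /proc/meminfo lines like 'MemTotal:  16316412 kB' into {name: value}."""
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields:
            # Most values are in kB; a few counters (e.g. HugePages_Total) have no unit.
            info[name.strip()] = int(fields[0])
    return info


def memory_used_percent(info):
    """Percentage of RAM in use, treating MemAvailable as reclaimable."""
    total = info["MemTotal"]
    available = info["MemAvailable"]
    return 100.0 * (total - available) / total


if __name__ == "__main__":
    meminfo = parse_meminfo(Path("/proc/meminfo").read_text())
    print(f"{memory_used_percent(meminfo):.1f}% of {meminfo['MemTotal'] // 1024} MiB in use")
```

Because the kernel regenerates these files on every read, polling them is how many lightweight monitoring agents collect metrics.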

Dev

🚀 10 DevOps Best Practices That Saved My Team (and Sanity)

- Infrastructure as Code (IaC) using tools like Terraform, Pulumi, or AWS CloudFormation is crucial.

- Automate tests, including unit tests, integration tests, and smoke tests, before deploying code.

- Dockerize applications to improve portability, predictability, and production readiness.

- Monitor everything, but avoid alert fatigue by basing alert thresholds on SLOs.
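One common way to turn an SLO into an alert threshold is to alert on error-budget burn rate rather than raw error counts. The Python sketch below is an assumption about how such a rule might look, not the article's setup: with a 99.9% SLO the error budget is 0.1%, and a burn rate of 1.0 means the budget would run out exactly at the end of the SLO window. The 14.4 threshold follows the multiwindow example popularized by Google's SRE Workbook (2% of a 30-day budget consumed in one hour); the function names are hypothetical.

```python
def burn_rate(errors, total, slo):
    """Ratio of the observed error rate to the error rate the SLO allows.

    1.0 means the error budget would run out exactly at the end of the SLO window.
    """
    if total == 0:
        return 0.0  # no traffic, nothing burned
    error_budget = 1.0 - slo  # e.g. 0.001 for a 99.9% SLO
    return (errors / total) / error_budget


def should_page(long_window, short_window, slo=0.999, threshold=14.4):
    """Page only if both windows burn fast: the problem is sustained AND still happening.

    Each window is an (errors, total_requests) pair, e.g. the last 1 hour and last 5 minutes.
    """
    return (burn_rate(*long_window, slo) >= threshold
            and burn_rate(*short_window, slo) >= threshold)


if __name__ == "__main__":
    print(should_page((20, 1000), (30, 1000)))  # sustained 2-3% errors
    print(should_page((20, 1000), (0, 1000)))   # already recovered
```

Requiring both windows keeps a brief spike from paging anyone, while the short window lets the alert clear soon after the problem stops, which is exactly the alert-fatigue concern in the tip.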

Medium

7 Tools That Will Make You a More Productive DevOps Engineer

- fzf is a command-line fuzzy finder that makes searching interactive and super fast.

- tmux is a terminal multiplexer: it lets you run multiple terminal sessions within a single window, which is handy when managing remote servers.

- fzf provides faster file and command searching, reducing the need for manual typing.

- tmux is useful for keeping sessions alive when an SSH connection drops, running multiple tasks in parallel, and managing remote servers.
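fzf's core idea, matching a query as an in-order subsequence of each candidate and ranking the hits, can be sketched in Python. This is a simplified stand-in, not fzf's actual algorithm (which, roughly, awards bonuses for word boundaries and consecutive characters and penalizes gaps using an optimal rather than greedy match); the scoring weights and the `fuzzy_score`/`fuzzy_filter` names are assumptions.

```python
def fuzzy_score(query, candidate):
    """Return a score if `query` is an in-order subsequence of `candidate`, else None.

    Greedy left-to-right match (simplified): +1 per matched character, +2 when it
    directly follows the previous match, +3 at the start of a word or path segment.
    """
    q = query.lower()
    c = candidate.lower()
    score = 0
    pos = 0
    prev = -2  # index of the previous match; -2 so the first match is never "consecutive"
    for ch in q:
        idx = c.find(ch, pos)
        if idx == -1:
            return None  # character missing: not a match
        score += 1
        if idx == prev + 1:
            score += 2
        if idx == 0 or candidate[idx - 1] in "/_-. ":
            score += 3
        prev = idx
        pos = idx + 1
    return score


def fuzzy_filter(query, candidates):
    """Matching candidates, best score first; ties favour shorter strings."""
    scored = [(fuzzy_score(query, cand), cand) for cand in candidates]
    hits = [(s, cand) for s, cand in scored if s is not None]
    hits.sort(key=lambda item: (-item[0], len(item[1])))
    return [cand for _, cand in hits]


if __name__ == "__main__":
    files = ["src/main.go", "docs/manual.md", "Makefile", "README.md"]
    print(fuzzy_filter("mai", files))
```

Typing "mai" narrows four paths to the two that contain m, a, i in order, with the boundary-plus-consecutive match in `src/main.go` ranked first.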

Dev

🎉 2000 Followers on Dev.to – Thank You, DevOps Fam! 🙌

- DevOps Challenge Hub has reached 2000 followers on Dev.to.

- The post reflects on the journey of learning, sharing, and growing together in the DevOps space.

- Its focus remains on simplifying DevOps and cloud concepts for learners.

- Future plans include more hands-on projects, emerging tech in DevOps, and collaborations.

Medium

Grind LeetCode or Build Projects?

- The verdict: build projects first, then grind LeetCode.

- Projects and open-source contributions are important for gaining experience and filling your resume with relevant content.

- The author recommends at least three unit-tested projects, such as web or mobile applications that other people can access.

- Grinding LeetCode is still important for practicing algorithms and data structures, which top companies often test in interviews.

Dev

This Is Likely the Computing Technology that Supports the Most Data Sources

- Enterprise data sources have evolved to include databases, files, APIs, streaming data, and more, requiring technologies to support multi-source computation.

- The 'logical data warehouse' is a common approach for multi-source computation but faces limitations in supporting diverse data sources.

- esProc is a technology that excels in multi-data source support without the need for heavy modeling, offering a lightweight approach and extensive connectivity capabilities.

- esProc supports various data sources like relational databases, non-relational databases, file formats, message queues, big data platforms, APIs, and more.

- Compared to other technologies, esProc not only supports a wider range of sources but is also easier to use for cross-source computation.

- esProc offers a simple and powerful scripting language, SPL, for cross-source computation, providing seamless connectivity and computation capabilities.

- Users can add new data sources to esProc without complex development, which increases flexibility and usability.

- esProc prioritizes flexibility and extensibility over transparency, allowing for easier handling of complex and irregular data structures.

- With seamless integration into mainstream application systems and flexible deployment options, esProc ensures a satisfying user experience and ease of integration.

- esProc stands out for supporting a wide variety of data sources, offering extensibility, unified syntax, structured data format compatibility, and smooth integration with mainstream systems.

- Overall, esProc emerges as a computing technology that supports a wide range of data sources while providing user-friendly experiences, quick extensibility, and powerful support.
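To make "cross-source computation" concrete: the task is joining and aggregating data that lives in different systems, here a relational table and a CSV export. The sketch below does it in plain Python with `sqlite3` and `csv` rather than in esProc SPL, so it shows the kind of job the article describes, not esProc's syntax; the table, column names, and sample rows are invented for illustration.

```python
import csv
import io
import sqlite3
from collections import defaultdict


def load_orders(conn):
    """Source 1: a relational database (an in-memory SQLite table here)."""
    return conn.execute("SELECT customer_id, amount FROM orders").fetchall()


def load_customers(csv_text):
    """Source 2: a CSV export, keyed by customer id."""
    return {int(row["id"]): row["region"] for row in csv.DictReader(io.StringIO(csv_text))}


def revenue_by_region(orders, customers):
    """Cross-source join plus aggregation: sum order amounts per customer region."""
    totals = defaultdict(float)
    for customer_id, amount in orders:
        region = customers.get(customer_id, "unknown")  # orders with no CSV match
        totals[region] += amount
    return dict(totals)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)", [(1, 120.0), (2, 80.0), (1, 30.0), (3, 5.0)]
    )
    customers_csv = "id,region\n1,EU\n2,US\n"
    print(revenue_by_region(load_orders(conn), load_customers(customers_csv)))
```

Every additional source type needs its own loader and type handling in this hand-written version; that per-source glue is what the article claims esProc's unified SPL syntax removes.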

Dev

My Learnings About Etcd

- Etcd is a distributed key-value store, fully open-source and implemented in Go.

- It is a critical component of the control plane in Kubernetes, storing all cluster data in a key-value format.

- Etcd is designed as a fault-tolerant and highly available system, using the Raft consensus algorithm to ensure strong consistency.

- Etcd stores data in bbolt, the etcd project's maintained fork of BoltDB, whose B+ tree design provides consistent and predictable reads.
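The fault-tolerance claim rests on Raft's majority quorum: a write commits only once a majority of members has accepted it, so a cluster of n members keeps accepting writes while at most ⌊(n-1)/2⌋ members are down. The short Python sketch below just does that arithmetic (the function names are illustrative, not part of etcd).

```python
def quorum(members):
    """Smallest majority of a Raft cluster."""
    return members // 2 + 1


def tolerated_failures(members):
    """Members that can fail while a majority, and therefore writes, survives."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} members: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Going from 3 to 4 members raises the quorum without adding any tolerance, which is why etcd clusters are usually sized at an odd number such as 3 or 5.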

Dev

How to Build a Self-Service DevOps Platform (Platform Engineering Explained)

- As organizations scale their DevOps practices, the demand for efficiency, autonomy, and standardization grows.

- A Self-Service DevOps Platform is an internal platform that provides standardized workflows, automation, and tooling to enable developers to deploy, manage, and scale applications efficiently without requiring deep knowledge of infrastructure.

- Key components of a Self-Service DevOps Platform include Infrastructure as Code, CI/CD Pipelines, Observability & Monitoring, Self-Service Portals, and Security & Compliance.

- Benefits of implementing a Self-Service DevOps Platform include faster development cycles, developer autonomy, cost efficiency, scalability, and improved security.
