DevOps News

Dev

Harness vs Spinnaker: The Future of Continuous Deployment Tools

- Harness and Spinnaker are powerful continuous deployment (CD) tools offering advanced automation and deployment capabilities.

- Harness is a modern software delivery platform that covers both continuous integration (CI) and CD, with AI-powered automation, built-in security features, and cost optimization.

- Spinnaker, developed by Netflix and open-sourced in 2015, is a multi-cloud continuous delivery platform that integrates with cloud providers and enables automated deployment strategies.

- Harness is known for its ease of use, AI-driven automation, and cost management, while Spinnaker excels in robust multi-cloud deployments and strong integration with cloud providers.

Dev

Linux + Terraform: Building Safe Infrastructure with Variable Validation

- Terraform variable validation checks that input values are correct and safe before any infrastructure is created.

- It catches errors early, enforces standards, improves security, and provides a better user experience.

- The step-by-step lab shows how to validate different variable types in Terraform with practical examples.

- Validating variables helps prevent misconfigurations, enforce policies, and improve security.
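In Terraform, each rule is written as a `validation` block (with a `condition` and an `error_message`) inside a `variable` block. As a language-neutral illustration of the same idea, here is a minimal Python sketch that checks hypothetical inputs (`instance_type`, `environment`, `instance_count`) against an allow-list, a pattern, and a range, and collects every failure before anything would be provisioned. The variable names and allowed values are assumptions, not taken from the article.

```python
import re

# Hypothetical rules, mirroring what Terraform `validation` blocks typically check.
ALLOWED_INSTANCE_TYPES = {"t3.micro", "t3.small", "t3.medium"}
ENV_PATTERN = re.compile(r"(dev|staging|prod)")


def validate_inputs(values: dict) -> list[str]:
    """Return all error messages; an empty list means every rule passed."""
    errors = []
    # Allow-list check (Terraform analogue: contains([...], var.instance_type)).
    if values.get("instance_type") not in ALLOWED_INSTANCE_TYPES:
        errors.append("instance_type must be one of: " + ", ".join(sorted(ALLOWED_INSTANCE_TYPES)))
    # Pattern check (Terraform analogue: can(regex(...))); fullmatch anchors both ends.
    if not ENV_PATTERN.fullmatch(str(values.get("environment", ""))):
        errors.append("environment must be dev, staging, or prod")
    # Range check on a number.
    count = values.get("instance_count")
    if not isinstance(count, int) or not 1 <= count <= 10:
        errors.append("instance_count must be an integer between 1 and 10")
    return errors


if __name__ == "__main__":
    good = {"instance_type": "t3.micro", "environment": "dev", "instance_count": 2}
    bad = {"instance_type": "m5.24xlarge", "environment": "qa", "instance_count": 0}
    print(validate_inputs(good))
    for msg in validate_inputs(bad):
        print("Error:", msg)
```

Reporting all failures at once, rather than stopping at the first, lets users fix every bad input in a single pass.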

Medium

From Feature Factory to Purpose-Driven Development: Why Anticipated Outcomes Are Non-Negotiable

- A senior architect dismissed the practice of defining expected outcomes for a new project as 'stupid', reflecting resistance and lack of alignment in a transforming organization.

- Purpose-Driven Development (PDD) focuses on solving the right problems with clear goals and intentions, emphasizing understanding the 'why' behind every initiative.

- Including an 'Anticipated Outcome' for each Epic or Initiative creates focus and intention and ties daily work to meaningful goals.

- Product Managers and Technical Leaders may initially be frustrated when asked to define anticipated outcomes, either because they were not involved early or because they struggle to translate technical needs into business value.

- Documenting the anticipated outcome provides clarity and direction, keeps decisions strategic, and reduces the risk of working on tasks that may not matter.

- The Feature Factory Ratio metric measures the balance between value-driven work and the risk of operating like a 'feature factory', highlighting the importance of connecting work to value.

- Superficial alignment in digital transformation poses a challenge as it impedes true transformation and creates cultural drag within organizations.

- Transformation is viewed as a leadership posture rather than a checkbox, emphasizing the importance of building belief and alignment in mindset and actions for successful modern software leadership.

- Progress depends on recognizing the challenges of a transformation journey, adapting leadership approaches, meeting teams where they are, and guiding them toward outcome-driven practices.

- Software delivery with a clear purpose is more effective, more empowering, and more valuable for the business, its customers, and the teams involved, which makes clarity a leadership responsibility.

- If teams do not understand the purpose behind their work, the real problem lies upstream; leadership is responsible for fostering clarity and alignment.

Dev

Stream Logs from Two Services in a Single Docker Container to Grafana Cloud Loki with Alloy

- The article explains how to stream logs from two services running in a single Docker container to Grafana Cloud Loki using Grafana Alloy.

- It provides a step-by-step guide and a sample configuration file for setting up log streaming.

- It covers matching log files, ingesting them, attaching metadata labels to log lines, and defining Grafana Cloud Loki as the output.

- The setup allows for fine-grained control over log shipping, filtering, and visualization, enabling the creation of dashboards based on log content.
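Alloy handles tailing the files and shipping them, but it can help to see what arrives at Loki. The sketch below, assuming Loki's HTTP push API (`POST /loki/api/v1/push`), matches log files with a glob and builds the JSON body that groups each service's lines under its own label set. The `container` and `service` label names and the sample service names are hypothetical, and nothing is actually sent.

```python
import json
import time
from pathlib import Path


def collect_lines(log_dir: str, pattern: str = "*.log") -> dict[str, list[str]]:
    """Match log files (e.g. api.log, worker.log) and key their lines by file stem."""
    return {
        path.stem: path.read_text().splitlines()
        for path in sorted(Path(log_dir).glob(pattern))
    }


def build_push_payload(lines_by_service: dict[str, list[str]], container: str) -> dict:
    """Build a Loki push-API body with one stream (label set) per service."""
    streams = []
    base_ns = time.time_ns()
    for service, lines in lines_by_service.items():
        # Loki expects [timestamp, line] pairs, where the timestamp is a string
        # of Unix epoch nanoseconds; the +i offset keeps lines in order.
        values = [[str(base_ns + i), line] for i, line in enumerate(lines)]
        streams.append({
            "stream": {"container": container, "service": service},  # hypothetical labels
            "values": values,
        })
    return {"streams": streams}


if __name__ == "__main__":
    sample = {"api": ["GET /health 200"], "worker": ["job 42 done"]}
    print(json.dumps(build_push_payload(sample, container="app"), indent=2))
```

Giving each service its own label set is what later lets a Grafana dashboard filter on, for example, `{service="api"}`.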

Dev

Migrating from Bitbucket to GitLab? Here’s how to keep your teams moving without missing a beat.

- During transitions from Bitbucket to GitLab, teams may still push code to Bitbucket. Keeping both Bitbucket and GitLab repositories in sync ensures nothing gets lost, avoids disruption, and allows for a gradual migration.

- There are two reliable ways to synchronize repositories: Option 1 is using GitLab's built-in repository mirroring, and Option 2 is syncing using GitLab CI/CD and Bitbucket webhooks.

- Option 1, GitLab repository mirroring: set up mirroring in the GitLab project settings, provide the Bitbucket repository URL, and choose the sync interval.

- Option 2, syncing with GitLab CI/CD and Bitbucket webhooks: add a .gitlab-ci.yml file to both the Bitbucket and GitLab repositories, configure GitLab CI/CD variables, create a pipeline trigger token in GitLab, and add a webhook in Bitbucket.
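Whatever triggers it, the CI/CD option ultimately copies refs from one remote to the other. A minimal sketch, assuming the pipeline job mirrors Bitbucket into GitLab with plain Git (the article's actual job may differ): it builds the `git clone --mirror` and `git push --mirror` commands and only runs them when `dry_run` is false. The repository URLs are placeholders.

```python
import subprocess


def mirror_commands(source_url: str, target_url: str,
                    workdir: str = "repo-mirror.git") -> list[list[str]]:
    """Return the Git commands that copy every ref from source to target."""
    return [
        # Bare clone with all branches, tags, and other refs.
        # Assumes a fresh working directory, as in a new CI job.
        ["git", "clone", "--mirror", source_url, workdir],
        # Push all refs; refs that do not exist in the source are deleted on the target.
        ["git", "-C", workdir, "push", "--mirror", target_url],
    ]


def sync(source_url: str, target_url: str, dry_run: bool = True) -> None:
    for cmd in mirror_commands(source_url, target_url):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Placeholder URLs; in CI these would come from protected CI/CD variables.
    sync("https://bitbucket.org/example/repo.git", "https://gitlab.com/example/repo.git")
```

Because `git push --mirror` removes target refs that are missing from the source, any branch created only in GitLab would be deleted; if teams push to both sides during the transition, pushing specific branches is the safer choice.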

Dev

How I Deployed a Vite React App to Azure Static Web App (SWA) Using Azure DevOps CI/CD

- The article walks through deploying a Vite React app to Azure Static Web Apps (SWA) with Azure DevOps CI/CD.

- It gives a step-by-step guide to pushing code to Azure Repos, creating a Static Web App on Azure, setting up an Azure Pipeline for CI/CD, and adding the deployment token to the pipeline.

- The stack is a React + Vite frontend, Azure Static Web Apps (SWA), and Azure DevOps Pipelines defined in YAML.

- The full guide includes instructions on setting up an automated pipeline and achieving a seamless production deployment.

Dev

Protect your Website with SafeLine WAF

- SafeLine WAF protects against modern web threats with a defense-in-depth approach built on multiple security layers.

- It stands out for its performance optimization, DevOps integration, and features such as real-time monitoring, automated response actions, and compliance support.

- SafeLine WAF is accurate, keeps false-positive rates low, and balances security with performance.

- In the article's benchmarks, SafeLine performs well on detection rate, false-positive rate, accuracy, and average response time.

- In the article's comparison with other WAF solutions such as Cloudflare and ModSecurity, SafeLine shows higher detection rates, fewer false positives, and better accuracy.

- SafeLine WAF can be installed with Docker Compose: check the prerequisites, configure the environment variables, and open the web interface.

- The article covers SafeLine WAF basics, key features, performance benchmarks, a comparison with other WAF solutions, and a step-by-step Docker Compose installation guide.

- According to the article, a strong WAF such as SafeLine is essential for organizations that need to protect web applications against sophisticated threats while maintaining performance and compliance.

- SafeLine's AI-powered detection lets organizations strengthen their security posture without sacrificing agility or user experience.

- SafeLine WAF's comprehensive visibility, automated response actions, and performance optimization make it suitable for organizations of all sizes seeking robust web application protection.

- The article concludes that SafeLine WAF is a compelling option for securing web applications against evolving threats.

Dev

Mastering Linux User Management: The Essential Guide for Every Admin

- Linux user accounts are an essential part of system administration, controlling resource allocation and access.

- There are two main types of users in Linux: system users and normal users.

- Each Linux user is assigned a unique numeric User ID (UID) that identifies the account.

- User account information in Linux is stored in the /etc/passwd and /etc/shadow files.
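Each line of /etc/passwd has seven colon-separated fields (name, password placeholder, UID, GID, comment, home directory, shell); an `x` in the password field means the hash lives in /etc/shadow. The sketch below parses that format and labels accounts as system or normal by UID, assuming the `UID_MIN` of 1000 and `UID_MAX` of 60000 that many distributions set in /etc/login.defs; check your own system's values. The sample lines are illustrative.

```python
from typing import NamedTuple

UID_MIN = 1000   # assumption: common default for the first normal-user UID
UID_MAX = 60000  # assumption: common default for the last normal-user UID


class PasswdEntry(NamedTuple):
    name: str
    uid: int
    gid: int
    home: str
    shell: str


def parse_passwd(text: str) -> list[PasswdEntry]:
    """Parse /etc/passwd-formatted text: name:password:UID:GID:GECOS:home:shell."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(":")
        if len(fields) != 7:
            continue  # skip malformed lines
        name, _password, uid, gid, _gecos, home, shell = fields
        entries.append(PasswdEntry(name, int(uid), int(gid), home, shell))
    return entries


def classify(entry: PasswdEntry) -> str:
    """Normal users fall inside the login UID range; everything else is treated as system."""
    return "normal" if UID_MIN <= entry.uid <= UID_MAX else "system"


SAMPLE = """root:x:0:0:root:/root:/bin/bash
daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin
nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin
alice:x:1000:1000:Alice,,,:/home/alice:/bin/bash"""

if __name__ == "__main__":
    for e in parse_passwd(SAMPLE):
        print(f"{e.name:8} uid={e.uid:<6} {classify(e)}")
```

On a live system, Python's `pwd.getpwall()` returns the same fields without manual parsing.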

Medium

I Replaced Jenkins with GitHub Actions — Was It a Mistake?

- The author decided to replace Jenkins with GitHub Actions.

- Though initially skeptical, the author found it elegant to write workflows as code in the same repository, tightly integrated with GitHub.

- The migration wasn't a mistake, but it wasn't painless for the team either.

- GitHub Actions had matured into a simpler, more tightly integrated, and more modern solution.

Medium

From 10 Users to 1 Million: How We Scaled Using DevOps Principles

- Embracing DevOps principles helped the company scale from 10 users to 1 million.

- To improve the deployment process, the team introduced continuous integration and continuous deployment (CI/CD) pipelines.

- By implementing centralized logging and monitoring tools, the company was able to identify and address performance issues.

- The changes resulted in significant time savings and a reduction in production bugs.

TechBullion

Revolutionizing DevOps: The Role of AI in Modern Software Development

- Artificial intelligence (AI) is reshaping DevOps and transforming software development.

- AI-powered coding tools assist developers in writing, debugging, and optimizing code, leading to increased efficiency and reduced error rates.

- AI-driven predictive analytics optimize workflow efficiency and reduce deployment failures in Continuous Integration and Continuous Deployment (CI/CD) pipelines.

- AI in DevOps security improves incident response through real-time threat detection, automated remediation workflows, and streamlined governance.

Dev

Cilium & eBPF: The Future of Secure & Scalable Kubernetes Networking

- Cilium, powered by eBPF, is revolutionizing cloud networking, security, and observability in Kubernetes.

- eBPF is a Linux kernel technology that runs sandboxed programs inside the kernel, enabling safe and efficient packet processing.

- Cilium enhances Kubernetes networking through packet filtering and routing, network policies, load balancing, and observability.

- Cilium and eBPF offer advanced features like high-performance networking, identity-aware security policies, load balancing, and deep observability.

Dev

Managing EC2 Instances with the AWS CLI

- Managing EC2 instances with the AWS CLI offers more efficiency, automation, scalability, and flexibility than the AWS Management Console.

- Setting up the AWS CLI on Amazon Linux involves installing it and configuring AWS credentials: Access Key ID, Secret Access Key, default region, and output format.

- To launch an EC2 instance, use 'aws ec2 run-instances' with parameters such as the AMI ID, instance type, key pair, security group ID, and subnet ID.

- To list all EC2 instances, use 'aws ec2 describe-instances' and check their status and essential details such as instance IDs and tags.

- Terminating unneeded instances with 'aws ec2 terminate-instances' avoids paying for resources you no longer use.

- Compared with the Console, the CLI offers faster provisioning, automation, cost optimization, and consistency, which is why DevOps engineers often prefer it for managing instances.

- By scripting commands such as run-instances and terminate-instances, DevOps engineers can streamline tasks, automate deployments, and manage infrastructure efficiently.

- Managing EC2 instances with the AWS CLI is crucial for DevOps engineers because it simplifies repetitive tasks, enables automation, and strengthens infrastructure management.

- The AWS CLI helps cloud professionals work effectively, reduce errors, and optimize costs, making it a valuable tool for managing AWS infrastructure.

- The author encourages readers to try the AWS CLI for managing EC2 instances to improve their cloud workflows, automation, and cost savings.
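The launch, list, and terminate commands are easy to wrap in a script. A minimal sketch that builds the argument lists for `aws ec2 run-instances`, `describe-instances`, and `terminate-instances` and only executes them when `dry_run` is false. The AMI, key pair, security group, and subnet IDs are placeholders, and the `--query` expression is just one way to trim the output.

```python
import subprocess
from typing import Optional


def run_instances_cmd(image_id: str, instance_type: str, key_name: str,
                      security_group_id: str, subnet_id: str, count: int = 1) -> list[str]:
    return [
        "aws", "ec2", "run-instances",
        "--image-id", image_id,
        "--count", str(count),
        "--instance-type", instance_type,
        "--key-name", key_name,
        "--security-group-ids", security_group_id,
        "--subnet-id", subnet_id,
    ]


def describe_instances_cmd() -> list[str]:
    # JMESPath query: one row per instance with its ID and current state.
    return [
        "aws", "ec2", "describe-instances",
        "--query", "Reservations[].Instances[].[InstanceId,State.Name]",
        "--output", "table",
    ]


def terminate_instances_cmd(instance_ids: list[str]) -> list[str]:
    if not instance_ids:
        raise ValueError("at least one instance ID is required")
    return ["aws", "ec2", "terminate-instances", "--instance-ids", *instance_ids]


def run(cmd: list[str], dry_run: bool = True) -> Optional[str]:
    """Print the command in dry-run mode; otherwise execute it and return stdout."""
    if dry_run:
        print(" ".join(cmd))
        return None
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


if __name__ == "__main__":
    # Placeholder IDs; replace with real values before setting dry_run=False.
    run(run_instances_cmd("ami-0123456789abcdef0", "t3.micro", "my-key",
                          "sg-0123456789abcdef0", "subnet-0123456789abcdef0"))
    run(describe_instances_cmd())
    run(terminate_instances_cmd(["i-0123456789abcdef0"]))
```

Building argument lists instead of shell strings avoids quoting problems, and the dry-run default makes it safe to review a destructive command such as terminate-instances before running it.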

Dev

Zen and the Art of Workflow Automation

- Repetitive tasks in our daily workflow can be a hidden invitation to mindfulness.

- In this context, mindfulness means noticing repetitive friction and asking whether it should be automated.

- Automation not only saves time but also frees mental bandwidth and makes it easier to stay in a flow state.

- The author shares a personal example of automating Git branch creation and highlights the benefits of personalizing automation.
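The author's own script isn't reproduced here; as a hypothetical example of the same kind of automation, this sketch turns a ticket ID and title into a branch name and creates it with `git switch -c` (available since Git 2.23). The `feature/` prefix and the 40-character slug limit are arbitrary conventions chosen for illustration.

```python
import re
import subprocess


def branch_name(ticket: str, title: str, prefix: str = "feature", max_slug: int = 40) -> str:
    """Build a name such as 'feature/ABC-123-add-login-page' (hypothetical convention)."""
    # Collapse every run of non-alphanumeric characters into a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    # Truncate long titles without leaving a trailing hyphen.
    slug = slug[:max_slug].rstrip("-")
    return f"{prefix}/{ticket.upper()}-{slug}"


def create_branch(ticket: str, title: str, dry_run: bool = True) -> str:
    name = branch_name(ticket, title)
    cmd = ["git", "switch", "-c", name]
    if dry_run:
        print(" ".join(cmd))
    else:
        subprocess.run(cmd, check=True)
    return name


if __name__ == "__main__":
    create_branch("abc-123", "Add login page!")
```

Encoding the naming convention in one function keeps branch names consistent across a team and removes one small decision from every new task.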

Dev

219

Image Credit: Dev

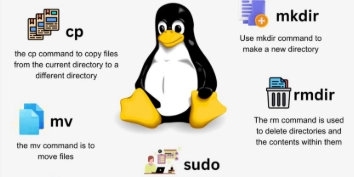

Getting Started with Linux Commands: Mastering File and Directory Operations

- Linux is known for its powerful command-line utilities that give users granular control over their systems.

- This article focuses on essential file and directory operations in Linux, including using the cat command for file content manipulation and managing files and directories.

- The cat command, combined with shell redirection (> to overwrite a file, >> to append to it), lets you write, append, and replace file content, while basic commands like touch, cp, mv, and rm create, copy, move, and delete files and directories.

- By mastering these commands, users can efficiently navigate and manipulate their Linux systems, building a strong foundation for advanced tasks like scripting, system administration, and automation.
