Devops News

Medium

0

Image Credit: Medium

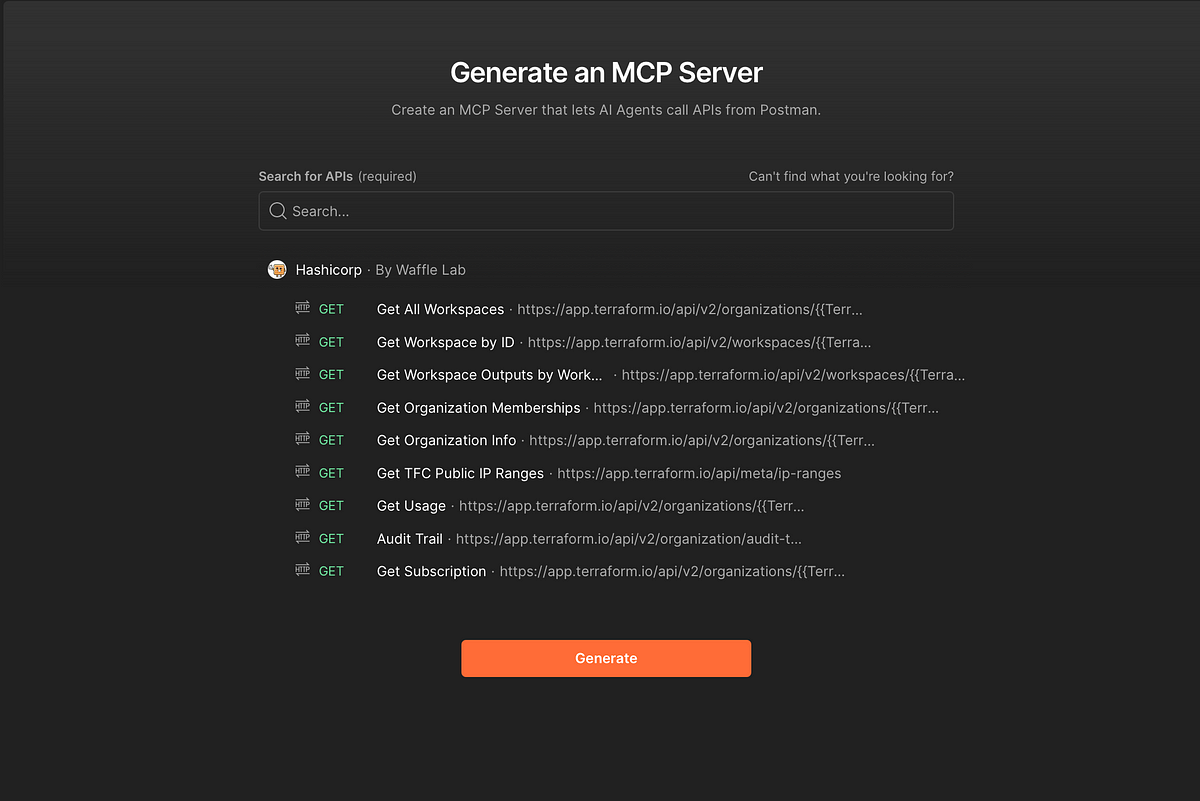

Postman’s Model Context Protocol (MCP) Server Integration with GitHub Copilot and CLI

- Postman can generate Model Context Protocol (MCP) servers that connect AI clients, such as GitHub Copilot and a command-line interface, to infrastructure tools.

- MCP acts as a universal translator that lets AI systems securely access and interact with external tools and data sources.

- Postman lets you create custom MCP servers from REST API collections; the article's example is an HCP Terraform MCP server.

- MCP servers expose standardized interfaces for AI systems to interact with services such as Kubernetes and Discord.

- Postman's MCP server follows a modular architecture, where each tool file corresponds to a specific API endpoint.

- Users can interact with MCP servers directly in Postman, configuring requests and APIs within the application.

- Integration with GitHub Copilot in VS Code allows for natural language queries interacting with the infrastructure.

- The MCP CLI provides a command-line interface for querying the MCP servers directly, offering flexibility in interactions.

- Postman's MCP server capabilities bridge API development with dynamic AI-accessible tools, transforming development and testing processes.

- The article also mentions Infralovers' AI Essentials for Engineers training, focusing on practical AI integration in technical workflows.
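
The one-tool-per-endpoint layout described above can be sketched as a small registry. This is a hypothetical illustration in Python, not the code Postman generates: the `Tool` shape, the tool names, the base URL, and the paths are all assumptions made up for the example.

```python
from dataclasses import dataclass
from urllib.parse import quote


@dataclass(frozen=True)
class Tool:
    """One tool definition, standing in for one 'tool file' (hypothetical shape)."""
    name: str
    method: str
    path: str  # path template with {placeholders}
    description: str


# Hypothetical base URL and endpoints, for illustration only.
BASE_URL = "https://api.example.com/v2"

TOOLS = {
    tool.name: tool
    for tool in (
        Tool("list_workspaces", "GET", "/organizations/{org}/workspaces",
             "List the workspaces in an organization"),
        Tool("get_workspace", "GET", "/organizations/{org}/workspaces/{name}",
             "Fetch a single workspace by name"),
    )
}


def build_request(tool_name: str, **params: str) -> tuple[str, str]:
    """Resolve a tool call into an (HTTP method, URL) pair without sending it."""
    tool = TOOLS[tool_name]
    # URL-encode each parameter before substituting it into the path template.
    path = tool.path.format(**{k: quote(v, safe="") for k, v in params.items()})
    return tool.method, BASE_URL + path
```

Keeping each endpoint as its own entry means adding a tool is a one-line change, which is presumably the appeal of the modular layout the article describes.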

Dev

How to Automatically Renew Let’s Encrypt SSL Certs with Certbot on Ubuntu 🔐

- Automating Let’s Encrypt SSL certificate renewal with Certbot on Ubuntu is a seamless process for developers.

- Certbot is preferred for its free service, reliability, and automatic renewal features, allowing developers to focus on coding.

- The initial step involves installing Certbot with simple commands on Ubuntu.

- To obtain the SSL certificate, developers can use Certbot's command specifying the domain names.

- Certbot also has a timer that triggers auto-renewal, ensuring certificates are updated before expiry.

- Old-school cron can be used instead of the timer by disabling it and setting up a cron job for renewal.

- Hooks can be utilized to automate stopping and starting services during certificate renewal.

- A bash script example is provided for automating the certificate renewal process and handling related tasks.

- It is important to test the automation periodically and back up certificates to keep service uninterrupted.
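
The renewal logic summarized above can be illustrated with a short Python sketch. It assumes a 30-day renewal window, which has long been Certbot's default for 90-day certificates; treat the exact threshold as an assumption, since it is configurable and may differ between versions. The function names and the schedule are hypothetical, and `certbot renew --quiet` is the only real command referenced.

```python
from datetime import datetime, timedelta


def needs_renewal(not_after: datetime, now: datetime, window_days: int = 30) -> bool:
    """Return True when the certificate expires within the renewal window."""
    return not_after - now <= timedelta(days=window_days)


def cron_line(minute: int, hour: int, command: str = "certbot renew --quiet") -> str:
    """Format a daily crontab entry that runs the renewal command."""
    return f"{minute} {hour} * * * {command}"
```

Because the renew command only acts on certificates that are close to expiry, running it daily from cron is harmless on days when nothing is due.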

Dev

Why Your Terraform Platform Isn't Scaling—and What to Do About It

- The article discusses the importance of establishing the root layer in a Terraform platform to support multi-environment setups for scalability.

- It highlights the challenges of having an unautomated foundation for automation processes, leading to issues with identity, pipelines, and secrets management.

- The author recounts a real-world scenario where the production environment was automated, but the platform supporting it lacked automation, causing inefficiencies.

- The concept of automating the root layer to create reproducible environments, manage identities, secrets, and pipelines is emphasized for scalability.

- The article outlines the different components of the root layer, including the Root Workspace, Workspaces Workspace, and Shared Modules Workspace.

- It provides a step-by-step guide on bootstrapping the root workspace, workspaces workspace, and creating the shared modules workspace for infrastructure building blocks.

- The approach is proven in real-world implementations across various organizations, enabling faster deployment, stronger governance, and reduced dependencies.

- Potential questions and concerns, such as the need for separate workspaces, managing service principals, Terraform Cloud dependency, and the level of automation, are addressed in the article.

- The root layer architecture is presented as a solution for building a scalable, secure, and autonomous platform using Terraform, promoting autonomy and code-driven management.

- The article concludes by encouraging readers to consider implementing a similar root layer structure for better infrastructure and platform management.

Dev

CONTAINERIZATION

- Containerization packages applications with their dependencies into a single unit called a container for consistent operation across various environments.

- It involves isolating applications in user spaces to run in diverse environments, regardless of the infrastructure type or vendor.

- Key principles include isolation, portability, efficiency, scalability, fault tolerance, and agility.

- Use cases of containerization include cloud migration, microservice architecture adoption, and IoT device deployment.

- The containerization process includes building self-sufficient software packages, creating container images, and using container engines to manage resources.

- Steps to containerize an application using Visual Studio Code involve cloning a repository, creating a Dockerfile, building an image, and running a container.

- Updating an application in a container involves changing source code, rebuilding the image, and starting a new container while removing the old one.

- Removing an old container involves stopping it and then removing it using the Docker CLI.

- To start and view the updated application, use the docker run command and refresh the browser to see changes.
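
The update cycle described above (rebuild, stop, remove, run) can be written down as an ordered list of Docker CLI calls. The sketch below only builds the commands and does not execute them; the image name, container name, and port numbers used in the usage note are placeholders, not values from the article.

```python
import shlex


def update_commands(image: str, container: str,
                    host_port: int, container_port: int) -> list[list[str]]:
    """Return the Docker CLI calls for rebuild -> stop -> remove -> run, in order."""
    return [
        ["docker", "build", "-t", image, "."],
        ["docker", "stop", container],
        ["docker", "rm", container],
        ["docker", "run", "-d", "--name", container,
         "-p", f"{host_port}:{container_port}", image],
    ]


def as_shell(commands: list[list[str]]) -> str:
    """Render the argument lists as copy-pasteable shell lines."""
    return "\n".join(shlex.join(cmd) for cmd in commands)
```

For example, `print(as_shell(update_commands("my-app", "web", 3000, 3000)))` prints the four commands in the order they would be run.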

Dev

Getting Started with Docker - How to install Docker and set it up correctly

- To get started with Docker, you need to install it on your system and verify that it's running properly.

- The installation process varies for Windows, Mac, and Linux operating systems.

- After installing Docker, ensure it's running by checking Docker Desktop for Windows and Mac or starting Docker manually on Linux.

- You can verify Docker's status with the 'docker info' command.

- Running 'docker run hello-world' will confirm that Docker is set up correctly.

- This command downloads a test container from Docker Hub and runs it to display a confirmation message.

- Docker images are lightweight packages containing everything needed to run a container.

- The 'docker run hello-world' command retrieves the 'hello-world' image if not found locally and creates a container from it.

- After running the container, a message 'Hello from Docker!' confirms successful installation.

- This process demonstrates how Docker pulls images from Docker Hub and runs containers.

- The message from Docker explains the steps taken: contacting the daemon, pulling the image, creating the container, and streaming the output.

- Finally, Docker suggests trying to run an Ubuntu container to further explore Docker capabilities.

- By following these steps, you can start using Docker and running containers on your system.

- Installing and setting up Docker is essential for managing containers and running applications in a consistent environment.

- The detailed installation guide provided ensures that Docker is installed correctly and ready for use.

- Running the 'hello-world' container serves as a simple test to validate Docker's functionality and setup.

- Successfully completing these steps indicates that Docker is installed, running, and configured properly.
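
The hello-world check above can be automated with a few lines of Python. This is a minimal sketch, assuming the `docker` CLI is on the PATH; the function names are made up for the example, and the only string it relies on is the 'Hello from Docker!' message quoted in the summary.

```python
import shutil
import subprocess

EXPECTED = "Hello from Docker!"


def looks_healthy(output: str) -> bool:
    """True when the hello-world output contains the confirmation message."""
    return EXPECTED in output


def check_docker() -> str:
    """Run the hello-world test if the docker CLI is available; report the outcome."""
    if shutil.which("docker") is None:
        return "docker CLI not found on PATH"
    result = subprocess.run(
        ["docker", "run", "--rm", "hello-world"],
        capture_output=True, text=True, check=False,
    )
    if looks_healthy(result.stdout):
        return "ok"
    return "unexpected output: is the Docker daemon running?"
```

The `--rm` flag removes the test container after it exits, so repeated checks do not leave stopped containers behind.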

Dev

Understanding virtualization & containers in the simplest way

- The article explains the concepts of virtualization, virtual machines (VMs), and containers in a simple way.

- It discusses the traditional method of running applications on separate physical servers and the limitations it posed.

- Virtualization allows multiple operating systems to run on a single physical machine, leading to better resource utilization and cost savings.

- Containers, unlike VMs, share the host OS, making them lightweight, fast, and resource-friendly.

- Docker is introduced as a containerization platform that simplifies the process of creating, deploying, and managing containers.

- A step-by-step mini-project is provided to run an Ubuntu container using Docker, highlighting its practical application and benefits.

- The article explains the importance of Ubuntu containers for testing software, running Linux tools, experimenting with different distributions, and learning Linux.

- It also mentions other container images available, such as Alpine Linux, Nginx, PostgreSQL, Node.js, and Python.

- Step-by-step instructions are given to download the Ubuntu container image, run the container, check the OS version, install software, exit, and restart the container.

- The article provides a comprehensive understanding of virtualization, containers, and how to work with Docker in a practical manner.
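
Checking the OS version inside the container usually comes down to reading `/etc/os-release`; that the article does it this way is an assumption. The Python sketch below parses that file's `KEY=value` format. The function name is hypothetical and any sample text passed to it is illustrative.

```python
def parse_os_release(text: str) -> dict[str, str]:
    """Parse KEY=value lines from /etc/os-release into a dict, stripping quotes."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"').strip("'")
    return fields
```

Inside a running container, the input would typically be the output of `cat /etc/os-release`, and the `VERSION_ID` field holds the release number.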

Dev

51 docker commands that you'll ever need to get started with docker 🔥

- 1. Check the installed Docker version using 'docker --version'

- 2. Display system-wide information with 'docker info'

- 3. List all commands or get help on a specific one using 'docker help'

- 4. Download an image from Docker Hub with 'docker pull IMAGE'

- 5. List all local images with 'docker images'

- 6. Build an image from a Dockerfile in the current directory using 'docker build -t NAME .'

- 7. Rename an image locally with 'docker tag SOURCE_IMAGE TARGET_IMAGE'

- 8. Remove an image with 'docker rmi IMAGE'

- 9. Save an image to a .tar archive with 'docker save -o FILE.tar IMAGE'

- 10. Load an image from a .tar archive with 'docker load -i FILE.tar'

- 11. Show the layers of an image with 'docker history IMAGE'

- 12. Run a container with 'docker run IMAGE'

- 13. Run interactively with terminal access using 'docker run -it IMAGE /bin/bash'

- 14. Run in detached (background) mode with 'docker run -d IMAGE'

Medium

You don’t need more features. You need better logs.

- Product managers sometimes treat engineers as mere extensions of their roadmap spreadsheet due to market pressure and constant demands for updates.

- When the push for rapid development collides with a team that lacks the courage to admit it is 'flying blind', operational chaos can follow.

- Stopping development often feels like failure to many product managers because it implies less data, fewer iterations, and fewer chances to showcase progress.

- The problem is not good versus bad product managers; even brilliant PMs can overlook critical aspects such as logs, metrics, and observability.

Dev

🚀 How I Deployed My Ubuntu EC2 Instance with NGINX and Customized My Web Page

- The post details the process of launching an Ubuntu EC2 instance on AWS, installing NGINX, and customizing a web page to display the author's name and the current date.

- Requirements for the process include an AWS account, a key pair (.pem file), and PowerShell or Git Bash on Windows.

- Step 1 involves launching a new Ubuntu EC2 instance named 'nginx-demo' with Ubuntu Server 22.04 LTS AMI, t2.micro instance type, and proper network settings.

- Upon launching, the public IPv4 address is copied for later use.

- Step 2 explains how to connect to the EC2 instance via PowerShell using the .pem key file.

- Step 3 covers installing NGINX on the EC2 instance using the appropriate terminal commands.

- Step 3 also starts and enables the NGINX service using systemctl commands.

- Step 4 customizes the default NGINX web page by editing the index.nginx-debian.html file with personalized content.

- Instructions are provided for replacing the default body content with custom HTML that displays the author's name and the date.

- Step 5 tests the customized page by opening the EC2 public IP in a web browser.

- By following the steps, users should see the personalized NGINX page with the specified content.

- The post includes terminal commands and screenshots to aid in the deployment process.
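
The page customization in Step 4 can be illustrated by generating the replacement HTML in Python. This is a sketch under assumptions: the markup and wording are invented for the example and are not the author's page, and the function name is hypothetical.

```python
from datetime import date
from html import escape


def render_page(name: str, today: date) -> str:
    """Build a small HTML page showing a name and a date."""
    return (
        "<!DOCTYPE html>\n"
        "<html>\n"
        "<head><title>Welcome</title></head>\n"
        "<body>\n"
        # escape() keeps characters such as < and & from breaking the markup.
        f"<h1>Welcome to {escape(name)}'s NGINX page</h1>\n"
        f"<p>Deployed on {today.isoformat()}</p>\n"
        "</body>\n"
        "</html>\n"
    )
```

On a default Ubuntu install of NGINX, the output would typically be written over `/var/www/html/index.nginx-debian.html`, which usually requires root privileges.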

Dev

Transforming IT Operations: The Power of AIOps and Generative AI

- The synergy between AIOps and Generative AI revolutionizes IT operations towards proactive management and autonomous systems.

- GenAI enhances AIOps with intelligent root cause analysis, incident explanation, and clear actionable insights.

- Conversational AIOps powered by GenAI offers real-time diagnostics, automated workflows, and efficient IT support.

- GenAI's synthetic data generation improves AIOps predictive capabilities for proactive anomaly detection and predictive maintenance.

- Automated runbook and script generation by GenAI speeds up incident response and supports autonomous remediation.

- GenAI democratizes technical insights, translating complex data into comprehensible reports for better cross-functional collaboration.

- Challenges in GenAI implementation include data quality, model interpretability, bias mitigation, and security measures.

- The future vision involves autonomous IT environments driven by AIOps and Generative AI, reshaping how businesses manage digital infrastructure.

- Responsibly deploying GenAI in AIOps requires careful planning and continuous oversight.

- The transformative potential of Generative AI and AIOps lies in freeing up IT professionals for strategic initiatives and innovative problem-solving.

Dev

Essential Resources for Software Technical Debt Management

- Technical debt is the cost of taking shortcuts in software development that will need to be paid back later.

- Managing technical debt is crucial for maintaining team productivity, software quality, and feature delivery in agile environments.

- It can be intentional (prioritizing speed with plans to refactor later) or unintentional (arising from poor design or lack of knowledge).

- Proactive technical debt management leads to faster feature delivery, higher quality software, and a more productive team.

- Core strategies for managing technical debt include prevention, management, monitoring, and effective communication.

- Resources like in-depth guides, best practices, and essential tools are available to help in technical debt management.

- Important guides cover practical strategies for managing technical debt effectively and preventing its negative impacts.

- Tools like SonarQube, Stepsize, Jira, and GitHub Issues assist in identifying and tracking technical debt within software projects.

- Understanding technical debt's origins and utilizing available resources can transform it from a burden to a manageable part of software development.

Dev

Modern Docker Made Easy: Real Apps, Volumes, and Live Resource Updates

- Docker Desktop 4.42 introduces native IPv6, MCP Toolkit, and Model Runner AI capabilities.

- The tutorial focuses on building a containerized app with advanced features for professional use.

- Key concepts include network parity, AI workflows, service catalog, and pro-grade features.

- A mini-app called Ping Counter is used to demonstrate various Docker functionalities.

- Building the image is done through Dockerfile and commands such as docker build.

- Initial runs show containers default to a fresh state each time.

- Persistence is added via volumes to ensure state retention beyond container lifecycles.

- Dynamic runtime tuning allows live updates of CPU and memory limits without restarting containers.

- Live monitoring with docker stats showcases runtime resource consumption.

- Rebuilding and redeploying include changing configurations and reflecting updates in logs.

- Cleanup and security practices involve managing containers, images, and volumes efficiently.

- Advanced concepts like health checks, non-root execution, IPv6 support, AI model experimentation, and MCP Toolkit usage are mentioned.

- The guide provides a comprehensive overview of Docker features for real-world applications and emphasizes security best practices.

- Readers are encouraged to apply Docker knowledge in professional workflows for further advancements.
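
The persistence point above is easiest to see with a counter that keeps its state in a file. The Python sketch below is a stand-in for the article's Ping Counter, not its actual code: the function names and the file name are assumptions, and a temporary directory plays the role of the mounted volume.

```python
import tempfile
from pathlib import Path


def bump(counter_file: Path) -> int:
    """Increment the counter stored in counter_file and return the new value."""
    current = int(counter_file.read_text()) if counter_file.exists() else 0
    counter_file.parent.mkdir(parents=True, exist_ok=True)
    counter_file.write_text(str(current + 1))
    return current + 1


def demo() -> list[int]:
    """Simulate two 'container runs' that share one mounted directory."""
    with tempfile.TemporaryDirectory() as volume:
        counter = Path(volume) / "count.txt"
        return [bump(counter), bump(counter)]
```

Without a volume, the file lives in the container's writable layer and disappears with the container, so every fresh container starts again at 1. Mounting a named volume over the data directory, for example with the `-v` option of `docker run`, lets the count survive.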

Dev

🧪How to Build a Linux Lab Using Vagrant

- Vagrant is a tool that automates the creation and management of virtual machines for setting up Linux study labs.

- Vagrant requires a provider like VirtualBox, VMware, Hyper-V, or Docker to create VMs.

- To begin, download and install VirtualBox and Vagrant.

- Open PowerShell as an administrator and navigate to the desired folder to create the VM.

- There are two options for creating the VM: use 'vagrant init centos/7' for a pre-configured CentOS 7 box, or 'vagrant init' for manual setup.

- Running 'vagrant up' starts the VM, while 'vagrant ssh' allows access to the VM.

- Various Linux distributions are available to try using Vagrant, such as Ubuntu, Debian, and generic Linux options.

- Additional commands like 'vagrant halt', 'vagrant suspend', 'vagrant resume', 'vagrant reload', and 'vagrant destroy' help manage the VM.

- Consider using PuTTY or MobaXterm for SSH access to the VM for stability and convenience.

- For detailed Vagrantfile customization, a quick search can provide tailored solutions.

- Community-created Vagrant boxes offer a wide range of Linux distros for study and testing purposes.
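
What 'vagrant init' leaves behind is a Vagrantfile, a short Ruby file naming the box to boot. The Python sketch below renders a minimal one; the function name is hypothetical, and the real command also writes many commented-out options that are omitted here.

```python
def minimal_vagrantfile(box: str) -> str:
    """Render a minimal Vagrantfile that pins the VM to the given box."""
    return (
        'Vagrant.configure("2") do |config|\n'
        f'  config.vm.box = "{box}"\n'
        "end\n"
    )
```

Switching distributions then amounts to changing the box name, for instance passing a different box identifier instead of `centos/7`.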

Dev

Revolutionizing DevSecOps: How AI is Reshaping Software Security

- The article discusses how AI is transforming DevSecOps to enhance software security throughout the SDLC.

- AI aids in threat intelligence by detecting anomalies in real-time, enabling predictive threat detection and response.

- It improves vulnerability detection tools by reducing false positives and prioritizing critical flaws efficiently.

- AI enhances security testing through intelligent fuzzing, generating diverse test cases to uncover complex vulnerabilities.

- It automates security policy enforcement, suggests remediation steps, and orchestrates incident response for efficient DevSecOps workflows.

- AI assistants help in secure code generation and review by identifying potential security issues during coding and code reviews.

- Benefits include increased speed, improved accuracy, reduced manual effort, proactive security, and optimized resource allocation.

- Challenges include data privacy concerns, algorithmic bias, the need for human oversight, management of false positives/negatives, and integration complexity.

- Future outlook predicts more advanced AI models for complex code understanding, zero-day vulnerability prediction, and autonomous patching.

- The integration of AI into DevSecOps offers both benefits and challenges, shaping the future of software security.

Dev

GCP Fundamentals: Compute Engine API

- The Compute Engine API is a RESTful interface that allows for the creation, management, and destruction of VMs within Google Cloud Platform, enabling automation and Infrastructure as Code.

- It provides access to resources like instances, disks, networks, and firewalls, with integration with IAM, Cloud Logging, and VPC.

- Benefits include speed, scalability, consistency, cost optimization, and version control, with use cases like disaster recovery, CI/CD pipelines, and batch processing.

- Key features cover instances, images, disks, networking, machine types, instance templates, instance groups, metadata, the console, and security enhancements.

- Detailed use cases include DevOps staging, ML training clusters, ETL pipelines, IoT edge computing, autoscaling web tiers, and dynamic game server provisioning.

- The API's architecture integrates with IAM, Cloud Logging, Monitoring, and KMS, managing VM instances, disks, networking, and application code.

- Pricing considerations include machine types, regions, OS, storage, and networking, with optimization strategies like right-sizing and committed use discounts (CUDs).

- Security measures involve IAM roles, service accounts, firewall rules, Shielded VMs, certifications, compliance, and governance practices.

- Integration with other GCP services includes BigQuery, Cloud Run, Pub/Sub, Cloud Functions, and Artifact Registry for enhanced functionality.

- Considered alongside AWS EC2 and Azure Compute APIs, Compute Engine API excels in flexibility, pricing, integration, machine types, and networking.

- Common mistakes to avoid include IAM permission errors, incorrect zone selection, ignoring quotas, not using templates, and over-provisioning resources.
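
Because the Compute Engine API is RESTful, creating a VM comes down to a POST with a JSON body. The Python sketch below builds the URL and body for an `instances.insert` call without sending anything. The project, zone, and VM names used with it are placeholders, the default machine type and image are assumptions chosen for illustration, and a real call would also need an OAuth 2.0 bearer token.

```python
API_ROOT = "https://compute.googleapis.com/compute/v1"


def insert_instance_request(
    project: str,
    zone: str,
    name: str,
    machine_type: str = "e2-micro",
    image: str = "projects/debian-cloud/global/images/family/debian-12",
) -> tuple[str, dict]:
    """Build the URL and JSON body for an instances.insert call (nothing is sent)."""
    url = f"{API_ROOT}/projects/{project}/zones/{zone}/instances"
    body = {
        "name": name,
        "machineType": f"zones/{zone}/machineTypes/{machine_type}",
        "disks": [{
            "boot": True,
            "autoDelete": True,
            "initializeParams": {"sourceImage": image},
        }],
        "networkInterfaces": [{"network": "global/networks/default"}],
    }
    return url, body
```

Note that the zone appears both in the URL and in the machine type path; a mismatch between the two is one way the zone-selection mistakes mentioned above show up.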
