Databases

Dbi-Services

Image Credit: Dbi-Services

My journey to KubeCon 2025 – Day 1

- Today marks the author's first day at KubeCon 2025 in London, where excitement and buzz around topics like HAProxy, NGINX, and Kubernetes are already evident.

- The event, which expects 12,000 to 15,000 attendees, is described as a unique, large-scale gathering in the IT space.

- Keynote speakers highlighted integrating AI and LLMs into applications to improve the user experience and manage system complexity more effectively.

- Initiatives like Microsoft's Headlamp aim to simplify Kubernetes adoption and usage with features such as in-cluster web portals and Kubernetes Desktop.

- The Linux kernel is evolving by integrating Rust alongside C to improve safety, code maintainability, and security.

- A notable use-case presented involved AI-driven sign language interpretation and recognition, showcasing the role of technology in improving lives.

- The event also featured many booths representing open source projects, and attendees enjoyed swag from companies such as Red Hat.

- Updates on the Certified Kubernetes Administrator (CKA) exam were shared, noting that a new, more difficult version of the exam has been released.

- Resources like killer.sh and KodeKloud were recommended for exam preparation.

- The author also had insightful discussions at booths, including exploring bootable containers with Red Hat and discussing potential project collaborations.

- The post ends with a promise to continue the KubeCon journey in the next blog post.

Medium

Image Credit: Medium

When a Row Lock Becomes a Gap Lock: My Real-Life MySQL Adventure

- After a release, the author faced slow queries and a growing number of RDS sessions.

- After debugging and examining the codebase, the author traced the problem to InnoDB gap locks.

- Gap locks prevent other transactions from inserting a row into a specific range until the current transaction completes.

- Filtering on an indexed column lets MySQL lock only the relevant gap. Without a usable index, InnoDB scans and locks far more rows and gaps, needlessly blocking other transactions.
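The indexing point can be illustrated with a deliberately simplified toy model. This is not InnoDB's actual locking algorithm. The model treats the spaces between adjacent index keys as gaps and lets a locking range scan lock the gaps it overlaps. It also assumes that a scan with no usable index touches, and therefore locks, every gap. The function names and sample keys are hypothetical. Real InnoDB next-key locking also locks the index records themselves and often a neighboring gap at the range boundary, so it blocks somewhat more than this model shows.

```python
import bisect
from typing import List, Optional, Set, Tuple

# Toy model only: a gap is the open interval between two adjacent index keys;
# None stands for minus/plus infinity at either end of the index.
Gap = Tuple[Optional[int], Optional[int]]


def gaps(keys: List[int]) -> List[Gap]:
    """All gaps in a sorted index, including the two open-ended ones."""
    bounds: List[Optional[int]] = [None, *sorted(keys), None]
    return list(zip(bounds, bounds[1:]))


def gap_of(keys: List[int], new_key: int) -> Gap:
    """The gap that a newly inserted key would fall into."""
    s = sorted(keys)
    i = bisect.bisect_left(s, new_key)
    lower = s[i - 1] if i > 0 else None
    upper = s[i] if i < len(s) else None
    return (lower, upper)


def locked_gaps(keys: List[int], lo: int, hi: int, indexed: bool = True) -> Set[Gap]:
    """Gaps locked by a locking range scan on lo..hi (simplified assumption)."""
    all_gaps = gaps(keys)
    if not indexed:
        # No usable index: the scan visits every row, so every gap is locked.
        return set(all_gaps)

    def overlaps(g: Gap) -> bool:
        # The open interval (a, b) intersects the closed range [lo, hi].
        a, b = g
        return (a is None or a < hi) and (b is None or b > lo)

    return {g for g in all_gaps if overlaps(g)}


def insert_blocked(keys: List[int], new_key: int, lo: int, hi: int,
                   indexed: bool = True) -> bool:
    """Would an INSERT of new_key wait on the range lock held on lo..hi?"""
    return gap_of(keys, new_key) in locked_gaps(keys, lo, hi, indexed)


if __name__ == "__main__":
    keys = [10, 20, 30, 40]
    print(insert_blocked(keys, 25, 20, 30))                 # True: inside the locked range
    print(insert_blocked(keys, 35, 20, 30))                 # False: a different gap
    print(insert_blocked(keys, 35, 20, 30, indexed=False))  # True: full scan locked every gap
```

With an index, only the insert into the scanned range waits. Without one, even an unrelated insert waits, which matches the kind of session pile-up described above.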

Securityaffairs

Image Credit: Securityaffairs

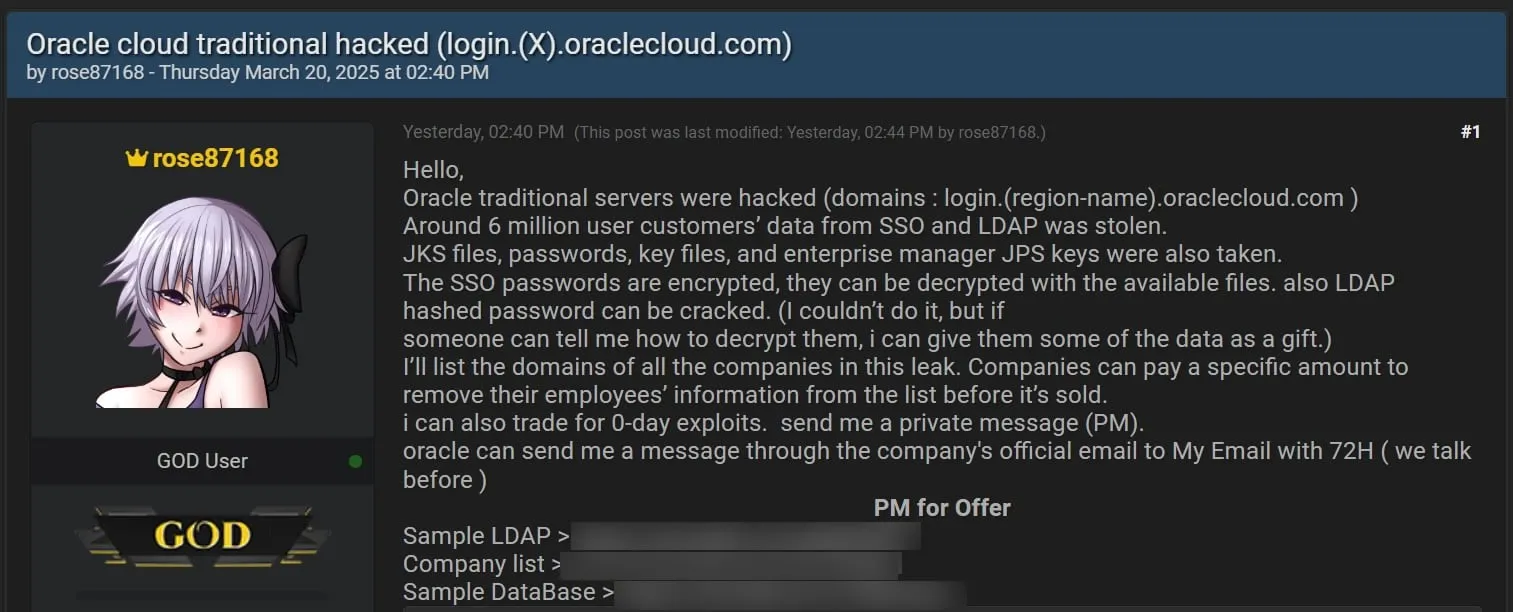

Oracle privately notifies customers of Cloud data breach

- Oracle confirms a cloud data breach, quietly informing customers while downplaying its impact.

- A threat actor claims to possess millions of data lines tied to over 140,000 Oracle Cloud tenants.

- The hacker has published 10,000 customer records as proof of the hack.

- Oracle privately notifies customers of the breach, denying that any customer data was compromised.

Siliconangle

Image Credit: Siliconangle

Mapping Jensen’s world: Forecasting AI in cloud, enterprise and robotics

- The shift in computing architectures is creating a world where data is transformed into content using tokens for value and real-time action, revolutionizing all aspects of the computing stack.

- The three AI opportunity vectors highlighted by Jensen Huang are AI in the cloud, in the enterprise, and in the real world, and a forecasting methodology was developed to track these disruptive markets.

- Expectations for IT spending growth have softened to 3.4% for the year, below 2024 levels, amid market uncertainty such as ongoing tariff concerns and falling markets.

- AI spending remains a priority for IT decision makers, with a majority continuing or accelerating their AI initiatives to stay competitive, despite public policy pressures around AI.

- The data center supercycle forecast shows accelerated computing growing at a 23% compound annual growth rate (CAGR) to reach $1 trillion by the early 2030s, driven by a shift toward AI-driven infrastructure.

- Nvidia's focus on AI opportunities in public cloud, on-premises enterprise, and the real world indicates a dramatic rise in accelerated computing, reshaping IT spending patterns.

- A new forecasting methodology based on Volume, Value, and Velocity captures the rise of disruptive AI technologies and the accelerating adoption cycles that push spending toward AI-optimized infrastructure.

- Cloud dominates early AI adoption due to advanced tooling, consumer-driven use cases, and immediate ROI, while on-premises enterprise AI adoption ramps up more gradually.

- Robotics presents significant AI opportunities, with single-purpose robots demonstrating near-term value in automation, while humanoid robots face more complex adoption curves.

- Accelerated computing is driving AI into every layer of technology, from hyperscale clouds to distributed environments, marking a fundamental shift in computing architectures.
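To put the 23% CAGR in perspective, compound growth at a constant annual rate r turns a starting value V_0 into V_t after t years. The summary gives no starting value, so only the growth multiples and the doubling time are worked out here:

```latex
\begin{aligned}
V_t &= V_0\,(1 + r)^t, \qquad r = 0.23 \\
(1.23)^{5} &\approx 2.82, \qquad (1.23)^{10} \approx 7.93 \\
t_{\text{double}} &= \frac{\ln 2}{\ln 1.23} \approx 3.35 \text{ years}
\end{aligned}
```

At that rate, a market roughly doubles every 3.35 years and grows almost eightfold in a decade.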

Dev

Image Credit: Dev

One SQL Query That Could Destroy Your Entire Database (And How Hackers Use It)

- SQL Injection attacks are a common and dangerous threat in cybersecurity, allowing attackers to bypass logins and access sensitive data.

- Attackers can bypass login mechanisms using SQL Injection by exploiting vulnerable login scripts.

- By using UNION to append the results of a second query, attackers can dump sensitive data such as credit card numbers from other tables.

- SQL Injection can also run destructive queries, such as deleting an entire database with a single injected line.

- Prevention methods include using parameterized queries, input validation, and avoiding dynamic SQL query building.

- SQL Injection can also lead to remote command execution when the database exposes OS-level features (for example, SQL Server's xp_cmdshell), giving attackers control of the underlying operating system.

- Experts emphasize the importance of treating user input as hostile to prevent SQL Injection vulnerabilities.

- Mitigating SQL Injection risks involves disabling features like xp_cmdshell and following least privilege principles for database accounts.

- SQL Injection attacks are favored by attackers due to their ease of execution and potent impact on databases and systems.

- Key takeaways: use parameterized queries, validate inputs, audit permissions, and practice attacks only in safe, simulated environments.
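The following is a minimal sketch of the login bypass and the parameterized-query fix. It uses Python's built-in sqlite3 module rather than whatever database the article targets. The users table, the alice account, and the function names are hypothetical. The payload closes the username string literal and uses `--` to comment out the password check.

```python
import sqlite3


def make_users_db() -> sqlite3.Connection:
    """In-memory demo database with one user.

    The password is stored in plaintext only to keep the sketch short;
    real systems store salted password hashes.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT PRIMARY KEY, password TEXT NOT NULL)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    conn.commit()
    return conn


def login_vulnerable(conn: sqlite3.Connection, username: str, password: str) -> bool:
    # UNSAFE: user input is spliced directly into the SQL text.
    sql = f"SELECT 1 FROM users WHERE username = '{username}' AND password = '{password}'"
    return conn.execute(sql).fetchone() is not None


def login_safe(conn: sqlite3.Connection, username: str, password: str) -> bool:
    # SAFE: '?' placeholders pass the values separately from the SQL text,
    # so quotes and comment markers in the input are treated as plain data.
    sql = "SELECT 1 FROM users WHERE username = ? AND password = ?"
    return conn.execute(sql, (username, password)).fetchone() is not None


if __name__ == "__main__":
    conn = make_users_db()
    payload = "alice' --"  # closes the string literal, then comments out the password check
    print(login_vulnerable(conn, payload, "wrong"))  # True: login bypassed
    print(login_safe(conn, payload, "wrong"))        # False: no user has that literal name
    print(login_safe(conn, "alice", "s3cret"))       # True: normal login still works
```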

Hackernoon

Image Credit: Hackernoon

UUID Makes Everything Better… Doesn’t It?

- Using UUIDs can have downsides such as performance degradation, index bloat, and maintenance challenges.

- UUIDs suit distributed systems in which nodes must generate unique IDs locally, without coordinating with each other, while still avoiding collisions.

- Generating UUIDs upfront can be beneficial for scenarios like mobile apps requiring immediate identifiers.

- Random (version 4) UUIDs are hard to guess, which makes them a good fit for public APIs or magic links.

- They also hide insertion order, so users cannot infer database size or insertion frequency from them.

- Common pitfalls of UUIDs include larger index sizes, fragmentation, and decreased insertion and read performance.

- Sequential integer IDs such as BIGINT may offer better performance and smaller indexes in certain situations.

- A hybrid approach combining sequential IDs internally and UUIDs externally can balance efficiency and security.

- UUIDs do not automatically ensure optimal sharding and require a thoughtful strategy for data distribution.

- Ultimately, choosing between UUIDs and sequential IDs should be based on specific system requirements to maintain database efficiency.
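One way to sketch the hybrid approach uses SQLite through Python's sqlite3 module. A sequential integer primary key handles internal joins and indexes, and a random UUIDv4 `public_id` column is the only identifier exposed to clients. The orders schema and the function names are hypothetical.

```python
import sqlite3
import uuid
from typing import Optional


def make_orders_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE orders (
            id        INTEGER PRIMARY KEY,   -- internal: compact, sequential, used for joins
            public_id TEXT NOT NULL UNIQUE,  -- external: random UUID shown in APIs and links
            item      TEXT NOT NULL
        )
        """
    )
    return conn


def create_order(conn: sqlite3.Connection, item: str) -> str:
    """Insert an order: SQLite assigns the next integer id, we generate the UUID."""
    public_id = str(uuid.uuid4())
    with conn:  # commit on success, roll back on error
        conn.execute("INSERT INTO orders (public_id, item) VALUES (?, ?)", (public_id, item))
    return public_id


def find_order(conn: sqlite3.Connection, public_id: str) -> Optional[str]:
    """Look up an order by its public UUID; the integer id never leaves the service."""
    row = conn.execute("SELECT item FROM orders WHERE public_id = ?", (public_id,)).fetchone()
    return row[0] if row else None


if __name__ == "__main__":
    conn = make_orders_db()
    ref = create_order(conn, "book")
    print(ref)                    # random UUID string, different on every run
    print(find_order(conn, ref))  # book
```

The UUID is stored as TEXT only to keep the sketch short. A production schema would more typically use a 16-byte binary column or a native type such as PostgreSQL's `uuid`.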

Medium

Image Credit: Medium

Spring Boot Is Too Bloated for Microservices

- Spring Boot apps are known for their simplicity and rapid bootstrapping, but that simplicity comes with a significant amount of hidden complexity.

- The simplicity of Spring Boot is achieved by hiding the complexity, resulting in the microservice carrying a lot of dependencies.

- Spring Boot apps are often slower and consume more memory than services built with Go, Node.js, or Micronaut.

- Spring Boot apps pull in entire ecosystems of functionality, even though only a fraction is used, leading to architectural debt and performance issues.

Medium

Image Credit: Medium

Lombok Is a Crutch — You’re Writing Java Wrong

- Lombok might be doing more harm than good by encouraging developers to ignore good design and lean on shortcuts.

- Java's verbosity is one of the main reasons for Lombok's popularity, as it reduces the ceremony of writing boilerplate code with annotations.

- However, Lombok does not address the root problem of writing excessive boilerplate-heavy classes in the first place.

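- Lombok's @Data annotation, often applied without much thought, generates setters for every non-final field along with getters, equals/hashCode, and toString. This can expose mutable internal state and violate object-oriented principles such as encapsulation.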

Insider

Image Credit: Insider

Stargate developer Crusoe could spend $3.5 billion on a Texas data center. Most of it will be tax-free.

- Data center developer Crusoe could receive an 85% property tax break on its Texas data center campus.

- The tax abatement agreement requires Crusoe to spend at least $2.4 billion of its targeted $3.5 billion investment.

- The first Stargate data center, part of a joint venture between OpenAI, Oracle, and SoftBank, is located in Abilene, Texas.

- The abatement also requires Crusoe to create 357 full-time jobs with minimum salaries of $57,600.

Amazon

Image Credit: Amazon

Working with OEM Agent software for Amazon RDS for Oracle

- Amazon RDS for Oracle is a managed service that simplifies setting up and managing Oracle databases in the cloud.

- Oracle Enterprise Manager (OEM) is a monitoring tool for Oracle databases and applications, offering performance tuning and management.

- OEM Agent in Amazon RDS for Oracle facilitates monitoring and management by communicating with Oracle Enterprise Manager Cloud Control.

- The article outlines scenarios that affect OEM Agents on Amazon RDS for Oracle, with an emphasis on version compatibility and the impact on historical metrics data.

- Configuring the OEM Management Agent on an RDS instance involves adding the OEM Agent option to a custom option group and configuring the OMS details.

- Amazon RDS for Oracle minor version upgrades do not impact OEM Management Agent functionality, as they focus on database patch set updates.

- OEM Agent upgrades on RDS for Oracle may lead to plugin version mismatch and historical performance data loss, requiring careful consideration.

- After a Multi-AZ failover, the OEM Agent keeps working without reconfiguration because data is continuously replicated between the primary and standby instances.

- Renaming RDS instances can cause communication disruptions between OMS and OEM Agents, necessitating reconfiguration and potential data loss.

- Restoring RDS instances from backups or snapshots may impact OEM Agents differently based on version compatibility, requiring attention to maintain connectivity.

Dbi-Services

Image Credit: Dbi-Services

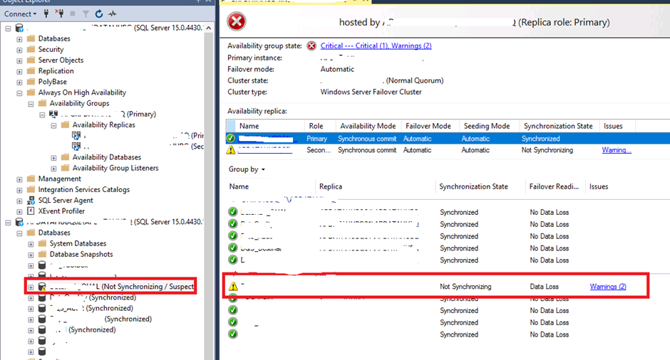

SQL Server AlwaysOn – Alert database Not Synchronizing / Suspect

- An alert was received about a database in an AlwaysOn cluster with the state Not Synchronizing / Suspect.

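- The error log showed that a database file stored on a network share could not be opened, which left the database in the Suspect state.

- SQL Server allows database files to be created on network shares, but doing so causes problems in a high-availability setup that uses an AlwaysOn availability group (AG) with automatic seeding.

- The consequences include growth of the transaction log (Tlog) file, since the log cannot be truncated while the database is not synchronizing, and a potential disk-full alert.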

Javarevisited

Image Credit: Javarevisited

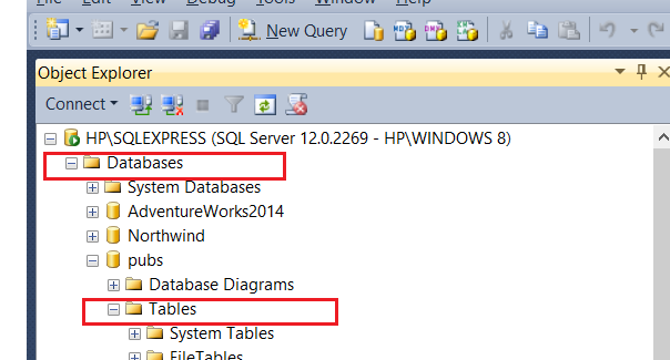

How to Add a Primary Key to a New or Existing Table in SQL Server (with Example)

- To add a primary key to an existing table in SQL Server, use the ALTER TABLE statement with an ADD CONSTRAINT ... PRIMARY KEY clause.

- Sometimes a table lacks a primary key because no column is both NOT NULL and unique.

- If no existing column is suitable, an identity column can serve as the primary key.

- Composite primary keys can be created by combining multiple columns.
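The article targets SQL Server, where adding the key is a single ALTER TABLE ... ADD CONSTRAINT ... PRIMARY KEY statement. The sketch below uses Python's built-in sqlite3 module instead so that it runs anywhere. Because SQLite's ALTER TABLE cannot add a primary key, the sketch shows the composite-key case by rebuilding a hypothetical order_items table inside one explicit transaction. The table, column, and function names are assumptions.

```python
import sqlite3


def make_legacy_table(conn: sqlite3.Connection) -> None:
    """Hypothetical existing table that was created without a primary key."""
    conn.execute(
        "CREATE TABLE order_items ("
        "order_id INTEGER NOT NULL, line_number INTEGER NOT NULL, sku TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO order_items VALUES (?, ?, ?)",
        [(1, 1, "A-100"), (1, 2, "B-200"), (2, 1, "A-100")],
    )
    conn.commit()


def add_composite_pk(conn: sqlite3.Connection) -> None:
    """Rebuild order_items with PRIMARY KEY (order_id, line_number).

    Uses the create-copy-drop-rename pattern because SQLite's ALTER TABLE
    cannot add a primary key to an existing table.
    """
    # SQLite allows NULLs in ordinary PRIMARY KEY columns, so NOT NULL is explicit.
    try:
        conn.executescript(
            """
            BEGIN;
            CREATE TABLE order_items_new (
                order_id    INTEGER NOT NULL,
                line_number INTEGER NOT NULL,
                sku         TEXT NOT NULL,
                PRIMARY KEY (order_id, line_number)
            );
            INSERT INTO order_items_new (order_id, line_number, sku)
                SELECT order_id, line_number, sku FROM order_items;
            DROP TABLE order_items;
            ALTER TABLE order_items_new RENAME TO order_items;
            COMMIT;
            """
        )
    except sqlite3.Error:
        # For example, existing rows already violate the new key: undo the partial rebuild.
        conn.rollback()
        raise


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    make_legacy_table(conn)
    add_composite_pk(conn)
    try:
        conn.execute("INSERT INTO order_items VALUES (1, 1, 'C-300')")
    except sqlite3.IntegrityError as exc:
        print("rejected:", exc)  # the (order_id, line_number) pair (1, 1) already exists
```

Each order line is identified by the pair of columns, so the same sku can appear in different orders while a duplicate (order_id, line_number) pair is rejected.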

Hackaday

Image Credit: Hackaday

This Week in Security: Target Coinbase, Leaking Call Records, and Microsoft Hotpatching

- A recent GitHub Actions supply chain attack targeted Coinbase. It started in the spotbugs/sonar-findbugs repository and exploited the pull_request_target workflow trigger, leading to leaked secrets and access tokens.

- ZendTo, a file-sharing platform, was found to have critical vulnerabilities, including a PHP exec() security flaw and issues with legacy MD5 passwords, potentially allowing code execution and password bypass.

- Verizon's Call Filter iOS app had a security flaw in the callLogRetrieval endpoint, allowing unauthorized access to call records using JSON Web Tokens, which was promptly addressed by Verizon.

- Nim's db_postgres module's parameterization method was found to not truly prevent SQL injection attacks, highlighting potential vulnerabilities in the Nim language's handling of SQL queries.

- Oracle Cloud Classic, which is distinct from Oracle Cloud, suffered a breach, and more details are emerging about a potential data leak and Oracle's response to the incident.

- Microsoft has introduced in-memory security patching for Windows 11 Enterprise in the 24H2 update, providing hotpatching for security updates on supported machines.

- GreyNoise researchers observed increased scanning for Palo Alto device login interfaces in March, possibly indicating preparations for an attack on Palo Alto devices.

- ZDI highlighted Binary Ninja's use in finding use-after-free vulnerabilities and showcased an electric car simulator capable of interacting with real charging stations safely.

Alvinashcraft

Dew Drop – April 4, 2025 (#4397)

- Top links include updates on Babylon.js 8.0, Pluralsight benefits, and speculation about Microsoft's 50th-anniversary event.

- Articles cover topics like scalable document signing, Azure tasks in VS Code, C# 14 key features, and AI model updates.

- In the design and testing realm, discussions range from refactoring methods to audio designers revolutionizing digital experiences.

- Content related to mobile, IoT, and game development includes insights on visualizing coal consumption trends and Raspberry Pi projects.

- Screencasts and videos cover writing HTML efficiently, an interview with Microsoft CEO Satya Nadella, and the .NET MAUI community standup.

- Podcasts feature talks on personal branding, AI, and agile methodologies, while community events discuss vulnerability impacts and Imagine Cup finalists.

- Database topics explore SQL Server stored procedures, Oracle Database features, and database security in DevSecOps.

- The SharePoint and M365 section includes updates on SharePoint roadmap pitstops and Microsoft 365 certification controls.

- PowerShell articles delve into remoting in workgroup environments and announce the Warp Preview for PowerShell.

- Miscellaneous articles touch on Windows 11 builds, tariffs' impact, new tech releases like Rust 1.86.0, and the availability of a miniature Windows 365 Link PC.

- Daily reading lists, a geek shelf recommendation, and more link collections provide a variety of tech-related insights and resources.

TechJuice

Image Credit: TechJuice

Government Issues Cybersecurity Warning Following Alleged Oracle Cloud Data Breach

- The National Computer Emergency Response Team (NCERT) has issued an urgent advisory regarding an alleged data breach in Oracle Cloud, raising concerns about data security and unauthorized access.

- A hacker, known as 'rose87168,' claimed to have accessed Oracle Cloud servers, obtaining over six million records containing Single Sign-On (SSO) login credentials.

- Experts believe that the breach likely exploited vulnerabilities in SSO authentication and LDAP setups, posing risks of credential-stuffing attacks and potential identity theft.

- Oracle's response to the breach has drawn criticism, with concerns raised about transparency and prioritization of corporate reputation over customer security.
