Neural Networks News

Insider

Jimmy Ba, founding member of Elon Musk's xAI, redefines AI with deep neural networks

- Ba has a background in deep neural networks, which are artificial-intelligence models that mimic pathways in the human brain.

- He is an assistant professor in the University of Toronto's machine-learning group and was a research fellow at Meta.

- Ba believes that future AI technology should consider ethics, privacy, fairness, and human values to transform our daily lives and society profoundly.

Medium

Artificial Intelligence Approaches: Different Schools of Thought and Interpretations

- Symbolic AI, or Good Old-Fashioned AI, was the dominant paradigm in AI research from the 1950s to the 1980s and holds that intelligence is attained by manipulating symbols and logic.

- The Sub-symbolic AI approach relies on statistical and numerical methods that learn patterns from the data.

- The Connectionist approach excels in pattern recognition and classification tasks and involves neural network-based Artificial Intelligence.

- In the Logical approach, reasoning follows formal logic systems, so results are meaningful and verifiable.

- Biologically Inspired AI, based on mimicking structures and processes of biological systems, focuses on creating general intelligence.

- The Engineering-Focused Design mainly focuses on solving specific tasks efficiently using domain-specific knowledge and heuristics.

- Debate continues over how to approach Artificial Intelligence: logical reasoning versus deep learning, and "neat" versus "scruffy" methods.

- The article argues that building truly intelligent machines will require researchers to take a hybrid approach.

- AI is still evolving, and it is crucial for policymakers, ethicists, researchers, and the public to understand the different approaches and methods being used.

- That understanding will shape how AI systems affect society and how AI research progresses.

Medium

Fundamental Concepts of Artificial Intelligence

- Machine learning, a term coined in 1959, is a subfield of AI concerned with creating programs that perform tasks without explicit instructions.

- ML is divided into three categories: Supervised Learning, which learns from labelled data; Unsupervised Learning, which identifies patterns in unlabelled data; and Reinforcement Learning, which learns from interacting with the environment.

- Deep Learning (DL), inspired by the workings of the human brain, involves the use of artificial neural networks to analyse different factors of data.

- Natural Language Processing (NLP) is another AI field that involves machine interaction with human language.

- Computer Vision (CV) is a subset of AI that lets computers perceive the world around them by deriving high-level understanding from digital images and videos.

- Robotics is a branch of AI that uses engineering to design machines that can interact with the physical world, often integrating with other AI fields such as CV and NLP.

- Expert Systems are AI programs that imitate an expert’s decision-making skills; they were among the first successful types of AI software.

- Weak AI solves specific problems, whereas Strong AI would match or outperform human intelligence across a range of cognitive tasks.

- The Turing Test is a practical method to determine machine intelligence that involves a human interrogator and two participants: a human and a machine.

- Artificial consciousness, or machine consciousness, refers to non-biological systems exhibiting consciousness, which raises the question of whether such systems should have rights and moral standing.

Medium

Neural Networks vs. Support Vector Machines (SVM): Which Model Should You Choose?

- Neural Networks and Support Vector Machines (SVM) are popular algorithms for machine learning.

- Neural Networks are flexible, capable of handling large datasets, and learn non-linear relationships.

- An SVM looks for the separating hyperplane that maximizes the margin between classes, which suits high-dimensional data and accuracy-critical tasks.

- Neural Networks are used for tasks like image recognition, while SVMs are useful for classifying small but high-dimensional datasets.
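
To make the margin idea in the SVM bullet concrete, here is a minimal sketch in plain Python. It does not train an SVM; it only measures the geometric margin of two hand-picked hyperplanes on a made-up, linearly separable dataset. The function name `geometric_margin` and all data values are assumptions chosen for illustration, not taken from the article.

```python
import math


def geometric_margin(w, b, points, labels):
    """Smallest signed distance from any point to the hyperplane w.x + b = 0.

    The result is positive only if every point lies on the side that
    matches its label (+1 or -1).
    """
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm
        for x, y in zip(points, labels)
    )


# Toy data (hypothetical): two points per class along the first axis.
points = [(0.0, 0.0), (1.0, 0.0), (3.0, 0.0), (4.0, 0.0)]
labels = [-1, -1, 1, 1]

# Two candidate separating hyperplanes: x = 2 and x = 1.5.
margin_centered = geometric_margin((1.0, 0.0), -2.0, points, labels)
margin_offset = geometric_margin((1.0, 0.0), -1.5, points, labels)

print(margin_centered, margin_offset)  # 1.0 0.5
```

Both hyperplanes classify every point correctly, but the centered one leaves twice the distance to the nearest point. A maximum-margin classifier would prefer it for that reason.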

Read Full Article

20 Likes

Hackernoon

418

Image Credit: Hackernoon

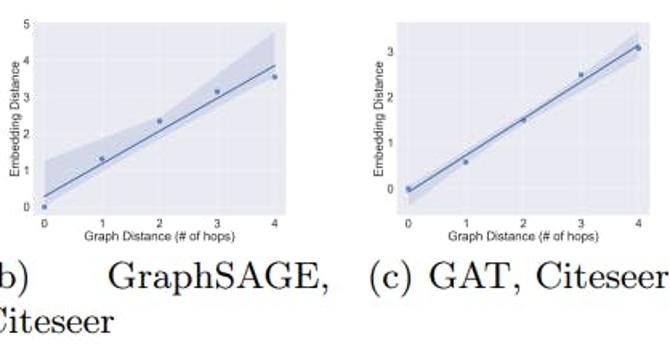

Extending GNN Learning: 11 Additional Framework Applications

- The proposed framework for Graph Neural Networks (GNNs) learning has potential applications in various areas.

- One application is in fair k-shot learning, which involves training a model to classify new examples based on a small number of labeled examples.

- Another application is ensuring fair predictive performance of GNNs for specified structural groups by penalizing parameters with low distortion between structural distance and embedding distance.

- Further research is needed to explore the actual application results of the framework.

Read Full Article

25 Likes

Hackernoon

321

Image Credit: Hackernoon

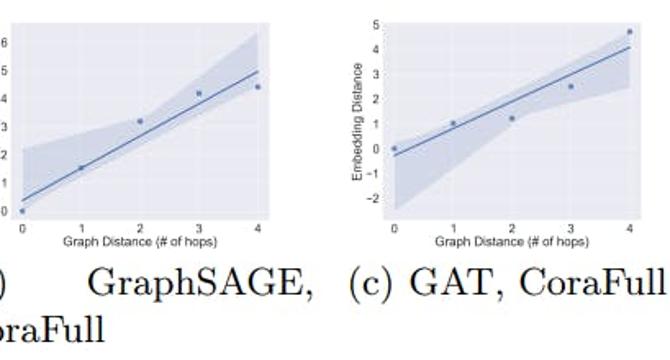

Comprehensive Overview of GNN Experiments: Hardware, Hyperparameters, and Findings

- This news article provides a comprehensive overview of GNN experiments, including hardware, hyperparameters, and findings.

- The experiments were conducted on a machine with two Intel Xeon Gold CPUs and four NVIDIA Tesla V100 GPUs.

- The hyperparameters were kept consistent across different models, and each experiment was trained until convergence.

- Additional experiments were conducted on datasets such as Citeseer, Cora Full, and OGBN-arxiv, confirming the consistency of the theoretical structures.

Hackernoon

Unfair Generalization in Graph Neural Networks (GNNs)

- The authors discuss unfair generalizations in Graph Neural Networks (GNNs).

- They present an outline of the proof structure for Theorem 2.

- The theorem aims to show the existence of a constant independent of the property of each test group.

- The paper is available on arXiv under a CC BY 4.0 license.

Medium

Simulating Artificial Consciousness and Computing With Sentient AI

- The article discusses the concept of Thinkable Programming, which involves using a self-reinforcing neural network to create a thinking program.

- The author divides the algorithm for sentient intelligence into four sections: Reason, Cognition, Intelligence, and Sentience.

- The article also mentions the potential of creating an AI compiler and AI OS to harness the power of sentient AI and revolutionize computing.

Medium

Boosting still performing better than Deep Learning — Try NODE

- Deep learning faces challenges when applied to tabular data, with gradient boosted decision trees (GBDT) remaining prominent.

- Neural Oblivious Decision Ensembles (NODE) is a novel architecture that aims to enhance deep learning on tabular data.

- Previous attempts to integrate deep learning with decision trees have faced limitations and lack universal effectiveness.

- NODE combines differentiable oblivious decision trees (ODTs) with deep learning techniques to improve performance on tabular data.
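
As a rough illustration of what "differentiable oblivious decision tree" can mean, the sketch below contrasts a hard oblivious tree with a soft relaxation. In an oblivious tree every node at a given depth applies the same feature and threshold, so a depth-d tree is a lookup table with 2^d leaves. The soft version here uses a sigmoid as a stand-in for the choice function; that is an assumption made for simplicity and may differ from what NODE actually uses. The names `hard_odt`, `soft_odt`, and `temperature`, and all values, are hypothetical.

```python
import math
from itertools import product


def hard_odt(x, splits, leaves):
    """Oblivious tree: level i applies the same (feature, threshold) test
    to every node, so the test results form the bits of a leaf index."""
    index = 0
    for feature, threshold in splits:
        index = (index << 1) | int(x[feature] > threshold)
    return leaves[index]


def soft_odt(x, splits, leaves, temperature=1.0):
    """Relaxed tree: each test yields a probability instead of a bit, and
    the output is the probability-weighted average over all leaves."""
    probs = [
        1.0 / (1.0 + math.exp(-(x[f] - t) / temperature)) for f, t in splits
    ]
    output = 0.0
    for bits in product((0, 1), repeat=len(splits)):
        weight = 1.0
        index = 0
        for bit, p in zip(bits, probs):
            weight *= p if bit else 1.0 - p
            index = (index << 1) | bit
        output += weight * leaves[index]
    return output


# Depth-2 tree: test feature 0 against 0.5, then feature 1 against 2.0.
splits = [(0, 0.5), (1, 2.0)]
leaves = [10.0, 20.0, 30.0, 40.0]
x = (0.9, 1.0)

print(hard_odt(x, splits, leaves))                    # 30.0
print(soft_odt(x, splits, leaves, temperature=0.01))  # very close to 30.0
```

Because the soft output is a smooth function of the thresholds and leaf values, it can in principle be trained with gradient descent alongside other network layers. As the temperature shrinks, the soft tree approaches the hard one.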

Hackernoon

The HackerNoon Newsletter: Startups of The Year: How to Nominate (10/21/2024)

- Startups of The Year: How to Nominate - Nominate your favorite startup and boost their winning chance for Startups of the Year 2024.

- Comfort Has a Cost, and Its Currency is Regret - Learn the real cost of playing it safe and how chasing your passion can lead to a life of fulfillment.

- What Governor Newsom’s AI Deepfake Regulations Mean for the 2024 U.S. Election - Explore how California Governor Newsom's new laws targeting AI deepfakes aim to safeguard the 2024 election from misinformation and protect voter integrity.

- Understanding Topology Awareness in Graph Neural Networks - Explore the influence of topology awareness on the generalization performance of Graph Neural Networks (GNNs) in this comprehensive study.

Minis

Elon Musk thought Parag Agrawal was not the 'fire-breathing dragon' the platform needed

- Elon Musk and then-Twitter CEO Parag Agrawal got dinner together in March 2022.

- Musk came away from the meeting describing Agrawal as a nice guy, but not the "fire-breathing dragon" Twitter needed.

- That's according to an excerpt published in The Wall Street Journal from Walter Isaacson's forthcoming biography of Musk.

Minis

If we use Elon Musk's Neuralink, we'll be Avengers: Enam Holdings exec

- Enam Holdings Director, Manish Chokhani, believes that integrating Neuralink into our spinal cords, as proposed by Elon Musk, could lead us to possess abilities akin to the Avengers, making us superhuman.

- Chokhani sees AI as the ultimate frontier in human evolution, suggesting that as we continue to enhance our abilities, we are progressively moving towards becoming more superhuman.

- Chokhani highlights the potential of robotics, sensors, and computing power to replicate and enhance our limbs, effectively extending our nervous system and augmenting human capabilities.

Minis

Science competes with Neuralink with launch of new platform for accelerating medical device development

- Science Foundry, a new platform by Science, offers access to over 80 tools to help companies develop medical devices more easily.

- The cost of developing medical devices can be prohibitive for startups, but Science Foundry aims to reduce barriers to innovation.

- Science is part of the growing brain-computer interface industry and is working on a visual prosthesis called the Science Eye to restore visual input to patients with serious blindness.

- It aims to support other neurotechnology, medical technology, and quantum computing startups at a cost comparable to academic facilities but with added benefits.

Minis

Wall Street bets millions on new class of psychedelic drugs

- Wall Street investors are putting tens of millions of dollars into psychedelic drugs that could treat mental illness for a fraction of the cost of traditional therapy.

- Transcend Therapeutics, Gilgamesh Pharmaceuticals, and Lusaris Therapeutics have raised over $100 million since November to develop drugs for treating PTSD and depression.

- The companies' focus on more cost-effective psychedelic therapy coincides with a selloff in biotech stocks last year that dampened enthusiasm for hallucinogens' commercial potential.

Minis

Bezos and Gates-funded brain implant startup tests mind-controlled computing on humans

- Synchron is one of several companies working on brain-computer interface technology.

- The system developed by Synchron is implanted through blood vessels and enables patients to control technology with their thoughts.

- Synchron CEO Tom Oxley has stated that the technology helps patients engage in activities that they may have previously been unable to do.
