Neural Networks News

Minis

1y

Man in US developed 'uncontrollable' Irish accent after cancer diagnosis

- A man in North Carolina developed an uncontrollable Irish accent after being diagnosed with prostate cancer.

- The man had never visited Ireland and had no immediate family from there.

- The case was jointly studied and reported by Duke University and Carolina Urologic Research Center.

- Foreign accent syndrome (FAS) is a rare condition that can result from a neurological disorder, a brain injury, or a psychological disorder.

New brain chips may bend your mind in strange & troubling ways: Study

- Neuralink, a neurotech startup founded by Elon Musk, is working on implanting its skull-embedded brain chip in humans.

- Brain-computer interfaces (BCIs) are being developed to enable direct communication between the human brain and external computers, with medical uses such as helping paralyzed people communicate.

- BCIs are still in their infancy, and there are ethical concerns and potential negative effects to consider, such as dependency on the devices and changes to the sense of self.

- BCIs have moved beyond their early science-fiction depictions and are attracting significant private and military funding for both medical and non-medical uses.

Musk's Neuralink may have illegally transported pathogens, animal advocates say

- The Physicians Committee for Responsible Medicine (PCRM) has announced its intention to request a government investigation into Neuralink, a brain-implant firm owned by Elon Musk.

- The group claims to have evidence suggesting that brain implants, which may have carried infectious diseases, were removed from monkeys unsafely.

- PCRM alleges that these incidents occurred in 2019.

- The animal welfare group is calling for the government to investigate these claims and ensure that proper safety protocols are in place to protect both animals and human health.

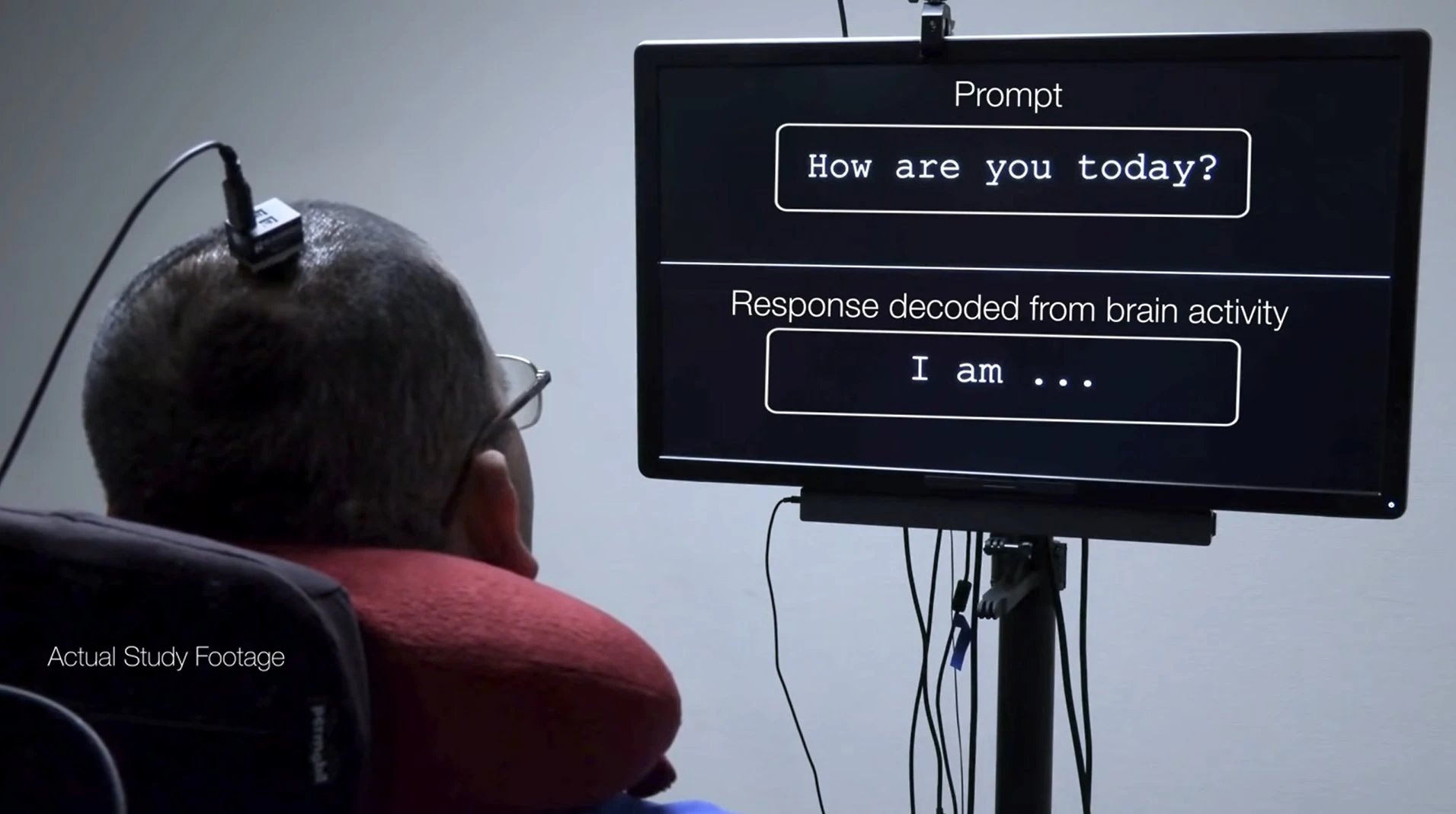

Brain implant communication sets new record of 62 words per minute

- Researchers at Stanford University in the US claim a new record for translating thoughts into words through a brain implant.

- A 67-year-old woman with amyotrophic lateral sclerosis (ALS) was able to communicate at a rate of 62 words per minute through the implant, 3.4 times faster than the previous record.
