Data Analytics News

Medium

Case Study: Smart Route Recommender for Bangalore Traffic

- A data-driven system has been developed to understand and predict traffic patterns in Bangalore, providing alternative route recommendations for commuters.

- Using Bangalore’s Traffic Pulse, a publicly available Kaggle dataset with 17,000 rows, a reliability index was calculated to measure road stability and volatility.

- The roads were categorized based on metrics related to speed deviation and congestion deviation, leading to the calculation of a Route Risk Score that balances traffic pain and unpredictability.

- Personalized route recommendations are provided to users based on their preferences, such as prioritizing predictability over traffic pain, enhancing the commuting experience in Bangalore.
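
The summary does not give the article's formulas, so the following is only a minimal sketch of how such a score could be assembled. The sample observations, the use of the coefficient of variation as the "deviation" metric, and the `predictability_weight` parameter are all assumptions made for illustration.

```python
from statistics import mean, pstdev

# Hypothetical per-road observations: (average speed in km/h, congestion level 0-100).
OBSERVATIONS = {
    "Road A": [(30, 60), (32, 58), (31, 61), (29, 62)],  # slow but steady
    "Road B": [(45, 30), (15, 90), (50, 25), (20, 85)],  # lighter on average, erratic
}

def road_metrics(samples):
    """Return (pain, unpredictability) for one road."""
    speeds = [speed for speed, _ in samples]
    congestion = [level for _, level in samples]
    # Traffic pain (assumed definition): mean congestion as a fraction of the maximum.
    pain = mean(congestion) / 100
    # Unpredictability (assumed definition): average coefficient of variation
    # of speed and congestion, i.e. how far readings stray from their mean.
    speed_cv = pstdev(speeds) / mean(speeds)
    congestion_cv = pstdev(congestion) / mean(congestion)
    return pain, (speed_cv + congestion_cv) / 2

def route_risk_score(samples, predictability_weight=0.5):
    """Blend pain and unpredictability; a higher weight favours predictable roads."""
    pain, unpredictability = road_metrics(samples)
    return (1 - predictability_weight) * pain + predictability_weight * unpredictability

def recommend(observations, predictability_weight=0.5):
    """Pick the road with the lowest risk score for the given preference."""
    return min(
        observations,
        key=lambda road: route_risk_score(observations[road], predictability_weight),
    )

print(recommend(OBSERVATIONS, predictability_weight=0.0))  # pain only
print(recommend(OBSERVATIONS, predictability_weight=0.8))  # predictability first
```

With this toy data, a commuter who only cares about average congestion is sent to the erratic road, while one who prioritizes predictability is sent to the steady one, which is the trade-off the bullets describe.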

Medium

224

Image Credit: Medium

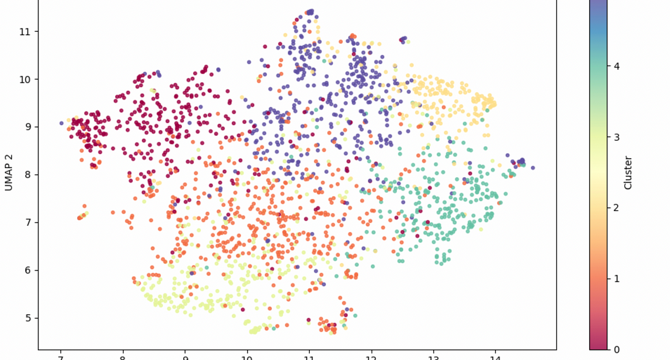

Case Study: An Unsupervised AI Pipeline to Cluster Tweets by Emotion and Meaning

- Tweets were processed and tagged with emotional tone using the facebook/bart-large-mnli model.

- Each tweet was converted into a numerical vector using the SentenceTransformers model to capture context and meaning.

- KMeans clustering was applied to detect latent structure in tweets based on the semantic embeddings.

- UMAP was used to visualize the tweet clusters and sentiment layout in a 2D space for further analysis.
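
The clustering step can be illustrated without the heavier models. The sketch below is a plain-Python version of the standard KMeans (Lloyd's) iteration run on toy 2-D vectors; those vectors merely stand in for sentence embeddings, and the fixed initial centroids are an assumption chosen to keep the run deterministic. It is not the article's code, which would more likely rely on a library implementation.

```python
from math import dist

# Toy 2-D vectors standing in for sentence embeddings (real ones have hundreds of dimensions).
EMBEDDINGS = [
    (0.10, 0.20), (0.20, 0.10), (0.15, 0.15),  # one group of similar "tweets"
    (0.90, 0.80), (0.80, 0.90), (0.85, 0.85),  # another group
]

def kmeans(points, initial_centroids, max_iters=100):
    """Lloyd's algorithm: alternate assignment and centroid update until stable."""
    centroids = [tuple(c) for c in initial_centroids]
    labels = []
    for _ in range(max_iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for i, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(old)  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return labels, centroids

labels, centroids = kmeans(EMBEDDINGS, initial_centroids=[EMBEDDINGS[0], EMBEDDINGS[3]])
print(labels)
```

In a full pipeline the points would be the embedding vectors produced for each tweet, and the resulting labels would be what a 2D projection colours by cluster.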

Medium

The Human Cost of Automation: Jobs Lost vs. Lives Improved

- Automation brings benefits like faster turnaround times, lower error rates, reduced costs, and new opportunities in emerging sectors.

- However, millions of jobs are at risk of automation by 2030, leading to psychological impacts, economic inequality, and social disruption.

- The focus should shift from job security to skill security, emphasizing lifelong learning, upskilling, and prioritizing human capabilities like creativity and empathy.

- The ethical dilemma lies in balancing efficiency gains from automation with societal well-being, ensuring technology uplifts humanity without leaving people behind.

Medium

109

Image Credit: Medium

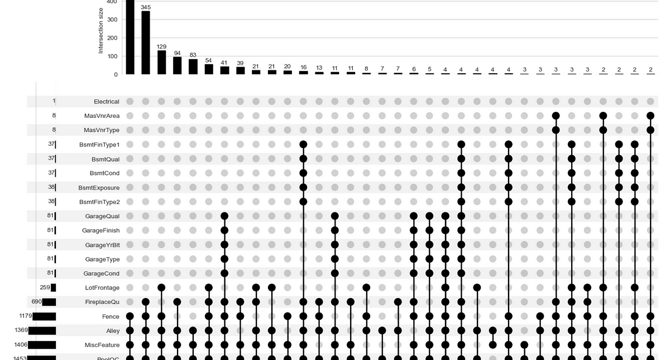

Handling Missing Values: A Comprehensive Guide

- Learn how to detect, diagnose, visualize, and impute missing values responsibly so that analyses stay honest and models stay robust.

- Investing time in rich visual diagnostics helps in avoiding one-size-fits-all imputations and preserving analysis integrity.

- Iterating through these steps produces a missing-data remediation plan, turning static visualizations into defensible strategies.

- Imputation methods should be chosen based on the type of model to prevent information loss or model bias, ensuring a statistically sound approach.
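
As a rough illustration of the detect-then-impute flow, the sketch below measures the missing rate per column and then applies median imputation while recording which values were filled in. The records, the column names, and the choice of the median are hypothetical; the guide itself may recommend different methods depending on the model.

```python
from statistics import median

# Hypothetical records; None marks a missing value.
ROWS = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 29, "income": None},
    {"age": 41, "income": 58000},
]

def missing_rates(rows):
    """Detect: fraction of missing values in each column."""
    columns = rows[0].keys()
    return {col: sum(r[col] is None for r in rows) / len(rows) for col in columns}

def impute_median(rows, column):
    """Impute one column with its observed median and flag the filled rows.

    Returns new rows; the input is left untouched so the raw data stays auditable.
    """
    observed = [r[column] for r in rows if r[column] is not None]
    fill = median(observed)
    imputed = []
    for r in rows:
        was_missing = r[column] is None
        new_row = dict(r)
        new_row[column] = fill if was_missing else r[column]
        # The indicator lets a downstream model see that the value was imputed.
        new_row[f"{column}_was_missing"] = was_missing
        imputed.append(new_row)
    return imputed

print(missing_rates(ROWS))
cleaned = impute_median(ROWS, "age")
print(cleaned[1])
```

Keeping the indicator column is one way to avoid silently hiding the missingness pattern from the model, which relates to the bias concern raised in the last bullet.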

Medium

Mastering Financial Literacy: The Key to Wealth, Stability, and Empowered Living

- Financial literacy is the ability to understand and apply financial skills such as personal financial management, budgeting, investing, and retirement planning.

- Financial literacy empowers individuals to make informed decisions, avoid debt traps, build credit, generate wealth, and plan for the long term.

- Core pillars of financial literacy include budgeting, saving, investing, debt management, retirement planning, and insurance and risk management.

- Real-life benefits of financial literacy include avoiding scams, better credit scores, improved saving and investing habits, early retirement possibilities, and financial security.

TheStartupMag

The Future of Financial Strategies in a Rapidly Evolving World

- Technology and globalization are influencing financial strategies, forcing organizations to innovate and stay agile to remain relevant and competitive.

- The role of technology is significant, with fintech innovations reshaping the sector through AI, machine learning, and real-time analytics.

- Globalization ties economies worldwide more closely together, emphasizing the need for robust risk management and adaptable financial strategies.

- Key trends like sustainable finance, digital currencies, and data-driven decisions are reshaping the financial landscape, offering opportunities for innovation and growth.

TechBullion

18 Surprising Ways Data Analytics Uncovered Hidden Revenue Opportunities

- Data analytics can uncover hidden revenue opportunities through unexpected insights and analysis across various industries.

- For example, analyzing delivery data and weather patterns led to a 43% increase in online orders for a seafood business during monsoon season.

- Identifying and fixing issues such as incomplete checkout processes in specific regions can lead to significant revenue growth by reaching untapped markets.

- Small changes in strategies, such as personalized email offers for repeat customers, can result in a 20% increase in repeat purchases and overall revenue.

- Analyzing customer behavior data can reveal unexpected growth opportunities, such as targeting specific user segments with personalized discounts.

- Data analysis can uncover hidden revenue streams, such as replacing underperforming assets with better alternatives, which led to a 13% boost in select portfolios.

- Unearthing trends like midnight purchases driven by unique content can result in new revenue streams, like the 'pajama conversion' audience.

- Optimizing regional messaging and checkout experiences based on data insights can lead to an 18% increase in conversions through partner/referral funnels.

- Analyzing user engagement data can reveal valuable insights, such as the impact of billing feature adoption on platform retention and revenue growth.

- Reallocating resources based on integrated reporting can lead to a 15-25% increase in revenue by leveraging mid-funnel campaigns.

- Uncovering anomalies and underutilized features can drive revenue growth and improve customer engagement, emphasizing the importance of leveraging data insights.

Medium

Your Brain, Hacked: Navigating the Next Frontier of Neurotechnology

- Neurotechnology is advancing rapidly, with companies investing in brain-computer interfaces to merge human cognition with AI.

- The ethical implications of neurotechnology include concerns about privacy, manipulation, and control over thoughts and emotions.

- Neurotech offers promises of enhancing capabilities but also raises questions about exploitation, control, and inequality.

- There is a growing need for establishing 'neurorights' to protect individuals from brain hacking and cognitive manipulation.

Medium

Beware the Proxy Trap: What Jeff Bezos Taught Me About Measuring What Matters

- Product management often relies on metrics for clarity, but Jeff Bezos warns against mistaking proxies for reality.

- User retention was the primary focus for a team managing a financial SaaS platform that supported over $1.5 billion in user accounts.

- Long-term user engagement, tied to achieving financial goals, was crucial for both user outcomes and business success.

- Implementing a Product Health Dashboard provided a deeper understanding of user behavior and shifted focus towards outcome-driven product health.

Medium

Building the Future with Data: Why Every Business Needs a Data Scientist on Board

- Businesses are actively seeking to hire data scientists to convert raw data into actionable insights as data grows in complexity and volume.

- Data scientists play a crucial role in analyzing and extracting real-time insights from data, providing predictive power, automation, optimization, and innovation to businesses across various industries.

- Data scientists offer valuable benefits to businesses such as predicting customer behavior, identifying inefficiencies, assessing risk, and uncovering market opportunities.

- Partnering with firms like Magic Factory can help businesses access skilled data scientists efficiently and cost-effectively to stay competitive in the data-driven age.

Medium

Case Studies on When Big Companies Chased the Wrong Vanity Metrics

- Wells Fargo fixated on the number of new accounts opened as a key metric, leading to unethical behavior and overlooking important indicators like customer satisfaction.

- Microsoft's Xbox division focused on total console sales as a benchmark, neglecting user engagement and service revenue. This approach faltered when compared to Sony's user-centric strategy.

- Both cases highlight the pitfalls of prioritizing vanity metrics over meaningful data that align with business sustainability and customer needs.

- Microsoft's shift to highlight monthly active users instead of total sales demonstrates the importance of refocusing on actionable metrics that drive long-term success.

Medium

Succinct Stage 2: From Learning Zero-Knowledge to Powering It

- Succinct introduces Stage 2, 'Prove with Us,' a major leap in decentralizing the proving layer of the blockchain.

- Stage 2 shifts community focus from learning to active participation by running prover nodes in the Succinct Prover Network.

- The initiative aims to build a scalable, secure, and verifiable blockchain future by involving the community in generating and verifying zero-knowledge proofs.

- Individuals can get involved by visiting the Succinct Blog, joining Discord, or following @SuccinctLabs for updates, enabling them to contribute to the world's first community-operated proving network.

VentureBeat

The $1 Billion database bet: What Databricks’ Neon acquisition means for your AI strategy

- The $1 billion acquisition of serverless PostgreSQL startup Neon by Databricks highlights the importance of databases for enterprise AI.

- Data is crucial for training AI, and without proper data, AI efforts often fail.

- Databricks' acquisition of Neon signifies the value of databases in the era of agentic AI and modern development practices.

- Neon's technology is built on the established PostgreSQL platform but takes a serverless approach suited to building AI applications.

- PostgreSQL, as an open-source relational database platform, is widely used and trusted for its stable features.

- Serverless PostgreSQL databases such as Neon offer ease of operations and allow for on-demand deployment, attracting developers who want to build applications quickly.

- Various cloud providers offer PostgreSQL services, including serverless options, making it a popular choice for AI applications.

- Databricks' acquisition of Neon complements their existing offerings and aims to build a developer and AI-agent-friendly database platform.

- Serverless PostgreSQL is seen as a foundational technology for AI and analytics, providing scalability and cost-effectiveness for AI projects.

- Neon's serverless PostgreSQL approach separates storage and compute, enabling automated scaling for AI projects.

- This acquisition suggests that a focus on serverless database capabilities is crucial for successful AI implementations, emphasizing the need for dynamic resource allocation.

Pymnts

Equifax Launches US-Based Business Record Data on Cloud

- Equifax has launched its B2bConnect SMB data on the Equifax Cloud, providing near real-time access to 67 million U.S.-based business records.

- B2bConnect enables B2B marketers to query data for identifying prospects, generate insights, and customize files for CRM or marketing automation platforms.

- The data includes demographics, business contacts, firmographics, and compliance features like data use rights and marketability flags.

- Equifax expects the cloud transition to drive innovation and cost savings by streamlining data access, accelerating product development, and enhancing predictive model development.

Pymnts

This Week’s B2B Innovation: AI Procurement, Digital Payments and Ongoing Pressures

- The B2B space is undergoing a radical transformation aimed at smoothing out legacy inefficiencies.

- Artificial intelligence (AI) is reshaping procurement practices, automation streamlines payment systems, and geopolitical factors affect small business sentiment in the B2B sector.

- A new B2B economy is emerging, driven by the infusion of AI into spend management, digital B2B payment acceleration, and global economic pressures on small businesses.

- AI is playing a prominent role in spend management, with Procurify's 'Spend Insights' and Coupa's acquisition of Cirtuo both aimed at improving efficiency and strategic decision-making.

- Accounts payable (AP) is evolving from a transactional role to a strategic financial insight hub, thanks to automation transforming processes and providing valuable insights.

- B2B payments are becoming smarter and faster, with innovations like FlexPoint automating complex transactions and partnerships like Cleo and Paystand streamlining B2B transactions.

- Efforts are focused on eliminating friction, enhancing security, and improving cash flow visibility across the B2B payments ecosystem.

- Small businesses are grappling with economic turbulence, with factors like rising tariffs, inflation, and geopolitical tensions impacting their operations and decision-making.

- Integrated B2B payment systems and digital tools offer some relief, but small businesses still face challenges in mitigating the broader economic headwinds.

- The evolving B2B economy in 2025 emphasizes the importance of embracing technology, adapting to uncertainty, and building resilient networks of value for success.
