Neural Networks News

Medium

Generative AI series: Getting started with the tech for Non-AI people

- Generative AI can generate various types of data, such as audio, code, images, text, simulations, 3D objects, and videos.

- Neural network models are the foundation of Generative AI; they are loosely modeled on how the human brain processes information.

- Transformer models, like GPT-3 and LaMDA, are neural networks that excel at sequence transduction, that is, transforming input sequences into output sequences.

- The GAN (Generative Adversarial Network) architecture comprises two models, a Generator and a Discriminator, which are trained against each other so that the Generator learns to produce plausible outputs resembling the original dataset.
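
The Generator/Discriminator interplay in the last bullet can be sketched with the two loss terms commonly used to train GANs. This is a minimal illustration, not code from the article: the function names are hypothetical, and `d_real` / `d_fake` are assumed to be the Discriminator's probability that a real or a generated sample is real.

```python
import math

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy: real samples should score near 1, generated ones near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: small when the Discriminator is fooled."""
    return -math.log(d_fake)

# A confident, correct Discriminator has a lower loss than an unsure one.
sharp = discriminator_loss(d_real=0.9, d_fake=0.1)
unsure = discriminator_loss(d_real=0.6, d_fake=0.4)

# The Generator's loss falls as its samples are scored as more realistic.
fooled = generator_loss(d_fake=0.9)
caught = generator_loss(d_fake=0.1)
```

Training alternates between lowering the first loss (updating the Discriminator) and lowering the second (updating the Generator), which is what makes the setup adversarial.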

Analyticsinsight

How to Write Neural Networks in R

- Neural networks in R are powerful tools for advanced data analysis and machine learning.

- Neural networks consist of interconnected nodes that process input data and generate predictions.

- To write neural networks in R, set up the necessary environment and libraries like keras and tensorflow.

- Follow the steps of data preparation, model architecture, compiling, training, and evaluation for building robust neural network models.

Medium

Unveiling the Dominance of Data Science in Today’s Market.

- Data science serves as the conduit through which organizations can extract meaningful insights from vast volumes of data, enabling informed decision-making and strategic planning.

- Data science fuels the development of cutting-edge technologies that redefine the way we interact with the world, driving innovation across diverse sectors.

- By analyzing customer preferences, behavior patterns, and feedback, businesses can create bespoke products and services that resonate with their target audience, enhancing customer experiences.

- Data science equips organizations with the tools and techniques to identify potential risks, anticipate market fluctuations, and devise proactive strategies to mitigate adverse impacts.

Medium

Adaptive Learning Rate Scheduling: Optimizing Training in Deep Networks

- Adaptive learning rate scheduling techniques have gained prominence for optimizing training in deep networks.

- Learning rate controls parameter updates in the optimization algorithm.

- Adaptive scheduling methods dynamically adjust the learning rate based on feedback during training.

- Popular techniques include learning rate schedulers, adaptive optimization algorithms, cyclical learning rate policies, and learning rate range tests.
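
Three of the schedule families named above can be written as small functions of the training step. This is a sketch under assumed defaults: the function names and parameters such as `drop`, `every`, and `half_cycle` are illustrative and do not come from the article or from any particular library.

```python
import math

def step_decay(base_lr: float, epoch: int, drop: float = 0.5, every: int = 10) -> float:
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return base_lr * (drop ** (epoch // every))

def cosine_annealing(base_lr: float, step: int, total_steps: int, min_lr: float = 0.0) -> float:
    """Decay smoothly from base_lr to min_lr along half a cosine wave."""
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

def triangular_cyclical(min_lr: float, max_lr: float, step: int, half_cycle: int) -> float:
    """Rise linearly from min_lr to max_lr over half_cycle steps, then fall back."""
    cycle_pos = step % (2 * half_cycle)
    distance = abs(cycle_pos - half_cycle) / half_cycle  # 1 at cycle ends, 0 at the peak
    return min_lr + (max_lr - min_lr) * (1.0 - distance)

# Example: learning rate for each of the first 30 epochs under step decay.
schedule = [step_decay(0.1, epoch) for epoch in range(30)]
```

These are fixed schedules; adaptive optimizers such as Adam instead adjust per-parameter step sizes from gradient statistics, which is a separate mechanism from the functions shown here.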

Semiengineering

Optimizing Event-Based Neural Network Processing For A Neuromorphic Architecture

- A new technical paper titled “Optimizing event-based neural networks on digital neuromorphic architecture: a comprehensive design space exploration” was published.

- The paper discusses the challenges in reducing the overhead of event-driven processing and increasing the mapping efficiency of near/in-memory computing in neuromorphic processors.

- The authors propose spike-grouping to reduce energy and latency in event-driven processing, and event-driven depth-first convolution to improve area efficiency and latency in convolutional neural networks (CNNs).

- The proposed optimizations result in significant improvements in energy efficiency, latency, and area efficiency compared to state-of-the-art neuromorphic processors.

Medium

Complex dynamics of anisotropy and intrinsic dimensions have been discovered in transformers

- Anisotropy and intrinsic dimensions have been discovered in transformers.

- Anisotropy measures the stretch and heterogeneity of the embedding space.

- Local intrinsic dimension characterizes the shape and complexity of a neighborhood.

- Experiments showed patterns in anisotropy and intrinsic dimension during training.

Medium

Recurrent Neural Network Alternatives: Deep Learning (Week 09)

- Gated Recurrent Units (GRU) and Long Short-Term Memory (LSTM) networks are gated alternatives to the plain Recurrent Neural Network (RNN).

- GRU has a more compact architecture for processing sequential data and has fewer parameters to update than LSTM.

- LSTM is trained with Backpropagation Through Time (BPTT) to update its weights and improve performance.

- Bi-Directional LSTM achieved a test accuracy of 87.48%.
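
The point about GRU needing fewer parameters can be checked with a simple count. The sketch below assumes the textbook formulation with one weight block and one bias vector per gate (four blocks for LSTM, three for GRU); specific libraries may count biases differently, and the function names are illustrative.

```python
def gated_rnn_params(input_size: int, hidden_size: int, num_gates: int) -> int:
    """Parameters in one recurrent layer with `num_gates` gate-like blocks."""
    # Each block maps the concatenated [input, hidden] vector to hidden_size units.
    per_gate = hidden_size * (input_size + hidden_size) + hidden_size
    return num_gates * per_gate

def lstm_params(input_size: int, hidden_size: int) -> int:
    # Input, forget, and output gates plus the candidate cell state.
    return gated_rnn_params(input_size, hidden_size, num_gates=4)

def gru_params(input_size: int, hidden_size: int) -> int:
    # Update and reset gates plus the candidate hidden state.
    return gated_rnn_params(input_size, hidden_size, num_gates=3)

lstm_count = lstm_params(input_size=128, hidden_size=256)
gru_count = gru_params(input_size=128, hidden_size=256)
```

Under these assumptions a GRU layer always has three quarters of the parameters of an LSTM layer of the same size.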

Medium

Architex — Community Owned Liquidity Program

- Architex is launching a community-owned liquidity program to enhance decentralized liquidity.

- Users can stake LP tokens in mining pools to earn rewards in real-yield and ARCX tokens.

- The program follows game theory concepts to create a positive liquidity loop.

- Architex will offer up to 100% APY in LP Mining Pools for 60 days, starting on April 1st, 2024.

Medium

451

Image Credit: Medium

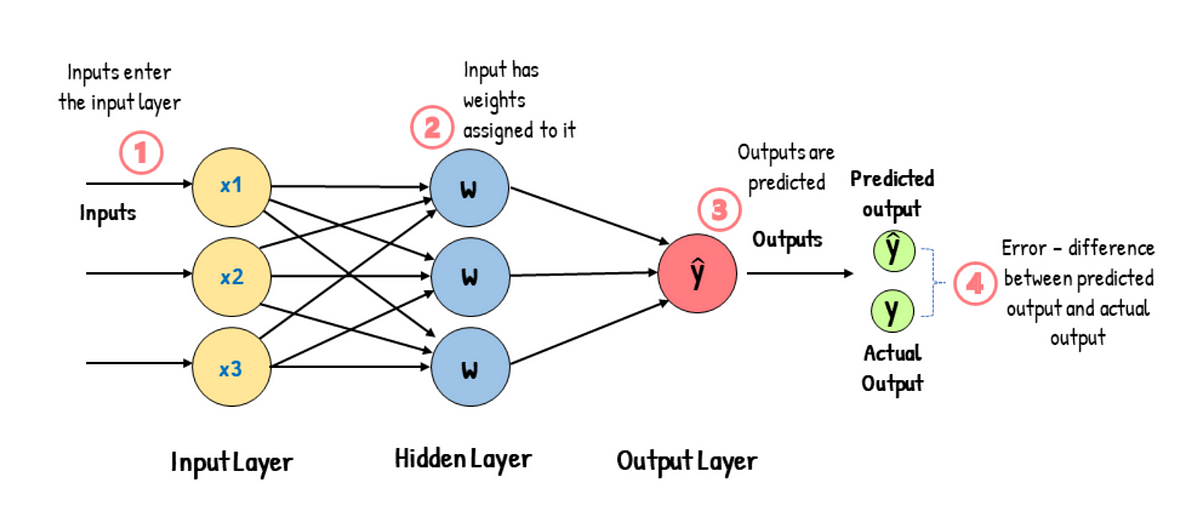

Building Blocks for AI Part 3: Neural Networks

- Artificial Neural Networks (ANNs) mimic the structure and function of the human brain.

- Neurons in ANNs receive inputs, calculate weighted sums, add bias, apply activation functions, and produce outputs.

- Neurons in ANNs are organized into layers, and learning is achieved through backpropagation and gradient descent.

- Neural networks consist of an input layer, one or more hidden layers, and an output layer, and they learn by adjusting weights to minimize prediction errors.
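
The neuron computation described above (weighted sum, bias, activation) fits in a few lines. This is a minimal sketch that assumes a sigmoid activation; the function names and the example weights are illustrative, not taken from the article.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias: float) -> float:
    """Weighted sum of the inputs, plus bias, passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def dense_layer(inputs, weight_rows, biases):
    """A layer is a list of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Two inputs feeding a hidden layer of three neurons, then one output neuron.
hidden = dense_layer([0.5, -1.0],
                     [[0.1, 0.4], [-0.3, 0.2], [0.7, -0.6]],
                     [0.0, 0.1, -0.1])
output = neuron(hidden, [0.5, -0.5, 0.25], 0.0)
```

Learning then consists of nudging the weights and biases so that `output` moves closer to the desired target.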

Medium

TensorFlow Comprehensive Training

- TensorFlow is an open-source machine learning framework developed by the Google Brain team.

- It provides a comprehensive ecosystem of tools, libraries, and resources for building and deploying machine learning models efficiently.

- TensorFlow offers both high-level APIs like Keras for rapid development and low-level APIs like TensorFlow Core for advanced customization.

- It is suitable for both beginners and experienced practitioners in the field of machine learning.

Medium

WindSmart: Offshore Wind Turbine Maintenance with Neural Networks and Gaussian Mixture Models

- WindSmart is a machine learning solution that aims to improve offshore wind turbine maintenance.

- The global installed offshore wind capacity is projected to increase 15-fold by 2050.

- WindSmart focuses on reducing maintenance costs for offshore wind turbines through early anomaly detection.

- The solution utilizes neural networks and Gaussian mixture models to predict failures and improve precision.

- Future work includes refining data modeling and exploring supervised machine learning techniques.

Medium

Future-Proof Resilience: A Connected Landscape

- The risk landscape for smart devices is rapidly growing, with over 40 million devices being attacked, highlighting the need for effective security measures.

- Deep tech research is focused on addressing these threats and preventing future attacks.

- Foundation models are crucial for mitigating threats, enabling anomaly detection, threat prediction, and task automation.

- There is a need for innovative solutions that can handle security in constrained environments, such as IoT and onboard electronic systems.

- Users should advocate for the adoption of foundation models and support ongoing research and development in this field.

Medium

Generative AI: A Brushstroke of Creation in the Canvas of Tomorrow

- The roots of generative AI stretch back to the 1960s with the dawn of neural networks.

- Generative Adversarial Networks (GANs) revolutionized generative AI in the 2010s.

- Generative AI has diverse applications in various fields.

- Ethical considerations and responsible development are crucial for the future of generative AI.

Towards Data Science

Implementing Simple Neural Network Backpropagation from Scratch

- The XOR gate problem involves learning a simple pattern of relationships between inputs and outputs that a neural network can capture.

- A 2-layer fully connected neural network is used as an example to solve the XOR gate problem.

- The network structure consists of an input layer with 2 nodes, a hidden layer with 4 nodes, and an output layer with 1 node.

- The training data is represented by a matrix of size (2,4): 4 examples, each with 2 inputs.
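
A 2-4-1 network of the kind described can be trained on XOR with hand-written backpropagation. The sketch below is not the article's code: it uses plain Python lists rather than a (2,4) matrix, and it assumes sigmoid activations, a cross-entropy loss, per-example updates, and an arbitrary learning rate and seed.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# XOR truth table: 4 examples with 2 inputs each.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
Y = [0, 1, 1, 0]

def init_params(hidden=4, seed=0):
    """Random weights for a 2-input, `hidden`-unit, 1-output network."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(hidden)],
        "b2": 0.0,
    }

def forward(p, x):
    """Return the hidden activations and the output probability for one example."""
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(p["W1"], p["b1"])]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y_hat

def mean_loss(p):
    """Average binary cross-entropy over the four XOR examples."""
    eps = 1e-12
    total = 0.0
    for x, y in zip(X, Y):
        _, y_hat = forward(p, x)
        total -= y * math.log(y_hat + eps) + (1 - y) * math.log(1 - y_hat + eps)
    return total / len(X)

def train(p, lr=0.5, epochs=5000):
    """Per-example gradient descent using backpropagated errors."""
    for _ in range(epochs):
        for x, y in zip(X, Y):
            h, y_hat = forward(p, x)
            # Output error: gradient of cross-entropy w.r.t. the pre-activation.
            d_out = y_hat - y
            # Hidden errors: push d_out back through W2 and the sigmoid derivative.
            d_hid = [d_out * p["W2"][j] * h[j] * (1 - h[j]) for j in range(len(h))]
            for j in range(len(h)):
                p["W2"][j] -= lr * d_out * h[j]
                p["W1"][j][0] -= lr * d_hid[j] * x[0]
                p["W1"][j][1] -= lr * d_hid[j] * x[1]
                p["b1"][j] -= lr * d_hid[j]
            p["b2"] -= lr * d_out
    return p
```

Calling `train(init_params())` and then `forward(params, x)` for each row of `X` shows how close the outputs get to the XOR targets; how well it converges depends on the seed and learning rate.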

Medium

How does LLM work on AI platforms

- LLMs, such as GPT, go through a pre-training process where they learn to anticipate the next word in a sequence based on context.

- The Transformer architecture, which combines self-attention mechanisms with feedforward neural networks, forms the foundation of LLMs.

- LLMs can be fine-tuned on specific tasks by providing labeled data to improve their performance.

- Once trained, LLMs can generate text by sampling from the vocabulary distribution conditioned on the input text.
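
The final generation step, sampling from a distribution over the vocabulary, can be sketched as a softmax followed by a weighted random draw. The tiny vocabulary and the logits below are made up for illustration, and the `temperature` parameter is a common addition that the summary does not mention.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Draw one token according to the softmax probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores a model might assign to candidate next words.
vocab = ["cat", "dog", "car"]
logits = [2.0, 1.0, 0.0]
token = sample_next_token(vocab, logits, temperature=0.8, rng=random.Random(0))
```

Generating a full response repeats this step: the sampled token is appended to the input and the model produces fresh logits for the next position.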
